Analysis of Variance

ANOVA

Regression analysis

Regression analysis attempts to explain data (the dependent variable scores) in terms of a set of independent variables or predictors (the model) and a residual component (error). Typically, a researcher who applies regression is interested in predicting a quantitative dependent variable from one or more quantitative independent variables, and in determining the relative contribution of each independent variable to the prediction: there is interest in what proportion of the variation in the dependent variable can be attributed to variation in the independent variable(s).

Regression also may employ categorical (also known as nominal or qualitative) predictors: the use of independent variables such as sex, marital status and type of teaching method is common.

Moreover, as regression is the elementary form of GLM, it is possible to construct regression GLMs equivalent to any ANOVA and ANCOVA GLMs by selecting and organizing quantitative variables to act as categorical variables. Nevertheless, the convention of referring to these particular quantitative variables as categorical variables will be maintained.

Analysis of variance

ANOVA also can be thought of in terms of a model plus error. Here, the dependent variable scores constitute the data, the experimental conditions constitute the model and the component of the data not accommodated by the model, again, is represented by the error term. Typically, the researcher applying ANOVA is interested in whether the mean dependent variable scores obtained in the experimental conditions differ significantly. This is achieved by determining how much variation in the dependent variable scores is attributable to differences between the scores obtained in the experimental conditions, and comparing this with the error term, which is attributable to variation in the dependent variable scores within each of the experimental conditions: there is interest in what proportion of variation in the dependent variable can be attributed to the manipulation of the experimental variable(s).

Although the dependent variable in ANOVA is most likely to be measured on a quantitative scale, the statistical comparison is drawn between the groups of subjects receiving different experimental conditions and is categorical in nature, even when the experimental conditions differ along a quantitative scale. Therefore, ANOVA is a particular type of regression analysis that employs quantitative predictors to act as categorical predictors.

Analysis of covariance

As ANCOVA is the statistical technique that combines regression and ANOVA, it too can be described in terms of a model plus error. As in regression and ANOVA, the dependent variable scores constitute the data, but the model includes not only experimental conditions, but also one or more quantitative predictor variables. These quantitative predictors, known as covariates (also concomitant or control variables), represent sources of variance that are thought to influence the dependent variable, but have not been controlled by the experimental procedures. ANCOVA determines the covariation (correlation) between the covariate(s) and the dependent variable and then removes that variance associated with the covariate(s) from the dependent variable scores, prior to determining whether the differences between the experimental condition (dependent variable score) means are significant.

As mentioned, this technique, in which the influence of the experimental conditions remains the major concern, but one or more quantitative variables that predict the dependent variable also are included in the GLM, is labelled ANCOVA most frequently, and in psychology is labelled ANCOVA exclusively (e.g. Cohen & Cohen, 1983; Pedhazur, 1997, cf. Cox & McCullagh, 1982). A very important, but seldom emphasized, aspect of the ANCOVA method is that the relationship between the covariate(s) and the dependent variable, upon which the adjustments depend, is determined empirically from the data.

GLM approach

1. Conceptually, a major advantage is the continuity the GLM reveals between regression, ANOVA and ANCOVA. Rather than having to learn about three apparently discrete techniques, it is possible to develop an understanding of a consistent modelling approach that can be applied to the different circumstances covered by regression, ANOVA and ANCOVA. A number of practical advantages also stem from the utility of the simply conceived and easily calculated error terms. The GLM conception divides data into model and error, and it follows that the better the model explains the data, the less the error. Therefore, the set of predictors constituting a GLM can be selected by their ability to reduce the error term. Comparing a GLM of the data that contains the predictor(s) under consideration with a GLM that does not, in terms of error reduction, provides a way of estimating effects that is both intuitively appreciable and consistent across regression, ANOVA and ANCOVA applications.

2. Moreover, as most GLM assumptions concern the error terms, residuals, the error term estimates, provide a common means by which the assumptions underlying regression, ANOVA and ANCOVA can be assessed. This also opens the door for sophisticated statistical techniques, developed primarily to assist regression error analysis, to be applied to both ANOVA and ANCOVA.

3. Finally, recognizing ANOVA and ANCOVA as instances of the GLM also provides connection to an extensive and useful literature on methods, analysis strategies and related techniques, such as structural equation modelling, which are pertinent to experimental and non-experimental analyses alikeANCOVA

ANCOVA (Analysis of Covariance) Overview

ANCOVA is a blend of ANOVA and regression, allowing researchers to adjust for the effects of one or more continuous covariates that might influence the dependent variable. This method is useful for enhancing the precision of the analysis by controlling for variables that are not the main focus of the study but could affect the outcome.

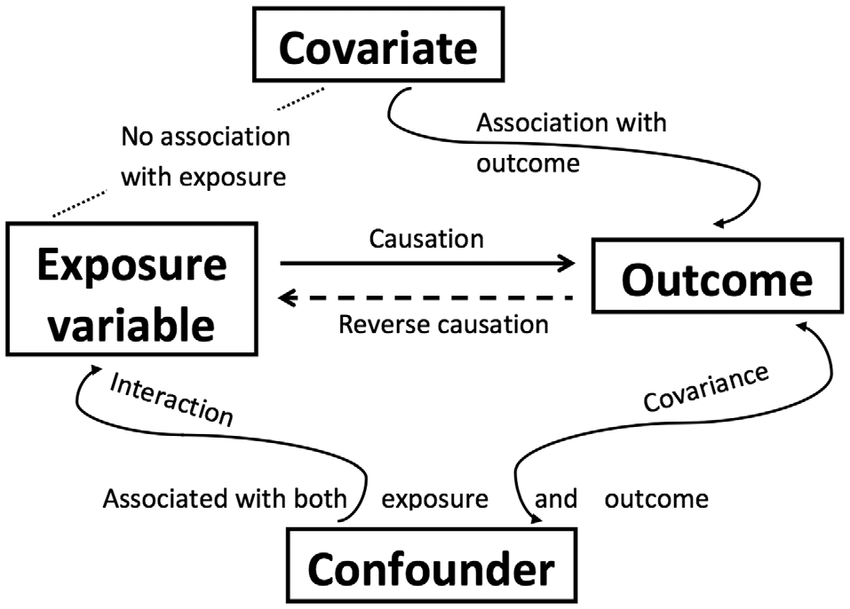

Covariates: These are variables that are expected to influence the dependent variable. They are included in the ANCOVA model to control for their effects, thus isolating the effect of the independent variable(s) more effectively. Covariates are continuous predictor variables, unlike the categorical factors in ANOVA.

Confounding Variables: Unlike covariates, which are related only to the dependent variable, confounding variables affect both the independent and dependent variables. They introduce spurious associations, making it hard to discern the true effect of the independent variable.

Reducing Within-Group Error Variance

By incorporating covariates into the analysis, ANCOVA can minimize the error variance within groups. This is crucial for:

Maximizing the between-groups variance, which helps in detecting true effects of the treatments or conditions being studied.

Enhancing the statistical power of the test, leading to a larger F-statistic and more robust conclusions.

Importance of Independence: The covariate should not be affected by the treatment or condition. If there is a significant relationship between the covariate and the treatment, then the covariate may actually be a confounder rather than just a covariate. This complicates the analysis because it suggests that adjustments for this variable might need more sophisticated methods like mediation analysis, rather than just control through ANCOVA.

Testing Independence: This can be assessed by using a one-way ANOVA to check if the covariate significantly varies across the treatment groups. If it does, this violates the assumption of independence, indicating that the covariate might interact with the independent variable(s) in influencing the dependent variable.

ANCOVA Model

The general linear model (GLM) for ANCOVA is expressed as: \[ Y_{ij} = \mu + \alpha_j + \beta Z_{ij} + \epsilon_{ij} \]

- \(\mu\) is the overall mean.

- \(\alpha_j\) is the effect of the jth treatment.

- \(\beta\) is the regression coefficient for the covariate.

- \(Z_{ij}\) is the score of the covariate for the ith subject in the jth group.

- \(\epsilon_{ij}\) represents the random error.

This model combines the categorical treatment effects (as in ANOVA) with the continuous influence of the covariates (as in regression). The regression coefficient \(\beta\) shows how changes in the covariate are associated with changes in the dependent variable, independent of the treatment effect.

One-way ANOVA

Introduction

These are models for data from experiments where several groups are compared, and where the sample sizes are equal for all groups. Independence of data values is a crucial assumption for these models. If they are not independent, then you might be able to use one of the alternatives. Other assumptions strictly needed for these models are homogeneity of error variance and normality of the observations within each group. But these are not as important as the independence assumption (unless severely violated).

The one-way analysis of variance (ANOVA) is used to test the difference in our dependent variable between three or more different groups of observations. Our grouping variable is our independent variable. In other words, we use the one-way ANOVA when we have a research question with a continuous dependent variable and a categorical independent variable with three or more categories in which different participants are in each category.

The one-way ANOVA is also known as an independent factor ANOVA (note that it is NOT the same as a factorial ANOVA, which means there are two or more IVs!).

The mathematical formula for one-way ANOVA can be expressed as: Total Sum of Squares (SST) = Sum of Squares Between Groups (SSB) + Sum of Squares Within Groups (SSW)

Model Representation:

\(Y_{ij} = \mu + \alpha_j + \epsilon_{ij}\)

- \(Y_{ij}\): The response variable.

- \(\mu\): The overall mean of the response.

- \(\alpha_j\): The effect of the \(j\)th group.

- \(\epsilon_{ij}\): The random error component.

Components of Variance: - Total Sum of Squares (SST): Represents the total variation in the data. - Sum of Squares Between Groups (SSB): Represents the variation between the means of the groups. - Sum of Squares Within Groups (SSW): Represents the variation within each group.

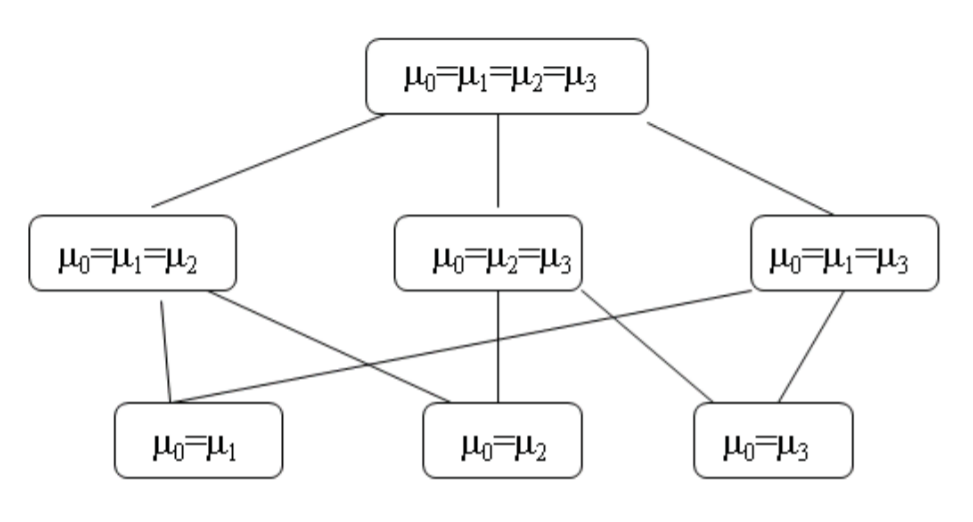

Purpose and Hypotheses of One-way ANOVA: - Omnibus Test: One-way ANOVA is an omnibus statistic used to test if there is any difference among the group means. - Null Hypothesis (H0): There is no difference in means between the groups; all group means are the same. - Alternative Hypothesis (H1): There is a difference in means between the groups; at least one group has a significantly different mean compared to the other groups.

Additional Notes: - One-way ANOVA does not specify where the differences lie between the means. For identifying specific differences, one needs to perform planned contrasts or post-hoc procedures, which are discussed in subsequent chapters.

This summary captures the essence of the concepts related to one-way ANOVA as presented in the image. If you need further explanation on any of these points or additional statistical concepts, feel free to ask!

Modeling Assumptions and Basic Analysis

The model for the data throughout this chapter is assumed to be \[ y_{i j}=\mu_{i}+\varepsilon_{i j} \] where the \(y_{i j}\) are the observed data, with \(i=1, \ldots, g\) indicating group and \(j=1, \ldots, n\) indicating measurement observed within a group. This is a full-rank parameterization, unlike the default PROC GLM parameterization, which is not of full rank since it includes the “intercept” term \(\gamma\).

The \(\mu_{i}\) are assumed to be fixed, unknown population mean values, and the errors \(\varepsilon_{i j}\) are assumed to be random variables that

- have mean zero,

- have constant variance \(\sigma^{2}\),

- are independent, and

- are normally distributed.

Constant Variance

The assumption of constant variance is also called homoscedasticity, and its violation is called heteroscedasticity. As it turns out, at least in the balanced one-way model, heteroscedasticity is not necessarily much of a problem, and inferences can still be approximately valid with mild violations of this assumption.

Levene’s Test for Homogeneity

There are also formal statistical tests for homoscedasticity, available with the HOVTEST option in the GLM MEANS statement; you can use this in conjunction with the informal descriptive and graphical assessments.

proc glm data=Wloss;

class Diet;

model Wloss=Diet;

means Diet / hovtest;

run; Independence

The assumption that the measurements are independent is crucial. In the extreme, its violation can lead to estimates and inferences that are effectively based on much less information than it might appear that you have, based on the sample size of your data set. Common ways for this assumption to be violated include

- there are repeated measurements on the subjects (measurements on the same subject are usually correlated),

- subjects are “paired” in some fashion, such as the husband/wife

- the data involve time series or spatial autocorrelation.

As with heteroscedasticity, autocorrelation can be diagnosed with informal graphical and formal inferential measures, but the other two violations (which are probably more common in ANOVA) require knowledge of the design for the data—how it was collected. You can check for the various types of dependence structure using hypothesis tests, but, again, testing methods should not be used exclusively to diagnose seriousness of the problem.

Normality

It is usually not critical that the distribution of the response be precisely normal: the Central Limit Theorem states that estimated group means are approximately normally distributed even if the observations have non-normal distributions. This happy fact provides approximate largesample justification for the methods described in this chapter, as long as the other assumptions are valid. However, if the sample sizes are small and the distributions are not even close to normal, then the Central Limit Theorem may not apply.

Testing the Normality Assumption in ANOVA

proc glm data=Wloss;

class Diet;

model Wloss=Diet;

output out=wlossResid r=wlossResid;

run;

proc univariate data=wlossResid normal;

var wlossResid;

ods select TestsForNormality;

run; Check Assumptions for One-Way ANOVA

Assumptions for One-Way ANOVA: 1. Normal Distribution of the Dependent Variable: - Methods to check: - Shapiro-Wilk test - Q-Q plot - Analysis of skewness and kurtosis - Visual inspection of data distribution

- Homogeneity of Variances:

- Tested using Levene’s test.

- Dependent Variable is Interval or Ratio:

- This indicates that the dependent variable should be continuous.

- Independence of Scores:

- Scores must be independent between groups.

Assumptions Cannot be Directly Tested: - Assumptions 3 and 4 are based on knowledge of the data rather than empirical testing.

Response to Assumption Violations

- Normality Satisfied and Homogeneity of Variance

Satisfied:

- Use one-way ANOVA (standard ANOVA function).

- Normality Satisfied but Homogeneity of Variance Not

Satisfied:

- Use Welch’s F-test (modified ANOVA function that does not assume equal variances).

- Normality Not Satisfied (Regardless of Homogeneity of

Variance):

- Use Kruskal-Wallis test, which is a non-parametric alternative to the one-way ANOVA.

Robustness of ANOVA

- One-way ANOVA is somewhat robust to violations of normality and

homogeneity of variance, but this robustness applies primarily when:

- Group sizes and variances are equal or nearly equal.

- Variances should not differ drastically (e.g., no more than a 2:1 ratio).

- Group sizes should not be extremely unbalanced (e.g., no fewer than 10 cases in the smallest group).

Implications of Violation:

- If the group with the larger variance also has more cases, it can lead to an F-statistic that is non-significant or smaller than it should be.

- Conversely, if the group with the larger variance has fewer cases, the F-statistic can be misleadingly high (significant or larger than it should be).

Parameter Estimates

Means and SD

The estimated population means are the individual sample means for each group, \[ \hat{\mu}_{i}=\bar{y}_{i}=\frac{\sum_{j=1}^{n} y_{i j}}{n}, \] and the estimated common variance of the errors is the pooled mean squared error (MSE), \[ \hat{\sigma}^{2}=\mathrm{MSE}=\frac{\sum_{i=1}^{g} \sum_{j=1}^{n}\left(y_{i j}-\bar{y}_{i}\right)^{2}}{g(n-1)} \] These formulas are special cases of the general formulas \(\hat{\boldsymbol{\beta}}=\left(\mathbf{X}^{\prime} \mathbf{X}\right)^{-1} \mathbf{X}^{\prime} \mathbf{Y}\) and \(\hat{\sigma}^{2}=(\mathbf{Y}-\mathbf{X} \hat{\boldsymbol{\beta}})^{\prime}(\mathbf{Y}-\mathbf{X} \hat{\boldsymbol{\beta}}) / d f\); here the \(\mathbf{X}\) matrix is full rank.

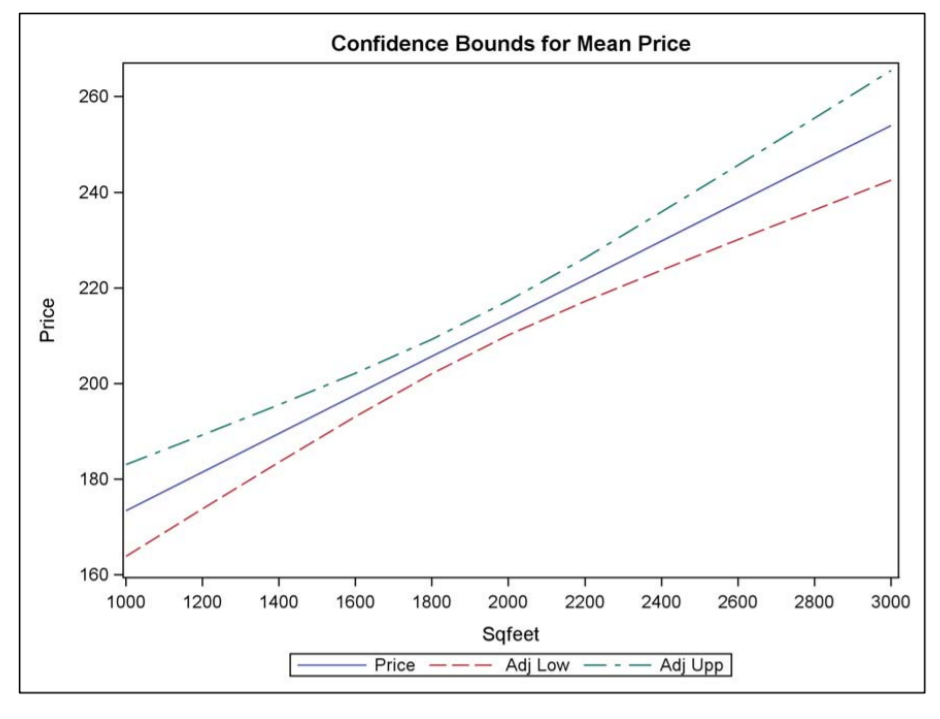

Simultaneous Confidence Intervals

The general form of the simultaneous confidence interval \[ \mathbf{c}^{\prime} \hat{\boldsymbol{\beta}} \pm c_{\alpha} s . e .\left(\mathbf{c}^{\prime} \hat{\boldsymbol{\beta}}\right) \] produces intervals for the difference of means \(\mu_{i}-\mu_{i^{\prime}}\) having the form \[ \bar{y}_{i}-\bar{y}_{i^{\prime}} \pm c_{\alpha} \hat{\sigma} \sqrt{2 / n} \] where \(c_{\alpha}\) is a critical value that is selected to make the \(\mathrm{FWE}=\alpha .\) The term \(\hat{\sigma} \sqrt{2 / n}\) is the square root of the estimated variance of the difference, also called the standard error of the estimated difference.

In the case of non-multiplicity-adjusted confidence intervals, you set \(c_{\alpha}\) equal to the \(1-\alpha / 2\) quantile of the \(t\) distribution, \(t_{1-\alpha / 2, g(n-1)} .\) Each confidence interval thus constructed will contain the true difference \(\mu_{i}-\mu_{i^{\prime}}\) with confidence \(100(1-\alpha) \%\).

However, when you look at many intervals (say, \(k\) of them) then all \(k\) intervals will contain their respective true differences simultaneously with much lower confidence. The Bonferroni inequality gives a pessimistic estimate of the simultaneous confidence of these \(k\) non multiplicity-adjusted intervals as \(100 \times(1-k \alpha) \%\). This implies that you can construct Bonferroni-adjusted confidence intervals by setting \(c_{\alpha}=t_{1-\alpha^{\prime} / 2, g(n-1)}\), where \(\alpha^{\prime}=\alpha / k\). However, the Bonferroni method is conservative: the value \(c_{\alpha}=t_{1-\alpha^{\prime} / 2, g(n-1)}\) is larger than it needs to be, in the sense that the actual simultaneous confidence level will be somewhat larger than the nominal level \(100(1-\alpha) \%\).

Relationship Between F-test and T-test

Relationship Between F-test and T-test

ANOVA (Analysis of Variance) simultaneously examines for differences between any number of conditions while maintaining the Type I error rate at a specified significance level, typically 0.05. ANOVA can be thought of as an extension of the t-test for more than two conditions, maintaining Type I error constancy. This is evident when ANOVA is applied to just two conditions, in which case it effectively becomes identical to performing a t-test. The F and t statistics derived in such cases will directly correspond, resulting in identical p-values.

Transformation Between F and T Statistics: If you are working with two groups and conduct an F-test, you can directly convert the F-value to a t-statistic (and vice versa) using the following formulas: \[ F = t^2 \] \[ t = \sqrt{F} \] This conversion is useful if you initially conduct one test and then realize you need the other for comparison or consistency in reporting.

One-Tailed T-Test vs. ANOVA: Despite the direct relationship between t and F values, confusion may arise, especially under specific testing conditions like directional hypotheses. For instance, a one-tailed t-test assessing a directional hypothesis may yield significant results (e.g., \(t(20) = 1.725, p = 0.05\)), but an ANOVA performed on the same data could yield an F-value (e.g., \(F(1,20) = 2.976, p = 0.100\)) that is not significant at a 0.05 level. This discrepancy arises because the F-test inherently addresses a two-tailed question even when used in a one-tailed format, as it does not inherently support directional hypotheses due to its reliance on squared differences (removing directionality).

Implications of F-test Characteristics: The F-test is fundamentally a one-tailed test, but it does not inherently support testing directional hypotheses. It’s designed to compare variances and, as such, examines whether one variance is significantly greater than another without implying a direction (positive or negative difference). This is critical to understand when choosing between a one-tailed or two-tailed F-test:

- One-Tailed F-Test: Appropriate if your research hypothesis predicts a specific directional effect (positive or negative).

- Two-Tailed F-Test: More suitable for non-directional hypotheses or when the direction of the effect is not specified.

Understanding Test Distributions: The choice between a one-tailed and a two-tailed test also reflects the underlying distribution of the test statistic. Symmetrical distributions like the t and z distributions inherently support two-tailed testing. In contrast, asymmetrical distributions like those used in F-tests and chi-square tests inherently do not have a “one-tailed vs. two-tailed” option as they are based on distributions with only one tail. This structural aspect of the test statistic dictates how hypothesis testing is approached, influencing the interpretation and application of test results.

Statistical Power: A one-tailed test provides greater statistical power than a two-tailed test at the same alpha level, assuming the direction of the effect is correctly specified. This is because a one-tailed test focuses the statistical analysis on one direction, increasing the ability to detect an effect if one exists in that specified direction.

R Implementation

df.residualreturns the degrees of freedom of the residualcoefreturns the estimated coefficients (and sometimes their standard deviations)residualsreturns residualsdeviancereturns the variancefittedreturns the fitted valuelogLikcalculates the log-likelihood and returns the number of argumentsAICcomputes the Akaike information criterion (AIC) (depending on logLik())

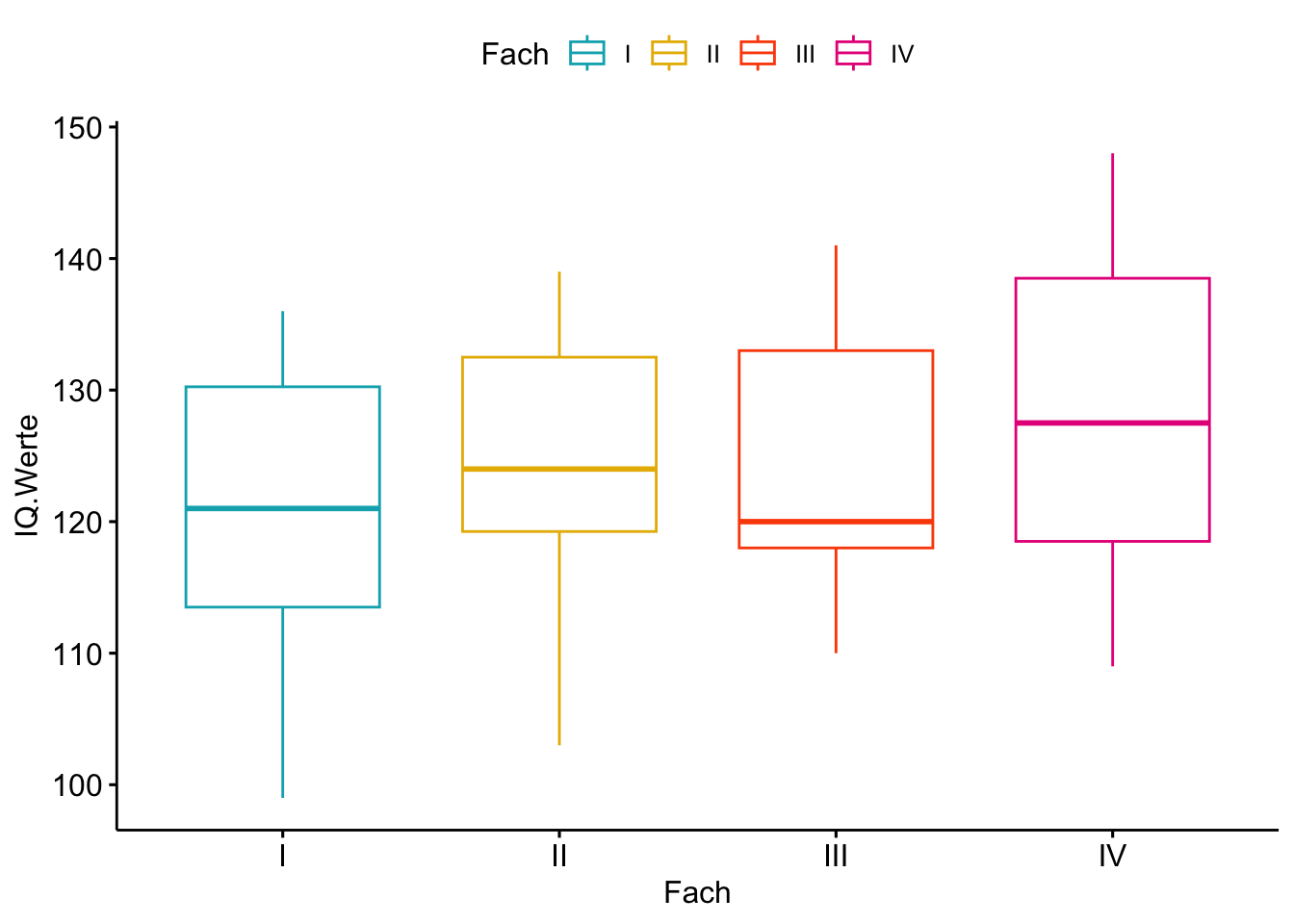

## Date exploration

Intelligenz <- data.frame(IQ.Werte=c(99, 131, 118, 112, 128, 136, 120, 107,

134, 122,134, 103, 127, 121, 139, 114,

121, 132,120, 133, 110, 141, 118, 124,

111, 138, 120,117, 125, 140, 109, 128,

137, 110, 138, 127, 141, 119, 148),

Fach=c(rep(1,10),rep(2,8),rep(3,9),rep(4,12)))

Intelligenz$Fach <- factor(Intelligenz$Fach,labels=c("I", "II", "III","IV"))

## Compute summary statistics

group_by(Intelligenz, Fach) %>%

dplyr::summarise(

count = n(),

mean = mean(IQ.Werte, na.rm = TRUE),

sd = sd(IQ.Werte, na.rm = TRUE)

)## Visualize your data with ggpubr

# library("ggpubr")

ggboxplot(Intelligenz, x = "Fach", y = "IQ.Werte",

color = "Fach",

palette = c("#00AFBB", "#E7B800", "#FC4E07","#E7298A"),

order = c("I","II","III","IV"),

ylab = "IQ.Werte", xlab = "Fach")

## Model Fitting

Intelligenz.aov <- aov(IQ.Werte~Fach, data=Intelligenz)

summary(Intelligenz.aov)## Df Sum Sq Mean Sq F value Pr(>F)

## Fach 3 319 106.4 0.736 0.538

## Residuals 35 5060 144.6SAS Implementation

Comparing Weight Loss for Five Different Regimens Ott (1988) reports an experiment undertaken to evaluate the effectiveness of five weightreducing agents. There are 10 male subjects in each group who have been randomly assigned to one of the regimens A, B, C, D, or E. This is a classic example of the balanced one-way ANOVA setup. After a fixed length of time, the weight loss of each of the 50 subjects is measured. The goal of the study is to rank the treatments, to the extent possible, using the observed weight loss data for the 50 subjects. These box plots are convenient for depicting and comparing the distributions of the data in each treatment group.

data Wloss;

do Diet = 'A','B','C','D','E';

do i = 1 to 10;

input Wloss @@;

output;

end;

end;

datalines;

12.4 10.7 11.9 11.0 12.4 12.3 13.0 12.5 11.2 13.1

9.1 11.5 11.3 9.7 13.2 10.7 10.6 11.3 11.1 11.7

8.5 11.6 10.2 10.9 9.0 9.6 9.9 11.3 10.5 11.2

8.7 9.3 8.2 8.3 9.0 9.4 9.2 12.2 8.5 9.9

12.7 13.2 11.8 11.9 12.2 11.2 13.7 11.8 11.5 11.7

;

proc sgplot data=Wloss;

vbox Wloss/category=Diet;

run;Model Diagnosis

- Homogeneity Test

- Homogeneity of variance assumption using Plot

- Homogeneity of variance assumption using Levene’s test, which is less sensitive to departures from normal distribution. (insensitive to deviation from normal distribution)

- Homogeneity of variance assumption with no assumption of equal variances (relaxes the homogeneity of variance assumptions)

- Normality assumption

- Multiple Test

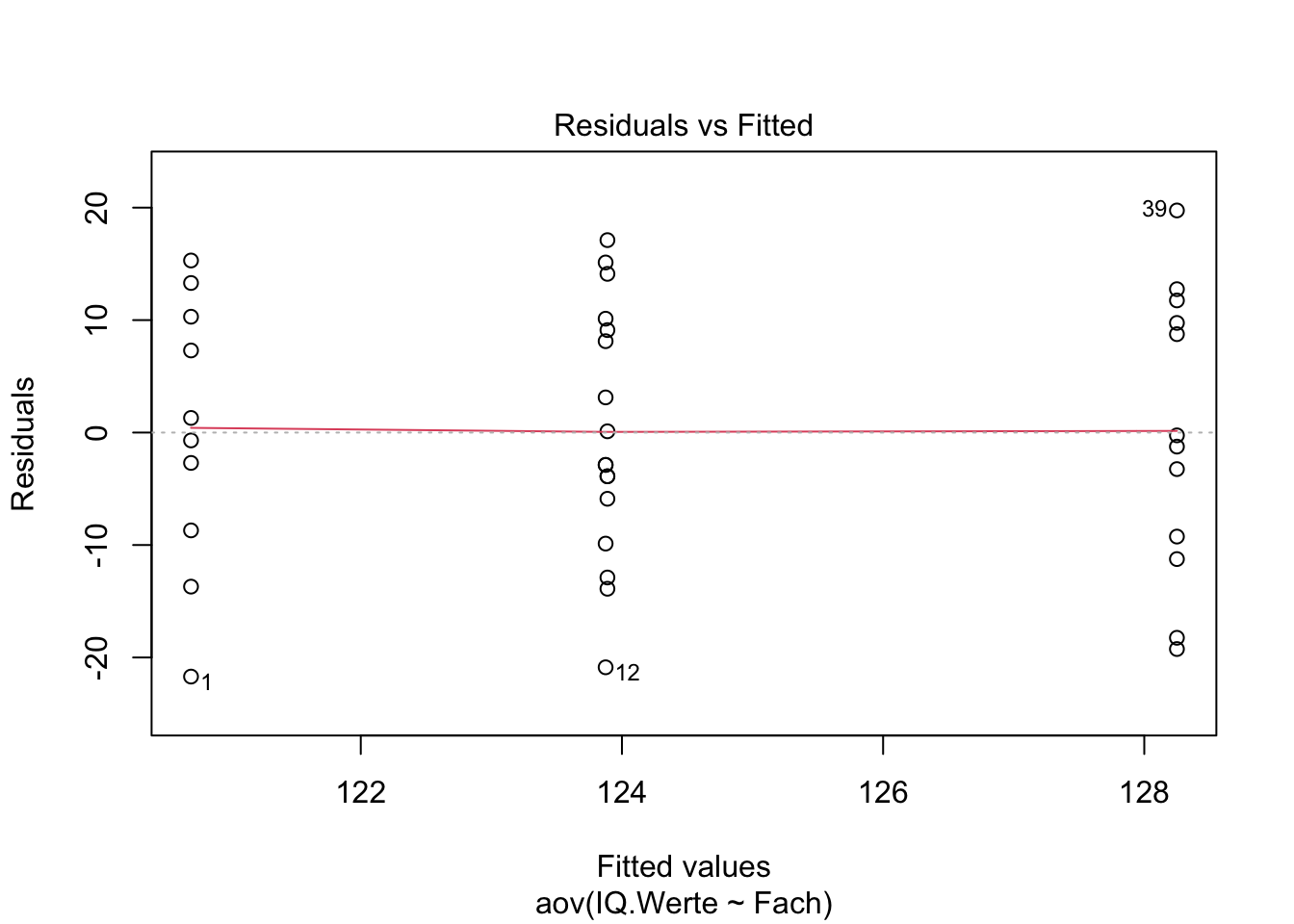

Homogeneity Test

## Check the homogeneity of variance assumption

plot(Intelligenz.aov, 1)

## Levene’s test, Homogeneity of variance assumption

# library(car)

leveneTest(IQ.Werte ~ Fach, data = Intelligenz)## With no assumption of equal variances

oneway.test(IQ.Werte~Fach, data=Intelligenz)##

## One-way analysis of means (not assuming equal variances)

##

## data: IQ.Werte and Fach

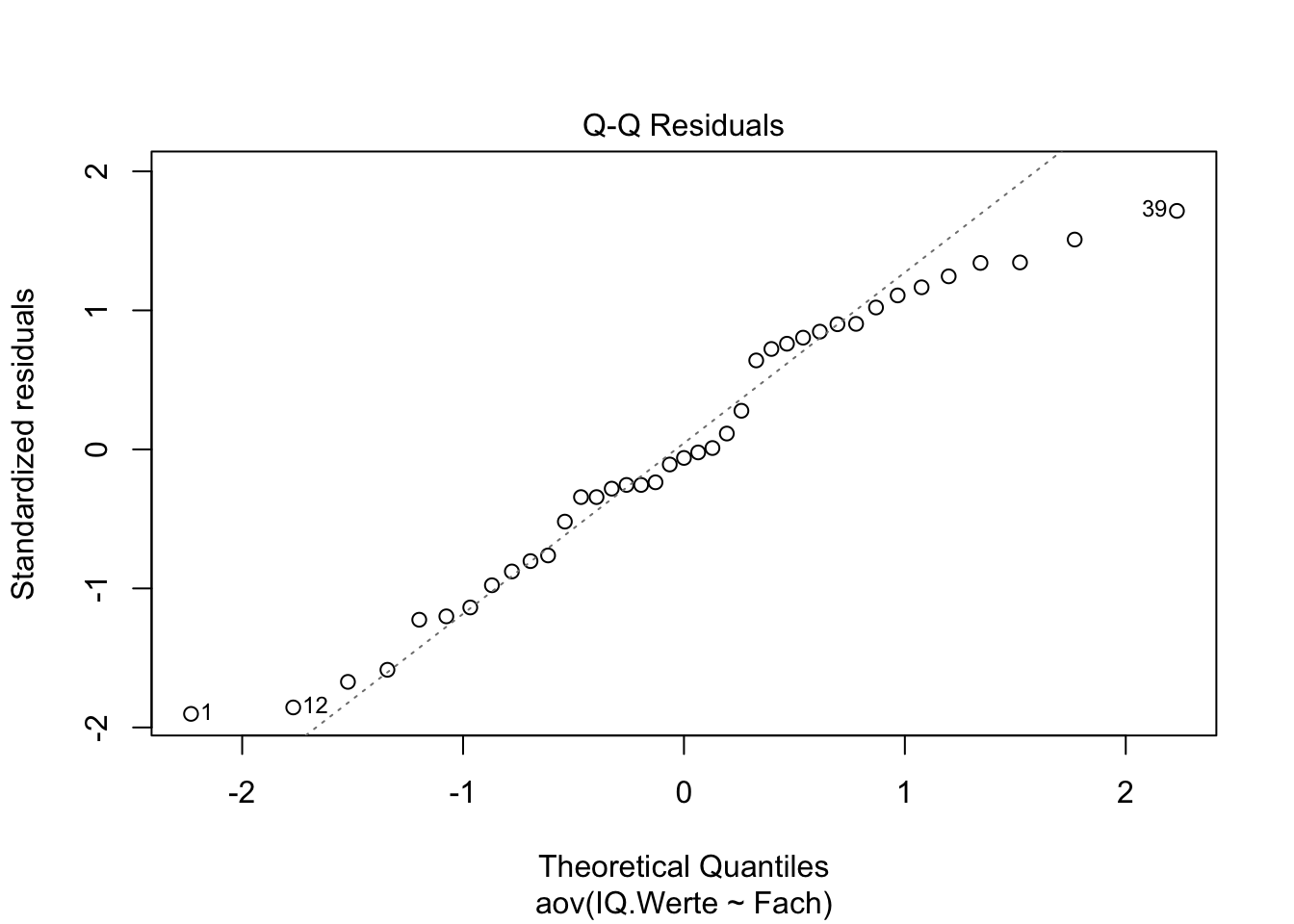

## F = 0.64123, num df = 3.000, denom df = 18.758, p-value = 0.598Normality assumption

Using QQ Plot or Shapiro–Wilk test to test residuals

## Check the normality assumption

plot(Intelligenz.aov, 2)

## Shapiro–Wilk test to test residuals

## Extract the residuals and run Shapiro-Wilk test

aov_residuals <- residuals(object = Intelligenz.aov )

shapiro.test(x = aov_residuals )##

## Shapiro-Wilk normality test

##

## data: aov_residuals

## W = 0.96021, p-value = 0.1813Violation of assumptions

Random Effects Models

\[Y_{ij} = \mu + \alpha_i + \epsilon_{ij},\] We assume \(\alpha_i \; \textrm{i.i.d.} \sim N(0, \sigma_{\alpha}^2).\) and \[E[Y_{ij}] = \mu\] \[\text{Var}(Y_{ij}) = \sigma_{\alpha}^2 + \sigma^2\] \[\text{Cor}(Y_{ij}, Y_{kl}) = \left\{ \begin{array}{cc} 0 & i \neq k \\ \sigma_{\alpha}^2 / (\sigma_{\alpha}^2 + \sigma^2) & i = k, j \neq l (\text{intraclass correlation (ICC)})\\ 1 & i = k, j = l \end{array} \right.\]

MLE is not usable for parameter estimation for the variance components \(\sigma_a^2\) and \(\sigma^2\), Restricted maximum likelihood (REML) is applied here. REML is less biased. The parameter \(\mu\) is estimated with maximum-likelihood assuming that the variances are known.

Non-Parametric Test

Non-parametric alternative to one-way ANOVA test, Kruskal-Wallis rank sum test, which can be used when ANOVA assumptions are not met.

kruskal.test(IQ.Werte~Fach, data=Intelligenz)Unbalanced One-Way ANOVA and Analysis-of-Covariance (ANCOVA)

These data are similar to the balanced ANOVA except that sample sizes may be unbalanced, or the comparisons between means might be done while controlling one or more covariates (e.g., confounding variables, pre-experimental measurements). The distributional assumptions are identical to those of the ANOVA, with the exception that for ANCOVA, the normality assumption must be evaluated by using residuals and not actual data values. When discussing statistical analysis involving Unbalanced One-Way ANOVA and Analysis-of-Covariance (ANCOVA), it’s crucial to consider how these methods differ from a balanced ANOVA and what specific challenges and adjustments they require.

Unbalanced One-Way ANOVA

Unbalanced ANOVA refers to situations where the sample sizes across the groups being compared are not equal. This imbalance can affect the analysis in several ways:

Power and Error Rates: Unequal group sizes can lead to reductions in statistical power and can impact the error rates. In general, when group sizes are equal, the power of the ANOVA test is maximized, and the estimation of variance is more reliable.

Variance Estimation: In a balanced ANOVA, the estimation of within-group and between-group variances are straightforward and less susceptible to sample size differences. In an unbalanced design, the variances might be estimated with bias if the larger sample sizes overly influence the mean estimates.

Statistical Techniques: Certain adjustments may be necessary when dealing with unbalanced data. For instance, using Type III sum of squares in the ANOVA calculations helps handle the imbalance by making the sums of squares independent of the order in which variables are entered into the model. This is particularly important in software like SAS or SPSS, where the type of sums of squares can be specified.

Analysis-of-Covariance (ANCOVA)

ANCOVA is an extension of ANOVA that introduces covariates into the analysis. These covariates are continuous variables that potentially influence the dependent variable but are not the main focus of the research. For instance, if you are studying the effect of a diet on weight loss, age might be a covariate if it’s believed to affect weight loss but is not the main variable of interest.

Control for Confounding: ANCOVA allows researchers to statistically control for the effects of covariates, which might confound the relationship between the dependent variable and the independent variable(s). This enhances the accuracy of the conclusions about the main effects.

Assumption of Homogeneity of Regression Slopes: One critical assumption in ANCOVA is that the relationship (slope) between the covariate(s) and the dependent variable must be the same across all groups (levels of the independent variable). This assumption is known as the homogeneity of regression slopes and is crucial for the proper application of ANCOVA.

Evaluating Normality Using Residuals: Unlike ANOVA, where normality is typically assessed using the actual data values or transformed values, ANCOVA requires the evaluation of normality through the residuals. Residuals are the differences between the observed values and the values predicted by the covariate(s). Analyzing residuals helps ensure that the adjustments made for the covariates are appropriately accounting for their effects, and that the error terms (residuals) of the model distribute normally.

Practical Implications

- Statistical Software: When performing either unbalanced ANOVA or ANCOVA, statistical software like R, SPSS, or SAS can be utilized to handle complex calculations, and they offer options to adjust for imbalances and include covariates effectively.

- Diagnostic Checks: It is essential to perform diagnostic checks to validate the assumptions of homogeneity of variances, normality of residuals, and homogeneity of regression slopes. Plots like residual plots, Q-Q plots, or leverage plots can be particularly helpful.

In summary, while unbalanced ANOVA and ANCOVA introduce more complexity into the analysis, they provide powerful tools for dealing with real-world data where conditions are rarely perfectly balanced or free from confounding variables. Understanding how to adjust for these complexities can significantly enhance the validity and reliability of the study’s findings.

Factorial ANOVA

Independent Factorial ANOVA

Factorial ANOVA is a statistical method that extends the basic principles of a one-way ANOVA to analyze the effects of two or more independent variables simultaneously on a continuous dependent variable. This methodology is highly versatile, supporting designs that assess both the main effects of each factor and the interactions between them, which can reveal more complex patterns of influence on the dependent variable. Here’s a breakdown of the different types of factorial designs, along with explanations of factorial ANOVA and its implications for interpreting experimental results:

Types of Factorial Designs:

Independent Factorial Design: This design involves multiple between-group independent variables (IVs). Each factor is manipulated across different groups of subjects without any repetition within the same subject.

Repeated Measures Factorial Design: Known as within-group designs, these involve one or more factors being repeated within the same subjects. This can also be referred to as a two-way (or three-way, etc., depending on the number of IVs) repeated measures ANOVA, where each subject is exposed to each condition.

Mixed Factorial Design: This design incorporates elements of both independent and repeated measures designs, where some factors are manipulated between groups and others within the same subjects.

Understanding Factorial ANOVA:

One-way ANOVA: Primarily tests for differences among two or more independent groups based on a single independent variable. It’s used to determine whether the means of the groups are significantly different, such as comparing the performance of students from different schools.

Factorial ANOVA: Unlike one-way ANOVA, factorial ANOVA can test the effects of two or more independent variables on a dependent variable. This approach not only examines the main effects of each independent variable but also explores the interaction effects between them. For instance, it could assess how different teaching methods (one factor) affect various age groups (another factor) concerning student performance.

Repeated Factorial ANOVA

Repeated measures factorial designs are specialized instances of randomized block designs, designed to handle data where measurements are taken on the same subjects under different conditions. Unlike designs where each measure is taken on independent subjects, these designs consider the correlation between measures on the same subjects.

Randomized Block and Repeated Measures Designs

In randomized block designs, subjects are grouped into blocks based on one or more characteristics that are expected to affect the outcome. For example, subjects in one block might have high IQ scores, while those in another have low IQ scores. Each block of subjects then experiences the same experimental conditions, allowing the experiment to control for the blocking variable.

When each block consists of only one subject who undergoes all conditions, this setup becomes a repeated measures design. This design is powerful for reducing variability caused by differences between subjects because each subject acts as their own control.

Counterbalancing in Crossover Designs

Counterbalancing is used to control for order effects in studies where subjects undergo multiple conditions. This is crucial because the order in which conditions are presented can influence the results. For instance, fatigue or learning might skew results in later conditions if not properly managed.

To manage this, researchers can use several strategies:

Full Crossover Design: All possible orders of conditions are used. For example, with three conditions (A, B, C), six permutations (ABC, ACB, BAC, BCA, CAB, CBA) ensure that each condition’s effect is not confounded by its position in the sequence. Subjects are assigned to each order, balancing out any order effects.

Factorial Model Construction: By including all orders and crossing them with other experimental factors, a factorial model can be constructed. This model will account for variances due to order effects and their potential interactions with other variables in the study.

Latin Square Designs

Latin square designs offer a structured way to arrange treatments so that each treatment appears only once in each row or column. This design is particularly effective for controlling order effects when there are more conditions:

Basic Setup: Each condition appears exactly once in each row and each column, an approach that efficiently manages the number of required subjects and conditions.

Balanced Latin Squares: Sometimes referred to as “digram-balanced,” these are designed such that each condition precedes and follows every other condition. This balance only works when the number of conditions is even.

Adaptation for Odd Numbers of Conditions: When the number of conditions is odd, balanced Latin squares are not feasible. Instead, researchers might use randomly permuted Latin squares to achieve a similar balance.

Mixed factorial design

Mixed Factorial Design Overview

Mixed factorial designs blend characteristics of both repeated measures and independent groups factorial designs. In this type of design, at least one factor is a “within-subjects” factor (meaning the same subjects are measured under different conditions), and at least one factor is a “between-subjects” factor (meaning different groups of subjects are measured under each condition).

General Linear Model (GLM) for Mixed Factorial ANOVA

The GLM for mixed factorial ANOVA accommodates the interaction between independent and repeated measures within the same experimental framework. This model allows researchers to assess not only the main effects of each type of factor but also their interaction effects, which can reveal how different levels of one factor affect responses at different levels of another factor across different groups of subjects.

Equation:

\[ Y_{ijk} = \mu + \tau_i + \alpha_j + (\tau\alpha)_{ij} + \beta_k + (\tau\beta)_{ik} + (\alpha\beta)_{jk} + (\tau\alpha\beta)_{ijk} + \epsilon_{ijk} \]

- \(\mu\) is the overall mean or grand mean of all observations.

- \(\tau_i\) is the effect of the ith level of the within-subjects factor.

- \(\alpha_j\) is the effect of the jth level of the between-subjects factor.

- \(\beta_k\) represents the random effects of subjects within the between-subjects factor.

- \((\tau\alpha)_{ij}\) is the interaction effect between the within-subjects factor and the between-subjects factor.

- \((\tau\beta)_{ik}\), \((\alpha\beta)_{jk}\), and \((\tau\alpha\beta)_{ijk}\) are the higher-level interactions involving subjects.

- \(\epsilon_{ijk}\) is the random error associated with each observation.

Key Features of Mixed Factorial Designs:

Complex Interactions: This design allows for the examination of interactions between subject-based (within-subject) factors and group-based (between-subject) factors, providing a deeper understanding of how different variables influence each other.

Efficient Use of Data: By combining both within-subjects and between-subjects factors, mixed designs can make more efficient use of data than pure between-subjects designs, particularly in terms of controlling for potential confounding variables that vary between individuals.

Flexibility: Mixed designs offer great flexibility in experimental setup, allowing researchers to tailor their studies to specific research questions that involve both repeated measures and independent group comparisons.

Statistical Analysis Considerations:

- Error Terms: The error structure in mixed factorial designs can be complex. The model must account for variability between subjects within groups and variability due to repeated measures on the same subjects.

- Sphericity: The assumption of sphericity, which is required in repeated measures ANOVA, must be checked and corrected if violated. This assumption tests whether the variances of the differences between all combinations of related group (level) means are equal.

- Multiple Comparisons: When significant interactions are found, post-hoc tests are often necessary to explore these interactions further. These tests can help clarify which specific levels of the factors differ from each other.

Introduction

\[ Y_{i j k}=\mu+\alpha_{i}+\beta_{j}+(\alpha \beta)_{i j}+\epsilon_{i j k} \] - ai is the main effect of factor \(A\) at leveli - \(\beta \mathrm{j}\) is the main effect of factor \(\mathrm{B}\) at level \(\mathrm{j}\) - \((aß)ij\) is the interaction effect between \(A\) and \(B\) for the level combination i,j (it is not the product ai)

Test: the total sum of squares \[ S S_{T}=S S_{A}+S S_{B}+S S_{A B}+S S_{E} \]

- \(SS_A = \sum_{i=1}^a b n (\widehat{\alpha}_i)^2\) “between rows”

- \(SS_B = \sum_{j=1}^b a n (\widehat{\beta}_j)^2\) “between columns”

- \(SS_{AB} = \sum_{i=1}^a \sum_{j=1}^b n (\widehat{\alpha\beta})_{ij}^2\) “correction”

- \(SS_E = \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (y_{ijk} - \overline{y}_{ij\cdot})^2\) Error “within cells”

- \(SS_T = \sum_{i=1}^a \sum_{j=1}^b \sum_{k=1}^n (y_{ijk} - \overline{y}_{\cdot\cdot\cdot})^2\) “total”

Marginal Means in Factorial Designs

Marginal means are computed by averaging the means across the levels of other factors in the design, thus isolating the effect of one particular factor. These means are essential for: - Understanding how each factor influences the dependent variable while controlling for the influence of other factors. - Comparing the effects of each level of a factor on the outcome, averaged over the levels of other factors involved in the study.

In factorial ANOVA, marginal means are particularly informative because they reflect the composite effect of interactions between factors on the dependent variable. For example:

- If Factor A (e.g., teaching method) and Factor B (e.g., study time) are being tested, the marginal mean for each level of Factor A would be the average outcome across all levels of Factor B, and vice versa.

- This averaging helps identify if the performance differences are primarily due to one factor regardless of the levels of another, or if the differences are specifically due to interactions between these factors.

Interpretation of Marginal Means:

- In the context of a factorial design, marginal means allow for the comparison of factor effects across different conditions or settings.

- They help in discerning whether the observed differences in outcomes are due to systematic effects of the factors under study or if they stem from the particular combinations of these factors (i.e., interactions).

R implementation

## Date exploration

my_data <- ToothGrowth

# Show a random sample

set.seed(1234)

dplyr::sample_n(my_data, 10)# Convert dose as a factor and recode the levels

my_data$dose <- factor(my_data$dose,

levels = c(0.5, 1, 2),

labels = c("D0.5", "D1", "D2"))

## Compute mean and SD by groups using dplyr R package:

require("dplyr")

group_by(my_data, supp, dose) %>%

summarise(

count = n(),

mean = mean(len, na.rm = TRUE),

sd = sd(len, na.rm = TRUE)

)# Visualize data

# library("ggpubr")

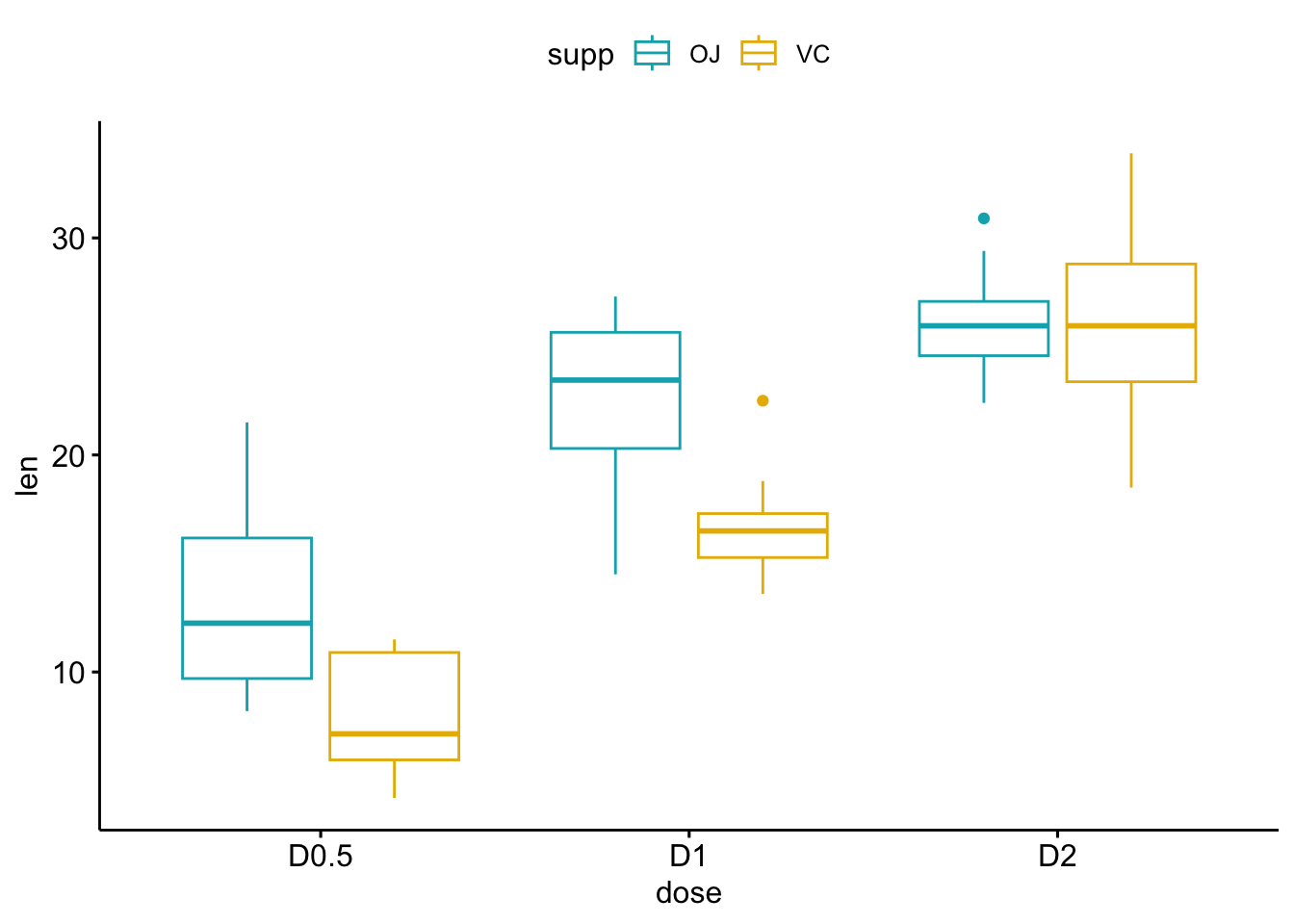

ggboxplot(my_data, x = "dose", y = "len", color = "supp",

palette = c("#00AFBB", "#E7B800"))

## Two-way interaction plot

# library("ggpubr")

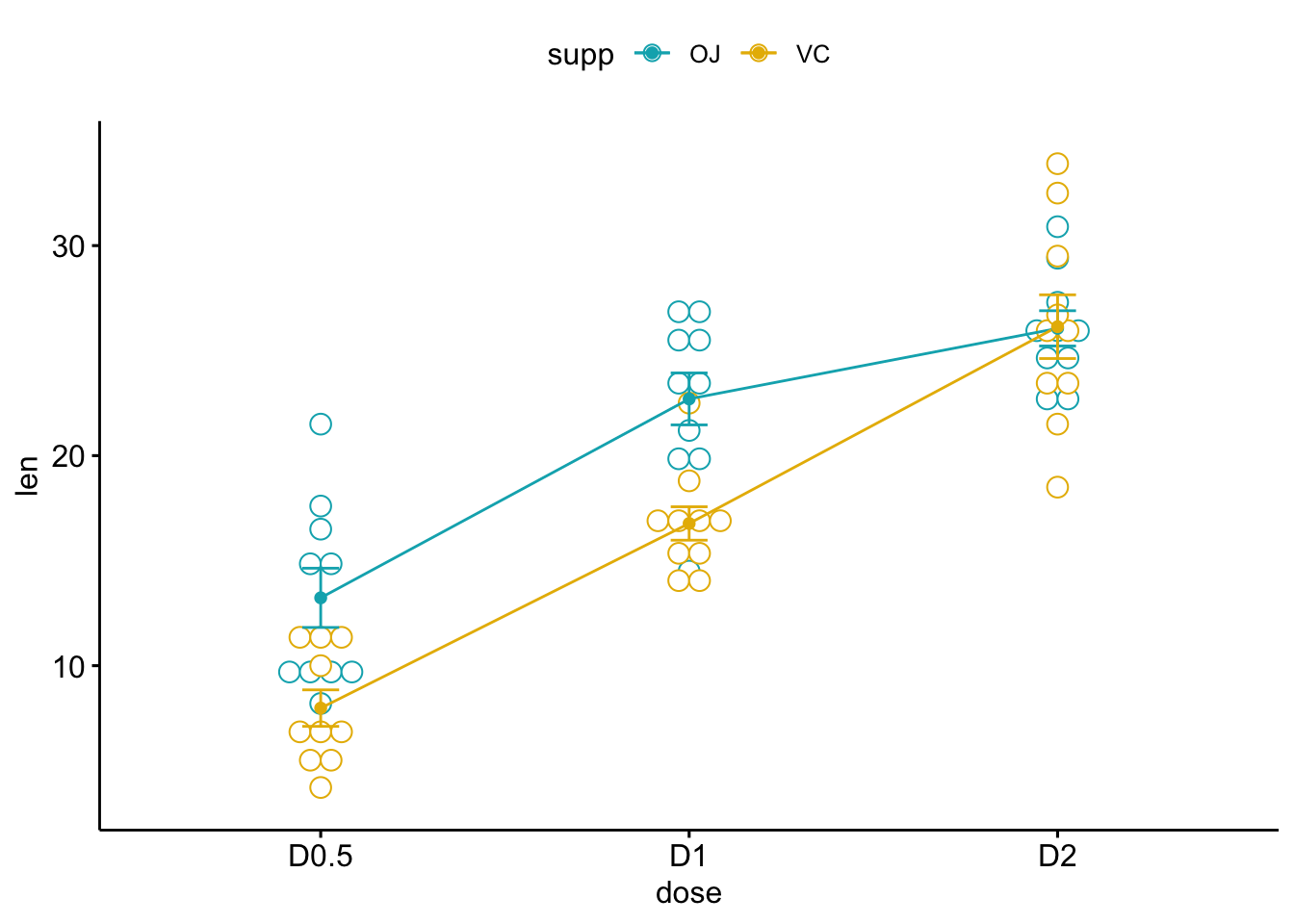

ggline(my_data, x = "dose", y = "len", color = "supp",

add = c("mean_se", "dotplot"),

palette = c("#00AFBB", "#E7B800"))

## Model Fitting

## Compute two-way ANOVA test

res.aov2 <- aov(len ~ supp + dose, data = my_data)

summary(res.aov2)## Df Sum Sq Mean Sq F value Pr(>F)

## supp 1 205.4 205.4 14.02 0.000429 ***

## dose 2 2426.4 1213.2 82.81 < 2e-16 ***

## Residuals 56 820.4 14.7

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1## Two-way ANOVA with interaction effect

res.aov3 <- aov(len ~ supp * dose, data = my_data)

res.aov3 <- aov(len ~ supp + dose + supp:dose, data = my_data)

summary(res.aov3)## Df Sum Sq Mean Sq F value Pr(>F)

## supp 1 205.4 205.4 15.572 0.000231 ***

## dose 2 2426.4 1213.2 92.000 < 2e-16 ***

## supp:dose 2 108.3 54.2 4.107 0.021860 *

## Residuals 54 712.1 13.2

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## dose的p值<2e-16(显着),表明剂量水平与显着不同的牙齿长度len 有关。

## supp * dose之间相互作用的p值为0.02(显着),表明dose与牙齿长度len之间的关系取决于supp方法

## 在交互作用不明显的情况下,应使用加性模型。

## Diagnosis

## 1. Compute mean and SD by groups using dplyr R package:

require("dplyr")

group_by(my_data, supp, dose) %>%

summarise(

count = n(),

mean = mean(len, na.rm = TRUE),

sd = sd(len, na.rm = TRUE)

)model.tables(res.aov3, type="means", se = TRUE)## Tables of means

## Grand mean

##

## 18.81333

##

## supp

## supp

## OJ VC

## 20.663 16.963

##

## dose

## dose

## D0.5 D1 D2

## 10.605 19.735 26.100

##

## supp:dose

## dose

## supp D0.5 D1 D2

## OJ 13.23 22.70 26.06

## VC 7.98 16.77 26.14

##

## Standard errors for differences of means

## supp dose supp:dose

## 0.9376 1.1484 1.6240

## replic. 30 20 10## 2. Multiple Test

pairwise.t.test(my_data$len, my_data$dose,

p.adjust.method = "BH")##

## Pairwise comparisons using t tests with pooled SD

##

## data: my_data$len and my_data$dose

##

## D0.5 D1

## D1 1.0e-08 -

## D2 4.4e-16 1.4e-05

##

## P value adjustment method: BH## Multiple pairwise-comparison between the means of groups

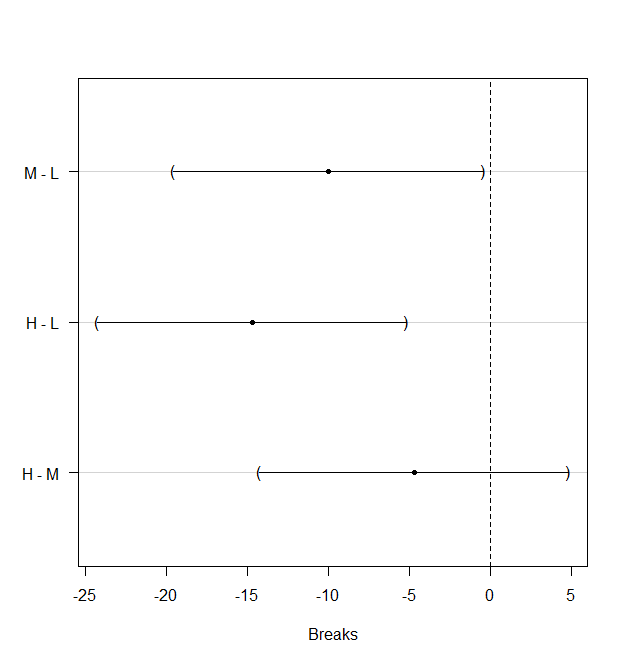

TukeyHSD(res.aov3, which = "dose")## Tukey multiple comparisons of means

## 95% family-wise confidence level

##

## Fit: aov(formula = len ~ supp + dose + supp:dose, data = my_data)

##

## $dose

## diff lwr upr p adj

## D1-D0.5 9.130 6.362488 11.897512 0.0e+00

## D2-D0.5 15.495 12.727488 18.262512 0.0e+00

## D2-D1 6.365 3.597488 9.132512 2.7e-06# library(multcomp)

summary(glht(res.aov2, linfct = mcp(dose = "Tukey")))##

## Simultaneous Tests for General Linear Hypotheses

##

## Multiple Comparisons of Means: Tukey Contrasts

##

##

## Fit: aov(formula = len ~ supp + dose, data = my_data)

##

## Linear Hypotheses:

## Estimate Std. Error t value Pr(>|t|)

## D1 - D0.5 == 0 9.130 1.210 7.543 <1e-05 ***

## D2 - D0.5 == 0 15.495 1.210 12.802 <1e-05 ***

## D2 - D1 == 0 6.365 1.210 5.259 <1e-05 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

## (Adjusted p values reported -- single-step method)## 3. Homogeneity and normality

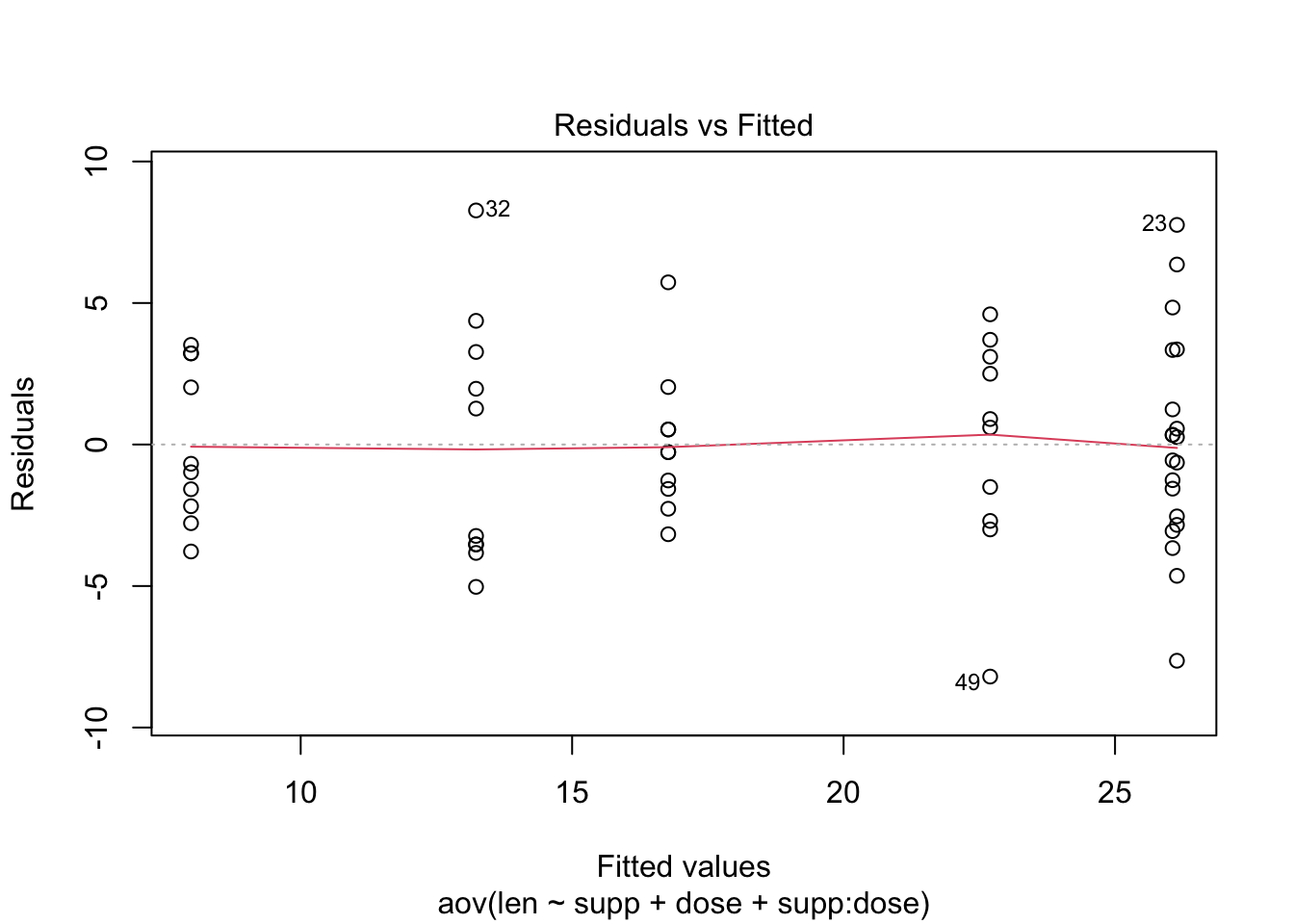

## Check the homogeneity of variance assumption (outliers)

plot(res.aov3, 1)

## Levene’s test to check the homogeneity of variances.

# library(car)

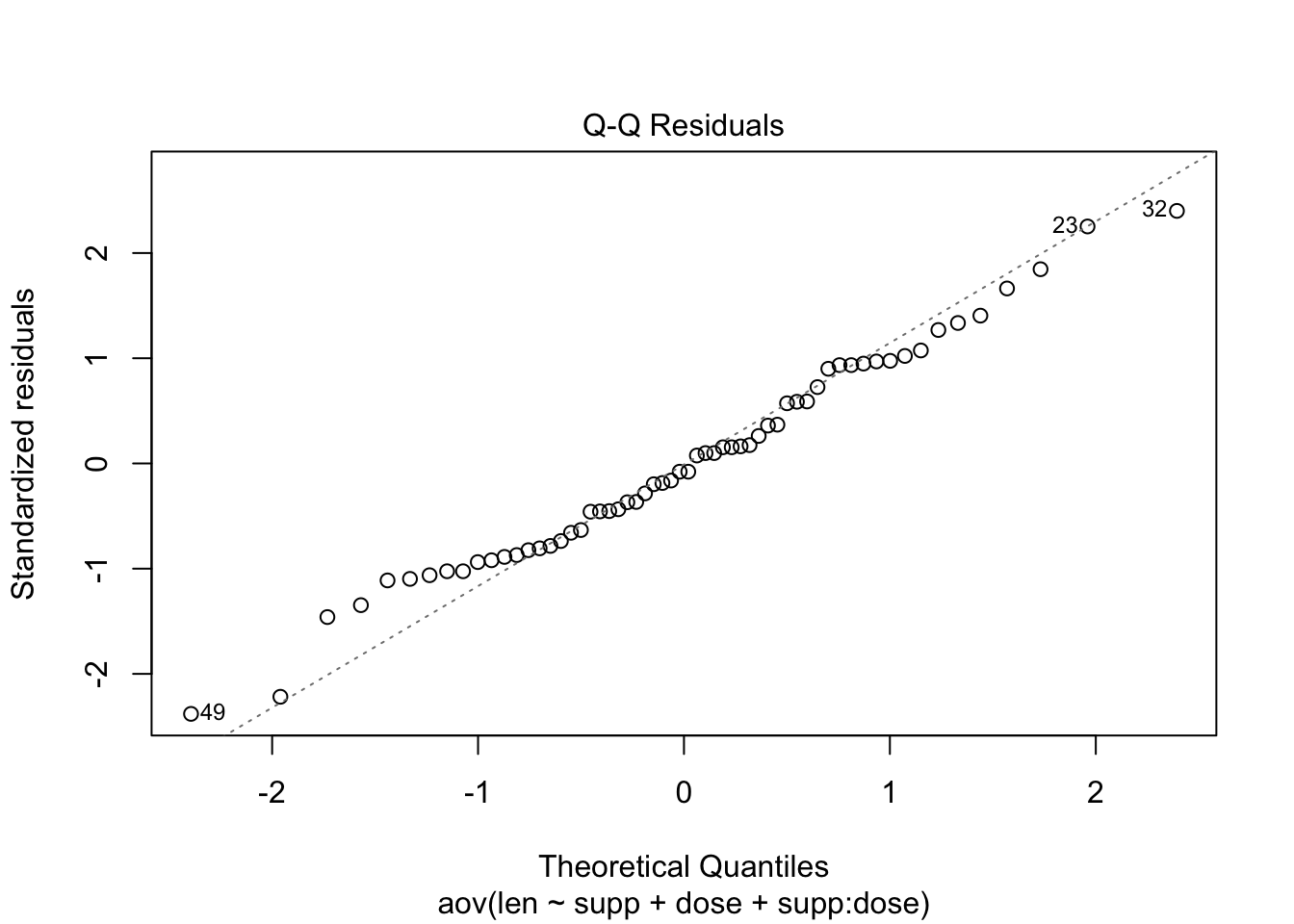

leveneTest(len ~ supp*dose, data = my_data)## Check the normality assumpttion

plot(res.aov3, 2)

# Extract the residuals, Shapiro-Wilk test

aov_residuals <- residuals(object = res.aov3)

shapiro.test(x = aov_residuals )##

## Shapiro-Wilk normality test

##

## data: aov_residuals

## W = 0.98499, p-value = 0.6694SAS Implementation

data Waste;

do Temp = 1 to 3;

do Envir = 1 to 5;

do rep=1 to 2;

input Waste @@;

output;

end;

end;

end;

datalines;

7.09 5.90 7.94 9.15 9.23 9.85 5.43 7.73 9.43 6.90

7.01 5.82 6.18 7.19 7.86 6.33 8.49 8.67 9.62 9.07

7.78 7.73 10.39 8.78 9.27 8.90 12.17 10.95 13.07 9.76

;

run;

ods graphics on;

proc glm data=Waste;

class Temp Envir;

model Waste = Temp Envir Temp*Envir;

run;

quit;

ods graphics off;

Unbalanced design

With unbalanced designs, LS-means typically are more relevant than arithmetic means for quantifying general population characteristics, since the LS-means estimate the marginal means over a balanced population, whether or not the design itself is balanced; the arithmetic means only estimate the marginal means for a population whose margins match those of the design. In particular, the arithmetic means estimate balanced population margins only when the design itself is balanced. Moreover, the LS-means match the arithmetic means when the design is balanced.

## unequal numbers of subjects in each group.

library(car)

my_anova <- aov(len ~ supp * dose, data = my_data)

Anova(my_anova, type = "III")*** Comparisons of LS-Means with Unbalanced Data;

data Drug;

input Drug Disease @;

do i=1 to 6;

input Response @;

output;

end;

cards;

1 1 42 44 36 13 19 22

1 2 33 . 26 . 33 21

1 3 31 -3 . 25 25 24

2 1 28 . 23 34 42 13

2 2 . 34 33 31 . 36

2 3 3 26 28 32 4 16

3 1 . . 1 29 . 19

3 2 . 11 9 7 1 -6

3 3 21 1 . 9 3 .

4 1 24 . 9 22 -2 15

4 2 27 12 12 -5 16 15

4 3 22 7 25 5 12 .

;

ods graphics on;

proc glm;

class Drug Disease;

model Response = Drug Disease Drug*Disease/ss3;

lsmeans Drug/ pdiff cl adjust=simulate(seed=121211 acc=.0002

report);

run; quit;

ods graphics off; *** Computing LS-Means by Hand;

data Balanced;

do Drug = 1 to 4;

do Disease = 1 to 3;

output;

end;

end;

data DrugPlus; set Drug(where=(Response ^= .)) Balanced;

proc glm data=DrugPlus;

class Drug Disease;

model Response = Drug Disease Drug*Disease;

output out=PredBal(where=(Response = .)) p=pResponse;

proc means data=PredBal;

class Drug;

ways 1;

var pResponse;

run; MANOVA - Multivariate Analysis of Variance

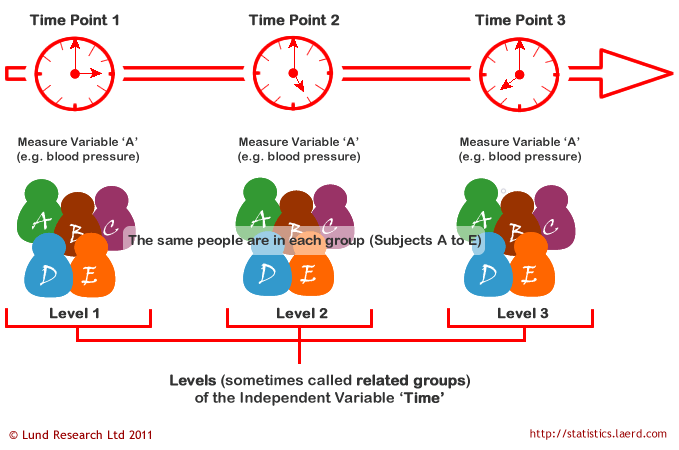

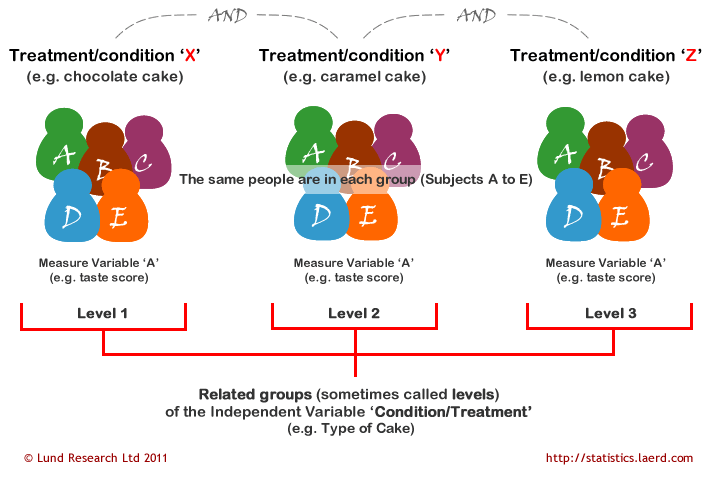

A repeated measures ANOVA is used to determine whether or not there is a statistically significant difference between the means of three or more groups in which the same subjects show up in each group. A repeated measures ANOVA is typically used in two specific situations:

- Measuring the mean scores of subjects during three or more time points.

- Measuring the mean scores of subjects under three different conditions.

General Linear Model (GLM) for Repeated Measures ANOVA

The equation for repeated measures ANOVA is: \[ Y_{ij} = \mu + \pi_i + \alpha_j + (\pi\alpha)_{ij} + \epsilon_{ij} \]

- \(\mu\) is the overall mean or grand mean of the data.

- \(\pi_i\) represents the effect due to the \(i\)-th subject.

- \(\alpha_j\) is the effect of the \(j\)-th condition or treatment.

- \((\pi\alpha)_{ij}\) is the interaction between subject \(i\) and condition \(j\), which usually is not included (set to zero) in simple models for ease and clarity.

- \(\epsilon_{ij}\) is the error term, capturing random effects not explained by the model.

This model accounts for the repeated measures on the same subjects by including a term for each subject, allowing it to analyze within-subject variations effectively.

To ensure valid results from a repeated measures ANOVA, certain assumptions must be met:

Independence: Each observation should be independent of others. This assumption is crucial for any statistical test involving inference. In the context of repeated measures, the design inherently involves related observations (repeated on the same subjects); hence the independence primarily concerns the error terms \(\epsilon_{ij}\), which should be independent.

Normality: The distribution of residuals (not the raw data) should be approximately normally distributed. This can be checked through:

- Histograms or Q-Q plots of the residuals.

- Formal tests of normality such as the Shapiro-Wilk test.

Repeated measures ANOVA is robust against mild violations of normality, especially with larger sample sizes. For severe violations, data transformation or non-parametric alternatives like the Friedman test might be necessary.

Sphericity: The variances of the differences among all combinations of related groups (conditions) must be equal. This assumption, unique to repeated measures and multivariate designs, can be tested using Mauchly’s Test of Sphericity. If sphericity is violated, adjustments such as Huynh-Feldt, Greenhouse-Geisser, or lower-bound corrections are used to correct the degrees of freedom used in F-tests, affecting the p-values.

If assumptions are violated, several strategies can be employed:

- Transforming the data to improve normality or stabilize variances.

- Using corrections for violations of sphericity to ensure valid F-tests.

- Resorting to non-parametric methods when the data significantly deviate from these assumptions.

Other

Heteroscedastic Responses

If the error variances are not constant, then the ordinary methods might be biased (in the sense of providing higher error rates than advertised) or inefficient (in the sense that the method lacks power to detect real differences).

Repeated Measures ANOVA Data

When there are repeated measures on the same experimental unit, the crucial independence assumption that is used for the previous models no longer applies. For example, the data may contain repeated measures on blood pressure for an individual. In such cases, you can model the dependence of blood pressure measurements by using a variety of possible dependence structure models, and perform multiplicity-adjusted analyses within the context of such models. Normality (or at least approximate normality) remains an important assumption for these models.

Multivariate Responses with Normally Distributed Data

In these models, there are multiple measurements on the same individual. While repeated measures models usually assume that the measurements are taken on the same characteristic (like blood pressure), the multivariate response models allow completely different scales of measurement. For example, blood pressure and self-rated anxiety level form a multivariate response vector. Multiple inferences from such data are improved by incorporating the correlations among such measurements. In addition to the normality assumption, the multivariate observation vectors also are assumed independent, with constant covariance matrices. Our suggested method of analysis will allow covariates as well, so you can perform multiple comparisons with multivariate analysis of covariance (MANCOVA) data.

Independent Observations from Parametric Nonnormal Distributions

As an example, suppose you know that the observations are counts of defects on a manufactured item, and you wish to compare shifts A, B, and C. The model used may be Poisson, and you still wish to perform multiple comparisons. In this case, you can use any of several SAS procedures to fit the Poisson model, and can perform adjustments for multiple comparisons easily using the fitted results from such models.

Dependent Observations from Parametric Nonnormal Distributions

Following the previous example, suppose you know that the counts of defects on manufactured items are associated with different machines. You still wish to compare shifts A, B, and C, but you want to account for the machine effect. In this case, you may model the observations on a common machine as dependent, using a random effects model, where the machine effect is considered random. Again the model may be Poisson, but with a repeated measures component. In this case, you can use PROC GLIMMIX both to perform the repeated measures modeling and to perform the multiple comparisons.

Multiple Comparison

Post-hoc Comparisons

Significance of F Statistic: If the overall F statistic from an ANOVA is not statistically significant (i.e., p-value exceeds the alpha level, typically 0.05), it indicates that there is no statistical evidence to suggest that there are any differences among the group means. In such cases, it is standard practice not to perform any further contrasts or post hoc tests because the initial analysis did not reject the null hypothesis of no group differences.

If the F statistic is significant, suggesting differences among the group means, post hoc tests can be used to find out which specific groups differ from each other. Here are some commonly used post hoc tests:

- Tukey’s Honestly Significant Difference (HSD) Test:

- Pros: Well-balanced in terms of Type I error control; not as conservative as Bonferroni, leading to slightly more powerful results where moderate control is sufficient.

- Cons: Can still be too conservative compared to tests without any corrections.

- Bonferroni Correction:

- Method: This test adjusts the p-values by multiplying them by the number of comparisons. For instance, if three t-tests are conducted, each p-value is multiplied by three.

- Pros: Highly conservative, minimizing the risk of Type I errors (false positives).

- Cons: Can drastically reduce statistical power, particularly when many comparisons are made, possibly leading to Type II errors (false negatives).

- Holm-Bonferroni Method:

- Method: A sequential adjustment where the smallest p-value is multiplied by the total number of tests, the next smallest by one fewer, and so on. This provides a step-down method that is less conservative than Bonferroni.

- Pros: Offers a good balance between controlling Type I error and maintaining statistical power.

- Scheffe’s Test:

- Method: A complex formula for calculating adjustments, rarely used due to its complexity and conservativeness.

- Pros: Very robust, as it allows for all possible comparisons post-hoc.

- Cons: May be too conservative and complex for practical use.

- Welch’s F-test:

- Method: Adjusts the degrees of freedom used in the F-test to account for group variances that are unequal. This test is especially useful when the assumption of homogeneity of variances is violated.

- Pros: Provides a more accurate assessment of the significance of the group differences when variances are unequal.

- Kruskal-Wallis Test:

- Method: A non-parametric alternative to ANOVA, used when the data does not meet the assumptions necessary for ANOVA. It compares medians from two or more groups.

- Pros: Does not assume a normal distribution and is useful for ordinal data or skewed distributions.

- Cons: Generally less powerful than ANOVA in detecting differences when the normality assumption is met.

Introduction

Multiplicity Problem

There are real effects from multiplicity.

- confounding effects

- nonresponse effects

- placebo effects

- learning effects

- carryover effects

The problem with all statistical tests is the fact that the (overall) error rate increases with increasing number of tests. \[1 - (1 - \alpha)^m.\]

Error Rates

Comparisonwise Error Rate (CER)

Typical inferences are performed using the \(95 \%\) confidence level or \(5 \%\) significance level. In either case, the comparisonwise error rate (CER) is \(5 \%\). For confidence intervals, CER is defined as \[\mathrm{CER}=P(\text{Interval does not contain the parameter}).\] A typical two-sided confidence interval has the form

(parameter estimate) \(\pm\) (critical value) \(\times\) (standard error of the estimate).

For example, if the parameter of interest is a population mean \(\mu\), and the data are normally distributed, then the usual two-sided \(95 \%\) confidence interval for \(\mu\) is \[ \bar{y} \pm t_{975, n-1} \times s_{y} / \sqrt{n} \] where - \(\bar{y}\) is the estimate of the population mean - \(s_{y}\) is the sample standard deviation - \(n\) is the sample size - \(s_{y} / \sqrt{n}\) is the standard error of the estimated mean.

The critical value is \(t_{975, n-1}\), which is the \(1-0.05 / 2\) quantile of the \(t\) distribution with \(n-1\) degrees of freedom. A one-sided upper confidence interval for \(\mu\) might be all values below \[ \bar{y}+t_{.95, n-1} \times s_{y} / \sqrt{n} \] For tests of hypotheses, CER is defined as \[ \mathrm{CER}=P\left(\text { Reject } H_{0} \mid H_{0}\right. \text { is true). } \]

Familywise Error Rate (FWE)

FWE for Simultaneous Confidence Intervals

The FWE is the probability of at least one erroneous inference, defined for simultaneous confidence intervals as \[\text{FWE (at least one interval is incorrect) 1 (all intervals are correct).}\]

FWE for Multiple Tests of Hypotheses

The family-wise error rate is defined as the probability of rejecting at least one of the true \(H_0\)

In the case of multiple tests of hypotheses, some of the hypotheses \(H_{0 j}\) could be true, and others could be false. Suppose the true state of nature is that the particular null hypotheses corresponding to \(j_{1}, \ldots, j_{m}\) are true, and all other null hypotheses are false. In other words, \(H_{0 j_{1}}, H_{0 j_{2}}, \ldots, H_{0 j_{m}}\) are true, and the remaining \((k-m)\) hypotheses are false. The FWE is then defined as

\[FWE =P( \text{reject at least one of} H_{0 j_{1}}, H_{0 j_{2}}, \ldots, H_{0 j_{m}} \mid H_{0 j_{1}}, H_{0 j_{2}}, \ldots, H_{0 j_{m}} \text{all are true})\].

Control of the FWE: Weak and Strong

An MCP is said to control the FWE in the weak sense if it controls the FWE under the complete null configuration, but not under all other configurations. Despite the fact that the terms “weak control” and “strong control” are used in conjunction with FWE, you should note that they really refer to different error rates. Weak control refers only to controlling the probability that the complete null hypothesis is rejected, and allows Type I errors in excess of the usual 5% value (for example, for the component hypotheses).

A method that controls the FWE in the strong sense will result in a Type I error for any component hypothesis no more than 5% of the time.

Directional Decisions and Type III Error Rates

A directional error (sometimes called a Type III error) is defined as the probability of misclassifying the sign of an effect. If you reject the hypothesis H0 : μ = 0 in favor of the (twosided) alternative HA : μ ≠ 0 using a CER= 0.05 level test, can you then claim that the sign of the true mean μ is the same as the sign of the estimated mean y ?

A type III error is where you correctly reject the null hypothesis, but it’s rejected for the wrong reason. This compares to a Type I error (incorrectly rejecting the null hypothesis) and a Type II error (not rejecting the null when you should). Type III errors are not considered serious, as they do mean you arrive at the correct decision. They usually happen because of random chance and are a rare occurrence.

You can also think of a Type III error as giving the right answer (i.e. correctly rejecting the null) to the wrong question. In other words, both your null and alternate hypotheses may be poorly worded or completely incorrect.

For MCPs, the Type III FWE is the probability that the sign of any tested effect is misclassified.

False Discovery Rate (FDR)

Benjamini and Hochberg (1995) referred to the expected proportion of erroneously rejected null hypotheses among the rejected ones as the False Discovery Rate, or FDR. Formally, for a given family of k hypotheses and a given MCP, let R= number of hypotheses rejected, and let V = the (unknown) number of erroneously rejected ones. Define V/R = 0 in case R=0. Then FDR is the expected value of V/R

\[ \begin{array}{cccc} \hline & H_{0} \text { accepted } & H_{0} \text { rejected } & \text { Total } \\ \hline H_{0} \text { true } & m-V & V & m \\ H_{0} \text { false } & k-m-R+V & R-V & k-m \\ \text { Total } & k-R & R & k \\ \hline \end{array} \]

\[ \mathrm{FDR}=E(V / R) \] (assuming \(0 / 0\) is defined as 0 ), whereas \[ \mathrm{FWE}=P(V>0) \] Under the overall null hypothesis, FDR and FWE are equal, since in this case \(V / R=1\) when there is at least one rejection, and \(V / R=0\) when there are no rejections.

The adjusted P

Marginal p-value is based on the marginal p-values, which do not account for a multiplicity adjustment.

The adjusted P value is the smallest familywise significance level at which a particular comparison will be declared statistically significant as part of the multiple comparison testing. A separate adjusted P value is computed for each comparison in a family of comparisons.

The following show the R code about teh comparsion of adjusted and un-adjusted p-values

library(multcomp)

data(thuesen,package = "ISwR")

thuesen <- read.sas7bdat("~/Desktop/SASUniversityEdition/myfolders/Daten/thuesen.sas7bdat")

thuesen.lm <- lm(short.velocity ~ blood.glucose,data = thuesen)

thuesen.mc <- glht(thuesen.lm, linfct = diag(2))

## With adjustment.

summary(thuesen.mc,

test = adjusted(type = "bonferroni"))

## without adjustment.

summary(thuesen.mc, test = univariate())Furthermore, there are different methods for p value adjust.

Input = ("

Food Raw.p

Blue_fish .34

Bread .594

Butter .212

Carbohydrates .384

Cereals_and_pasta .074

Dairy_products .94

Eggs .275

Fats .696

Fruit .269

Legumes .341

Nuts .06

")

Data = read.table(textConnection(Input),header=TRUE)

## Order data by p-value

Data = Data[order(Data$Raw.p),]

## Perform p-value adjustments and add to data frame

Data$Bonferroni =

p.adjust(Data$Raw.p,

method = "bonferroni")

Data$BH =

p.adjust(Data$Raw.p,

method = "BH")

Data$Holm =

p.adjust(Data$ Raw.p,

method = "holm")

Data$Hochberg =

p.adjust(Data$ Raw.p,

method = "hochberg")

Data$Hommel =

p.adjust(Data$ Raw.p,

method = "hommel")

Data$BY =

p.adjust(Data$ Raw.p,

method = "BY")

Food Raw.p Bonferroni BH Holm Hochberg Hommel BY

11 Nuts 0.060 0.660 0.4070 0.66 0.66 0.5485714 1

5 Cereals_and_pasta 0.074 0.814 0.4070 0.74 0.74 0.5920000 1

3 Butter 0.212 1.000 0.5280 1.00 0.94 0.8700000 1

9 Fruit 0.269 1.000 0.5280 1.00 0.94 0.9280000 1

7 Eggs 0.275 1.000 0.5280 1.00 0.94 0.9280000 1

1 Blue_fish 0.340 1.000 0.5280 1.00 0.94 0.9400000 1

10 Legumes 0.341 1.000 0.5280 1.00 0.94 0.9400000 1

4 Carbohydrates 0.384 1.000 0.5280 1.00 0.94 0.9400000 1

2 Bread 0.594 1.000 0.7260 1.00 0.94 0.9400000 1

8 Fats 0.696 1.000 0.7656 1.00 0.94 0.9400000 1

6 Dairy_products 0.940 1.000 0.9400 1.00 0.94 0.9400000 1Basic Statistical Concepts

The hypotheses described here are for the two-sample t-test, a common test for comparing two groups. The assumptions of the two-sample t-test are important: random, independent samples from the two groups, common variances, and normally distributed data.

- The null hypothesis is \(H_{0}: \mu_{1}=\mu_{2} ;\) that is, the hypotheses that the population means are equal.

- The alternative hypothesis is \(H_{A}: \mu_{1} \neq \mu_{2} ;\) that is, the hypotheses that the population means are not equal.

- The test statistic is \(T=\frac{\bar{X}_{1}-\bar{X}_{2}}{s_{p} \sqrt{\frac{1}{n_{1}}+\frac{1}{n_{2}}}}\), where \(s_{p}^{2}=\frac{\left(n_{1}-1\right) s_{1}^{2}+\left(n_{2}-1\right) s_{2}^{2}}{n_{1}+n_{2}-2}\).

- The decision rule is to reject \(H_{0}\) if \(|T| \geq t_{1-\alpha / 2, n-2}\), where \(t_{1-\alpha / 2, n-2}\) is the critical value.

- The \(p\) -value is the probability of observing a test statistic as large as or larger than the \(|T|\) that was observed in the study, assuming the null hypothesis is true.

By construction, the \(p\) -value is found \(\leq \alpha\) wherever \(|T| \geq t_{1-\alpha / 2, n-2} .\) Thus, when all of the assumptions are satisfied, \[ P\left(p \leq \alpha \mid H_{0} \text { is true }\right)=\alpha \] This leads to an important point:

When the null hypothesis is true and when all assumptions are satisfied, the \(p\) -value has a uniform distribution.

From the parameter, the adjusted and unadjusted p value can be calculated

## Calculation without adjustment.

## regression coefficients β and their covariance matrix

betahat <- coef(thuesen.lm)

Vbetahat <- vcov(thuesen.lm)

## compute two individual t test statistics and correlation matrix

C <- diag(2)

Sigma <- diag(1 / sqrt(diag(C %*% Vbetahat %*% t(C))))

t <- Sigma %*% C %*% betahat

Cor <- Sigma %*% (C %*% Vbetahat %*% t(C)) %*% t(Sigma)

## Use the pmvt function of the mvtnorm package to calculate the adjusted p value from the basic bivariate t distribution

library("mvtnorm")

thuesen.df <- nrow(thuesen) - length(betahat)

q <- sapply(abs(t), function(x) 1 - pmvt(-rep(x, 2),

rep(x, 2),

corr = Cor,

df = thuesen.df))

## 获得了多重调整的p值 q1 <0.001且q2 = 0.064

## compute the critical value u1−α 计算临界值

delta <- rep(0, 2)

myfct <- function(x, conf) {

lower <- rep(-x, 2)

upper <- rep(x, 2)

pmvt(lower, upper, df = thuesen.df, corr = Cor,

delta, abseps = 0.0001)[1] - conf

}

u <- uniroot(myfct, lower = 1, upper = 5, conf = 0.95)$root

round(u, 3)Functions in glht package in R

| Functions | Descriptions |

|---|---|

glht.mc$model |

The fitted model |

glht.mc$linfct |

linear conflict functions |

glht.mc$vcov |

Covariance matrix |

glht.res <- summary(glht.mc) glht.res$test$pvalues |

P-values |

summary(warpbreaks.mc, test = Ftest()) |

Global F-Test |

summary(warpbreaks.mc, test = Chisqtest()) |

Wald测试 |

summary(warpbreaks.mc, test = univariate()) |

未调整的p值, 不考虑多重性执行了m个单独t检验 |

summary(warpbreaks.mc, test = adjusted(type = "bonferroni")) |

Bonferroni校正 |

Bonferroni and Šidák Methods

LSD (least significance difference)

least significant difference method. First proposed by Fisher, it is essentially a t-test.

For Two independent sample t test:

\[t=\frac{\bar{X}_{1}-\bar{X}_{2}}{\sqrt{S_{c}^{2}\left(\frac{1}{n_{1}}+\frac{1}{n_{2}}\right)}}\] \[S_{c}^{2}=\frac{\left(n_{1}-1\right) S_{1}^{2}+\left(n_{2}-1\right) S_{2}^{2}}{n_{1}+n_{2}-2}\] is the variance of the joint estimate of the two samples, under the premise that the sample variance is uniform

The LSD method also performs a t-test of pairwise comparison. The difference is that under the premise of meeting the homogeneity of variance, the LSD method uses the joint variance of all samples to estimate the standard error of the mean difference, rather than the joint variance of the two samples to be compared. Take the comparison of the mean difference between the three samples as an example, the formula is

\[\begin{aligned} &S_{c}^{2}=\frac{\left(n_{1}-1\right) S_{1}^{2}+\left(n_{2}-1\right) S_{2}^{2}+\left(n_{3}-1\right) S_{3}^{2}}{n_{1}+n_{2}+n_{3}-3} \end{aligned}\]

The LSD method calculates the smallest significant difference, namely \[\begin{aligned} &L S D=t_{\alpha / 2} \sqrt{S_{c}^{2}\left(\frac{1}{n_{1}}+\frac{1}{n_{2}}\right)} \end{aligned}\]

The test level of LSD method for single comparison is still α. The LSD test has the highest sensitivity, but increases the probability of Type 1 error as the frequency of comparisons increases. To solve this problem, the Sidak method and the Bonferroni method appeared.

Šidák

The Sidak method is also a t test, and the calculation formula is the same as that of the LSD method. But the Sidak method adjusts for a. If there are k groups, the number of pairwise comparisons for the k groups is \(c=\frac{k(k-1)}{2}\) Then after c comparisons, the cumulative probability of making a class of errors is: \(1-\left(1-\alpha_{a}\right)^{c}\) makes the above formula equal to 0.05, which can be reversed to deduce the adjusted \(\alpha_{a} \quad\) . For example, after 6 post-hoc comparisons, the Sidak method = 0.0085, and \(\alpha_{a}\) is used as the significance level of a single comparison. Obviously, \(\alpha_{a}\) becomes smaller. Since \(\alpha_{a}\) subtracts You, the conclusion tends to accept the null hypothesis, so this method is much more conservative than the LSD method.

The rationale for this method is the Boole inequality: \[ P\left(A_{1} \text { or } A_{2} \text { or } \ldots \text { or } A_{k}\right) \leq P\left(A_{1}\right)+P\left(A_{2}\right)+\cdots+P\left(A_{k}\right) \]

\[ P\left(\left\{\text { Reject } H_{01}\right\} \text { or }\left\{\text { Reject } H_{02}\right\}\right) \leq P\left(\text { Reject } H_{01}\right)+P\left(\text { Reject } H_{02}\right) \]

For the Šidák method, recall that you can reject an individual hypothesis \(H_{0 j}\) if \(p_{j} \leq 1-(1-\alpha)^{1 / k}\); or equivalently, when \(1-\left(1-p_{j}\right)^{k} \leq \alpha\), where \(\alpha\) is the desired FWE level. This gives you the Šidák adjusted \(p\) -values. Šidák Adjusted \(p\) -value for Hypothesis \(H_{0 j}\); \[ \tilde{p}_{j}=1-\left(1-p_{j}\right)^{k} . \]

Bonferroni

The Bonferroni method is similar to the Sidak method, and α is also adjusted on the basis of the LSD method. The adjustment method is based on Bonferroni’s inequality. If there are k groups, the calculation formula is \[\alpha^* = \alpha / k\]

The Bonferroni method is generally considered to be the most conservative. When the number of comparisons is small, the effect of this method is better. When the number of comparisons is large (such as k>10), the adjustment of \(\alpha\) is somewhat overcorrected and the effect is not as good as Sidak

library(multcomp)

## Create a matrix where each *row* is a contrast

K <- rbind(c(1, -1/2, -1/2), ## ctrl vs. average of trt1 and trt2

c(1, -1, 0)) ## ctrl vs. trt1

fit.gh <- glht(fit, linfct = mcp(group = K))

## Individual p-values

summary(fit.gh, test = adjusted("none"))

## Bonferroni corrected p-values

summary(fit.gh, test = adjusted("bonferroni"))While the Boole inequality is directly applicable to multiple hypothesis testing, the Bonferroni inequality is directly applicable to simultaneous confidence intervals. As an example, suppose that you have constructed k=10 simultaneous confidence intervals, all at the CER level 0.05/k=0.05/10=0.005, corresponding to 99.5% confidence intervals. Then the simultaneous confidence level is

\[ \begin{array}{l} P(\{\text { Interval } 1 \text { correct }\} \text { and } \ldots \text { and }\{\text { Interval } 10 \text { correct }\}) \\ \geq 1-\{P(\text { Interval } 1 \text { incorrect })+\cdots+P(\text { Interval } 10 \text { incorrect })\} \\ =1-10(0.005) \\ =0.95 . \end{array} \]

Bonferroni Adjusted \(p\) -value for Hypothesis \(H_{0 j}\); \[ \tilde{p}_{j}=\left\{\begin{array}{ccc} k p_{j} & \text { if } & k p_{j} \leq 1 \\ 1 & \text { if } & k p_{j}>1 \end{array}\right. \]

Bonferroni and Šidák Adjusted p-Values Using the DATA Step

data pvals1;

input test pval @@;

bon_adjp = min(1,10*pval);

sid_adjp = 1 - (1-pval)**10;

datalines;

1 0.0911 2 0.8912

3 0.0001 4 0.5718

5 0.0132 6 0.9011

7 0.2012 8 0.0289

9 0.0498 10 0.0058

;

proc sort data=pvals1 out=pvals1;

by pval;

proc print data=pvals1;

run; Bonferroni and Šidák Adjusted p-Values Using PROC MULTTEST

proc multtest inpvalues(pval)=pvals1 bon sid out=outp;

proc sort data=outp out=outp;

by pval;

proc print data=outp label;

run;Bonferroni and Šidák methods are easy to implement, and they correspond naturally to confidence intervals. Šidák’s method provides slightly more power, but occasionally does not control the FWE. However, when confidence intervals are not required, adaptive procedures are more powerful, although they might not control the FWE in some cases. Simulation studies should be used to understand this issue.

- For inferences with dependent data: ⇒ Use Bonferroni tests or intervals.

- For inferences with independent data: ⇒ Use Šidák tests or intervals.

Schweder-Spjøtvoll p-Value Plot

This plot, which is very useful for assessing multiplicity, depicts the relationship between values \(q=1-p\) and their rank order. Specifically, if \(q_{(1)} \leq \ldots \leq q_{(k)}\) are the ordered values of the \(q\) ’s, then \(q_{(1)}=1-p_{(k)}, q_{(2)}=1-p_{(k-1)}\), etc. The method is to plot the \(\left(j, q_{(j)}\right)\) pairs. If the hypotheses all are truly null, then the \(p\) -values will behave like a sample from the uniform distribution, and the graph should lie approximately on a straight diagonal line. Deviations from linearity, particularly points in the upper-right corner of the graph that are below the extended trend line from the points in the lower-left corner, suggest hypotheses that are false, since their \(p\) -values are too small to be consistent with the uniform distribution.

*** Schweder-Spjøtvoll p-Value Plot Using PROC MULTTEST ;

data pvals1;

input test pval @@;

bon_adjp = min(1,10*pval);

sid_adjp = 1 - (1-pval)**10;

datalines;

1 0.0911 2 0.8912

3 0.0001 4 0.5718

5 0.0132 6 0.9011

7 0.2012 8 0.0289

9 0.0498 10 0.0058

;

ods graphics on;

proc multtest inpvalues(pval)=pvals1 plots= RawUniformPlot;

run;

ods graphics off;Figure: Schweder-Spjøtvoll (Uniform Probability) Plot

How does the plot look when there are no true effects

ods graphics on;

proc multtest inpvalues(probt)=ttests plots= RawUniformPlot;

run;

ods graphics off;Figure: Plot of p-Values for the Cold Study

Adaptive Methods

FWE of an MCP depends upon the number of true null hypotheses, m. In order to protect the FWE in all possible circumstances, you had to protect it for the complete null hypothesis where all nulls are true (i.e., where m=k). Thus, in the Bonferroni method, you use k as a divisor for the critical value (and as a multiplier for the adjusted p-value). If you know m, the number of true nulls, then you may use m as a divisor (or multiplier for adjusted p-values) instead of k, and still control the FWE. From the examination of the Schweder-Spjøtvoll plot, you can estimate the total number of true null hypotheses \(\hat m\), and modify the critical value of the Bonferroni procedure by rejecting any hypothesis\(H_{0 j}\) for which \(p_{j} \leq \alpha / \hat{m} .\)

Adaptive Holm (AHOLM) method specified in the following program.

*** Estimating the Number of Null Hypotheses;

ods graphics on;

proc multtest inpvalues(pval)=pvals1

plots= RawUniformPlot aholm;

run;

ods graphics off;Figure: Estimating the Number of True Nulls Using Hochberg and Benjamini’s Method

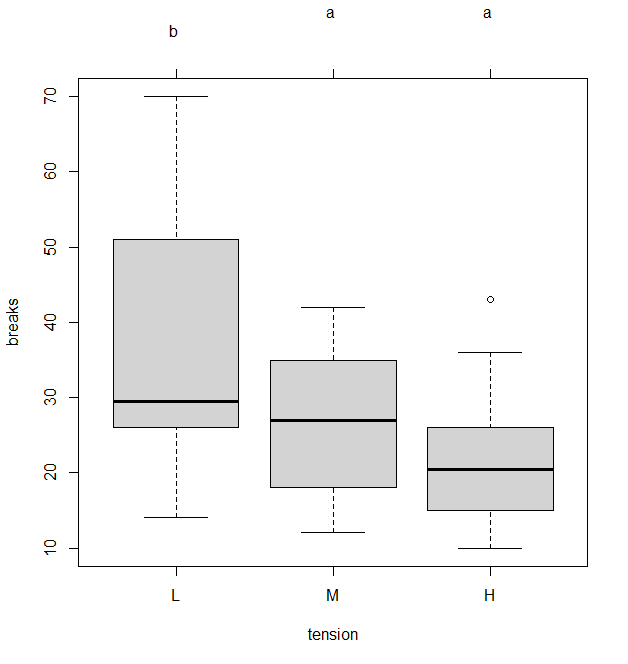

MCP among Treatment Means in the One-Way Balanced ANOVA

LS-Means