Multiple Imputation Project

Multiple Imputation

Introduction

MI Attributes

MI is model-based. It ensures statistical transparency and integrity of the imputation process. To ensure robustness in analysis, the imputation model should be broader than the analysis models that will be analyzed using the imputed data (see Section 2.2). The model that underlies the imputation process is often an explicit distributional model (e.g., multivariate normal), but good results may also be obtained using techniques where the imputation model is implicit (e.g., nearest neighbor imputation).

MI is stochastic. It imputes missing values based on draws of the model parameters and error terms from the predictive distribution of the missing data, \(Y_{\text {mis. }}\). For example, in linear regression imputation of the missing values of a continuous variable, the conditional predictive distribution may be: \(\hat{Y}_{k, m i s}=\hat{\beta}_0+\hat{\beta}_{j \neq k} \cdot y_{j \neq k}+e_k\). In forming the imputed values of \(y_{k, \text { mis }}\), the individual predictions incorporate multivariate draws of the \(\hat{\beta}\) s and independent draws of \(e_k\) from their respective estimated distributions. In a hot deck, predictive mean, or propensity score matching imputation, the donor value for \(y_{k, \text { mis }}\) is drawn at random from observed values in the same hot deck cell or in a matched “neighborhood” of the missing data case.

MI is multivariate. It preserves not only the observed distributional properties of each single variable but also the associations among the many variables that may be included in the imputation model. It is important to note that under the assumption that the data are missing at random (MAR), the multivariate relationships that are preserved are those relationships that are reflected in the observed data, \(Y_{o b s .}\)

MI employs multiple independent repetitions of the imputation procedure that permit the estimation of the uncertainty (the variance) in parameter estimates that is attributable to imputing missing values. This is variability that is in addition to the recognized variability in the underlying data and the variability due to sampling.

MI is robust against minor departures from strict theoretical assumptions. No imputation model or procedure will ever exactly match the true distributional assumptions for the underlying random variables, \(Y\), nor the assumed missing data mechanism. Empirical research has demonstrated that if the more demanding theoretical assumptions underlying MI must be relaxed that applications to data can produce estimates and inferences that remain valid and robust (Herzog and Rubin 1983).

Theoretical Foundation and SAS Implementation: - Bayesian Framework: MI fundamentally relies on the Bayesian framework, where imputations are considered random draws from the posterior predictive distribution of the missing data, conditioned on the observed data and a set of parameters.

MAR Assumption in MI

- MAR (Missing At Random): This assumption posits that the missingness of the data is related to the observed data but not to the values of the data that are missing. In simpler terms, after accounting for the observed data, the missingness is random and does not depend on unobserved data.

- Usage in MI: Under MAR, MI assumes that any systematic differences between missing and observed values can be explained by the observed data. Thus, if the model correctly incorporates all variables that influence the propensity for missing data, the imputations should be unbiased.

- MNAR: MI is not limited to MAR situations. It can also be employed under Missing Not At Random (MNAR) assumptions, where missingness depends on unobserved data. MI can incorporate techniques like pattern-mixture models, which are particularly useful in MNAR contexts to model the different missing data mechanisms explicitly.

- Sensitivity Analysis: MI allows for the testing of various scenarios under which the missingness mechanism might operate, providing a robust tool for understanding the potential biases introduced by different types of missing data.

- Choosing Variables: When implementing MI, selecting appropriate ancillary variables is crucial. These choices can be made prospectively (based on prior knowledge about the data and subject matter considerations) or retrospectively (based on observed patterns in the data).

Comparing MI with MMRM

- Similar Base Assumptions: Both MI and MMRM operate under the MAR framework. They assume that once we account for the observed data, the reasons for the missing data are effectively random and not dependent on unknown factors.

- Flexibility in Model Specification:

- MMRM: Uses all available data and incorporates assumptions about the missingness directly into its model. It’s typically used for analyzing data from clinical trials where the interest is in the change from baseline and where missing data occur in longitudinal measurements.

- MI: Unlike MMRM, MI can include ancillary or auxiliary variables in the imputation model. These are variables that might correlate with the likelihood of missing data but do not necessarily correlate with the outcome variable. This inclusion can enhance the quality of the imputations and allow for sensitivity analyses regarding the missingness assumptions.

MI and Maximum Likelihood (ML)

Multiple Imputation (MI) and Maximum Likelihood (ML) methods are both powerful statistical techniques used to handle missing data, but they differ significantly in their approaches and underlying assumptions.

Maximum Likelihood Estimation (ML)

- Approach and Methodology:

- ML methods involve constructing a log-likelihood function based on the assumed probability distribution that best describes the observed data.

- By maximizing this function, ML estimates the parameters that are most likely to have generated the observed data.

- Inference, such as hypothesis testing or estimation of effects, is performed using these parameter estimates, which represent the best single set of values under the model assumptions.

- Handling of Missing Data:

- In the context of longitudinal data, such as with a Mixed Model for Repeated Measures (MMRM), ML methods use all available data, including data from subjects with missing values at some time points.

- ML does not impute missing data but instead leverages the longitudinal relationships and covariances between observed data points across time to inform the estimates.

- This allows for inferences about the overall mean effects, integrating information from incomplete cases without the need for imputation.

- Estimation Focus:

- ML produces a single set of parameter estimates and standard errors, reflecting the most probable state of the underlying population given the model and the data.

Multiple Imputation (MI)

- Approach and Methodology:

- MI explicitly handles missing data by creating multiple imputations or predicted values for missing entries.

- This is achieved by estimating an imputation model that includes the primary outcome and relevant covariates, reflecting how these variables interact in the observed data.

- Handling of Missing Data:

- MI fills in missing data points by predicting values based on the relationships identified in the imputation model.

- Unlike ML, MI does not rely on a single set of parameter estimates. Instead, it generates multiple datasets by drawing repeatedly from the posterior distribution of the imputation model’s parameters.

- Estimation Focus:

- MI acknowledges the uncertainty in the estimates of the parameters themselves by using multiple sets of imputations. Each set of imputed data is analyzed separately, and the results are then combined to provide overall estimates and standard errors.

- This process not only fills in missing data but also incorporates the variability of the parameter estimates into the final analysis, providing a more robust measure of uncertainty.

Key Differences

- Imputation vs. Direct Analysis: ML directly analyzes the available data using all observed values and the relationships among them, without imputing missing data. In contrast, MI explicitly imputes missing values using a model-based approach and then analyzes each complete dataset.

- Uncertainty and Variability: ML estimates focus on obtaining the most likely parameter values and their variability from a single model. MI, however, considers multiple potential outcomes and the inherent uncertainty in the parameters themselves, reflecting a broader spectrum of possibilities that could have led to the observed data.

- Final Inference: MI provides a way to account for the uncertainty in predicting missing values by averaging over multiple plausible data completions, which can potentially lead to more accurate and credible inference, especially when the pattern of missing data is complex.

MI Algorithms

The general three-step process for handling missing data using Multiple Imputation (MI) in statistical analyses is crucial for maintaining the integrity and robustness of study findings when complete data are not available. Each step is integral to ensuring that the imputed results are as reliable and accurate as possible. Here’s an expanded explanation of each step involved in the MI process:

Step 1: Imputation

Objective: The goal here is to replace missing data with plausible values based on the observed data. This step involves creating multiple complete datasets to reflect the uncertainty about the right value to impute.

Methods: - Continuous vs. Categorical Data: The approach to imputation may differ based on whether the missing values are for continuous or categorical variables. - Imputation Model: This model includes the variables that will help predict the missing values effectively. Choosing the right predictors and the form of the relationship (e.g., linear, logistic) is critical. - Multiple Datasets: Typically, M different complete datasets are created (where M could be 5, 10, 20, etc.), each representing a different plausible scenario of what the missing data could have been.

Step 2: Analysis

Objective: Each of the M completed datasets is analyzed independently using the statistical methods appropriate for the study design and research questions.

Process: - Independent Analyses: The same statistical method is applied separately to each imputed dataset. This could be ANCOVA, regression analysis, or any other method suitable for the complete data. - Replication of Standard Procedures: The method chosen is the one that would have been used if the dataset had no missing values, ensuring that the analysis aligns with standard scientific inquiry practices.

Step 3: Pooling

Objective: The results from the M separate analyses are combined to produce a single result that accounts for the uncertainty in the imputed values.

Methods: - Pooling Results: Techniques like Rubin’s Rules are used to combine the results. This involves calculating the overall estimates (e.g., means, regression coefficients), their variances, and confidence intervals from the separate analyses. - Final Inference: The pooled results are used to make statistical inferences. This step ensures that the variability between the multiple imputations is incorporated into the final estimates, providing a comprehensive assessment of the results.

Imputation Model vs Analysis Model

The distinction between the imputation and analysis models in the context of multiple imputation (MI) is a crucial aspect of handling missing data effectively while maintaining the integrity of statistical analyses. This differentiation allows for a more nuanced approach to modeling data, whereby different sets of variables can be utilized during the imputation and analysis phases to best reflect their relevance and relationships within the dataset.

One of the strengths of MI is that the imputation and analysis models operate independently:

- Separate Models: Variables included in the imputation model do not need to be included in the analysis model. This separation allows for the inclusion of variables in the imputation phase that are necessary for accurately predicting missing values but may dilute or confuse the analysis of treatment effects if included in the analysis model.

- Flexibility in Analysis: After the imputation phase, the analysis can proceed as if the data were originally complete, using the most appropriate statistical methods for the study objectives without the constraint of accommodating all variables used in the imputation model.

Imputation Model

The imputation model in the context of multiple imputation (MI) is a statistical framework designed to predict missing values in a dataset. This model is crucial for handling missing data effectively, ensuring that the subsequent analyses are robust and reliable. The primary goal of the imputation model is to generate plausible values for missing data points in a dataset. These values are not meant to be exact predictions but rather plausible substitutes that reflect the uncertainty inherent in predicting missing data.

The imputation model is constructed to predict missing values accurately, based on the available data and assumptions about the mechanism of missingness. Key considerations for building an effective imputation model include:

- Inclusion of Variables: Variables that explain the mechanism of missingness or are highly correlated with variables having missing values should be included. This helps in accurately estimating the missing values by leveraging all relevant information that could influence or explain the missingness.

- Complexity and Appropriateness: The imputation model can be more complex than the analysis model, incorporating additional variables that may not be directly related to the primary outcomes of interest but are instrumental in accounting for the pattern and nature of the missing data.

Components of the Imputation Model

- Predictive Variables:

- The imputation model includes variables that are known (observed) that can help predict the missing values. These variables may be directly related to the outcome or may be correlates of the missing values.

- Variables that influence the probability of missingness should also be included to adhere to the Missing At Random (MAR) assumption, where missingness can be explained by the observed data.

- Statistical Methods:

- Depending on the nature of the missing data (continuous, binary, categorical), different statistical methods are used. Common approaches include linear regression for continuous data, logistic regression for binary data, and multinomial logistic regression or other categorical data techniques for multi-category variables.

- Distribution Assumptions:

- The imputation model assumes a distribution for the missing data based on the type of data and the observed patterns. For continuous data, a normal distribution might be assumed; for binary or categorical data, a binomial or multinomial distribution might be appropriate.

Construction of the Imputation Model

- Variable Selection:

- Selecting the right variables for the imputation model is crucial. This includes both predictors of the missing values and variables that explain the mechanism of missingness.

- Ancillary variables, which might not be of interest in the analysis model but can predict the missing values effectively, are also included.

- Model Specification:

- The relationships between predictors and the missing variable are specified based on theoretical knowledge or exploratory data analysis.

- Interactions and non-linear effects can be included if they are believed to impact the missing values significantly.

- Parameter Estimation:

- Parameters of the imputation model are estimated using available (complete) data. This step often involves fitting the specified model to the observed data to understand the relationships between variables.

Analysis/Substantive Model

The analysis model in the context of multiple imputation (MI) plays a critical role after the completion of the imputation process. This model is used to analyze the datasets that have been made complete through the imputation of missing values. The primary goal of the analysis model is to conduct statistical analyses on the completed datasets provided by the imputation model. It aims to draw inferences or test hypotheses about the underlying data, focusing on the relationships and effects that are of primary interest in the research study.

The analysis model, also known as the substantive model, is used to analyze the imputed datasets. This model:

- Focuses on Main Objectives: It includes variables that are directly relevant to the primary objectives of the study. It does not necessarily include all the variables used in the imputation model, especially those included solely for the purpose of accurately imputing missing values.

- Simplicity and Relevance: While the analysis model may be simpler than the imputation model, it focuses on variables that are crucial for the analysis and interpretation of the primary outcomes of the study.

Components of the Analysis Model

- Variables Included:

- The analysis model includes the main variables of interest—those directly related to the research questions or hypotheses being tested.

- Unlike the imputation model, the analysis model does not necessarily include all the variables used in the imputation process, especially those included solely to account for the pattern of missingness.

- Statistical Techniques:

- The choice of statistical techniques depends on the research objectives, the nature of the data, and the specific hypotheses being tested. Common techniques include regression analysis (linear, logistic, Cox proportional hazards), ANOVA, ANCOVA, and more sophisticated models like structural equation modeling or mixed-effects models.

- Each technique is chosen based on its suitability to address the specific structure and needs of the data as well as the study design.

- Model Specification:

- This involves defining the functional form of the model, including the selection of interaction terms, polynomial terms, and other transformations of the variables if needed.

- The specification should align with theoretical expectations and empirical evidence about the relationships between variables.

Construction of the Analysis Model

- Variable Selection:

- Critical variables are selected based on their relevance to the study objectives. This includes outcome variables, key predictors, control variables, and potential confounders.

- The model should focus on variables hypothesized to have significant effects or relationships within the study’s framework.

- Model Fitting:

- The model is fitted to each of the imputed datasets separately. This process involves estimating the parameters of the model using standard statistical procedures that would be appropriate if the data were completely observed.

- Techniques such as maximum likelihood estimation, least squares, or others appropriate for the data type and analysis method are used.

Role in Multiple Imputation

- Independent Analyses: Each of the M imputed datasets is analyzed independently using the same analysis model. This step is crucial as it treats each imputed dataset as a valid realization of the complete data, reflecting different plausible scenarios of the missing data.

- Pooling Results: After analyzing each dataset, results (e.g., coefficients, standard errors, p-values) are pooled using techniques such as Rubin’s Rules to generate single inference statistics. This pooling accounts for both within-imputation and between-imputation variability, providing a comprehensive assessment of the uncertainty due to missing data.

Proper Imputation and Compatibility

For imputations to be considered proper, they must meet certain criteria:

- Bayesian Posterior Distribution: Imputations should ideally be drawn following the Bayesian paradigm, using a posterior distribution of the missing values that reflects an accurate and comprehensive model of the data and missingness mechanism.

- Model Compatibility: While the imputation and analysis models are independent, they must be compatible in the sense that the imputation model adequately captures the relationships necessary to make accurate imputations for variables analyzed in the substantive model.

- Unbiased Estimation: Both compatibility and congeniality are essential for ensuring that the parameter estimates and inferences from the substantive model are unbiased and valid.

- Challenges: Categorical variables, interactions, and non-linear terms can complicate the compatibility and congeniality between models. For instance, logistic regression for binary outcomes or proportional odds models for ordinal outcomes may not align perfectly with linear analysis models.

- Solutions: Predictive mean matching (PMM) is often used as a compromise technique, especially when the linear assumptions of the Bayesian model used in the imputation are not perfectly congenial with the substantive model. PMM involves using observed values as donors for imputation, which helps mitigate issues arising from non-congenial imputation models.

Details

The concepts of compatibility and congeniality introduced in this section refer to the connection between the imputation model and the analysis model (substantive model), they may be beneficial for unbiased estimation in the substantive model (White et al., 2009; Burgess et al., 2013).

The imputation model and substantive model are considered compatible, if

- there exists a joint model (e.g. multivariate normal distribution) for a set of density functions (\(f_1, f_2, ..., f_n\)), and from the joint model the imputations could be drawn,

- the imputation model and the substantive model can be expressed as conditional models of the joint model (Liu et al., 2013).

For example, if a joint bivariate normal model \(g(x,y|\theta), \theta \in \Theta\) exists, the imputation model to impute \(X\) is \(f(x|y,\omega),\omega \in \Omega\), and the substantive model is \(f(y|x,\phi),\phi \in \Phi\), with the surjective function \(f_1:\Theta \rightarrow \Omega\) and \(f_2:\Theta \rightarrow \Phi\). The imputation model is compatible with the substantive models, if the two conditional densities \(f(x|y,\omega)\) and \(f(y|x,\phi)\) use the given densities from the joint model as its conditional density, which means \(f(x|y,\omega) = g(x|y,\theta)\) and \(f(y|x,\phi) = g(y|x,\theta)\) (Morris et al., 2015).

Compatibility affects the FCS effectiveness (Fully Conditional Specification is also called MICE, detailed introduction in Section 3.5), and it may benefit unbiased parameter estimation. However, the conditional normality of dependent variables \(X\) with homoscedasticity is insufficient to justify the linear imputation model for the predictor variable \(y\), when only \(y\) has missing observations (Morris et al., 2015). The imputation model and the real substantive model may be incompatible when the linear imputation model is assumed for \(y\), as a consequence, the imputation model may be misspecified. Furthermore, the compatibility in MICE is easily broken by the categorical variables, interactions, or non-linear terms in the imputation model, which results in the implicitly joint distribution or even not exist. Although parameter estimation may be biased in the substantive model under incompatibility, incompatibility between the analysis model and the imputation model only slightly impacted the final inferences if the imputation model is well specified (Van Buuren, 2012).

In addition to compatibility, there is another important consideration “Congeniality” in multiple imputation presented by Meng (1994), which appoints the required relationship between the analysis model and the imputation model. Congeniality is, essentially, a special case of compatibility, the joint model is the Bayesian joint model \(f\). The analysis model and the imputation congenial is congenial if

- for incomplete data, mean estimates using the imputation model are asymptotically equal to the posterior predictive mean using the joint model \(f\) given missing data, and the associated variance estimates using the imputation model are asymptotically equal to the posterior predictive variance using the joint model \(f\) given missing data;

- for observed data, mean estimates using the analysis model are asymptotically equal to the posterior mean from the joint model \(f\), and the associated variance estimates using the analysis model are asymptotically equal to the posterior variance from the joint model \(f\) (Burgess et al., 2013).

If the interaction terms and non-linear relationships do not exist in the imputation model, and the variables are continuous, each univariate imputation model specified as Bayesian linear regression is congenital to the substantive model. Under these circumstances, imputations for variables with missingness are derived independently from the conditional posterior predictive distribution given other variables, and the multiple imputation variance estimates are consistent (Murray, 2018). However, when categorical variables are also included in the imputation model, the analysis model and the imputation model are not congenial, because the relationship between categorical variables and outcome given other variables cannot be linear or log-linear.

Alternatively, there are two ways to deal with categorical variables. By default, logistic regression is specified as the imputation model in \(R\) package \(mice\) for binary variables and proportional odds model for ordered categorical variables. Notwithstanding, the imputation model using the logistic regression model or proportional odds model is not congenital to the analysis models. As another option, predictive mean matching (PMM) may be an option for the imputation model. Although PMM is not congenital, the first step of PMM is based on a congenital Bayesian linear model, and the missing data are imputed using the observed donors, which also avoids meaningless imputed values. PMM is a compromise method, because the Bayesian linear regression is actually used first, and then in the next step it adjusts the imputed values from the observed values instead of directly drawing from the linear regression.

Compatibility

Compatibility refers to the relationship between the imputation model and the substantive (analysis) model, ensuring that both models can logically coexist within a single, unified statistical framework.

- Joint Model Existence:

- Compatibility requires that there exists a joint statistical model covering all variables involved in both the imputation and analysis models. This joint model should be able to generate the imputations as well as serve the analytical needs of the substantive model.

- For instance, if a joint bivariate normal model is assumed for variables \(X\) and \(Y\), then the imputation for \(X\) can be conducted using the conditional model \(f(x|y,\omega)\) and the substantive analysis of \(Y\) using \(f(y|x,\phi)\), provided these conditionals are derived from the joint distribution.

- Conditional Model Expression:

- Both the imputation and substantive models should be expressible as conditional models stemming from the joint model. This ensures that the imputations are not only appropriate for the missing data but also suitable for the analyses that follow.

Congeniality

Congeniality, a related but distinct concept, deals with the alignment between the imputation and analysis models, specifically in terms of their ability to produce consistent statistical inferences.

- Asymptotic Agreement:

- For incomplete data, the mean and variance estimates using the imputation model should asymptotically align with those from the posterior predictive mean and variance using the joint model, given the missing data.

- For observed data, the analysis model should yield mean and variance estimates that asymptotically agree with the posterior estimates derived from the joint model.

- Practical Implications:

- Congeniality ensures that the imputation method does not introduce biases that could affect the estimates and conclusions drawn from the analysis model.

- It is particularly important when dealing with complex data structures where different types of variables (continuous, categorical) and relationships (linear, non-linear) are present.

Imputation Phase

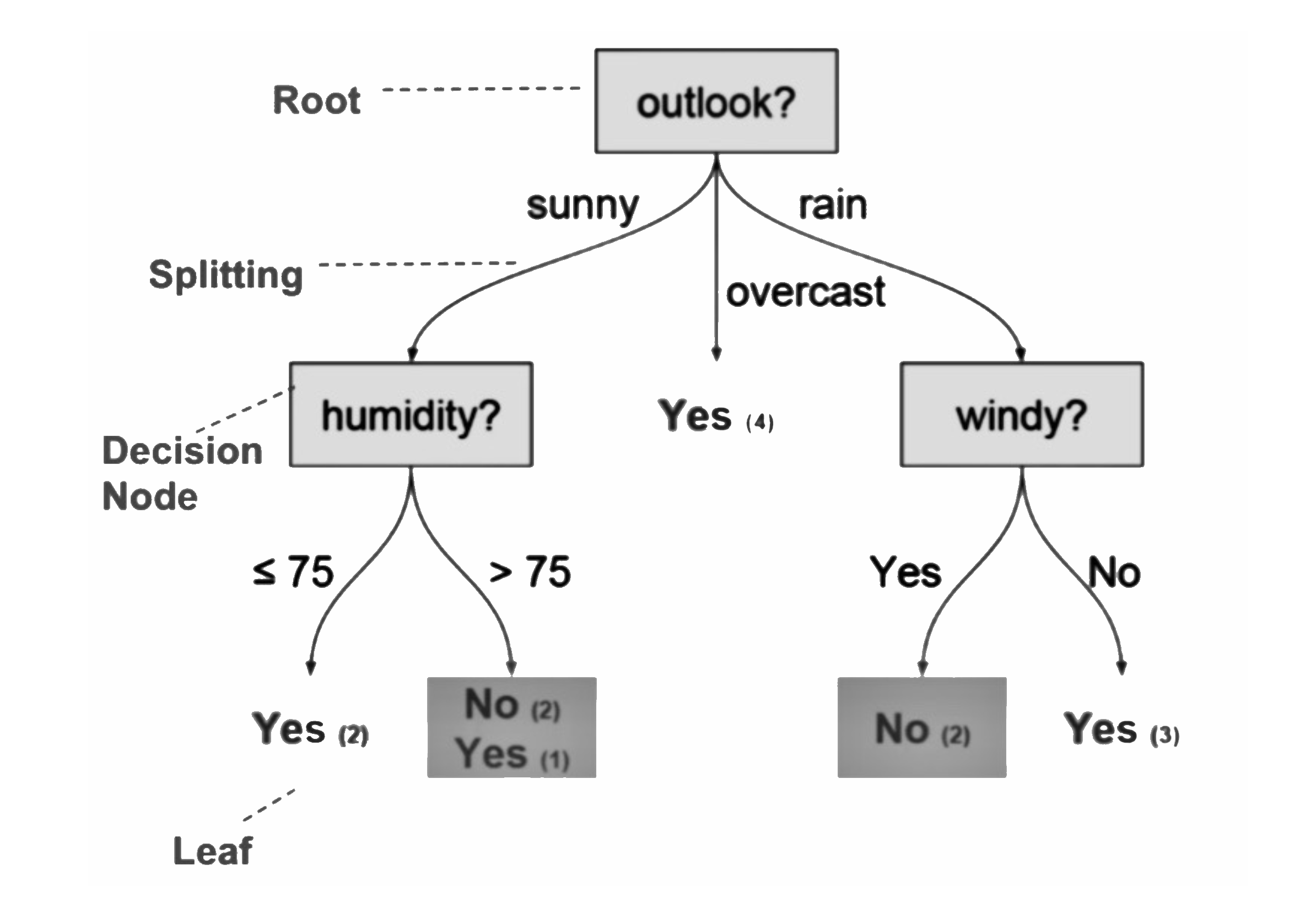

Missing patterns: Monotone and non-monotone

Understanding the patterns of missing data—specifically monotone and non-monotone missingness—is crucial for selecting the appropriate imputation methods and ensuring that the imputation process aligns with the structure of the dataset.

Figure: SAS PROC MI Imputation Methods

For Monotone Missingness

A monotone missing pattern occurs when the missing data for any subject follow a sequential order such that once data are missing, all subsequent measurements for that subject are also missing. This pattern often occurs in longitudinal studies where participants drop out and no further data are collected for them.

Characteristics:

- Sequential Dropouts: Once a participant fails to provide data at a time point, all subsequent data from that participant are missing.

- Imputation Simplicity: Monotone missingness simplifies the imputation process because the missing data can be imputed sequentially using methods that take advantage of the ordered structure.

For Non-Monotone Missingness

A non-monotone missing pattern is more complex and occurs when missing data do not follow a sequential pattern. Participants might miss certain visits but return for later assessments, leading to gaps in the data that are not necessarily followed by continuous missing data points.

Characteristics:

- Intermittent Missing Data: Participants may miss some assessments but return for later ones, creating a non-sequential pattern of missing data.

- Imputation Complexity: This pattern complicates the imputation process because the missing data cannot be handled sequentially. More complex imputation methods are needed to adequately address the random gaps.

Methods for Addressing Different Missing Patterns

- Monotone Missingness: For datasets with a monotone pattern, methods like last observation carried forward, next observation carried backward, or even simpler forms of single imputation or regression imputation can be effectively employed. These methods can sequentially address the missing data from the point of dropout to the end of the dataset.

- Non-Monotone Missingness: For non-monotone patterns, more sophisticated methods such as multiple imputation using chained equations (MICE), which can handle arbitrary patterns of missingness, are appropriate. MICE performs multiple imputations by specifying a series of regression models conditional on the rest of the variables in the dataset, thus accommodating the complexity of non-monotone missingness.

How do we get multiple imputations?

To understand how multiple imputations are generated in practice, it’s helpful to explore the Bayesian statistical framework that underpins the process. The method essentially involves two primary steps: drawing parameter samples from their posterior distributions and then using these parameters to predict missing values. Here’s a detailed step-by-step explanation of how multiple imputations are generated:

Step 1: Estimation of the Imputation Model Parameters

- Model Specification:

- First, specify an imputation model \(P_{\text{imp}}(Y | X, \theta)\), where \(Y\) represents the variable with missing data, \(X\) represents the observed covariates, and \(\theta\) represents the parameters of the model.

- Bayesian Framework:

- In a Bayesian context, you assume a prior distribution for the parameters \(\theta\). This prior could be informative (based on previous studies or expert knowledge) or non-informative (flat priors, which do not weight any outcome more than others).

- Posterior Distribution:

- Using the observed data, update the prior distribution of \(\theta\) to obtain the posterior distribution \(p(\theta | X, Y_{\text{obs}})\). This updating is done via Bayes’ Rule, which combines the likelihood of observing the data given the parameters with the prior distribution of the parameters.

Step 2: Generating Multiple Imputations

- Drawing Parameter Samples:

- From the posterior distribution \(p(\theta | X, Y_{\text{obs}})\), draw multiple sets of parameter samples. Each set of parameters, denoted \(\theta^*_m\) (where \(m = 1, \ldots, M\)), represents a possible realization of the model parameters given the data and the prior knowledge.

- Predicting Missing Values:

- For each drawn set of parameters \(\theta^*_m\), use the imputation model \(P_{\text{imp}}(Y | X, \theta^*_m)\) to predict the missing values in \(Y\). This step involves generating values of \(Y\) that are consistent with the observed data \(X\) and the drawn parameters \(\theta^*_m\).

- Creating Completed Datasets:

- Each set of predictions for \(Y\) (using a different \(\theta^*_m\)) results in a different “completed” dataset. If you draw \(M\) different samples of \(\theta\), you will end up with \(M\) imputed datasets. Each dataset represents a plausible scenario of what the complete data might look like, reflecting both the uncertainty in the parameters and the model’s predictions.

Sequential Univariate versus Joint Multivariate Imputation

The discussion of sequential univariate versus joint multivariate imputation strategies provides insight into how to handle different patterns of missing data, especially when considering the complexity of the missingness and the types of variables involved.

Sequential Univariate Imputation (Often Used in Monotone Missingness)

Overview: - Sequential univariate imputation is applied when the missingness pattern is monotone, meaning once a subject begins missing data, all subsequent data points for that subject are also missing. - This method assumes conditional independence between variables, allowing the joint distribution to be approximated through a series of univariate models.

Process:

- Model Construction: Univariate models are built one at a time. Each variable \(Y_j\) is modeled based on all previous variables in a specified order that aligns with the monotone missing pattern.

- Parameter Sampling: Parameters for each univariate model are drawn from their Bayesian posterior distributions.

- Data Imputation: Missing values for each variable \(Y_j\) are imputed sequentially, using the sampled parameters and all previously observed or imputed variables as predictors.

Detailed Process of Sequential Univariate Imputation

Ordering the Variables:

- Assume variables \(X_1, X_2, \ldots, X_S\) and \(Y_1, Y_2, \ldots, Y_J\), where \(X_i\) are fully observed covariates and \(Y_j\) are variables with missing data.

- The variables are ordered such that the earlier in the sequence a variable appears, the fewer missing values it has.

Modeling Each Variable:

- For each variable \(Y_j\) that has missing values, a univariate model is constructed using all previously ordered variables as predictors. This includes both \(X\) variables and any \(Y\) variables that have been previously imputed.

\[ \theta^{(m)}_j \sim P(\theta_j | x_1, \ldots, x_S, y_1^{\text{obs}}, \ldots, y_{j-1}^{\text{obs}}, y_j^{\text{obs}}) \]

Here, \(\theta^{(m)}_j\) represents the parameters of the imputation model for \(Y_j\) drawn from the Bayesian posterior given the observed data up to \(Y_{j-1}\).

Imputation of Missing Values:

- Once the parameters \(\theta^{(m)}_j\) are drawn, missing values of \(Y_j\) are imputed using the predictive distribution conditioned on the drawn parameters and all available predictors (both observed and previously imputed).

\[ y^{(m)}_j(\text{imp}) \sim P(Y_j | \theta^{(m)}_j, x_1, \ldots, x_S, y^{(m)}_1(\text{obs+imp}), \ldots, y^{(m)}_{j-1}(\text{obs+imp})) \]

In this formula:

- \(y_j(\text{obs})\) are the observed values of \(Y_j\),

- \(y^{(m)}_j(\text{obs+imp})\) represents the combination of observed and previously imputed values for \(Y_j\),

- \(y^{(m)}_j(\text{imp})\) are the new imputed values for \(Y_j\).

Sequential Progression:

- This process is repeated for each variable \(Y_j\) from \(j = 1\) to \(J\), where each step incorporates all previous imputations and observations. Each variable \(Y_j\) is imputed based on the assumption that all variables before it, in the sequence, either have no missing values or have been already imputed.

Advantages: - Simplicity: The method is computationally straightforward as it breaks down a potentially complex multivariate imputation into simpler, manageable univariate imputations. - Flexibility: Different types of regression models can be used depending on the nature of the variable being imputed (e.g., linear regression for continuous variables, logistic regression for binary variables).

Limitations: - Dependency on Order: The quality of imputations depends heavily on the ordering of variables, which may not always be clear or optimal. - Assumption of Conditional Independence: The method assumes that the conditional distributions adequately capture the relationships among variables, which might not hold in more complex datasets.

Joint Multivariate Imputation (Used for Non-Monotone Missingness)

Joint multivariate imputation treats the entire set of variables in a dataset as part of a single, cohesive statistical model. Unlike sequential univariate imputation, which imputes one variable at a time using only the previously imputed or observed variables as predictors, joint multivariate imputation simultaneously considers all variables to capture the complex interdependencies among them.

Overview: - Joint multivariate imputation addresses more complex non-monotone missingness patterns, where missing data can occur at any point in a subject’s record. - This approach typically utilizes a model that captures the joint distribution of all variables involved, facilitating the simultaneous imputation of all missing values.

Mathematical Formulation

Assume a dataset with variables \(X_1, X_2, \ldots, X_p\) where any of these variables can have missing entries. The goal is to estimate the joint distribution:

\[ P(X_1, X_2, \ldots, X_p | \theta) \]

where \(\theta\) represents the parameters of the joint distribution model. This model could assume a specific form, such as a multivariate normal distribution, especially when dealing with continuous variables:

\[ \mathbf{X} \sim \mathcal{N}(\boldsymbol{\mu}, \boldsymbol{\Sigma}) \]

where \(\boldsymbol{\mu}\) is the mean vector and \(\boldsymbol{\Sigma}\) is the covariance matrix of the distribution.

Process: 1. Model Specification: - Specify a multivariate model that fits the data well. Common choices include the multivariate normal model for continuous data or more complex models like multivariate mixed models that can handle a combination of continuous and categorical data.

- Parameter Estimation:

- Estimate the parameters of the joint model using available (complete) data. Techniques like Maximum Likelihood Estimation (MLE) or Bayesian estimation methods can be used. In Bayesian settings, priors are specified for \(\theta\), and the posterior distribution \(P(\theta | X_{\text{obs}})\) is computed.

- Data Imputation:

- Using techniques such as Markov Chain Monte Carlo (MCMC), sample from the joint distribution \(P(X_1, X_2, \ldots, X_p | \theta)\) to generate imputed values for the missing data points. This sampling reflects the correlations and relationships among all variables in the model.

- Each iteration of the sampling process results in a complete dataset, where missing values are filled based on the joint distribution conditioned on the observed data.

- Multiple Imputations:

- Repeat the sampling process multiple times to generate multiple imputed datasets. This reflects the uncertainty in the imputations due to the inherent randomness in the missing data and the estimation of \(\theta\).

Advantages: - Comprehensive Handling of Relationships: This method captures the complete dependency structure among all variables, which is particularly beneficial in datasets where variables are highly interrelated. - Flexibility: It can accommodate various types of data (continuous, ordinal, nominal) by choosing an appropriate joint model.

Limitations: - Computational Intensity: Estimating a joint model, especially one involving many variables or complex dependencies, can be computationally intensive and challenging. - Assumption Sensitivity: The performance of the imputation heavily depends on the correctness of the assumed joint model. A poor choice of model can lead to biased and unreliable imputations.

Methods for Monotone Missing Data Patterns

Figure: Monotone Multivariate Missing Data Pattern

In this example, the sequence of imputations in the monotone pattern therefore begins with imputation of missing values of \(Y_3\).

The P-step in the imputation of missing \(Y_3\) will utilize the relationship of the observed values of \(Y_3\) to the corresponding observed values of \(Y_1\) and \(Y_2\) to estimate the parameters of the predictive distribution, \(\mathrm{p}\left(Y_{3, \text { mis }} \mid Y_1, Y_2, \theta_3\right)\). The predictive distribution and the parameters to be estimated will depend on the variable type for \(Y_3\). PROC MI will use either linear regression or predictive mean matching (continuous), logistic regression (binary or ordinal categorical), or the discriminant function method (nominal categorical) to estimate the predictive distribution. For example, if \(Y_3\) is a continuous scale variable, the default predictive distribution is the linear regression of \(Y_{3, o b s}\) on \(Y_1, Y_2\) with parameters, \(\theta_3=\{\beta\)-the vector of linear regression coefficients, and \(\sigma_3^2\) the residual variance}. To ensure that all sources of variability are reflected in the imputation of \(Y_{3, \text { mis }}\), the values of the parameters for the predictive distribution, \(\mathrm{p}\left(Y_{3, \text { mis }} \mid Y_1, Y_2, \theta_3\right)\), are randomly drawn from their estimated posterior distribution, \(\mathrm{p}\left(\theta_3 \mid Y_1, Y_2\right)\).

Linear Regression in PROC MI

- Usage: Default imputation method for continuous variables under monotone or Fully Conditional Specification (FCS) imputation methods.

- Process: Involves regressing observed values of a continuous variable on other more fully observed variables, then using these regression estimates to define the posterior distribution for the model parameters.

- Imputation Steps: Consists of two steps, the Prediction (P) step and the Imputation (I) step, where parameters are drawn from their posterior distribution and missing values are imputed based on these parameters.\[Y_{3^*}=\beta_{0^*}+\beta_{1^*} Y_1+\beta_{2^*} Y_2+z \sigma_{* 3}\]

Predictive Mean Matching (PMM)

- For Continuous Variables: An alternative to linear regression in PROC MI for imputing continuous variables.

- Process: Utilizes the initial steps of linear regression to predict values, then defines a neighborhood of similar cases for each missing value. The missing value is imputed by randomly selecting from observed values within this neighborhood.

- Advantage: Ensures that imputed values lie within the range of actual observed values.

Logistic Regression

- For Binary or Ordinal Variables: Used to impute binary or ordinal classification variables.

- Methodology: Involves fitting a logistic regression model to the observed values, then using this model to impute missing values based on the probability distribution it defines. \[\operatorname{logit}\left(\mathrm{p}\left(\mathrm{Y}_4=1\right)\right)=\log \left(\frac{\mathrm{p}}{1-\mathrm{p}}\right)=\hat{\beta}_0+\hat{\beta}_1 \mathrm{Y}_1+\hat{\beta}_2 \mathrm{Y}_2+\hat{\beta}_3 \mathrm{Y}_3\]

Discriminant Function Method

- For Nominal Classification Variables: Used to impute missing data for variables with nominal category groups.

- Process: Relies on the assumption that covariates are multivariate normal with constant variance-covariance across groups. It employs discriminant analysis to estimate the probability of belonging to each category, which is then used to impute missing values.

Propensity Score Method

- PROC MI also offers a propensity score option (Schafer 1999) for performing imputation of missing data. This is a univariate method that was developed for use in very specialized missing data applications.

- Limitation: Does not preserve associations among variables in the imputation model, thus not recommended for applications where multivariate analysis is the ultimate goal.

Methods for Non-Monotone (Arbitrary) Missing Data Patterns

In such cases of a “messy” pattern of missing data where exact methods do not strictly apply, the authors of multiple imputation software have generally followed one of three general approaches. Each of these three approaches to an arbitrary pattern of missing data are available in PROC MI.

- The Markov chain Monte Carlo (MCMC) Method: Using an Explicit Multivariate Normal Model and Applying Bayesian Posterior Simulation Methods

- Transform the Arbitrary Missing Data Pattern to a Monotonic Missing Data Structure

- FCS, Sequential Regression, and Chained Regressions

Figure: Arbitrary Multivariate Missing Data Pattern

MCMC Method

MCMC is a statistical method used to estimate the posterior distribution of parameters in cases where the distribution cannot be derived in a closed form, especially with missing data. It is most effective when the underlying data reasonably follows a multivariate normal distribution.

Underlying Assumptions:

- Assumes a multivariate normal distribution for the variables.

- Uses a noninformative or Jeffries prior distribution for parameters \(\mu\) (mean) and \(Σ\) (covariance matrix).

Algorithm Process: Involves iterative steps, alternating between Imputation (I-step) and Prediction (P-step).

- I-Step: Draws imputations for missing data based on the current iteration’s predictive distribution.

- P-Step: Updates the parameter values for the predictive distribution based on the completed data.

- Convergence: The process aims to converge so that the imputation draws simulate the true joint posterior distribution. There is no exact test for convergence, but several graphical tools in SAS (like trace plots and autocorrelation plots) can help evaluate it. They should ideally show the posterior mean and variance stabilizing as iterations increase.

Considerations and Recommendations

- Transformations for Non-normal Data: PROC MI allows specifying transformations for continuous variables that are not normally distributed.

- Data Type Assumptions: The MCMC method assumes that the variables follow a multivariate normal distribution, which may not be suitable for highly skewed or non-normal continuous variables.

- Mixed Data Types: For mixed data types, PROC MI allows imputing and then applying post-imputation rounding, although this is not recommended for classification variables. With the availability of FCS methods, it is advised to use methods directly appropriate for the variable type.

Transforming to Monotonic Missing Data Structure

- Initial Step: Use simple imputation methods or an MCMC posterior simulation approach to fill in missing values for variables with very low rates of item missing data.

- Sequential Imputation for Remaining Missing Values: After transforming to a monotonic pattern, noniterative monotone regression or predictive mean matching imputations can be applied to sequentially fill in the remaining missing values.

For datasets where all variables are assumed to be continuous, the MCMC method with the MONOTONE option in PROC MI can be used.

Advantages of This Approach

- Simplification: By transforming the data into a monotonic missing pattern, the imputation process is simplified to a sequence of single-variable imputations.

- Efficiency: This approach is particularly effective when the generalized pattern of missing data is primarily due to missing data in one or two variables

- Flexibility: It allows for the combination of different imputation techniques, starting with MCMC and then using regression or predictive mean matching.

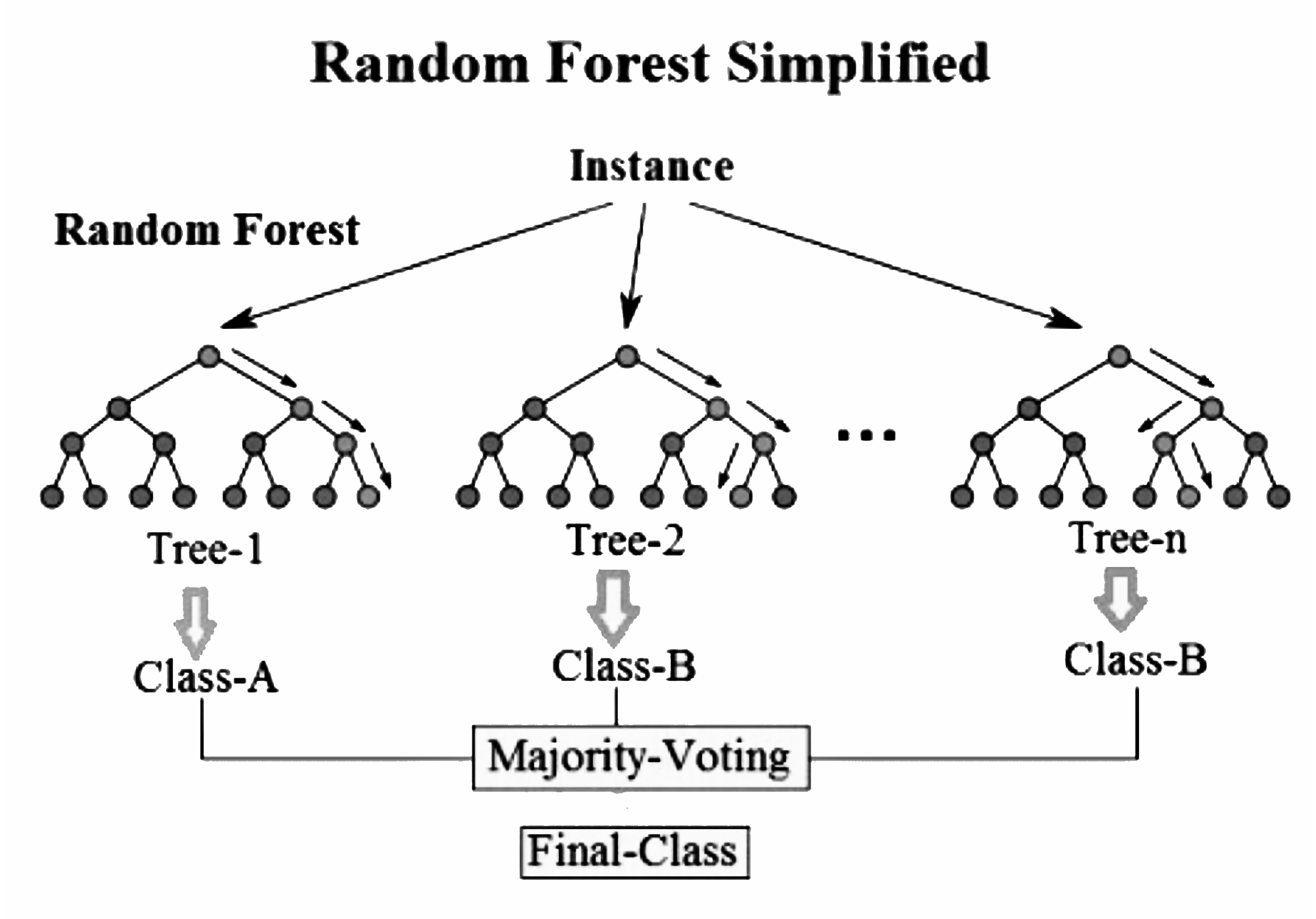

Fully Conditional Specification (MICE)

Multiple imputation by Fully Conditional Specification (FCS) is an iterative procedure, it also called Multiple Imputation by Chained Equations (MICE) (Van Buuren et al., 2006). FCS specifies an imputation model for each incomplete variable given other variables and formulates the posterior parameter distribution for the given model. Finally, imputed values for each variable are iteratively created until the imputation converges.

Algorithm Process:

- Iterative Nature: Involves multiple iterations, where each iteration goes through all variables in the imputation model sequentially.

- P-Step (Prediction): For each variable, current observed and imputed values are used to derive the predictive distribution for the missing values of the target variable.

- I-Step (Imputation): Imputations are updated by drawing from the predictive distribution defined by the updated regression model.

- Convergence: The process continues through multiple cycles until a pre-defined convergence criterion or system default (e.g., a certain number of iterations) is met. Assumes convergence to a stable joint distribution, representing draws from an unknown joint posterior distribution.

Variable-Specific Methods: FCS Uses different regression methods depending on the type of variable:

- Linear regression or predictive mean matching (PMM) for continuous variables.

- Ordinal logistic regression for binary or ordinal variables.

- Discriminant function method for nominal variables.

Algorithm 2 (Table Below) introduces the MICE process (taking imputation of the variable “age” using Bayesian linear regression as an example).

| Algorithm: MICE (FCS) | |

|---|---|

| 1. | The missing data \(Y_{\text{mis}}(\text{age})\) is filled using values randomly drawn from the observed \(Y_{\text{obs}}(\text{age})\) |

| 2. | For \(i=1,...,p\) in \(Y_{\text{mis}}(\text{isced})\), parameter \(\Theta_i^* (\beta_{0i}^*,\beta_{1i}^*,\beta_{2i}^*,\beta_{3i}^*,\beta_{4i}^*)\) is randomly drawn from the posterior distribution. |

| 3. | \(Y_i^*(\text{age})\) is imputed from the conditional imputation model given other variables \(f_i(Y_i |Y_{i^-}, \Theta_i^*)\), where \(Y_i^*(\text{age}) = \beta_{0i}^* + \beta_{1i}^*X_i(\text{isced}) + \beta_{2i}^*X_i(\text{bmi}) + \beta_{3i}^*X_i(\text{sex}) + \beta_{4i}^*X_i(\text{log.waist}) + \epsilon.\) |

| 4. | Steps 2-3 are Repeated \(m\) times to allow the Markov chain to reach convergence and finally \(m\) imputed datasets are generated. |

Advantage

- FCS directly specifies the conditional distribution for each variable and it avoids specifying a multivariate model for the entire data like Joint Model (MCMC).

- As another advantage, FCS can deal with different variable types, because each variable can be imputed using a customized imputation model (Bartlett et al., 2015). For instance, linear regression could be used for continuous variables; binary variables can be modeled by logistic regression; predictive mean matching (PMM) applies to any variable type, which was specified as the parametric MICE method in this thesis. However, it is important to correctly specify the imputation model to get the unbiased estimation (Van Buuren, 2012).

- Implicit Posterior Distribution: Unlike methods that define a specific multivariate distribution \(f(Y \mid \theta)\), FCS operates under the assumption that such a distribution exists and that the imputations reflect draws from this distribution.

- Empirical Validation: The method has been shown empirically to produce results comparable to other approaches like the EM algorithm and Bayesian posterior simulation methods.

- Utility and Effectiveness: The FCS method is particularly useful for complex data structures with mixed variable types and has been proven effective in various applications.

Overview of the imputation methods

Regression method

The regression method described is a powerful tool for imputation, particularly when dealing with datasets that have missing values either in a monotone pattern or under the Fully Conditional Specification (FCS) approach for non-monotone patterns. This approach fits into the broader framework of sequential imputation procedures, where each variable with missing data is imputed one at a time using a regression model that includes previously imputed or observed variables as predictors. Regression imputation involves using linear regression models to estimate the missing values in a dataset. The variables are imputed sequentially based on the order determined by the missing data pattern:

- Monotone Missingness: Variables are imputed in the order they appear in the dataset, using all previously available variables as predictors.

- FCS for Non-Monotone Patterns: Each variable is still imputed one at a time, but the order does not depend on a sequential missingness pattern and can be cycled through multiple times until convergence.

Imputation using linear regression is a simple imputation mothod, the regression model \(y_{\text{obs}}=\hat\beta_0+X_{\text{obs}}\hat\beta_1\) is calculated from the complete dataset (\(X_{\text{obs}}, y_{\text{obs}}\)), and the missing value \(y_{\text{mis}}\) is estimated from the regression model \(\dot y=\hat\beta_0+X_{\text{mis}}\hat\beta_1\). Where

- \(y\) is assigned as the \(n \times1\) vector of the incomplete variable y,

- \(y_{\text{obs}}\) is \(n_1 \times 1\) vector of observed data,

- \(y_{\text{mis}}\) is \(n_0 \times 1\) vector of data with missingness,

- \(X_{\text{obs}}\) is \(n_1 \times q\) matrix of predictors for rows with observed data in \(y\),

- \(X_{\text{mis}}\) is \(n_0 \times q\) matrix of predictors for rows with mising data in \(y\).

However, this imputation method can not be used in multiple imputation, beacuse each imputed dataset produces same estimated values, and the imputed value \(\dot y\) cannot reflect the uncertainty of imputation. As an improvement to achieve multiple imputation using linear regression, an appropriate random noise can be added in the regression model \(\dot y=\hat\beta_0+X_{\text{mis}}\hat\beta_1+\dot\epsilon\) (Van Buuren, 2012), where the random noise \(\dot\epsilon\) is randomly drawn from the normal distribution \(N(0,\hat\sigma^2)\), but this method is more suitable for large samples and has limitations in application.

Bayesian linear regression is more widely applicable in multiple imputation, where the statistical analysis is conducted within the Bayesian inference. We denote the existing sample (such as IDEFICS data) as D, and the real sample as X, and sample D is randomly drawn from sample X. Bayesian inference considers the entire data X distribution with a fixed but unknown probability density function \(\mathrm P(X)\) (Prior). And core problem of Bayesian inference is to estimate the probability distribution of D based on prior information X, denoted as \(\mathrm P(D\mid X)\) (Posterior). Bayesian inference is a big topic beyond the scope of this thesis and will not be further expanded here, for more information see “Bayesian Methods for Data Analysis” (Carlin et al., 2008).

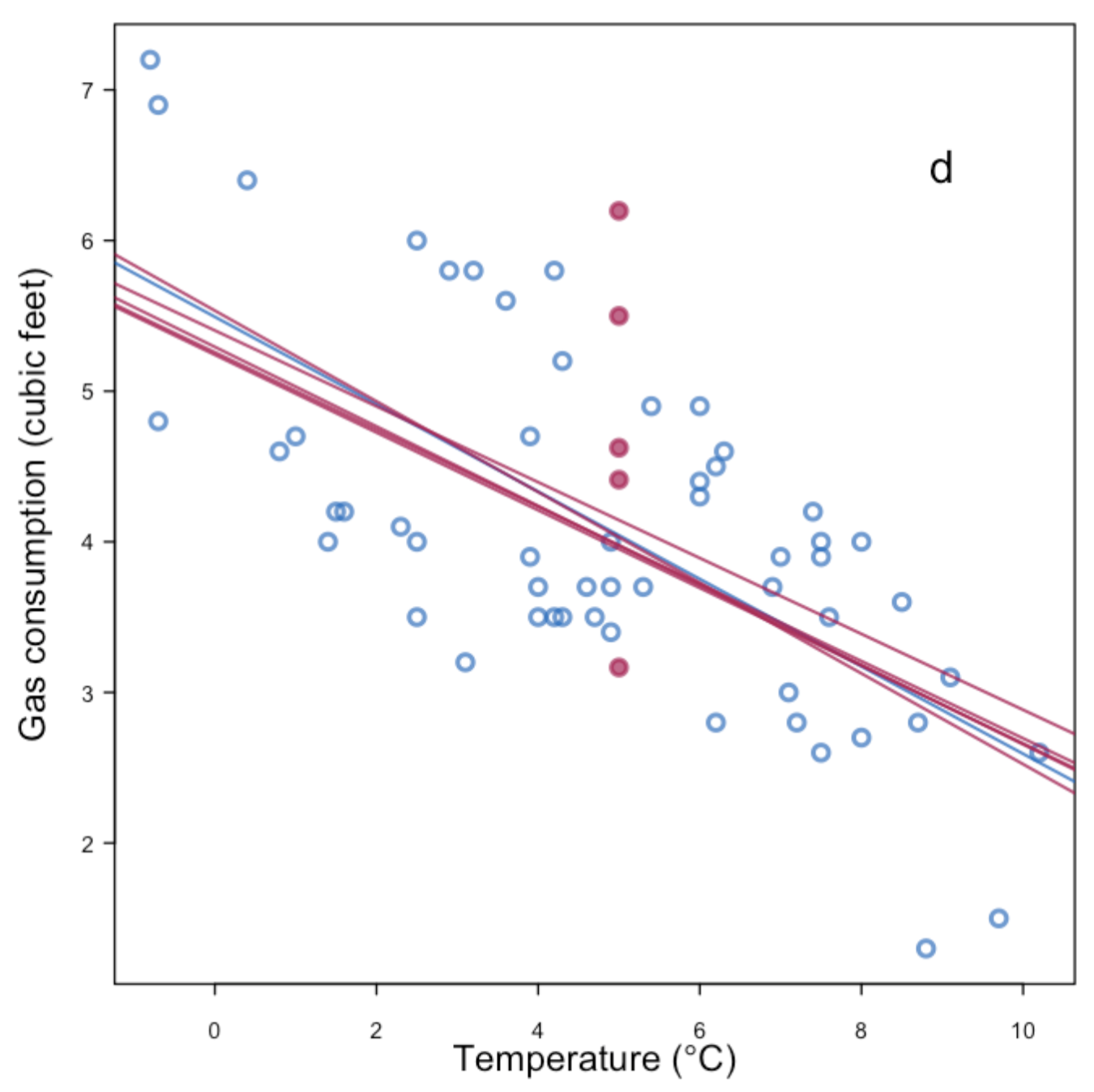

Figure: Bayesian multiple imputation

Compared with the general linear regression, which calculates the parameter estimates of existing data set D (e.g., \(\hat\beta_0,\hat\beta_1,\hat\sigma\)), Bayesian linear regression supplements additional information on the basis of standard linear regression \({\displaystyle Y=\mathbf {X}^{\rm {T}}{\boldsymbol {\beta}}+\varepsilon}\) (e.g., \(\boldsymbol {\beta}=(\hat\beta_0,\hat\beta_1)\)), it assumes that the data has a specific prior distribution (\({\displaystyle \mathrm P ({\boldsymbol {\beta }},\sigma ^{2})}\)). The posterior probability distribution of parameters \({\boldsymbol {\beta }}\) and \(\sigma\) (\(\mathrm P({\boldsymbol {\beta }},\sigma ^{2}\mid \mathbf {y} ,\mathbf {X} )\)) is obtained by combining prior beliefs about parameters with the likelihood function of the data (\(\mathrm P (\mathbf {y} \mid \mathbf {X} ,{\boldsymbol {\beta}},\sigma ^{2})\)) according Bayesian inference, it can be parametrized as \[\mathrm P({\boldsymbol {\beta }},\sigma ^{2}\mid \mathbf {y} ,\mathbf {X} ) \propto \mathrm P (\mathbf {y} \mid \mathbf {X} ,{\boldsymbol {\beta }},\sigma ^{2}) {\displaystyle \mathrm P ({\boldsymbol {\beta }},\sigma ^{2})}.\] Bayesian linear regression can incorporate parameter uncertainties, for predictive models \(\dot y =\dot\beta_0 + X_{\text{mis}}\dot\beta_1+\dot\epsilon\) (\(\dot\epsilon \sim N(0,\dot\sigma^2)\)) given the data D, parameters \(\dot\beta_0,\dot\beta_1,\dot\sigma\) are randomly obtained from their posterior distribution e.g. \(N(\beta_0,\sigma_{\beta_0}^2)\) (Van Buuren, 2012).

Mathematical Model for Regression Imputation

Consider a variable \(Y_j\) to be imputed and a set of predictors \(W_1, W_2, \ldots, W_{K_j}\) derived from the variables \(X_1, \ldots, X_S, Y_1, \ldots, Y_{j-1}\). The linear regression model used for imputation is given by:

\[ Y_j = \beta_0 + \beta_1 W_1 + \beta_2 W_2 + \cdots + \beta_{K_j} W_{K_j} + \epsilon \]

Where: - \(\beta_0, \beta_1, \ldots, \beta_{K_j}\) are the regression coefficients. - \(\epsilon\) is the error term, typically assumed to be normally distributed.

- Model Estimation:

- Fit the regression model using observed data to estimate the coefficients \(\hat{\beta} = (\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_{K_j})\).

- Estimate the covariance matrix of the regression coefficients, which is essential for the next step of drawing samples from the Bayesian posterior distribution.

- Parameter Sampling:

- Draw new parameter values \(\beta^{(m)}\) from the Bayesian posterior distribution of the coefficients. This often involves adding variability to the estimated coefficients to reflect uncertainty: \[ \beta^{(m)} = \hat{\beta} + z \cdot SE(\hat{\beta}) \] where \(z\) is a draw from a standard normal distribution and \(SE(\hat{\beta})\) is the standard error of the estimated coefficients.

- Imputation of Missing Values:

- Use the newly sampled parameters \(\beta^{(m)}\) to impute missing values for \(Y_j\): \[ Y_j^{(m)} = \beta_0^{(m)} + \beta_1^{(m)} W_1 + \ldots + \beta_{K_j}^{(m)} W_{K_j} + \epsilon^{(m)} \]

- Here, \(\epsilon^{(m)}\) is a random draw from the distribution of the residuals to add randomness to the imputations.

Advantages: - Allows for the inclusion of interactions and non-linear terms. - Can handle different types of variables (continuous and categorical) by selecting appropriate regression models (linear, logistic).

Limitations: - The quality of imputation depends heavily on the model’s accuracy. - Sequential regression may introduce biases if the order of variables or the model specification is not optimal.

Logistic regression for categorical variables

Logistic regression is a robust statistical method used extensively for imputing missing values in binary and ordinal categorical variables. It models the log odds of the probability of an outcome based on predictor variables.

Model Overview: For a binary variable \(Y_j\), the logistic regression model used for imputation can be expressed as:

\[ \text{logit}(p_j) = \log\left(\frac{p_j}{1 - p_j}\right) = \beta_0 + \beta_1 W_1 + \beta_2 W_2 + \ldots + \beta_{K_j} W_{K_j} \]

where: - \(p_j = \Pr(Y_j = 1 | W_1, \ldots, W_{K_j})\) is the probability of the event \(Y_j = 1\) given the predictors \(W_1, \ldots, W_{K_j}\). - \(W_k\) are the predictor variables, which can include both continuous and other categorical variables, as well as potential interactions and transformations.

- Parameter Estimation:

- Fit the logistic regression model using available (non-missing) data to estimate the coefficients \(\hat{\beta} = (\hat{\beta}_0, \hat{\beta}_1, \ldots, \hat{\beta}_{K_j})\).

- Estimate the variance-covariance matrix of the estimated coefficients, important for the next step involving Bayesian updating.

- Bayesian Parameter Sampling:

- Parameters for the logistic regression model are drawn from their Bayesian posterior distribution. This involves sampling new parameter sets \(\beta^{(m)}\) based on the posterior: \[ \beta^{(m)} = \hat{\beta} + z \cdot \text{SE}(\hat{\beta}) \] where \(z\) is a vector of random draws from a standard normal distribution, reflecting the uncertainty in parameter estimates.

- Imputation of Missing Values:

- For each draw of \(\beta^{(m)}\), compute the logit and subsequently the probability \(p_j^{(m)}\) for the missing values: \[ p_j^{(m)} = \frac{\exp(\beta_0^{(m)} + \beta_1^{(m)} W_1 + \ldots + \beta_{K_j}^{(m)} W_{K_j})}{1 + \exp(\beta_0^{(m)} + \beta_1^{(m)} W_1 + \ldots + \beta_{K_j}^{(m)} W_{K_j})} \]

- Generate imputed values for \(Y_j\) by sampling from the Bernoulli distribution with probability \(p_j^{(m)}\).

Considerations

- Model Specification: Ensure that the logistic model is well-specified with appropriate predictors and interactions as needed.

- Diagnostics: After imputation, it’s crucial to assess the quality of imputations, examining aspects like convergence and the distribution of imputed values.

Markov chain Monte Carlo

The Markov Chain Monte Carlo (MCMC) method is a powerful statistical technique used extensively in situations where direct sampling from complex, high-dimensional distributions is not feasible. Its application in handling missing data, particularly under Bayesian frameworks, is both efficient and effective, enabling the estimation of posterior distributions that are otherwise difficult to compute analytically.

Markov Chain Basics: - A Markov chain is a sequence of random variables where the distribution of each variable depends only on the state of the previous variable, making this dependency a “memoryless” property. - Stationary Distribution: After many iterations, the Markov chain reaches equilibrium, where the distribution of the chain’s states no longer changes with further steps. This equilibrium is known as the stationary distribution, denoted as \(\pi(E)\).

MCMC Process: 1. Initialization: Start with arbitrary initial estimates of the parameters \(\theta^{(0)}\), such as mean vector and covariance matrix for a multivariate normal distribution. 2. Iteration: - I-step (Imputation Step): Impute missing data \(y_{\text{mis}}\) using the current parameter estimates \(\theta^{(\eta)}\). This involves sampling from the conditional distribution \(P(y_{\text{mis}} | x, y_{\text{obs}}, \theta^{(\eta)})\). - P-step (Posterior Step): Update the parameter estimates \(\theta\) based on the now complete data matrix (including the newly imputed values). This step involves sampling \(\theta^{(\eta+1)}\) from the posterior distribution \(P(\theta | x, y_{\text{obs}}, y_{\text{mis}}^{(\eta)})\).

- Convergence: Repeat the I-step and P-step until the

Markov chain converges to its stationary distribution, at which point

the samples of \(y_{\text{mis}}\) and

\(\theta\) can be considered draws from

the joint posterior distribution \(P(y_{\text{mis}}, \theta | x,

y_{\text{obs}})\).

- Monitor the convergence of the Markov chain to ensure that it reaches a stationary distribution.

- This may involve checking the stability of parameter estimates across successive iterations.

- Thinning and Independence:

- To ensure independence of the imputed datasets used in the final analysis, it is common to select samples at intervals (thinning) from the Markov chain.

- This is done by choosing iterations that are sufficiently spaced apart to minimize the correlation between successive samples.

Considerations and Challenges

- Missing Data Imputation: MCMC is particularly useful in scenarios where the pattern of missing data is complex and the underlying data distribution involves numerous parameters (such as multivariate normal with unknown mean and covariance).

- Parameter Estimation: In Bayesian statistics, MCMC methods are used to estimate parameters of complex models where traditional methods fail due to the intractability of the likelihood function.

- Convergence: Ensuring that the MCMC chain has converged to the stationary distribution is crucial. Diagnostics like trace plots and convergence diagnostics (e.g., Gelman-Rubin statistic) are commonly used.

- Burn-in: Early samples in the MCMC sequence may not represent the target distribution well, so they are often discarded in a phase known as “burn-in”.

- Autocorrelation: MCMC samples are not independent. High autocorrelation can lead to inefficient sampling and increased variance in estimates. Techniques such as thinning (selecting every nth sample) are used to reduce this issue.

Predictive mean matching method

The predictive mean matching (PMM), proposed by Rubin (1986) and Little (1988), is a hot deck imputation method, where the missing value is imputed with a similarly observed observation. Compared with the standard imputation method linear regression, the imputed values produced by PMM is more real. PMM can avoid strong parametric assumptions and can be easily applied to various variable types. If the variable is categorical, the imputed values are also categorical. If the variable is continuous, the imputed values are also continuous. They do not exceed the boundary of the original variable, and the distribution of imputed values is consistent with the original variable. Table 6 details the PMM MICE algorithm in this thesis (take the example of imputing variable “ISCED”):

| Algorithm: MICE PMM | |

|---|---|

| 1. | Coefficient \(\mathbf{\hat\beta}\) is estimated using Bayesian linear regression given other variables \({\displaystyle Y_{\text{isced}}=\beta_0 + {\beta_{\text{1}}}\mathbf{x_{\text{age}}}+ {\beta_{\text{2}}} \mathbf{x_{\text{sex}}}+ {\beta_{\text{3}}}\mathbf{x_{\text{bmi}}}+ {\beta_{\text{4}}}\mathbf{x_{\text{log.waist}}}+ \varepsilon}\), expressed as \({\displaystyle Y_{\text{isced}}=\mathbf{x}{\mathbf{\beta}}+\varepsilon}\). |

| 2. | Parameter \(\mathbf{\dot\beta}\) is randomly drawn from its posterior multivariate normal distribution \(N(\hat\beta,\text{Var}(\hat\beta))\). |

| 3. | For each missing data of variable, calculate the distance \(\dot d(i,j)=|X_i^\mathrm{obs}\hat\beta-X_j^\mathrm{mis}\dot\beta|\), where \(i=1,\dots,n_1\) and \(j=1,\dots,n_0\) |

| 4. | For each missing data \(Y_j^\mathrm{mis}\), from \(\dot d(i,j)\) create a set of \(d\) donors, from \(Y_\mathrm{obs}\) such that \(\sum_d\dot\eta(i,j)\) is minimum. |

| 5. | Sort \(d_{\text{PMM}}\) and create a set of \(k\) donors with smallest distances from the observed data for each missing case, which means the predicted values with observed data are close to predicted value with missing data. |

| 6. | From those \(k\) donors, randomly select one and assign its observation value \(\displaystyle \dot y_{i'}\) to impute the missing value, where \(\displaystyle \dot y_{i}=\displaystyle \dot y_{i'}\). |

| 7. | Repeat steps 2 to 6 \(m\) times for multiple imputations to generate \(m\) imputed datasets. |

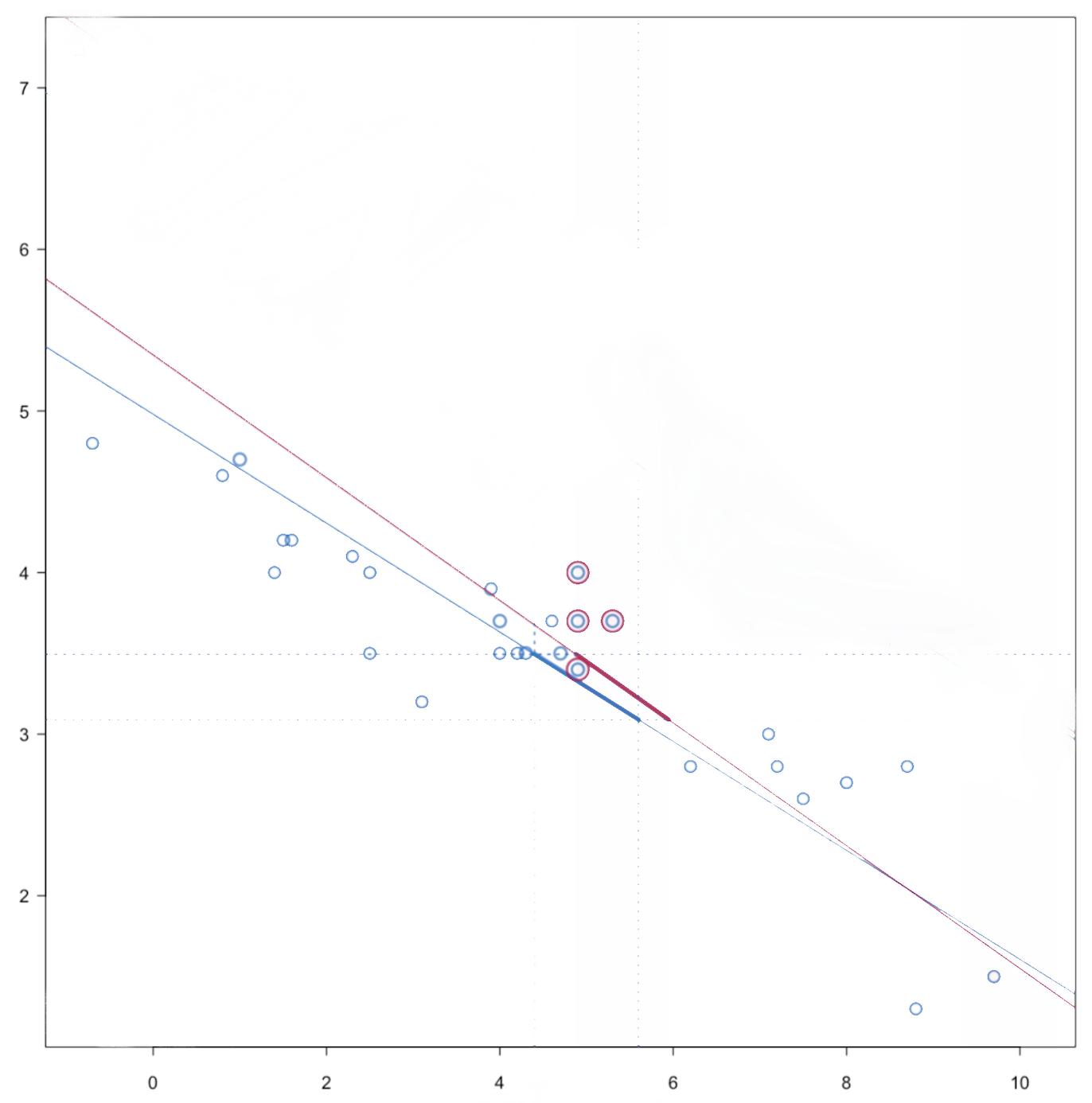

Figure: MICE PMM

PMM is built on a two-step process, where the first step is common to standard regression imputation:

- Parameter Estimation:

- For each complete data set, the parameters (coefficients) of a regression model are estimated. This is often done by drawing samples from the Bayesian posterior distribution of the parameters, which accounts for the uncertainty in parameter estimation.

- Prediction and Imputation:

- Predictions are made for each subject using their available data. However, instead of using these predictions directly to impute missing values (as done in regression imputation), PMM uses these predictions to find donor matches from among those with observed data.

PMM utilizes the same regression model to estimate parameters, but the imputation process differs significantly in the final step:

Step a: Similar to standard regression imputation, PMM begins by drawing a sample of the parameters from the posterior distribution of the regression model.

Step b.1: For each individual with observed data, the model predicts a value based on available predictors.

- For each individual \(i\) with a completely observed set of data on the outcome \(Y_j\), calculate a predicted value using the regression model: \[ y^{(m)}_{j,i} = \beta^{(m)}_0 + \beta^{(m)}_1 w_{1,i} + \ldots + \beta^{(m)}_{Kj} w_{Kj,i} \] This equation uses the regression coefficients drawn from the Bayesian posterior and the predictor values for individual \(i\).

Step b.2: For individuals with missing data, the model also predicts values. Instead of using these predicted values directly, PMM identifies a set of donors—individuals whose predicted values are closest to the predicted value for the missing case.

- For each individual \(q\) missing the outcome \(Y_j\), compute a predicted value in a similar manner: \[ y^{(m)}_{j,q} = \beta^{(m)}_0 + \beta^{(m)}_1 w_{1,q} + \ldots + \beta^{(m)}_{Kj} w_{Kj,q} \] This prediction uses the available predictors for the individual with missing data.

Donor Selection and Imputation

- Identify a subset of \(N_j\) individuals from the complete cases whose predicted outcomes \(y^{(m)}_{j,i}\) are closest to the predicted missing value \(y^{(m)}_{j,q}\).

- Randomly select one individual from this subset and use their actual observed outcome \(y_{j,i}\) to impute the missing value for individual \(q\). This step ensures that the imputed values are actual observed values from the dataset, maintaining data integrity and preventing out-of-range imputation.

Benefits of PMM: - Plausibility and Range Consistency: Since PMM uses actual observed values for imputation, it naturally respects the empirical distribution of the data. This method avoids unrealistic imputation results that might occur with pure prediction strategies, especially in cases with bounded or restricted data ranges (like scores or scales). - Robustness to Model Misspecification: PMM does not rely as heavily on the assumption of the correct specification of the parametric form of the distribution of the data. By using observed values, it sidesteps potential biases that can occur if the model assumptions are incorrect. - Choosing \(N_j\): The size of \(N_j\) (the number of close matches considered for donor selection) can affect the variability and bias of the imputed values. A smaller \(N_j\) ensures closer matches but might increase variance among the imputed datasets, while a larger \(N_j\) makes the method more robust to model mis-specifications but could dilute the predictive power of the model.

Propensity score method

The propensity score method for handling missing data utilizes the concept of estimating the probability of missingness based on observed covariates, then grouping subjects by similar probabilities, and finally performing imputation within these groups.

- Estimation of Propensity Scores

- Model Construction: A logistic regression model is built where the dependent variable is an indicator \(A_j\) representing whether \(Y_j\) is observed (1) or missing (0). The logistic model predicts the probability of missingness based on a set of covariates \(W_1, W_2, ..., WK_j\) which can include prior outcomes \(Y_1, ..., Y_{j-1}\) and other explanatory variables \(X_1, ..., X_S\).

- Equation: \[ \text{logit}(p_j) = \beta_0 + \beta_1W_1 + \beta_2W_2 + \cdots + \beta_{Kj}WKj \]

- Outcome: This results in a propensity score for each subject \(i\), denoted as \(p_{j,i}\), representing the probability that \(Y_j\) is missing given the covariates.

- Group Formation

- Subjects are grouped based on the similarity of their calculated propensity scores. This stratification aims to ensure that within each group, subjects have a similar likelihood of having missing data.

- Approximate Bayesian Bootstrap Imputation

- Within each group, the following steps are performed:

- (a) Bootstrap Sampling: Draw \(n_1\) values randomly with replacement from the observed values \(yk(obs)\) within the group to create a new sample set \(y^{(m)}k(obs)\).

- (b) Imputation: Draw \(n_0\) values randomly with replacement from \(y^{(m)}k(obs)\) to impute the missing values \(yk(mis)\).

- These steps are designed to simulate drawing from the posterior distribution of the imputation model, providing a non-parametric approach to imputation within each propensity-score group.

- Within each group, the following steps are performed:

- Repetition and Finalization

- The steps are repeated for each variable with missing values and are carried out \(M\) times to generate \(M\) complete datasets. This repetition helps in capturing the uncertainty about the imputed values.

Considerations and Limitations

- User-Defined Parameters: The number of groups to form based on propensity scores can significantly affect the imputation results. The default in many software implementations like SAS PROC MI is typically 5 groups.

- Focus on Missingness, Not Relationships: Since the propensity score focuses on the likelihood of missingness rather than the relationships between variables, this method might not be suitable for analyses that require understanding inter-variable relationships, such as multivariate or repeated measures analyses.

- Risk of Bias: If the missingness is not correctly modeled or if it is completely random (MCAR), using propensity scores might not offer any benefit over simple random imputation and might even introduce bias due to inappropriate grouping.

Discriminant function method

The discriminant function method is a statistical technique used to classify subjects into groups (categories) based on their characteristics, which can also be adapted for imputation purposes.

- Assumptions:

- Predictor Variables: This method requires that all predictor variables are continuous.

- Distribution: These predictors should ideally follow a multivariate normal distribution.

- Covariance: The within-group covariance matrices should be approximately equal across the groups.

- Process:

- Classification Basis: It utilizes linear combinations of the continuous predictors to create a discriminant function that maximizes the separation between predefined groups.

- Discriminant Score: Each subject gets a score based on this function that predicts their group membership.

- Group Assignment: Based on the discriminant score, subjects can be classified into the most likely category.

Application to Imputation

- Imputing Missing Data: In the context of imputation, the discriminant function can estimate the most likely category (group) for a missing categorical variable based on other available continuous variables.

- Monotone Missingness: It is particularly suited for scenarios with monotone missing patterns, where the presence of missing values can be systematically ordered across variables.

Limitations in Clinical Trials

- Binary or Categorical Predictors:

- Clinical trials often involve treatment arms and other variables that are inherently categorical (e.g., gender, treatment received).

- The discriminant function method is not well-suited for categorical predictors unless they are converted to continuous scores, which might not always be appropriate or feasible.

- Categorical Outcomes:

- If the outcome or endpoint itself is categorical and has missing values, using this method becomes problematic as previous values of these endpoints (also categorical) cannot serve as predictors under the discriminant function method’s assumptions.

Fully conditional specifications

The Fully Conditional Specifications (FCS) method, also known as “chained equations” or “chained regression,” is a flexible technique for handling missing data in both monotone and non-monotone patterns.

Core Principles of FCS

- Univariate Models:

- Unlike methods that rely on a joint multivariate distribution (like MCMC), FCS uses a series of univariate models.

- Each variable with missing data is imputed separately using its own model.

- Sequential Imputation:

- The process involves cycling through each variable sequentially and imputing missing values using the rest of the variables as predictors.

- This cycling is repeated across multiple iterations to ensure that the imputations for all variables are mutually consistent and stable.

- Iterative Process:

- Initially, all missing values are filled in (fill-in step) using a temporary model to start the iterative process.

- During the imputation step, the values are updated iteratively, where each variable can use all other variables as predictors, regardless of their order.

- This is akin to a Gibbs sampling approach, where each cycle through the variables aims to draw closer to a stable joint distribution of imputed values.

Implementation Steps

- Fill-In Step:

- Start by filling in missing values for each variable based on a univariate model that only uses previously listed variables as predictors.

- These initial values are temporary and serve only to kick-start the iterative process.

- Imputation Step:

- Update each missing value by fitting a univariate model that can include any of the other variables in the dataset as predictors.

- This step is repeated for several iterations (similar to burn-in in MCMC), allowing the imputed values to stabilize.

- Convergence: