Safety Signal Detection and Evaluation

1 Safety Signal Detection

1.1 Introduction

Safety Signal Detection (SSD) is a critical component of pharmacovigilance and drug safety monitoring. Its primary aim is to detect, assess, and manage potential safety risks associated with pharmaceutical products, ensuring patient safety and supporting public health.

What is Safety Signal Detection?

SSD involves the routine evaluation of safety signals through periodic reviews of aggregated data from various sources, including clinical trials, post-marketing surveillance, and real-world data. A safety signal refers to evidence of a potentially new adverse event, or a new aspect of a known adverse event, that may be caused by a medicinal product and that warrants further investigation.

SSD Process

- Strategy and Scope Setting:

- In the early phases of drug development, the clinical team, along with the benefit-risk lead (subject to confirmation), discusses and determines the SSD strategy. This includes deciding the frequency of reviews and the scope of included studies.

- The strategy is tailored to ensure that all potential safety issues are promptly identified and addressed. This involves periodic assessments that could be aligned with other regulatory requirements like Periodic Safety Update Reports (PSURs) or Development Safety Update Reports (DSURs).

- Data Collection and Aggregation:

- Relevant data from clinical trials and other sources are collected and aggregated.

- Analysis and Review:

- The data undergoes statistical analysis to identify trends or patterns that could indicate potential safety issues.

- Signal Evaluation:

- Identified signals are then evaluated to confirm their validity and potential impact on patient safety. This evaluation includes a detailed investigation into whether the signal represents a true risk or is due to other factors like underlying diseases or concurrent medications.

- Risk Management and Mitigation:

- If a safety signal is confirmed, risk management strategies are developed and implemented. These may include changes to the product labeling, restrictions on use, or in some cases, drug withdrawal.

- Documentation and Reporting:

- All findings and actions are thoroughly documented and reported to regulatory authorities as required.

1.2 Biostatistics and Data Science (BDS) Role

- BDS is primarily responsible for authoring the Program Safety Analysis Plan (PSAP).

- BDS provides clinical study data summaries, typically in the form of Tables, Figures, and Listings (TFLs), which are essential for supporting the detection of safety signals.

- Additionally, BDS ensures that the clinical teams have access to advanced visualization tools for data exploration. These tools help in the intuitive understanding of complex datasets and trends, facilitating a more robust safety signal detection process.

2 Planning for IND Safety Reporting

2.1 Safety Reports (SER & SSAR)

Safety Evaluation Report (SER): A flexible approach for reviewing safety topics that are not triggered by the signal detection process. An SER can be upgraded to an SSAR if the safety topic becomes a valid signal during the process. This report is led by the Safety Writer.

Safety Signal Assessment Report (SSAR): Documents the further evaluation of a signal, considering all available evidence, to determine whether there are new risks causally associated with the active substance or medicinal product, or whether known risks have changed. This report is led by the Safety Writer.

2.2 Expected and Anticipated Serious Adverse Events (SAEs)

Expected SAEs refer to serious adverse events that can reasonably be predicted based on the known pharmacological properties, previous clinical trial outcomes, or typical characteristics of the drug class. These events are usually documented in the drug’s label or other professional literature. Therefore, when these events occur in new clinical trials, they are considered “expected” because their potential has already been identified and acknowledged based on existing data. Expected SAEs are important for risk management and informed consent processes, as they help set realistic expectations for both clinicians and participants regarding the known risks associated with a drug.

Anticipated SAEs, while similar to expected SAEs, generally refer to events whose occurrence is foreseen based on less definitive evidence than that for expected SAEs. These could be based on preliminary data, such as early clinical trials, animal studies, or even theoretical considerations linked to the drug’s mechanism of action. Anticipated SAEs are not as firmly established as expected SAEs but are considered likely enough that they should be monitored for in the context of ongoing clinical research. They may or may not be included in the product label but are anticipated from a safety surveillance perspective.

Key Differences

- Basis of Prediction: Expected SAEs are based on more solid, often clinically verified evidence, while anticipated SAEs may rely on preliminary or less conclusive evidence.

- Documentation: Expected SAEs are typically documented in official product materials like labeling, whereas anticipated SAEs might not be, depending on their level of evidence and regulatory requirements.

- Regulatory Impact: Expected SAEs have a direct impact on the drug’s labeling and are crucial for regulatory compliance and patient safety communications. Anticipated SAEs, while also important, might influence ongoing monitoring strategies and potential label updates as more data become available.

2.3 Aggregate Analysis Planning

Planning for FDA IND (Investigational New Drug) safety reporting is a critical component of clinical trial management, ensuring that serious adverse events (SAEs) are properly identified, analyzed, and reported. This process is especially vital during the transition from Phase 1 to Phase 2 of clinical trials, where a clear understanding of the safety profile of the investigational medicinal product (IMP) is essential for further development.

Aggregate Analysis Planning: Planning for the aggregate analysis of anticipated serious adverse events (aSAEs) and expected serious adverse reactions (SARs) should begin early in the product development lifecycle. This sets the foundation for ongoing safety monitoring and regulatory compliance. Planning should start as studies transition from Phase 1 to Phase 2, which is typically when the target population has been clearly identified and safety data from initial human exposure are available.

Possible Approaches for Aggregate Analysis:

- Analysis of All Serious Adverse Events (SAEs) by Treatment Group:

- This approach involves periodic reviews of all SAEs sorted by treatment groups within the clinical trial. The primary focus is to identify whether aSAEs or expected SARs are occurring at a significantly higher incidence in the group receiving the IMP compared to a concurrent control (either placebo or an active comparator) or a historical control group. This method helps in understanding the direct impact of the drug under study relative to other treatments or known data.

- Unblinding Trigger Approach:

- In this method, a blinded quantitative analysis is conducted. The Unblinding Trigger Approach focuses on the analysis of anticipated serious adverse events (aSAEs) and expected serious adverse reactions (SARs). This approach uses a pre-specified threshold to determine whether the overall blinded incidence rate of these events is higher than either the estimated background incidence rate in the target population or the incidence of expected SARs as listed in the Reference Safety Information (RSI).

- If these thresholds are exceeded, the Safety Surveillance and Data Team (SSDT) may conduct a further blinded review and, if necessary, escalate the safety issue to the Benefit Risk Team (BRT) for consideration. If the BRT deems it necessary, an unblinded review may be initiated.

Selection and Initiation of Aggregate Analysis:

The Safety Surveillance and Data Team (SSDT) is responsible for selecting the appropriate methodology for aggregate analysis. The choice between analyzing all events by treatment group or applying the unblinding trigger approach depends on several factors including the study population, the characteristics of the product, and the size and duration of the clinical studies involved. Aggregate analysis is typically initiated during Phase 2 of clinical studies, assuming there are enough participants and observed SAEs to conduct a meaningful analysis.

Documentation in Safety Surveillance Plan (SSP): The specific methodologies chosen for the aggregate analysis are detailed in the product-specific FDA IND Safety Surveillance Plan (SSP), which is a dedicated section of the Safety Signal Detection Strategy. The SSP is crafted and reviewed by the SSDT and should include:

- The chosen methodology (Analysis of All Events by Treatment Group and/or Unblinding Trigger Approach).

- Criteria for further assessment if using the Unblinding Trigger Approach, including thresholds that might trigger an IND safety report.

- A list of aSAEs with MedDRA search criteria used for identifying these events in the clinical database.

- Estimations of background incidence rates for aSAEs, if possible.

- Protocols for when unblinding is necessary to evaluate potential causal relationships with the IMP.

Defining anticipated serious adverse events (aSAEs)

- The process begins when the Development Physician initiates the definition of aSAEs as soon as the target patient population(s) have been identified and the first study concepts in these populations have been approved. This early initiation ensures that the safety monitoring is tailored to the specific needs of the population from the outset.

- The Development Physician is responsible for defining the characteristics of the target population and checking for any existing lists of aSAEs relevant to this group.

- They lead efforts to update or create new lists of aSAEs, involving contributions from other functions such as Medical Affairs to identify relevant clinical studies for evaluating aSAEs and background incidence rates.

- The RWE Representative uses Real World Data, starting with a focused literature review, and if necessary, conducting studies using fit-for-use Real World Databases to identify potential aSAEs.

- If the initial literature review does not yield sufficient data, further studies are conducted to estimate background incidence rates for each aSAE. This step is critical for understanding the typical occurrence rates of these events outside of clinical trials.

- Since Real World Evidence might use non-MedDRA terms, the list of aSAEs identified needs to be reviewed by the Medical Coding Oversight Lead to define these events in standardized MedDRA terms.

- The Development Physician consolidates a preliminary list of aSAEs using MedDRA search terms based on the RWE and statistical analyses.

- The Statistical Representative then estimates the background incidence rates for each aSAE using historical clinical data.

2.4 XSUR - Standard Required Safety Reporting Documents

DSUR/PSUR/IB/J-NUPR/RMP

These documents are regulatory safety reporting requirements that help ensure the ongoing evaluation of the safety profile of investigational and marketed drugs.

1. DSUR (Development Safety Update Report)

Purpose:

The DSUR is a yearly regulatory document that provides a comprehensive safety overview of an investigational product during its development phase (pre-marketing).

General Content:

- Summary of cumulative safety data across all clinical trials

- Adverse event (AE) overview

- Exposure data

- Ongoing and completed study updates

- Benefit-risk considerations

Timing:

Annually, typically synchronized with the Investigator’s Brochure (IB) update.

BST Contribution:

- TFLs summarizing:

  - Cumulative subject exposure, often broken down by population (e.g., healthy vs. patient, age groups)

  - Study-specific exposure summaries

  - Participant withdrawals due to adverse events

  - SAE/AE listings if required

2. PSUR (Periodic Safety Update Report)

Purpose:

The PSUR is used to monitor the safety of marketed (authorized) products over time, usually post-approval.

General Content:

- Cumulative safety information since the product was authorized

- Global safety data

- Benefit-risk evaluation

- Regulatory actions taken

- Literature and spontaneous report summaries

Timing:

Varies depending on the product’s time on the market and specific regulatory agreements (e.g., every 6 months, 1 year, or 3 years).

BST Contribution:

- TFLs supporting:

  - Cumulative subject exposure, including dose and duration

  - Trend summaries (e.g., AE over time)

  - Stratified summaries by indication or region, if required

3. IB (Investigator’s Brochure)

Purpose:

The IB is a reference document for investigators conducting clinical trials and contains comprehensive data on the investigational product, including safety and efficacy findings.

General Content:

- Clinical and non-clinical safety data

- Pharmacokinetics and pharmacodynamics

- Investigator guidance

- Benefit-risk summary

Timing:

Updated annually, often in parallel with DSUR preparation.

BST Contribution:

- TFLs used in the Adverse Drug Reaction (ADR) section:

  - Listings or summary tables of AEs considered related

  - Cumulative AE rates

  - Narrative support via structured data

4. J-NUPR (Japanese Non-serious Unlisted Periodic Report)

Purpose:

A Japan-specific post-marketing requirement for periodic reporting of non-serious and unlisted adverse events observed during post-marketing surveillance.

General Content:

- Line listings of applicable non-serious, unlisted AEs

- Summary counts stratified by term, SOC, region, etc.

Timing:

Regular intervals defined by the Japanese Ministry of Health, Labour and Welfare (MHLW).

BST Contribution:

- Delivery of:

  - Cumulative frequency tables

  - Listings of unlisted events

  - Patient-level datasets, filtered by local criteria

5. RMP (Risk Management Plan)

Purpose:

The RMP outlines how the risks of a medicinal product will be identified, characterized, prevented, or minimized once the product is on the market.

General Content:

- Product safety specification

- Pharmacovigilance plans

- Risk minimization measures (e.g., targeted education, monitoring)

Timing:

- At the time of marketing authorization application (MAA)

- Updated as needed post-authorization (e.g., after new safety signals)

BST Contribution:

- Statistical review and validation of:

  - Exposure estimates

  - Incidence rates of identified and potential risks

  - Monitoring metrics (e.g., patient compliance, reporting rates)

Reference:

EMA guidance:

- GVP Module V – Risk Management Systems (Rev 2)

- RMP Q&A on EMA website

3 BSSD

3.1 Ongoing Clinical Trials, Utilizing a Bayesian Framework

Safety signals refer to potential indications of an adverse effect caused by a drug or treatment. Detecting these early during a clinical trial can:

- Prevent harm to participants

- Inform decisions about trial continuation

- Support regulatory compliance

The purpose of this safety signal detection approach is to improve how potential risks and adverse effects are identified during ongoing clinical trials, especially while those trials are still blinded. Detecting safety signals early is crucial to protect participants and to make informed decisions about whether a drug or treatment should continue to be studied. Traditional methods often fall short here because they may not be flexible enough to handle evolving data, or may not work well when treatment assignments are still unknown. A more adaptive system is therefore needed. The proposed approach uses a Bayesian framework, which allows continuous learning from new data and offers a more intuitive way to assess risk as information accumulates. This is particularly useful in the clinical development phase, where decisions must be made on incomplete or uncertain data. By re-engineering the safety signal detection process around these statistical techniques, the goal is a more reliable and responsive system that better safeguards patient health and supports faster, better-informed decision-making in drug development.

Bayesian methods as a core solution:

Bayesian statistics allow for updating beliefs with new evidence, which is ideal for ongoing trials where new safety data constantly emerge.

This framework supports:

- Assessment of blinded data

- Evaluation of adverse events

- Estimation of probabilities of safety risks

Bayesian reasoning is often more flexible and intuitive for interpreting risk over time compared to traditional frequentist approaches.

The need for a change in safety signal detection (SSD) is driven by five major areas: regulatory requirements, scientific definitions, industry trends, patient safety, and corporate responsibility.

From a regulatory perspective, FDA regulations and guidance (e.g., 21 CFR 312.32 and the 2012 and 2015 guidance documents) increasingly emphasize the need for sponsors to systematically assess safety data across ongoing studies. They call for aggregate analyses to identify adverse event patterns and recommend setting up dedicated Safety Assessment Committees and Surveillance Plans. The guidance also encourages quantitative approaches to determining the likelihood of a causal association between treatments and adverse events.

In terms of signal detection science, definitions from CIOMS (Council for International Organizations of Medical Sciences) and Good Pharmacovigilance Practices describe a signal as a hypothesis of a causal link between a treatment and observed events. Detection is about identifying statistically unusual patterns—those exceeding a specified threshold—that justify further verification. This highlights the need for methods that can handle complex data and allow continuous evaluation, especially in blinded settings.

CIOMS (Council for International Organizations of Medical Sciences) Working Group VI recommends a holistic, program-level review of safety data across studies.

This approach is intended to:

- Identify safety signals earlier, ideally before they become serious or widespread.

- Protect patients by responding quickly to potential safety issues.

- Support medical judgment with a quantitative framework—i.e., not just subjective interpretations, but data-supported decisions.

The US FDA integrated these ideas into official regulations:

21 CFR 312.32 and 320.31 (final rule published in 2010): These specify the rules for IND (Investigational New Drug) safety reporting.

Guidance Documents

- 2012 Guidance: Clarifies how sponsors should implement safety reporting in clinical studies.

- 2015 Draft Guidance: Suggests how sponsors can assess safety data more rigorously—especially for identifying causal relationships between the drug and adverse events.

US FDA Final Rule: Key Expectations

The Final Rule establishes that sponsors must take a systematic approach to pharmacovigilance.

It emphasizes that IND safety reports should only be submitted when there’s reasonable evidence of a causal relationship between the drug and the adverse event.

Breaking the Blind: The FDA allows breaking the blind for serious adverse events not related to clinical endpoints.

According to the Final Rule, two options exist:

- Routine Aggregate Unblinded Review by a Safety Assessment Committee (SAC)

  - Conducted independently.

  - Preferred method: balances patient safety with trial integrity.

  - Allows identification of signals without bias.

- Conditional Unblinding

  - Sponsors remain blinded unless overall adverse event rates exceed a predefined expected threshold.

  - Only then do they unblind data to assess whether the drug might be responsible.

The industry trend is also pushing for more rigorous SSD, as literature (e.g., Yao et al., 2013) points to the limitations of traditional monitoring. Bayesian approaches are being promoted for their ability to offer objective, real-time analysis during trials. Early detection improves patient protection and can reduce long-term development costs. Industry best practices also advocate for early and proactive planning of safety analyses through frameworks like Program Safety Analysis Plans (PSAPs).

Patient safety remains the central ethical and practical driver of SSD improvement. Leading pharmaceutical companies (e.g., Teva, Pfizer, Eli Lilly) publicly commit to placing patient safety at the heart of their operations, underscoring the societal and reputational importance of effective monitoring.

Lastly, corporate principles stress that accurate safety profiles affect not just regulatory compliance and patient safety, but also the financial valuation of a compound. Failure to detect and report risks promptly can result in serious harm, legal consequences, and a loss of public trust and investment.

How change should occur in safety signal detection

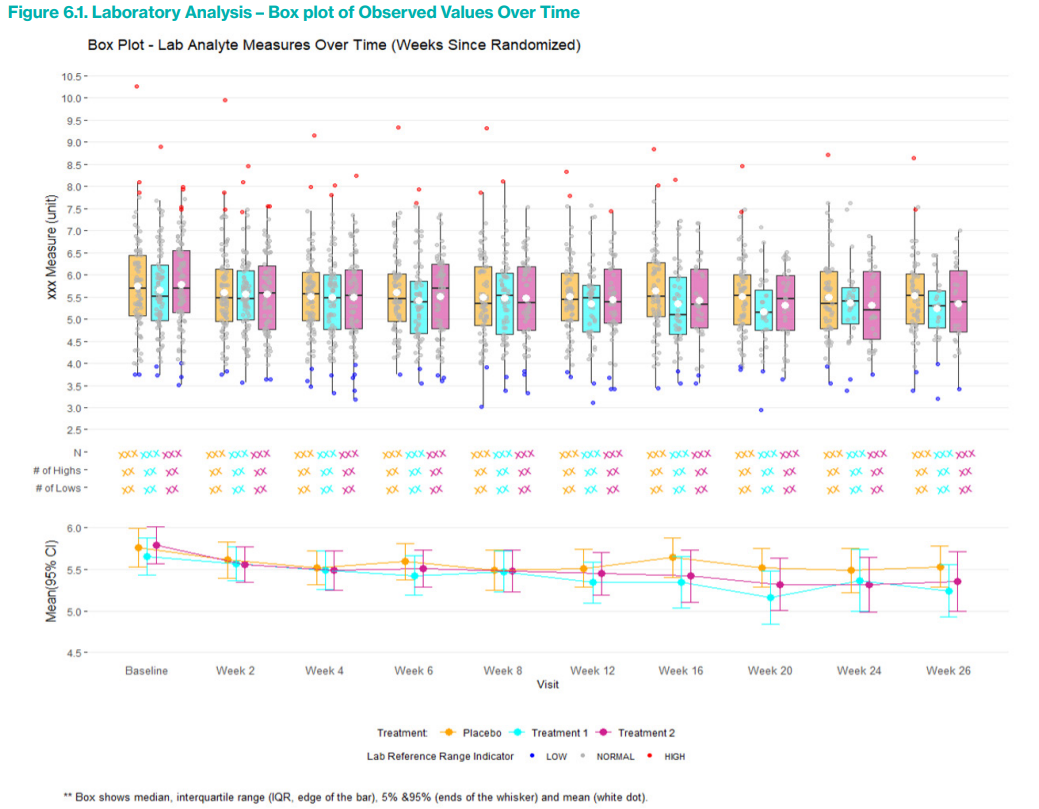

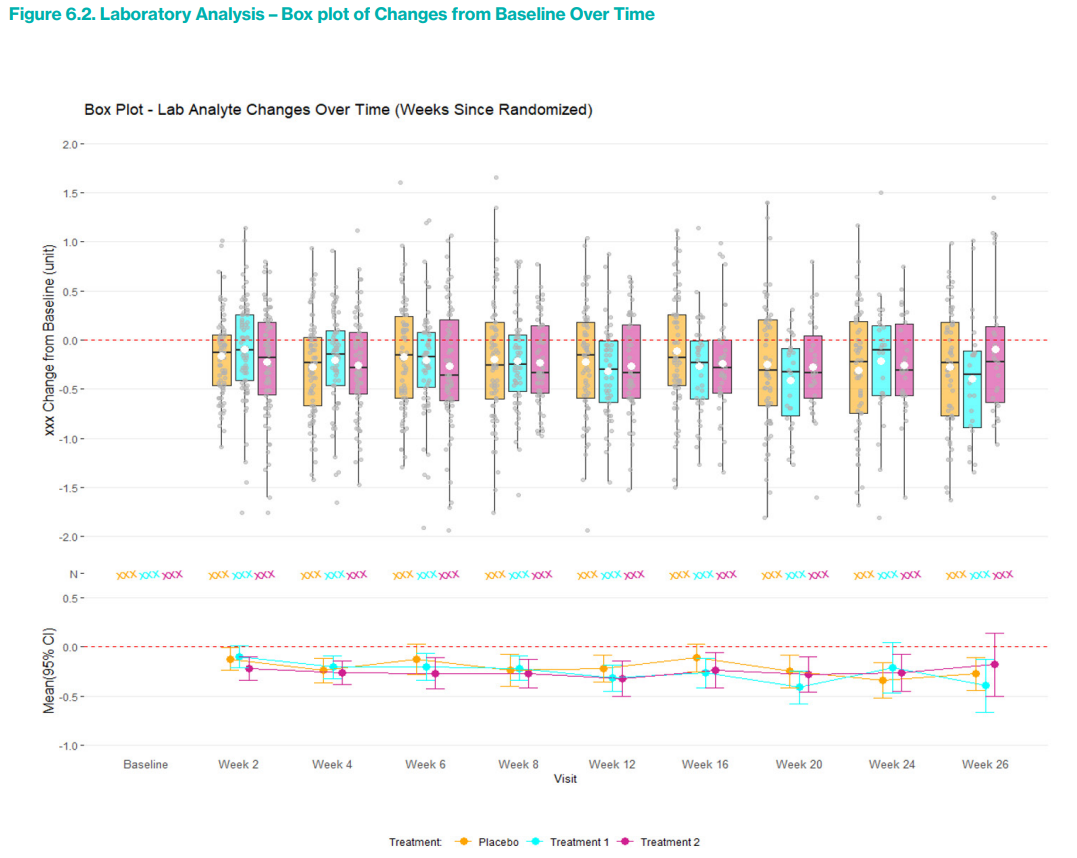

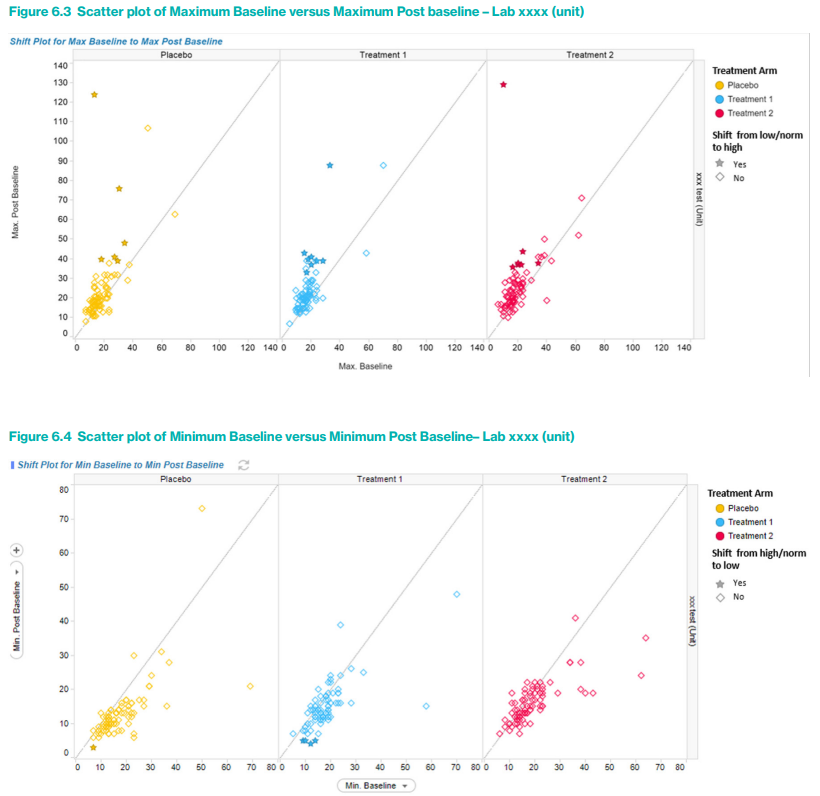

Firstly, the use of advanced data visualization tools is encouraged to enhance the interpretability and interactivity of safety data. These tools, including forest plots, threshold plots, time-to-event plots, and hazard plots, offer interactive and drill-down features that allow for a more dynamic exploration of potential safety issues. Such visualization supports faster identification of concerning patterns and facilitates more informed decision-making.

Secondly, there is a push for greater scientific and statistical rigor, particularly in the analysis of both blinded and unblinded safety data. This includes applying robust statistical methodologies to datasets covering adverse events (AEs), laboratory results, vital signs, and electrocardiograms (ECGs). The goal is to bring consistency, objectivity, and depth to the SSD process.

Another key element is the development of global safety databases organized by compound. These databases would centralize safety data from multiple studies, making it easier to detect patterns, perform aggregate analyses, and assess compound-specific risks across different trials and populations.

Lastly, organizations are advised to develop Program Safety Analysis Plans (PSAPs). These formalized plans lay out a comprehensive strategy for conducting safety analyses throughout a drug’s development lifecycle. They ensure that safety monitoring is systematic, pre-planned, and harmonized across studies.

What specifically needs to change in managing safety signal detection

First, companies should develop pooled, aggregate safety databases much earlier in the clinical development lifecycle—rather than waiting until submission. These databases should have standardized structures and reporting templates to support not only SSD but also broader safety reporting needs. By centralizing safety data early, companies can enable faster and more effective signal detection and trend analysis across studies.

Second, in the area of safety signal detection, companies need to adopt or enhance statistical methods capable of handling blinded clinical trial data, ensuring valid analysis even when treatment assignments are unknown. This should be coupled with the use of data visualization tools—both static and interactive—to better identify patterns and communicate findings. Additionally, optimizing the outputs produced during SSD (e.g., reports, dashboards, alerts) ensures that results are both informative and actionable.

Lastly, IND safety reporting practices must be realigned to match FDA guidance and expectations. This includes improving how serious adverse events (SAEs) are assessed and reported—focusing on those with a demonstrated causal relationship to the drug, as judged by a combination of sponsor, medical, and statistical input. Consistency in the use of terms like “anticipated,” “predicted,” or “expected adverse event” in Investigator Brochures (IBs) is also important to avoid ambiguity.

3.2 Objectives of BSSD Analyses

The objective of SSD in blinded studies is to use predefined statistical thresholds, reference rates, and modeling (such as Bayesian or frequentist methods) to detect early signs of safety concerns without breaking the blind. The approach must balance avoiding unnecessary alarms against catching true adverse trends early enough for meaningful intervention.

The core challenge in blinded SSD is distinguishing between “noise” (random variation) and a true “signal” (evidence of risk) while the treatment assignment remains unknown.

- A criterion (threshold) is set to decide whether an “alarm” should be triggered, suggesting a potential safety issue.

- If the true signal falls below this threshold, it may result in a missed alarm—failing to detect a true risk.

- Conversely, if random noise exceeds the threshold, a false alarm may be triggered—suggesting a safety issue when none exists.

The objective is to define and refine this threshold in a way that balances sensitivity (catching real issues) and specificity (avoiding false alarms), especially under the constraints of blinded data.
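The trade-off between false and missed alarms can be made concrete with a short sketch. The Python below assumes a simple binomial model and the rule "raise an alarm if the blinded count Y reaches c". The null rate θ0 = 0.02, the hypothetical elevated rate θ1 = 0.06, the sample size of 80, and the function names are illustrative assumptions, not values prescribed by this document.

```python
from math import comb

def binom_sf(c, n, p):
    """P(Y >= c) for Y ~ Binomial(n, p)."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(c, n + 1))

def alarm_rates(c, n, theta0, theta1):
    """False-alarm and missed-alarm probabilities for the rule 'alarm if Y >= c'."""
    false_alarm = binom_sf(c, n, theta0)       # alarm although the true rate is theta0 (no excess risk)
    missed_alarm = 1 - binom_sf(c, n, theta1)  # no alarm although the true rate is theta1 (elevated)
    return false_alarm, missed_alarm

# Assumed inputs: 80 blinded subjects, null rate 2%, hypothetical elevated rate 6%.
for c in range(3, 8):
    fa, ma = alarm_rates(c, n=80, theta0=0.02, theta1=0.06)
    print(f"alarm if Y >= {c}: false alarm {fa:.3f}, missed alarm {ma:.3f}")
```

Raising the threshold c lowers the false-alarm probability but raises the missed-alarm probability. Choosing c is where the balance between sensitivity and specificity is struck.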

A practical statistical example helps frame the objectives of SSD in blinded trials:

- Historically, from a dataset of 500 subjects with 24 weeks of follow-up, the adverse event (AE) incidence in the placebo group for a particular event is known to be 2%.

- In a new blinded study, 80 subjects (with a 3:1 treatment-to-placebo randomization ratio) have completed the same follow-up period, and Y events have been observed.

- The central question is: What number of events (Y) would suggest that the observed rate is too high to be due to chance, assuming no true difference exists between the groups?

- This analysis seeks to determine whether the current study data (while still blinded) deviates enough from expected patterns to indicate a potential safety signal.

3.2.1 Simple Frequentist Approach (Binomial Model)

- Start with a baseline estimate of adverse event rate (θ) from robust historical placebo data.

- Adjust for time-at-risk and censoring using person-time and hazard models.

- Use the adjusted θ to compute expected counts in the ongoing study.

- Compare observed counts (Y) to this expectation via binomial or Poisson models.

- Trigger a signal if observed Y exceeds what is reasonably expected under the null hypothesis.

Throughout, the approach requires careful adjustment for follow-up time and for differences between populations, to avoid false alarms or missed signals.

Assumptions:

- The event of interest is rare (e.g., AE incidence of 2%).

- In a new blinded study, 80 subjects have completed follow-up.

The expected number of AEs under the null hypothesis (no increased risk) is:

\[ \mathbb{E}[Y] = n \cdot \theta = 80 \cdot 0.02 = 1.6 \]

A binomial distribution \(Y \sim \text{Binomial}(n=80, \theta=0.02)\) is used to calculate probabilities of observing different values of Y.

If 5 or more AEs are observed, this is unlikely under the null: the cumulative probability \(P(Y < 5) = P(Y \le 4)\) is 0.9776, so \(P(Y \ge 5) \approx 0.0224\), suggesting a possible signal.
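The binomial calculation for the running example can be reproduced with the Python standard library. This is a minimal sketch; `binom_cdf` and `critical_count` are illustrative helper names, and the one-sided 5% level used to pick the critical count is an assumption rather than a level set by this document.

```python
from math import comb

def binom_cdf(y, n, p):
    """P(Y <= y) for Y ~ Binomial(n, p); returns 0 for y < 0."""
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(y + 1))

def critical_count(n, theta, alpha=0.05):
    """Smallest count y with P(Y >= y) <= alpha under the null rate theta (alpha is assumed)."""
    y = 0
    while 1 - binom_cdf(y - 1, n, theta) > alpha:
        y += 1
    return y

n, theta = 80, 0.02
expected = n * theta                # E[Y] = 1.6
p_lt5 = binom_cdf(4, n, theta)      # P(Y < 5) = P(Y <= 4), about 0.9776
p_ge5 = 1 - p_lt5                   # P(Y >= 5), about 0.0224
print(f"E[Y] = {expected:.1f}, P(Y < 5) = {p_lt5:.4f}, P(Y >= 5) = {p_ge5:.4f}")
print("critical count at alpha = 0.05:", critical_count(n, theta))  # 5
```

Under these assumptions, 4 events would not reach the 5% level (\(P(Y \ge 4) \approx 0.077\)), while 5 events would.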

However, this result depends on careful estimation of θ, the true underlying event rate in the placebo group:

- Where did θ (2%) come from? Was it from robust, representative historical data?

- Are historical and current populations comparable? Differences in demographics, care settings, and follow-up time can bias results.

- Time-at-risk mismatch: If subjects in the historical and current study differ in follow-up duration or censoring, comparing incidence rates directly could be misleading.

Therefore, θ is estimated indirectly via the hazard rate λ, using historical data:

For each historical study:

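\[ \text{IR}_j = \frac{r_j}{\sum_{i=1}^{r_j} t_{ij} + (n_j - r_j)T_j} \]

where:

- \(r_j\): number of events in study j

- \(t_{ij}\): time to event for the i-th subject with an event in study j

- \(n_j\): number of subjects in study j

- \(T_j\): censoring time for those without events in study j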

This yields an incidence rate per unit time (hazard rate), assuming an exponential model.

\(\text{IR}_j\) is treated as the maximum likelihood estimate (MLE) of the study-specific hazard rate \(\lambda_j\).
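A sketch of the per-study estimate under the exponential model follows. It assumes, as the formula does, that every subject without an event is followed to a common censoring time T; the function name and the toy numbers are hypothetical. The exponential log-likelihood r·log(λ) − λ·(person-time) is maximized at λ = r / person-time, which is why the incidence rate doubles as the MLE.

```python
def incidence_rate(event_times, n, T):
    """Events per unit person-time for one historical study (exponential-model MLE).

    event_times: times to event for the r subjects who had the AE
    n: total number of subjects in the study
    T: common censoring time for the n - r subjects without the AE (assumption)
    """
    r = len(event_times)
    person_time = sum(event_times) + (n - r) * T
    return r / person_time, person_time

# Hypothetical study: 4 subjects followed up to 100 days, 2 events at days 10 and 20.
rate, pt = incidence_rate([10, 20], n=4, T=100)
print(rate, pt)  # 2 / 230 events per day, 230 person-days
```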

Next, the pooled hazard rate (λ_w) across multiple historical studies is computed using a weighted average:

\[ \lambda_w = \frac{\sum_{j=1}^k w_j \lambda_j}{\sum_{j=1}^k w_j} \]

- \(w_j\): total person-time in study j

- \(\lambda_j\): incidence rate for study j
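With person-time weights, \(w_j \lambda_j = r_j\), so the weighted average reduces to total events divided by total person-time across studies. A minimal sketch, with a hypothetical function name and toy inputs:

```python
def pooled_rate(studies):
    """Person-time-weighted mean of per-study hazard rates.

    studies: list of (lambda_j, w_j) pairs, where w_j is the total person-time of study j.
    """
    numerator = sum(w * lam for lam, w in studies)
    denominator = sum(w for _, w in studies)
    return numerator / denominator

# Two hypothetical studies: 2 events in 230 person-days, 3 events in 400 person-days.
lam_w = pooled_rate([(2 / 230, 230), (3 / 400, 400)])
print(lam_w)  # equals (2 + 3) / (230 + 400)
```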

Then, derive the distribution of time-at-risk for each patient in the blinded trial. This accounts for:

- Patients who completed the study

- Those who withdrew prematurely

- Those censored at database cut-off

This step ensures alignment between exposure times in the new and historical data.

Finally, the expected number of AEs in the ongoing blinded study is calculated:

\[ \mathbb{E}[Y] = \sum_{i=1}^{n} \left(1 - e^{-\lambda_w t_i}\right) \]

\[ \theta = \frac{\mathbb{E}[Y]}{n} \]

- This gives a time-adjusted estimate of the incidence proportion \(\theta\), accounting for censoring and variable follow-up times.

- However, the distribution of this \(\theta\) is not straightforward and requires further consideration, especially for constructing confidence intervals or hypothesis testing.
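The expected count and the time-adjusted θ can be computed directly from each subject's time at risk. In this sketch the pooled rate and the follow-up pattern (completers, early withdrawals, subjects censored at the data cut-off) are hypothetical, and \(\lambda_w\) and \(t_i\) must be expressed in the same time unit (days here).

```python
import math

def expected_events(lambda_w, times_at_risk):
    """E[Y] = sum_i (1 - exp(-lambda_w * t_i)) and theta = E[Y] / n."""
    # -expm1(-x) computes 1 - exp(-x) accurately when x is small
    e_y = sum(-math.expm1(-lambda_w * t) for t in times_at_risk)
    return e_y, e_y / len(times_at_risk)

# Hypothetical blinded study of 80 subjects (days at risk) and a hypothetical pooled rate per day.
times = [168] * 60 + [84] * 10 + [120] * 10  # completers, withdrawals, censored at cut-off
e_y, theta = expected_events(lambda_w=0.00012, times_at_risk=times)
print(f"E[Y] = {e_y:.2f}, theta = {theta:.4f}")
```

When every subject has the same time at risk T, θ reduces to \(1 - e^{-\lambda_w T}\); shorter follow-up for some subjects pulls θ below that value.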

3.2.2 Hybrid Frequentist/Bayesian approach

This approach enhances traditional frequentist models by incorporating uncertainty through probability distributions:

- The Beta distribution allows uncertainty in θ to be modeled directly from historical data.

- The Beta-binomial distribution extends the frequentist framework, producing a richer model for event counts by integrating uncertainty.

- This approach gives a probabilistically coherent and statistically robust method for detecting safety signals in blinded trials, improving decision-making under uncertainty.

In real-world scenarios, θ (the AE probability) is often not known with certainty—it varies across populations, time points, or study settings. This variation can be modeled using a Beta distribution, which provides a flexible way to represent a distribution of probabilities for θ.

The Beta distribution, parameterized by α (event counts) and β (non-event counts), is suitable for modeling probabilities between 0 and 1.

The mean (expected value) is:

\[ \mu = \frac{\alpha}{\alpha + \beta} \]

The variance is:

\[ \text{Var}(\theta) = \frac{\mu(1 - \mu)\phi}{1 + \phi} = \frac{\mu(1 - \mu)}{\alpha + \beta + 1} \quad \text{where} \quad \phi = \frac{1}{\alpha + \beta} \]

Example: Historical data from 500 patients show 10 with AEs and 490 without → \(\text{Beta}(10, 490)\)

- Mean = 0.02

- Standard deviation ≈ 0.006
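The Beta(10, 490) summary can be checked from the closed-form moments of the Beta distribution: mean \(\alpha/(\alpha+\beta)\) and variance \(\alpha\beta/((\alpha+\beta)^2(\alpha+\beta+1))\). A minimal sketch (the helper name is illustrative):

```python
import math

def beta_mean_sd(a, b):
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)

mean, sd = beta_mean_sd(10, 490)
print(f"mean = {mean:.3f}, sd = {sd:.4f}")  # mean = 0.020, sd = 0.0063
```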

While the expected value of θ is 0.02, there is inherent variability, captured by the spread of the distribution.

If θ follows a Beta(α, β) distribution and Y ~ Binomial(n, θ), then the marginal distribution of Y (i.e., accounting for uncertainty in θ) is the Beta-Binomial distribution.

Probability mass function:

\[ f(y; \alpha, \beta) = \binom{n}{y} \cdot \frac{B(\alpha + y, \beta + n - y)}{B(\alpha, \beta)} \]

Expected value and variance:

\[ \mathbb{E}[Y] = n\mu = \frac{n\alpha}{\alpha + \beta} \]

\[ \text{Var}(Y) = n\mu(1 - \mu)\left[\frac{1 + n\phi}{1 + \phi}\right] = n\mu(1 - \mu)\,\frac{\alpha + \beta + n}{\alpha + \beta + 1} \]

This variance is higher than in the standard binomial model, reflecting the extra uncertainty from estimating θ rather than treating it as fixed.
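The sketch below evaluates the beta-binomial probability mass function with `math.lgamma` (to avoid overflow in the Beta functions) and compares the upper tail \(P(Y \ge 5)\) with the fixed-θ binomial. Inputs follow the running example (n = 80, Beta(10, 490)); helper names are illustrative. The numerically computed variance should equal \(n\mu(1-\mu)(\alpha+\beta+n)/(\alpha+\beta+1)\), the standard beta-binomial variance.

```python
import math

def log_beta_fn(a, b):
    """log B(a, b) via log-gamma."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)

def betabinom_pmf(y, n, a, b):
    """f(y; a, b) = C(n, y) * B(a + y, b + n - y) / B(a, b)."""
    return math.comb(n, y) * math.exp(log_beta_fn(a + y, b + n - y) - log_beta_fn(a, b))

def binom_pmf(y, n, p):
    return math.comb(n, y) * p**y * (1 - p) ** (n - y)

n, a, b = 80, 10, 490
mu = a / (a + b)
pmf = [betabinom_pmf(y, n, a, b) for y in range(n + 1)]
mean = sum(y * p for y, p in enumerate(pmf))
var = sum((y - mean) ** 2 * p for y, p in enumerate(pmf))
closed_form_var = n * mu * (1 - mu) * (a + b + n) / (a + b + 1)
tail_bb = sum(pmf[5:])                                        # P(Y >= 5) with theta uncertain
tail_bin = sum(binom_pmf(y, n, mu) for y in range(5, n + 1))  # P(Y >= 5) with theta fixed at 0.02
print(f"mean {mean:.3f}, var {var:.3f} (closed form {closed_form_var:.3f})")
print(f"P(Y >= 5): beta-binomial {tail_bb:.4f} vs binomial {tail_bin:.4f}")
```

Because θ is treated as uncertain, the beta-binomial upper tail is heavier, so the same observed count is somewhat less surprising than under the fixed-θ binomial.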

This hybrid method enhances the frequentist binomial model by accounting for uncertainty in historical estimates. Instead of assuming a fixed θ, it treats θ as a random variable based on real-world data.

- More realistic modeling: Reflects natural variation in AE rates between studies or populations.

- Improved inference: Wider distributions reduce overconfidence and better balance false/missed alarm risks.

- Consistency: When α and β are large (i.e., precise historical data), the Beta distribution concentrates around its mean, and the beta-binomial converges to the standard binomial.

3.2.3 Bayesian methods

Bayesian approaches provide a rational, structured way to update beliefs as new data become available. In the context of SSD for blinded trials, where uncertainty is high and full treatment assignments are not yet revealed, Bayesian methods allow sponsors to:

- Incorporate historical safety data (e.g., placebo AE rates),

- Reflect uncertainty in model parameters (such as event probabilities),

- Continuously update risk assessments without breaking the blind.

This is especially useful for detecting early signals without making premature, binary conclusions from sparse data.

At its core, Bayesian analysis relies on Bayes’ Theorem:

\[ P(\theta | y) = \frac{P(y | \theta) P(\theta)}{P(y)} \]

Where:

- \(P(\theta)\) = prior belief about the AE rate (from historical data),

- \(P(y | \theta)\) = likelihood (how likely observed data is under a given AE rate),

- \(P(\theta | y)\) = posterior belief after observing data \(y\).

This framework allows for inductive learning, making it well-suited for ongoing SSD as trial data accumulate.

Example:

- Prior Distribution \(P(\theta)\): Represents the belief about AE rates before observing new data. For example, if historical data show 10 AEs in 500 placebo patients, a Beta(10, 490) distribution is a natural prior.

- Likelihood \(P(y|\theta)\): Based on the binomial model, this reflects the chance of seeing Y AEs out of n patients given a particular value of θ.

- Posterior Distribution \(P(\theta | y)\): Updated belief about θ after seeing the blinded data, used to judge if a safety signal is emerging.
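Because the Beta prior is conjugate to the binomial likelihood, the update in this example has a closed form: Beta(α, β) plus y events in n subjects gives Beta(α + y, β + n − y). The sketch below assumes hypothetical blinded data (8 AEs among 200 subjects) and, for simplicity, treats the blinded pooled rate as θ, ignoring treatment assignment.

```python
def beta_binomial_update(alpha0: float, beta0: float, y: int, n: int) -> tuple[float, float]:
    """Conjugate update: Beta(alpha0, beta0) prior + y events in n subjects."""
    if not 0 <= y <= n:
        raise ValueError("need 0 <= y <= n")
    return alpha0 + y, beta0 + (n - y)


# Prior from the historical example: 10 AEs in 500 placebo patients.
alpha0, beta0 = 10, 490
# Hypothetical blinded data (illustrative only): 8 AEs among 200 subjects,
# treated as a single pooled rate, i.e. ignoring treatment assignment.
alpha1, beta1 = beta_binomial_update(alpha0, beta0, y=8, n=200)
post_mean = alpha1 / (alpha1 + beta1)
print(f"posterior Beta({alpha1}, {beta1}), mean = {post_mean:.4f}")
```

The posterior is Beta(18, 682) with mean 18/700 ≈ 0.026, which lies between the prior mean 0.02 and the observed proportion 0.04, weighted by the 500 prior pseudo-observations against the 200 new subjects.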

In blinded trials, the treatment group (placebo vs. active drug) is unknown, introducing latent structure. A finite mixture model addresses this by modeling:

- Multiple possible distributions (e.g., AE rates for placebo and active groups),

- With weights \(\pi_j\) representing the probability of each group assignment.

This allows a comprehensive analysis without needing to unblind, maintaining trial integrity.
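A minimal sketch of the two-component mixture for blinded data. It assumes (hypothetically) that each subject is on active drug with probability frac_active, independently, so the blinded AE count is binomial with the mixture probability π·θ_T + (1 − π)·θ_C. The names and data are illustrative.

```python
import math


def blinded_event_prob(theta_t: float, theta_c: float, frac_active: float) -> float:
    """Two-component mixture: AE probability for a subject whose arm is unknown.

    With probability frac_active the subject is on active drug (rate theta_t),
    otherwise on control (rate theta_c).
    """
    return frac_active * theta_t + (1 - frac_active) * theta_c


def blinded_log_lik(y: int, n: int, theta_t: float, theta_c: float,
                    frac_active: float) -> float:
    """Binomial log-likelihood (constant term dropped) of y blinded AEs in n subjects.

    Assumes independent assignment with probability frac_active, so the blinded
    count is Binomial(n, mixture probability).
    """
    p = blinded_event_prob(theta_t, theta_c, frac_active)
    return y * math.log(p) + (n - y) * math.log1p(-p)


# Hypothetical blinded data: 12 AEs among 300 subjects, 1:1 randomization.
y, n, r = 12, 300, 0.5
for theta_t, theta_c in [(0.02, 0.02), (0.06, 0.02), (0.05, 0.03)]:
    print(theta_t, theta_c, round(blinded_log_lik(y, n, theta_t, theta_c, r), 3))
```

The pairs (0.06, 0.02) and (0.05, 0.03) give the same pooled rate and therefore the same likelihood: blinded data identify only the mixture probability, so it is the historical prior on the control rate that separates the two arms.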

3.3 Bayesian application to SSD

Core Bayesian Framework

At the heart of the Bayesian SSD approach is the idea of computing the posterior probability that a clinical parameter (e.g., AE rate θ) exceeds a critical safety threshold (θc):

\[ \Pr(\theta > \theta_c \mid \text{blinded data}) > P_{\text{cutoff}} \]

- θ = estimated AE rate or other safety metric (e.g., risk difference).

- θc = comparator value, typically based on historical placebo rates or clinical benchmarks.

- P_cutoff = predefined probability threshold (e.g., 90%, 95%) used to flag potential safety signals.

This decision rule is model-agnostic and applies to any Bayesian setup, making it a flexible universal framework for SSD.
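For a Beta posterior with positive integer parameters, Pr(θ > θc) can be computed exactly with the standard library through the identity Pr(Beta(a, b) ≤ x) = Pr(Binomial(a + b − 1, x) ≥ a). The sketch below applies the decision rule to a hypothetical posterior Beta(18, 682); the function names are illustrative, and for non-integer parameters a library routine such as `scipy.stats.beta.sf` would be used instead.

```python
import math


def beta_sf_int(a: int, b: int, x: float) -> float:
    """Pr(theta > x) for theta ~ Beta(a, b) with positive integer a and b.

    Exact identity: Pr(Beta(a, b) <= x) = Pr(Binomial(a + b - 1, x) >= a),
    hence Pr(theta > x) = Pr(Binomial(a + b - 1, x) <= a - 1).
    """
    if not (isinstance(a, int) and isinstance(b, int) and a > 0 and b > 0):
        raise ValueError("a and b must be positive integers")
    if x <= 0.0:
        return 1.0
    if x >= 1.0:
        return 0.0
    m = a + b - 1
    log_x, log_1mx = math.log(x), math.log1p(-x)
    total = 0.0
    for j in range(a):  # binomial terms j = 0 .. a-1, each computed on the log scale
        log_term = (math.lgamma(m + 1) - math.lgamma(j + 1) - math.lgamma(m - j + 1)
                    + j * log_x + (m - j) * log_1mx)
        total += math.exp(log_term)
    return min(total, 1.0)


def flag_signal(a_post: int, b_post: int, theta_c: float, p_cutoff: float) -> tuple[float, bool]:
    """Decision rule: flag if Pr(theta > theta_c | data) > p_cutoff."""
    prob = beta_sf_int(a_post, b_post, theta_c)
    return prob, prob > p_cutoff


# Hypothetical posterior Beta(18, 682): prior Beta(10, 490) plus 8 AEs in 200 subjects.
prob, flagged = flag_signal(18, 682, theta_c=0.02, p_cutoff=0.90)
print(f"Pr(theta > 0.02 | data) = {prob:.3f}; flag = {flagged}")
```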

To implement a Bayesian SSD approach in practice, follow these key principles:

- Define a clinical parameter of interest (θ), such as AE rate.

- Specify a threshold value (θc) representing a point of clinical concern.

- Choose an appropriate prior based on historical data, model context, and desired neutrality.

- Calculate the posterior probability that θ > θc given the blinded data.

- Compare it to a P_cutoff value, selected based on event rarity and desired balance of sensitivity vs. specificity.

This framework enables dynamic, data-driven safety monitoring under uncertainty, which suits the evolving nature of clinical trials.

The choice of prior distribution for θ has a major influence on the posterior, especially when event counts are small. A “non-informative” prior might seem neutral but can actually bias the result if not chosen carefully.

Beta(1/3, 1/3) (Kerman’s neutral prior) is often used as a default prior because the resulting posterior median is approximately equal to the observed proportion y/n, so the prior does not systematically pull the estimate up or down.
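A short worked derivation, offered as a sketch, of why Beta(1/3, 1/3) is called neutral. It relies on the approximation \(\operatorname{median}[\text{Beta}(a,b)] \approx (a - \tfrac13)/(a + b - \tfrac23)\), which is accurate for \(a, b \ge 1\) but is not an exact identity. With \(y\) events among \(n\) subjects and \(1 \le y \le n-1\):

\[ \theta \mid y \sim \text{Beta}\!\left(y + \tfrac13,\; n - y + \tfrac13\right) \quad\Rightarrow\quad \operatorname{median}(\theta \mid y) \approx \frac{\left(y + \tfrac13\right) - \tfrac13}{\left(n + \tfrac23\right) - \tfrac23} = \frac{y}{n} \]

For comparison, a uniform Beta(1, 1) prior gives the posterior Beta(y + 1, n − y + 1), whose median is approximately \((y + \tfrac23)/(n + \tfrac43)\) and is therefore pulled toward 1/2.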

For mixture models (where θ comes from both placebo and treatment arms), a generalized version is used:

\[ \theta \sim \text{Beta}(1/3 + mp, \ 1/3 + m(1-p)) \]

where \(p\) is the historical incidence and \(m\) is the prior’s effective sample size.

For overall pooled models, priors can also be derived from historical data but should be down-weighted to reflect uncertainty (e.g., treating historical data as if it contributes one subject’s worth of information).
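A minimal sketch of the generalized prior Beta(1/3 + mp, 1/3 + m(1 − p)). The function name and the 2% historical incidence are hypothetical; the loop shows how the effective sample size m controls how far the prior mean, (1/3 + mp)/(2/3 + m), moves from 1/2 toward p.

```python
def kerman_informative_prior(p_hist: float, m: float) -> tuple[float, float]:
    """Beta(1/3 + m p, 1/3 + m (1 - p)) prior.

    p_hist: historical incidence p; m: prior effective sample size.
    m = 0 recovers Kerman's neutral Beta(1/3, 1/3).
    """
    if not 0.0 < p_hist < 1.0 or m < 0:
        raise ValueError("need 0 < p_hist < 1 and m >= 0")
    return 1 / 3 + m * p_hist, 1 / 3 + m * (1 - p_hist)


# Hypothetical historical incidence of 2%, at several effective sample sizes.
for m in (0, 1, 50, 500):
    a, b = kerman_informative_prior(0.02, m)
    print(f"m={m:>3}: Beta({a:.3f}, {b:.3f}), prior mean={a / (a + b):.3f}")
```

With m = 1 the prior mean is about 0.21 rather than 0.02: a prior worth one subject is weak, so the trial data dominate, and the prior mean approaches p only as m grows.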

In all cases, priors should reflect real-world clinical understanding while guarding against overconfidence or undue influence.

📈 Setting Thresholds: The Role of P_cutoff

The P_cutoff is the posterior probability required to declare a potential safety signal. Choosing this value impacts the balance between sensitivity (detecting true signals) and specificity (avoiding false alarms).

- For rare events (≤5%), lower cutoffs like 0.90 to 0.925 are suitable, because the goal is to be sensitive to early potential risks.

- For more frequent events (≥10%), stricter cutoffs like 0.975 are used, to reduce the chance of overreacting to normal variation.

This mirrors traditional hypothesis testing logic—more common outcomes require more convincing evidence to flag as abnormal.
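One way to see the effect of P_cutoff during planning is to find the smallest blinded AE count that would trigger a flag. The sketch below is a simplification under stated assumptions: prior Beta(10, 490), a hypothetical 200 subjects, θc = 0.02, and the blinded pooled rate treated as θ (no mixture over arms). It uses the exact integer-parameter Beta tail described earlier.

```python
import math


def beta_sf_int(a: int, b: int, x: float) -> float:
    """Pr(theta > x), theta ~ Beta(a, b), integer a, b, 0 < x < 1 (binomial-tail identity)."""
    m = a + b - 1
    return min(1.0, sum(
        math.exp(math.lgamma(m + 1) - math.lgamma(j + 1) - math.lgamma(m - j + 1)
                 + j * math.log(x) + (m - j) * math.log1p(-x))
        for j in range(a)))


def min_events_to_flag(a0: int, b0: int, n: int, theta_c: float, p_cutoff: float):
    """Smallest blinded AE count y (out of n) with Pr(theta > theta_c | y) > p_cutoff.

    Uses the conjugate posterior Beta(a0 + y, b0 + n - y); the exceedance
    probability increases with y, so a linear scan suffices. Returns None if no y
    qualifies.
    """
    for y in range(n + 1):
        if beta_sf_int(a0 + y, b0 + n - y, theta_c) > p_cutoff:
            return y
    return None


# Hypothetical planning scenario: prior Beta(10, 490), 200 subjects, theta_c = 0.02.
for cutoff in (0.90, 0.925, 0.975):
    print(cutoff, min_events_to_flag(10, 490, 200, theta_c=0.02, p_cutoff=cutoff))
```

A stricter cutoff can only raise (never lower) the number of events needed to trigger a flag, which is the sensitivity versus specificity trade-off described above.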

General Considerations

Patient Population

The objective is to clearly define and select the appropriate patient population for the study, based on:

- The specific medical indication being studied (e.g., a certain cancer type, diabetes, etc.)

- Any other relevant classifications or criteria (e.g., disease severity, prior treatments).

Ensuring that the enrolled population reflects the target population for which the treatment is intended helps maintain the relevance and generalizability of the results.

TEAEs of Special Focus

TEAEs of special focus are pre-identified adverse events of particular interest in a trial, typically outlined in the Program Statistical Analysis Plan (PSAP). These usually include:

- Anticipated TEAEs: Events common in the disease population, regardless of drug exposure (background events).

- Expected ADRs (Adverse Drug Reactions): Events known or suspected to be caused by the investigational drug.

Key Considerations:

- Observing a single or small number of anticipated events does not automatically signal causality.

- To assess causality, an unblinded aggregate analysis is used—comparing event rates between the treatment and control groups to identify meaningful differences.

- These analyses should only be initiated when statistical thresholds (described later) indicate a potential safety signal.

Data Sources on Historical Controls

When monitoring safety in blinded studies, researchers compare ongoing event rates to historical background rates to detect abnormal patterns. The goal is to identify if observed rates of anticipated AEs are higher than expected, potentially indicating a safety signal.

Data Source Considerations:

Use a meta-analytic approach (combining results from multiple studies) to estimate background rates, preferably from placebo/control arms.

Preferred sources (ranked by data quality and bias control):

- Double-blind RCTs – best for avoiding bias.

- Open-label RCTs

- Single-arm multi-center trials

- Retrospective cohort studies

- Electronic Health Records (RWE)

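Only the first three source types (double-blind RCTs, open-label RCTs, and single-arm multi-center trials) are typically used directly in meta-analyses. Retrospective cohort studies and electronic health records may offer supporting evidence but require caution because of potential biases.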

Relevance and comparability to the ongoing study’s population and design are critical when selecting historical data.

Probability Thresholds for Flagging Safety Signals

To avoid unblinding prematurely, a trigger-based approach is used:

- A posterior probability is calculated indicating the likelihood that the AE rate in the treatment group exceeds that of the control group by more than expected (background rate).

- This probability threshold is pre-specified and agreed upon by the project team.

Trigger System Example:

- Yellow Flag (warning): Posterior probability > 80%

- Red Flag (alert): Posterior probability > 82%

- This system allows ongoing blinded monitoring while maintaining trial integrity.
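The two-level trigger can be expressed as a small function. The default thresholds below are the example values from the text; in practice they are pre-specified by the project team, and the function name is hypothetical.

```python
def classify_flag(posterior_prob: float, yellow: float = 0.80, red: float = 0.82) -> str:
    """Map a posterior probability to the yellow/red trigger system.

    Defaults are the example thresholds from the text; the project team
    pre-specifies the actual values.
    """
    if not 0.0 <= posterior_prob <= 1.0:
        raise ValueError("posterior_prob must lie in [0, 1]")
    if yellow > red:
        raise ValueError("yellow threshold must not exceed red threshold")
    if posterior_prob > red:
        return "red"
    if posterior_prob > yellow:
        return "yellow"
    return "none"


for p in (0.50, 0.81, 0.90):
    print(p, classify_flag(p))
```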

Considerations for Threshold Setting:

Thresholds should not be overly conservative (e.g., >90%) to avoid missing real signals due to low power.

Several factors influence these probabilities:

- Event rarity (common vs. rare AEs)

- Sample size of current and historical data

- Degree of heterogeneity across studies

Regulatory Context:

The FDA recommends:

- Clearly documenting why specific events were selected for monitoring.

- Justifying the choice of thresholds used to trigger evaluation/unblinding.

Any decision to unblind is made by the Blinded Review Team (BRT), which includes both clinical and statistical experts.

Whether an imbalance suggests a reasonable possibility of causality (and therefore needs IND reporting) is a Sponsor-level judgment.

3.4 Binomial-Beta Model

In this document, we present two methods for monitoring safety data from ongoing blinded clinical trials to detect potential safety signals:

1) Bayesian Markov chain Monte Carlo (MCMC) method (primary): subject-level data with blinded treatment assignment are modeled as a mixture of binomial distributions with a latent treatment-indicator variable, and MCMC is used to estimate the parameters while accounting for variable follow-up times across subjects.

2) Simpler Monte Carlo (MC) approximation method: based on study-level data, used for planning and for comparing results with the primary method. Because it uses study-level data, the model assumes the same fixed follow-up time for all subjects, so an approximation is needed to account for variable follow-up.
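The sketch below shows one way a study-level Monte Carlo calculation of this kind could be set up; it is not necessarily the exact approximation method referred to above. Assumptions (all illustrative): θ_C ~ Beta(a_c, b_c) from historical controls, a uniform Beta(1, 1) prior for θ_T, the same fixed follow-up for every subject, and a blinded count that is binomial with the mixture rate. Prior draws are reweighted by the blinded likelihood (importance sampling with the prior as proposal).

```python
import math
import random


def prob_treatment_exceeds_control(y, n, frac_active, a_c, b_c, a_t=1.0, b_t=1.0,
                                   margin=0.0, draws=20000, seed=1):
    """Estimate Pr(theta_T > theta_C + margin | y blinded AEs among n subjects).

    Illustrative assumptions: theta_C ~ Beta(a_c, b_c), theta_T ~ Beta(a_t, b_t),
    fixed follow-up, and blinded count ~ Binomial(n, frac_active * theta_T +
    (1 - frac_active) * theta_C). Prior draws are weighted by this likelihood.
    """
    rng = random.Random(seed)
    log_w, exceeds = [], []
    for _ in range(draws):
        theta_c = rng.betavariate(a_c, b_c)
        theta_t = rng.betavariate(a_t, b_t)
        p = frac_active * theta_t + (1 - frac_active) * theta_c
        p = min(max(p, 1e-12), 1 - 1e-12)  # keep both logs finite
        log_w.append(y * math.log(p) + (n - y) * math.log1p(-p))
        exceeds.append(theta_t > theta_c + margin)
    top = max(log_w)
    w = [math.exp(lw - top) for lw in log_w]  # rescaled to avoid underflow
    return sum(wi for wi, e in zip(w, exceeds) if e) / sum(w)


# Hypothetical inputs: historical control prior Beta(10, 490), 1:1 allocation, 300 subjects.
for y in (0, 6, 30):
    prob = prob_treatment_exceeds_control(y, n=300, frac_active=0.5, a_c=10, b_c=490)
    print(f"y={y:>2}: Pr(theta_T > theta_C | data) = {prob:.3f}")
```

When the blinded data sit far from the prior, few draws carry most of the weight, so more draws (or the MCMC method) would be needed before relying on such estimates.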

Prior Distributions: Chi-square Approach

The Chi-square approach is a practical method to construct an informative prior from multiple historical studies by first assessing their consistency and then pooling their data. It is termed “Chi-square” because it typically relies on Cochran’s Q (chi-square) test to check heterogeneity (i.e. whether observed differences in event rates across studies are beyond chance variability). In essence, this approach asks: Can we treat all historical studies as having a common underlying event rate? If yes, the data are combined (pooled) to form a single prior distribution. If not (significant heterogeneity), adjustments are made – for example, using a random-effects meta-analysis to allow each study to have its own rate and account for between-study variance. This approach is appropriate when you have a few historical studies and want a straightforward summary of the historical event rate. It works best if the studies are fairly homogeneous (similar populations, endpoints, etc.), or if you plan to widen the prior’s uncertainty when they differ.

In practice, the Chi-square approach often mirrors a classical meta-analysis of proportions. It might be used in trial planning to set a prior on a control event rate by combining past control group rates. It is conceptually simpler than the Meta-Analytic Predictive (MAP) approach described below: it does not fully model hierarchical variation, but instead uses hypothesis testing and summary statistics to decide how much information to borrow.

Mathematical Formulation

Suppose we have historical studies \(i = 1,2,\dots,k\), each with \(x_i\) events out of \(n_i\) participants (event rate estimate \(\hat p_i = x_i/n_i\)). The Chi-square approach involves the following key elements and equations:

Fixed-effect (pooled) model: This assumes a common true event rate \(p\) across all studies. The pooled estimate is a weighted average of the \(\hat p_i\): \(\bar p = \frac{\sum_{i=1}^k w_i \hat p_i}{\sum_{i=1}^k w_i},\) where \(w_i\) is the weight for study \(i\). For example, one may choose \(w_i = n_i\) for simple pooling (reasonable for event rates that are not extremely low or high) or \(w_i = 1/\text{Var}(\hat p_i)\), with \(\text{Var}(\hat p_i) = \hat p_i(1-\hat p_i)/n_i\), for inverse-variance pooling. Under a fixed-effect assumption (no between-study heterogeneity), \(\bar p\) estimates the common event rate.

Cochran’s Q (Chi-square test for heterogeneity): To assess whether the \(k\) study results are consistent with a common rate, we compute \(Q = \sum_{i=1}^k w_i (\hat p_i - \bar p)^2\) using the inverse-variance weights \(w_i = 1/\text{Var}(\hat p_i)\). Under the null hypothesis of homogeneity (all studies share the same true \(p\)), \(Q\) approximately follows a chi-square distribution with \(k-1\) degrees of freedom; this reference distribution does not apply if sample-size weights are used. A large \(Q\) (or small \(p\)-value) indicates significant heterogeneity, meaning the event rates likely differ beyond chance. The I² statistic is often used alongside \(Q\) to quantify heterogeneity: \(I^2 = \max\left(0, \frac{Q - (k-1)}{Q}\right) \times 100\%\), which is the percentage of total variance across studies due to true heterogeneity rather than chance. (For example, \(I^2=0\) if all variation is consistent with chance, and higher values imply more between-study variability.)

Random-effects model (if needed): If heterogeneity is detected, a random-effects meta-analysis is used to incorporate between-study variance. This assumes each study’s true event rate \(\theta_i\) varies around an overall mean \(\mu\). A common assumption is \(\theta_i \sim \mathcal{N}(\mu,\tau^2)\) on some scale (often the log-odds scale for proportions, or directly on the proportion scale for small heterogeneity). The DerSimonian-Laird (DL) method estimates the between-study variance \(\tau^2\) from the \(Q\) statistic and the inverse-variance weights \(w_i\): \(\hat\tau^2 = \frac{Q - (k-1)}{\sum_{i}w_i - \sum_i w_i^2/\sum_i w_i}\) if \(Q>k-1\), and \(\hat\tau^2=0\) if \(Q\le k-1\). The updated weights become \(w_i^* = 1/(\text{Var}(\hat p_i)+\hat\tau^2)\), and the random-effects pooled estimate is \(\hat p_{\text{RE}} = \frac{\sum_i w_i^* \hat p_i}{\sum_i w_i^*}\), with variance \(1/\sum_i w_i^*\). This model treats the true event rate in each study as a draw from a distribution with mean \(\mu\) and variance \(\tau^2\), and it acknowledges heterogeneity by inflating the uncertainty of the combined estimate.
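A minimal standard-library sketch of these formulas on the raw proportion scale: inverse-variance pooling, Cochran's Q, I² (returned as a fraction), the DerSimonian-Laird τ², and the random-effects estimate. The study counts are hypothetical, and studies with 0 or n events would need a continuity correction, which this sketch does not apply.

```python
import math


def chi_square_meta(events, sizes):
    """Chi-square approach on the raw proportion scale.

    Returns fixed-effect (inverse-variance) pooled rate, Cochran's Q, I^2 (as a
    fraction), DerSimonian-Laird tau^2 and the random-effects pooled rate.
    """
    k = len(events)
    if k < 2:
        raise ValueError("need at least two studies")
    p_hat = [x / n for x, n in zip(events, sizes)]
    var = [p * (1 - p) / n for p, n in zip(p_hat, sizes)]
    if any(v == 0 for v in var):
        raise ValueError("a study has 0 or n events; apply a continuity correction first")
    w = [1 / v for v in var]                      # inverse-variance weights
    sw = sum(w)
    p_fe = sum(wi * p for wi, p in zip(w, p_hat)) / sw
    q = sum(wi * (p - p_fe) ** 2 for wi, p in zip(w, p_hat))
    df = k - 1                                    # p-value: scipy.stats.chi2.sf(q, df)
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    tau2 = max(0.0, (q - df) / (sw - sum(wi ** 2 for wi in w) / sw))
    w_re = [1 / (v + tau2) for v in var]          # random-effects weights w_i^*
    p_re = sum(wi * p for wi, p in zip(w_re, p_hat)) / sum(w_re)
    return {"p_fe": p_fe, "se_fe": math.sqrt(1 / sw), "Q": q, "df": df,
            "I2": i2, "tau2": tau2, "p_re": p_re, "se_re": math.sqrt(1 / sum(w_re))}


# Hypothetical historical control arms (events, sizes).
homog = chi_square_meta([10, 10, 10], [100, 100, 100])
heterog = chi_square_meta([5, 20, 40], [100, 100, 100])
for label, res in (("homogeneous", homog), ("heterogeneous", heterog)):
    print(label, {key: round(val, 4) for key, val in res.items()})
```

In the heterogeneous case τ² is positive, so the random-effects standard error exceeds the fixed-effect one, which is the uncertainty inflation described above.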

Deriving a prior distribution: Once an overall event rate and variance are determined (from either the fixed- or random-effects model), we translate them into a prior distribution for the new trial’s event rate. A convenient choice for an event rate (a probability) is a Beta prior: the Beta distribution is defined on [0,1] and is conjugate to the binomial likelihood. The Beta parameters can be read as “pseudo-counts” of events and non-events: starting from a uniform Beta(1,1), a Beta(\(\alpha,\beta\)) prior corresponds to \(\alpha-1\) prior “successes” and \(\beta-1\) “failures” observed historically.

– If using fixed-effect pooling (homogeneous case): We might set \(\alpha = x_{\text{total}} + 1\) and \(\beta = n_{\text{total}} - x_{\text{total}} + 1\) (assuming a flat base prior Beta(1,1)), where \(x_{\text{total}}=\sum_{i}x_i\) and \(n_{\text{total}}=\sum_{i}n_i\). This yields a Beta prior with mean \(\frac{\alpha}{\alpha+\beta} \approx \frac{x_{\text{total}}}{n_{\text{total}}} = \bar p\) and a variance that reflects the binomial variation from the aggregated data. Essentially, we treat all historical data as one big trial. For example, if across 3 studies there were 50 events out of 200 patients, the prior could be Beta(51,151), which has mean ≈0.25 (25% event rate). If we suspect unmodeled heterogeneity, we can deliberately widen the prior by scaling \(\alpha\) and \(\beta\) down by the same factor; this keeps the mean but reduces the effective sample size, so that we are not overconfident.

– If using random-effects (heterogeneous case): There is no single obvious closed-form prior, because the true rates vary. One simple approach is to choose a conservative (over-dispersed) Beta prior with the same mean as \(\hat p_{\text{RE}}\) but a larger variance that accounts for between-study differences. For instance, one can match moments: set the Beta’s mean to \(m = \hat p_{\text{RE}}\) and its variance to \(v = m(1-m)/(N_{\text{eff}}+1)\), i.e., \(\alpha + \beta = N_{\text{eff}}\), where \(N_{\text{eff}}\) is an “effective sample size” smaller than \(n_{\text{total}}\). \(N_{\text{eff}}\) can be chosen so that \(v\) roughly equals the total uncertainty (within-study binomial error plus between-study variance \(\tau^2\)). Another approach is to use the predictive interval from the random-effects meta-analysis: for a new study of similar size, the event rate is expected (with 95% probability) to lie in, say, [L, U]. One can then choose a Beta prior whose 95% credible interval is [L, U], thus reflecting the heterogeneity. There is some art to this, but the principle is to down-weight the historical information when heterogeneity exists: the prior becomes broader (less informative) as heterogeneity increases, reflecting greater uncertainty about the event rate in a new setting.
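The moment-matching step can be sketched in a few lines: a Beta with mean m and α + β = N_eff has variance m(1 − m)/(N_eff + 1), so N_eff = m(1 − m)/v − 1. The random-effects summary below (p_RE = 0.12, SE = 0.02, τ² = 0.0009) is hypothetical, and taking v = SE² + τ² as the "total uncertainty" is one possible choice, not the only one.

```python
def beta_from_mean_var(m: float, v: float) -> tuple[float, float]:
    """Moment-matched Beta(alpha, beta) with mean m and variance v.

    Solves v = m(1 - m)/(N_eff + 1) for N_eff = alpha + beta; requires 0 < v < m(1 - m).
    """
    if not 0.0 < m < 1.0:
        raise ValueError("mean must lie in (0, 1)")
    if not 0.0 < v < m * (1 - m):
        raise ValueError("variance must lie in (0, m(1 - m))")
    n_eff = m * (1 - m) / v - 1
    return m * n_eff, (1 - m) * n_eff


# Hypothetical random-effects summary: p_RE = 0.12, SE(p_RE) = 0.02, tau^2 = 0.0009.
# Total uncertainty taken as v = SE^2 + tau^2 (an assumption of this sketch).
m, v = 0.12, 0.02 ** 2 + 0.0009
a, b = beta_from_mean_var(m, v)
print(f"N_eff = {a + b:.1f}; prior Beta({a:.2f}, {b:.2f})")
```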

In summary, the Chi-square approach uses classical meta-analytic formulas (like \(Q\) and possibly DL random effects) to derive a Beta (or similar) prior for the event rate. Each term in these equations corresponds to either a measure of variability (\(Q\), \(\tau^2\)) or a summary of data (pooled \(\bar p\)). The Beta prior’s parameters \((\alpha,\beta)\) are interpreted in plain language as prior evidence equivalent to \(\alpha-1\) events and \(\beta-1\) non-events.

Step-by-Step Application

Collect and summarize historical data: List each prior study’s sample size (\(n_i\)) and number of events (\(x_i\)). Compute the observed event rates \(\hat p_i = x_i/n_i\) for each study.

Assess heterogeneity: Calculate Cochran’s \(Q\) statistic and its \(p\)-value (and/or \(I^2\)).

- If \(Q\) is not significant (or \(I^2\) is very low), assume the studies are consistent with a common event rate.

- If \(Q\) is significant (large heterogeneity), acknowledge that event rates differ across studies.

Choose pooling model:

- Low heterogeneity (homogeneous): Use fixed-effect pooling. Combine all events and all patients: \(x_{\text{total}}=\sum x_i\), \(n_{\text{total}}=\sum n_i\). The pooled estimate is \(\bar p = x_{\text{total}}/n_{\text{total}}\). Compute the binomial variance \(\mathrm{Var}(\bar p) = \bar p(1-\bar p)/n_{\text{total}}\) (or a confidence interval for \(\bar p\)).

- Notable heterogeneity: Use a random-effects meta-analysis. Estimate \(\tau^2\) (between-study variance) with a method such as DerSimonian-Laird. Compute a weighted average \(\hat p_{\text{RE}}\) with weights \(w_i^* = 1/(\text{Var}(\hat p_i)+\hat\tau^2)\). Also calculate an approximate 95% prediction interval for a new study’s true rate, commonly \(\hat p_{\text{RE}} \pm t_{k-2}\sqrt{\hat\tau^2 + \mathrm{SE}(\hat p_{\text{RE}})^2}\), where \(\mathrm{SE}(\hat p_{\text{RE}})^2 = 1/\sum_i w_i^*\) and \(t_{k-2}\) is the 97.5th percentile of a \(t\) distribution with \(k-2\) degrees of freedom (to reflect uncertainty in \(\hat p_{\text{RE}}\) and \(\hat\tau^2\)). This interval gives a range where we expect the new trial’s true event rate to fall, considering between-study variability; adding a typical within-study variance under the square root gives a range for its observed rate instead.

Derive the prior distribution: Translate the meta-analytic result into a prior for the event rate \(p\) in the new trial.

- If pooling fixed-effect: set a Beta prior \(\pi(p) = \mathrm{Beta}(\alpha,\beta)\) with \(\alpha = x_{\text{total}} + c\) and \(\beta = n_{\text{total}} - x_{\text{total}} + c\), where \(c\) is a small constant reflecting any initial prior (for example \(c=1\) if using Beta(1,1) as a non-informative base). This Beta has mean \(\approx \bar p\) and a 95% credible interval of roughly \(\bar p \pm 1.96\sqrt{\bar p(1-\bar p)/(n_{\text{total}}+2c+1)}\).

- If random-effects: choose a more diffuse prior to account for heterogeneity. One way is to reduce the effective sample size, e.g., \(\pi(p) = \mathrm{Beta}(\tilde\alpha,\tilde\beta)\) such that \(\tilde\alpha/(\tilde\alpha+\tilde\beta) = \hat p_{\text{RE}}\) but \(\tilde\alpha + \tilde\beta < n_{\text{total}}\) to widen the variance. Set \(\tilde\alpha = m \cdot N_{\text{eff}}\) and \(\tilde\beta = (1-m)\cdot N_{\text{eff}}\) with \(m=\hat p_{\text{RE}}\), and choose \(N_{\text{eff}}\) to achieve a desired variance (for instance, \(N_{\text{eff}} = n_{\text{total}}/(1+\text{extra variance inflation})\)), or use the predictive interval width to guide it. Another approach is to adopt a mixture prior, for example a mixture of Beta distributions each reflecting different historical studies or clusters, though this is more complex. In most cases, a single Beta with inflated variance suffices.

Validate or adjust (if necessary): It is good practice to double-check that the chosen prior makes sense. Plot the Beta prior density to see whether it reasonably covers the range of historical study estimates. If one historical study was an outlier, ensure the prior’s spread covers it, or consider excluding that study if it is not deemed relevant. Essentially, verify that the prior is neither too narrow (overconfident) nor shifted in a way that ignores important differences. If the prior seems too informative given the heterogeneity, scale back \(\alpha,\beta\) (reducing \(N_{\text{eff}}\)). Conversely, if heterogeneity was low and information might be underutilized, one can confidently use \(n_{\text{total}}\).
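The two prior-construction routes in the steps above can be compared side by side. This sketch uses a hypothetical split of the text's example (50 events in 200 patients across 3 studies), an illustrative N_eff = 50 for the inflated prior, and the rough normal-approximation interval mentioned above rather than exact Beta quantiles.

```python
import math


def fixed_effect_beta_prior(events, sizes, c=1.0):
    """Beta(x_total + c, n_total - x_total + c): all historical data as one trial."""
    x_tot, n_tot = sum(events), sum(sizes)
    return x_tot + c, n_tot - x_tot + c


def inflated_beta_prior(m, n_eff):
    """Beta(m * N_eff, (1 - m) * N_eff): same mean m, effective sample size N_eff."""
    if not 0 < m < 1 or n_eff <= 0:
        raise ValueError("need 0 < m < 1 and N_eff > 0")
    return m * n_eff, (1 - m) * n_eff


def approx_95_interval(a, b):
    """Rough normal-approximation interval mean +/- 1.96 sd for Beta(a, b)."""
    mean = a / (a + b)
    sd = math.sqrt(mean * (1 - mean) / (a + b + 1))
    return max(0.0, mean - 1.96 * sd), min(1.0, mean + 1.96 * sd)


# Hypothetical split of the text's example (50 events in 200 patients, 3 studies).
events, sizes = [15, 17, 18], [60, 70, 70]
a_fe, b_fe = fixed_effect_beta_prior(events, sizes)      # Beta(51, 151)
m = a_fe / (a_fe + b_fe)
a_re, b_re = inflated_beta_prior(m, n_eff=50)            # N_eff chosen for illustration
print("fixed-effect:", (a_fe, b_fe), approx_95_interval(a_fe, b_fe))
print("inflated:    ", (round(a_re, 2), round(b_re, 2)), approx_95_interval(a_re, b_re))
```

Both priors share the same mean; only the effective sample size, and therefore the width of the interval, differs.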

Strengths:

- Simplicity: The Chi-square approach is relatively easy to execute and explain. It uses familiar meta-analysis techniques (proportions, chi-square test) that many clinical trialists know from frequentist analysis. For example, summarizing “in 5 previous studies the event rate ranged 20–25%, with no significant heterogeneity, so we use a Beta prior centered around 23%” is intuitively understandable.

- Transparency: Each step (testing heterogeneity, pooling or not) is explicit. Investigators retain control to include or exclude certain studies based on clinical judgment (e.g. exclude a study that appears clinically different if heterogeneity is high).

- Speed: It requires minimal specialized software – one can do it with basic stats tools or even by hand for simple cases. This makes it practical in early trial planning when quick estimates are needed.

- Reasonable with homogeneous data: If historical studies truly have a common rate, this approach will produce a tight prior reflecting all the data. If heterogeneity is truly absent or very low, the Chi-square approach and the more complex MAP approach will give similar results (since modeling heterogeneity isn’t needed in that case).

Limitations:

- All-or-nothing heterogeneity handling: The reliance on a heterogeneity test makes the approach somewhat dichotomous: full pooling versus ad hoc adjustments. Cochran’s Q has low power with few studies, so one might miss heterogeneity and pool inappropriately (producing an overly narrow prior); with many studies, Q can be overly sensitive, leading one to declare heterogeneity and perhaps over-broaden the prior or even discard data. There is no continuous “partial pooling” built in; any down-weighting of data due to heterogeneity is manual and subjective (e.g., deciding an effective sample size).

- Less formal uncertainty modeling: Unlike the MAP approach, the Chi-square method does not explicitly model the distribution of true event rates across studies. As a result, the uncertainty due to between-study variability may be handled in an ad hoc manner. For instance, choosing how much to inflate the Beta prior variance when \(I^2\) is 50% can be tricky and might under- or over-estimate the true uncertainty.

- Potential bias if data differ: If one historical study’s population is slightly different (say, older patients with higher event rate), a simple pooled prior might be biased for the new trial population. The Chi-square approach doesn’t inherently account for covariates or differences in study design that could explain heterogeneity. It treats heterogeneity as noise rather than something to model. This can lead to a prior that is centered at a value not truly applicable to the new trial.

- No automatic conflict resolution: In Bayesian analysis, a prior-data conflict refers to the prior strongly disagreeing with new data. The Chi-square approach doesn’t have a mechanism to identify or mitigate this conflict beyond the initial heterogeneity test. If the new trial’s early data look very different from the prior, one must recognize this and perhaps discount the prior in an ad-hoc interim adjustment. In contrast, some Bayesian methods (like robust MAP) include heavy-tailed priors that automatically reduce influence in such conflicts.

- Not a fully Bayesian updating of evidence: One could argue the Chi-square approach is a hybrid – you use frequentist meta-analysis, then plug that into a Bayesian prior. It doesn’t use the Bayesian formalism to combine historical data and new data in one model; instead it’s a two-step approach. While this is not inherently bad, it lacks the coherence of a single model and may be suboptimal in fully leveraging information.

In short, the Chi-square approach is straightforward but can be rigid. It works well for quick summaries when data are consistent, but its ad-hoc nature in handling heterogeneity is both a strength (simple rule) and a weakness (potentially inadequate modeling).

Use the Chi-square approach when:

- Historical studies are few and similar: If you only have 1–3 prior studies and they appear clinically and statistically consistent (e.g., similar patient populations, same treatment and endpoint, and no obvious discrepancies in event rates), the Chi-square approach is attractive. With minimal heterogeneity, a simple pooled Beta prior is almost as good as a more complex model, and it’s easier to justify to stakeholders.

- A quick, rough prior is needed: Early in trial planning, you might need a ballpark prior for calculations (like sample size or Bayesian power). The Chi-square method can rapidly provide an estimate. For instance, if two past trials had 30% and 35% event rates with similar sample sizes, you might immediately propose a Beta prior around 0.33 with a certain pseudo-count, without doing a full hierarchical model analysis.

- Computational or expertise limitations: Not all teams have Bayesian experts or the software set up to run hierarchical models. The Chi-square approach can be done with basic statistical knowledge. Regulators or team members might also be more familiar with a pooled estimate and a chi-square test for heterogeneity (common in meta-analysis) than with a Bayesian hierarchical model. If you need to communicate the prior derivation simply, the Chi-square approach offers that interpretability (although regulatory agencies are also becoming familiar with MAP for historical borrowing).

However, be cautious using the Chi-square method if there is substantial heterogeneity or there are many historical studies. In those cases, the MAP approach is usually preferred.

Meta-Analytic Predictive (MAP) Approach

The Meta-Analytic Predictive (MAP) approach is a Bayesian method that formally models the historical data in a hierarchical framework, capturing both the common trend and the between-study heterogeneity. Instead of pooling or making yes/no decisions about heterogeneity, MAP treats the true event rate in each historical study as a random draw from a population distribution. This yields a posterior predictive distribution for the event rate in a new trial – that predictive distribution is the MAP prior for the new trial’s event rate. In other words, MAP uses all historical evidence to “predict” what the new study’s event rate is likely to be, with uncertainty bands naturally widened if the historical results disagree with each other.

Situations appropriate for MAP include having multiple historical studies, especially with some heterogeneity. MAP is most useful in these more complex settings: it can borrow strength from many studies while appropriately down-weighting those that don’t agree. It is a fully Bayesian approach, in which the historical data and the new data are linked through a hierarchical model. Conceptually, it’s like saying: “We have a distribution of possible event rates (learned from past trials). Let’s use that distribution as our prior for the new trial’s event rate.” The approach is grounded in Bayesian meta-analysis techniques and is often implemented with MCMC simulation because of its complexity.

One big advantage is that the MAP prior inherently accounts for uncertainty at multiple levels: it recognizes both within-study uncertainty (each study’s finite sample) and between-study uncertainty (variation in true rates across studies). The resulting prior is “predictive” in the sense that it represents our uncertainty about the new trial’s parameter after seeing the old trials. This method is particularly useful when historical data are available but not identical to the new trial’s setting – MAP will downweight the influence of historical data if they are inconsistent (via a larger between-study variance).

Mathematical Formulation

At the heart of MAP is a Bayesian hierarchical model. Let’s define parameters and distributions for a binary outcome (event rate):

Data level (likelihood): For each historical study \(i\) (\(i=1,\dots,k\)), denote the true event rate by \(\theta_i\). We observe \(x_i\) events out of \(n_i\) in that study and model this as \(x_i \sim \text{Binomial}(n_i,\, \theta_i)\): given the true event rate \(\theta_i\), the number of events follows a binomial distribution. This is the standard likelihood for each study’s data.

Parameter level (between-trial distribution): Now we impose a model on the vector of true rates \((\theta_1,\theta_2,\dots,\theta_k)\) to capture heterogeneity. A common choice is to assume the \(\theta_i\) are distributed around some overall mean. There are a couple of ways to specify this:

- Beta-Binomial hierarchical model: Assume each \(\theta_i\) is a draw from a Beta distribution, \(\theta_i \sim \text{Beta}(\alpha,\; \beta)\), with unknown hyperparameters \(\alpha,\beta\) to be estimated. This encodes the belief that the event rate varies across studies according to a Beta distribution. The Beta’s mean \(\frac{\alpha}{\alpha+\beta}\) can be thought of as the “typical” event rate, and its variance \(\frac{\alpha\beta}{(\alpha+\beta)^2(\alpha+\beta+1)}\) captures the between-study variability. For a given mean, the spread is governed by \(\alpha+\beta\): if \(\alpha+\beta\) is large, the Beta is tight (low heterogeneity); if \(\alpha+\beta\) is small, the Beta is broad (high heterogeneity).

- Logistic-normal hierarchical model: Alternatively, assume \[\text{logit}(\theta_i) = \ln\frac{\theta_i}{1-\theta_i} \sim \mathcal{N}(\mu,\; \tau^2).\] Here \(\mu\) is the overall average log-odds of the event and \(\tau^2\) is the between-study variance on the log-odds scale. This is analogous to the classical random-effects meta-analysis assumption (normally distributed effects), but fully in a Bayesian context. The pair \((\mu,\tau^2)\) are the hyperparameters to be estimated. Because it works on the unbounded log-odds scale, the logistic-normal model is flexible and accommodates \(\theta_i\) near 0 or 1 naturally.

Both approaches serve the same purpose: they introduce hyperparameters (like \(\alpha,\beta\) or \(\mu,\tau\)) that govern the distribution of true event rates across studies. Let’s use a generic notation \(\psi\) for the set of hyperparameters (either \(\{\alpha,\beta\}\) or \(\{\mu,\tau\}\), etc.). The hierarchical model can be written abstractly as \[ p(\theta_1,\dots,\theta_k,\theta_{\text{new}}\mid \psi) = \Big[\prod_{i=1}^k p(\theta_i \mid \psi)\Big]\; p(\theta_{\text{new}} \mid \psi),\] where \(p(\theta_i \mid \psi)\) is the distribution of a true rate given the hyperparameters (Beta or logistic-normal), and \(\theta_{\text{new}}\) (the new study’s true event rate) is included as another draw from the same distribution, since the new trial is considered exchangeable with the historical ones a priori. We use the historical data to learn about \(\psi\), and then infer \(p(\theta_{\text{new}})\) from that.

Hyperprior level: We must specify priors for the hyperparameters \(\psi\). These are chosen to be relatively non-informative or weakly informative, because we want the historical data to drive the estimates. For example, if using \((\alpha,\beta)\), one might put independent vague priors on them (like \(\alpha,\beta \sim \text{Gamma}(0.01,0.01)\) or something that is nearly flat over plausible range). If using \((\mu,\tau)\), one might choose \(\mu \sim \mathcal{N}(0, 10^2)\) (a wide normal for log-odds, implying a broad guess for event rate around 50% with large uncertainty) and \(\tau \sim \text{Half-Normal}(0, 2^2)\) or a Half-Cauchy – a prior that allows substantial heterogeneity but is not overly informative. These choices should be made carefully, often guided by previous knowledge or defaults from literature (for instance, half-normal with scale 1 on \(\tau\) is a common weak prior for heterogeneity on log-odds).

Given this three-level model (data, parameters, hyperparameters), we use Bayesian inference to combine the information:

Posterior with historical data: We apply Bayes' rule to update our beliefs about \(\psi\) (and the \(\theta_i\), \(i=1,\dots,k\)) after seeing the historical outcomes \(x_1,\dots,x_k\). The joint posterior is \[ p(\theta_1,\dots,\theta_k, \psi \mid x_1,\dots,x_k) \propto \left[\prod_{i=1}^k \underbrace{ \binom{n_i}{x_i} \theta_i^{x_i}(1-\theta_i)^{n_i-x_i} }_{\text{Binomial likelihood for study } i} \underbrace{p(\theta_i \mid \psi)}_{\text{Beta or logit-normal}} \right] \; \underbrace{p(\psi)}_{\text{hyperprior}}.\] In practice we rarely need to write this out explicitly: MCMC software samples from this posterior directly. The key point is that the posterior captures what we have learned about \(\psi\) (the overall rate and the heterogeneity) from the data.
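As an illustration of what MCMC software evaluates, the sketch below computes the unnormalized log of this joint posterior for the logistic-normal model. It works with \(\eta_i = \text{logit}(\theta_i)\) instead of \(\theta_i\): this is a reparameterization (the density of \(\theta_i\) picks up a Jacobian factor), so \(p(\eta_i \mid \mu,\tau)\) is simply \(\mathcal{N}(\mu,\tau^2)\) and the model is unchanged. The hyperpriors \(\mu \sim \mathcal{N}(0, 2^2)\) and \(\tau \sim \text{Half-Normal}(0, 1)\) and the data are assumptions for the example.

```python
import math


def log_binom_logit(x, n, eta):
    """log Binomial(x | n, expit(eta)), computed stably on the log-odds scale."""
    log_coef = math.lgamma(n + 1) - math.lgamma(x + 1) - math.lgamma(n - x + 1)
    log_theta = -math.log1p(math.exp(-eta))      # log(expit(eta))
    log_one_minus = -math.log1p(math.exp(eta))   # log(1 - expit(eta))
    return log_coef + x * log_theta + (n - x) * log_one_minus


def log_normal_pdf(v, mean, sd):
    return -0.5 * math.log(2.0 * math.pi) - math.log(sd) - 0.5 * ((v - mean) / sd) ** 2


def log_joint_posterior(eta, mu, tau, x, n, mu_sd=2.0, tau_scale=1.0):
    """Unnormalized log p(eta_1..eta_k, mu, tau | x) for the logistic-normal model.

    Assumed hyperpriors: mu ~ N(0, mu_sd^2), tau ~ Half-Normal(0, tau_scale^2).
    """
    if tau <= 0.0:
        return -math.inf
    lp = log_normal_pdf(mu, 0.0, mu_sd)                        # p(mu)
    lp += math.log(2.0) + log_normal_pdf(tau, 0.0, tau_scale)  # p(tau), half-normal
    for eta_i, x_i, n_i in zip(eta, x, n):
        lp += log_binom_logit(x_i, n_i, eta_i)  # Binomial likelihood for study i
        lp += log_normal_pdf(eta_i, mu, tau)    # between-study distribution
    return lp


# Hypothetical data: 3 studies with 100 patients each.
x, n = [20, 18, 25], [100, 100, 100]
eta_obs = [math.log(xi / (ni - xi)) for xi, ni in zip(x, n)]
print(log_joint_posterior(eta_obs, mu=-1.3, tau=0.3, x=x, n=n))
print(log_joint_posterior([0.0, 0.0, 0.0], mu=-1.3, tau=0.3, x=x, n=n))
```

The first value (study rates near the observed ones) is much larger than the second (all study rates at 50%), as expected.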

Posterior predictive for the new study's rate (MAP prior): The MAP prior for the new trial's event rate \(\theta_{\text{new}}\) is its posterior predictive distribution given the historical data: \[\pi_{\text{MAP}}(\theta_{\text{new}} \mid \text{historical data}) = \int p(\theta_{\text{new}} \mid \psi)\; p(\psi \mid x_1,\dots,x_k)\, d\psi.\] This integral averages over the uncertainty in the hyperparameters \(\psi\), as described by their posterior, to predict \(\theta_{\text{new}}\). In plainer language: having learned a distribution of plausible event rates from the past studies, we derive the implied distribution for a new study's rate by integrating out our uncertainty about the overall mean and the heterogeneity. The result \(\pi_{\text{MAP}}(\theta_{\text{new}} \mid \text{data})\) is the informative prior for \(\theta_{\text{new}}\) in the new trial's analysis. Once the new data \(y_{\text{new}}\) are observed, the posterior for \(\theta_{\text{new}}\) is, as usual, proportional to \(p(y_{\text{new}} \mid \theta_{\text{new}})\,\pi_{\text{MAP}}(\theta_{\text{new}})\).
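The integral is usually evaluated by Monte Carlo: for each posterior draw \((\mu^{(s)},\tau^{(s)})\), draw one \(\theta_{\text{new}}^{(s)}\) from \(p(\theta_{\text{new}} \mid \mu^{(s)},\tau^{(s)})\). The sketch below does this for the logistic-normal model. The "posterior" draws it uses are invented stand-ins purely for illustration, not the output of a fitted model, and `map_prior_draws` is a hypothetical helper name.

```python
import math
import random


def map_prior_draws(mu_draws, tau_draws, rng):
    """Monte Carlo approximation of the MAP integral for the logistic-normal model.

    For each posterior draw (mu^(s), tau^(s)), draw eta ~ N(mu^(s), tau^(s)^2) and
    set theta_new^(s) = expit(eta). The resulting values are a sample from pi_MAP.
    """
    out = []
    for mu, tau in zip(mu_draws, tau_draws):
        eta = rng.gauss(mu, tau)
        out.append(1.0 / (1.0 + math.exp(-eta)))
    return out


rng = random.Random(7)
# Stand-in "posterior" draws, invented for illustration (not from a fitted model).
mu_draws = [rng.gauss(-1.4, 0.15) for _ in range(5000)]
tau_draws = [abs(rng.gauss(0.0, 0.4)) for _ in range(5000)]
theta_new = map_prior_draws(mu_draws, tau_draws, rng)
print(sum(theta_new) / len(theta_new))
```

Because each \(\theta_{\text{new}}\) draw uses its own \((\mu,\tau)\) draw, uncertainty in both the overall rate and the heterogeneity flows into the resulting sample.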

A closed-form expression for \(\pi_{\text{MAP}}(\theta_{\text{new}})\) is rarely available, but it can still be characterized. Under the Beta-Binomial model, for example, it is a continuous mixture of \(\text{Beta}(\alpha,\beta)\) densities weighted by the posterior of \((\alpha,\beta)\); if that posterior is approximated on a discrete grid, the MAP prior becomes a finite mixture of Betas. More generally, one uses MCMC samples: each MCMC draw of \(\psi\) produces a draw of \(\theta_{\text{new}}\) from \(p(\theta_{\text{new}} \mid \psi)\), and together these draws form a Monte Carlo representation of \(\pi_{\text{MAP}}(\theta_{\text{new}})\). This distribution is often approximated by a convenient parametric form (e.g., a Beta distribution or a mixture of Betas) for easier communication. For instance, one might find that \(\pi_{\text{MAP}}\) is roughly Beta(20, 80) (purely as an illustration), or a mixture such as 0.7·Beta(15, 60) + 0.3·Beta(3, 12) if there is bimodality or excess variance. Tools such as the R package RBesT fit a parametric mixture to the MCMC output using an expectation-maximization (EM) algorithm.

To describe each term in plain language:

- \(\theta_i\) = the true event rate in historical study \(i\).

- \(\psi\) = hyperparameters (such as the overall mean rate and the heterogeneity) describing how the \(\theta_i\) values are distributed across studies.

- \(p(\theta_i \mid \psi)\) = the model for variability in event rates between studies (e.g., “the \(\theta_i\) are around 0.3 with a between-study standard deviation of 0.05”).

- \(p(\psi)\) = prior belief about the overall rate and heterogeneity before seeing the historical data (usually vague).

- \(p(\psi \mid x_1,\dots,x_k)\) = updated belief about those hyperparameters after seeing the historical results (e.g., after 5 studies, we might infer that the typical event rate is about 30% and that there is moderate heterogeneity, with \(\tau \approx 0.4\) on the log-odds scale).

- \(\pi_{\text{MAP}}(\theta_{\text{new}} \mid \text{data})\) = the distribution of \(\theta_{\text{new}}\) obtained by averaging \(p(\theta_{\text{new}} \mid \psi)\) over the possible values of \(\psi\), each weighted by how plausible it is given the historical data. If the historical studies were very consistent, \(p(\psi \mid \text{data})\) will be concentrated on small \(\tau\) and \(\pi_{\text{MAP}}(\theta_{\text{new}})\) will be concentrated around the common rate. If they were inconsistent, \(p(\psi \mid \text{data})\) will favor larger \(\tau\) and \(\pi_{\text{MAP}}(\theta_{\text{new}})\) will be broader, reflecting our uncertainty about what the new rate will be.

To make this concrete, imagine that 3 historical studies in similar settings had event rates of 10%, 20%, and 30%. A MAP model treats these as random draws from a common distribution. It might infer an overall average of about 20% with substantial heterogeneity. The MAP prior for a new study's rate would then be centered near 20% but quite wide (e.g., a 95% interval of perhaps 5% to 40%). Compare this with chi-square pooling: the chi-square test might flag heterogeneity, and if one pooled naively anyway, the result would be an estimate near 20% plus some ad hoc allowance for the extra variability. The MAP approach produces the wide interval in a principled way. If instead all 3 studies had event rates of about 20%, the inferred heterogeneity would be low and the MAP prior would be tightly concentrated around 20%.
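The two scenarios can be reproduced with a small grid computation under the Beta-Binomial model, where \(\theta_i\) integrates out analytically. The sketch below assumes 100 patients per study (the text gives only rates) and a hyperprior that is uniform over a grid of the mean \(m = \alpha/(\alpha+\beta)\) and of \(\log(\alpha+\beta)\) between 1 and 1000; both are illustrative assumptions. The output is a finite Beta mixture, i.e., the grid version of the MAP prior.

```python
import math


def log_beta(a, b):
    """log of the Beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def map_prior_grid(x, n, n_mean=99, n_conc=60):
    """Grid approximation of the MAP prior under the Beta-Binomial model.

    Grid: m = alpha/(alpha+beta) in (0, 1) and s = alpha+beta log-spaced from 1 to 1000,
    with equal prior weight per grid point (assumed hyperprior). theta_i integrates out:
        p(x_i | alpha, beta) = C(n_i, x_i) B(alpha + x_i, beta + n_i - x_i) / B(alpha, beta).
    Returns pi_MAP as a finite Beta mixture: a list of (weight, alpha, beta).
    """
    comps, logw = [], []
    for i in range(1, n_mean + 1):
        m = i / (n_mean + 1)
        for j in range(n_conc):
            s = 10.0 ** (3.0 * j / (n_conc - 1))
            a, b = m * s, (1.0 - m) * s
            # Binomial coefficients do not depend on (a, b), so they cancel on normalizing.
            ll = sum(log_beta(a + xi, b + ni - xi) - log_beta(a, b) for xi, ni in zip(x, n))
            comps.append((a, b))
            logw.append(ll)
    top = max(logw)
    w = [math.exp(v - top) for v in logw]
    total = sum(w)
    return [(wi / total, a, b) for wi, (a, b) in zip(w, comps)]


def mixture_mean_sd(mix):
    """Mean and standard deviation of a Beta mixture."""
    mean = sum(w * a / (a + b) for w, a, b in mix)
    second = sum(w * a * (a + 1) / ((a + b) * (a + b + 1)) for w, a, b in mix)
    return mean, math.sqrt(second - mean ** 2)


# Hypothetical sample sizes of 100 per study for the two scenarios in the text.
spread = map_prior_grid([10, 20, 30], [100, 100, 100])
consistent = map_prior_grid([20, 20, 20], [100, 100, 100])
print("rates 10/20/30%%: mean %.3f, sd %.3f" % mixture_mean_sd(spread))
print("rates 20/20/20%%: mean %.3f, sd %.3f" % mixture_mean_sd(consistent))
```

Both MAP priors are centered near 20%, but the one built from the inconsistent studies is clearly wider, matching the qualitative description above.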

Step-by-Step Application

Assemble historical data: As before, gather the event counts \(x_i\) and sample sizes \(n_i\) of all relevant historical trials for the event of interest. Consider the inclusion criteria carefully: the studies should be sufficiently similar to the new trial's context (patient population, event definitions, etc.), because the MAP model will faithfully combine whatever data it is given. If a markedly different study is included, the model will accommodate it through larger heterogeneity, which widens the MAP prior and reduces the information borrowed from all studies (sometimes that is warranted; other times it is better to exclude the study). In short, garbage in, garbage out: select historical data that you genuinely consider exchangeable with the new trial apart from random between-study variation.

Specify the hierarchical model: Choose a parametric form for the between-trial variability and assign priors to its parameters. For example, decide between a Beta and a logistic-normal model for \(\theta_i\). Suppose we choose the logistic-normal random-effects model (commonly used for meta-analysis of proportions). We might then specify priors such as:

- \(\mu \sim \mathcal{N}(0, 2^2)\) (a prior guess that the overall event rate is around 50%, with 2 standard deviations on either side covering rates from about 2% to 98%; the center can be shifted if there is prior expert belief about the general rate).

- \(\tau \sim \text{Half-Normal}(0, 1^2)\) or perhaps \(\tau \sim \text{Half-Cauchy}(0, 1)\) (weakly informative priors that allow heterogeneity without putting too much mass on extreme values; for reference, \(\tau\) around 1 on the log-odds scale already means quite large heterogeneity). If using the Beta-Binomial model instead, one might take \(\alpha,\beta \sim \text{Uniform}(0,10)\) or a similarly moderate prior. Priors can also be placed on the between-study variance directly (e.g., \(\tau^2 \sim \text{Inverse-Gamma}\)), but modern Bayesian practice often prefers the more interpretable priors on \(\tau\).
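Since \(\text{expit}\) is monotone, log-odds quantiles map directly to rate quantiles, which makes these priors easy to translate into statements about event rates. The sketch below checks the range quoted for the \(\mu\) prior and shows how much study-to-study spread \(\tau = 1\) implies around a typical rate of 20%; the 20% center and the helper name `central_rate_range` are illustrative assumptions.

```python
import math


def expit(eta):
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-eta))


def central_rate_range(center, sd, z=1.96):
    """Central range of expit(eta) for eta ~ N(center, sd^2), cut at +/- z SDs.

    expit is monotone, so the log-odds quantiles map directly to rate quantiles.
    """
    return expit(center - z * sd), expit(center + z * sd)


# Prior mu ~ N(0, 2^2): rates within 2 prior SDs of the center.
print(central_rate_range(0.0, 2.0, z=2.0))
# Spread of true study rates implied by tau = 1 around a typical rate of 20% (hypothetical).
print(central_rate_range(math.log(0.2 / 0.8), 1.0))
```

The first line gives about (0.018, 0.982). The second shows that with \(\tau = 1\), the central 95% of true study rates runs from roughly 3% to 64%, which is why \(\tau \approx 1\) counts as large heterogeneity.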

Sensitivity analysis is important here: because the MAP prior will be used as a prior, examine how different reasonable hyperpriors affect the result, especially when data are sparse. With a moderate number of historical studies, the influence of the hyperprior is usually minor; note that it is the number of studies, rather than the number of patients, that determines how well \(\tau\) can be estimated.

Fit the model to historical data: Using Bayesian software (Stan, JAGS, BUGS, or specialized R packages such as RBesT or bayesmeta), perform posterior sampling or approximation. Essentially, you supply \(\{x_i, n_i\}\) and obtain posterior draws of \((\mu,\tau)\) (or \((\alpha,\beta)\)) and, optionally, of each \(\theta_i\). Check the model fit by asking whether the posterior predicts the observed data well, for example with posterior predictive checks on each study's event count. If one study is extremely improbable under the model, that may indicate model misspecification or an outlying study (consider a more robust model or excluding that study). Usually, though, this step is straightforward with modern tools, and it yields MCMC samples from \(p(\psi \mid \text{data})\).
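For intuition about what "posterior sampling" does here, the sketch below is a bare-bones, component-wise random-walk Metropolis sampler for the logistic-normal model, written with the Python standard library only. It is a swapped-in teaching device, not how Stan or JAGS work internally (Stan, for instance, uses Hamiltonian Monte Carlo), and it has no convergence diagnostics. The hyperpriors \(\mu \sim \mathcal{N}(0, 2^2)\) and \(\tau \sim \text{Half-Normal}(0, 1)\), the step size, and the data are assumptions.

```python
import math
import random


def log_post(eta, mu, log_tau, x, n):
    """Unnormalized log posterior, parameterized by eta_i = logit(theta_i) and log(tau).

    Assumed hyperpriors: mu ~ N(0, 2^2), tau ~ Half-Normal(0, 1). Sampling log(tau)
    adds the Jacobian term log(tau). Constants are dropped.
    """
    tau = math.exp(log_tau)
    lp = -0.5 * (mu / 2.0) ** 2 - 0.5 * tau ** 2 + log_tau
    for e, xi, ni in zip(eta, x, n):
        # Binomial log-likelihood on the log-odds scale (coefficient dropped).
        lp -= xi * math.log1p(math.exp(-e)) + (ni - xi) * math.log1p(math.exp(e))
        # eta_i ~ N(mu, tau^2)
        lp -= log_tau + 0.5 * ((e - mu) / tau) ** 2
    return lp


def metropolis(x, n, iters=10000, burn=2000, step=0.3, seed=0):
    """Component-wise random-walk Metropolis; returns post-burn-in draws of (mu, tau)."""
    rng = random.Random(seed)
    k = len(x)
    # Start at the empirical log-odds (0.5 continuity correction) and a moderate tau.
    eta = [math.log((xi + 0.5) / (ni - xi + 0.5)) for xi, ni in zip(x, n)]
    mu, log_tau = sum(eta) / k, math.log(0.5)
    cur = log_post(eta, mu, log_tau, x, n)
    draws = []
    for it in range(iters):
        for idx in range(k + 2):  # update eta_1..eta_k, then mu, then log(tau)
            new_eta, new_mu, new_lt = list(eta), mu, log_tau
            if idx < k:
                new_eta[idx] += rng.gauss(0.0, step)
            elif idx == k:
                new_mu += rng.gauss(0.0, step)
            else:
                new_lt += rng.gauss(0.0, step)
            prop = log_post(new_eta, new_mu, new_lt, x, n)
            # Symmetric proposal: accept with probability min(1, exp(prop - cur)).
            if prop >= cur or rng.random() < math.exp(prop - cur):
                eta, mu, log_tau, cur = new_eta, new_mu, new_lt, prop
        if it >= burn:
            draws.append((mu, math.exp(log_tau)))
    return draws


# Hypothetical historical data: 5 studies of 100 patients each.
draws = metropolis([20, 18, 25, 22, 19], [100] * 5)
mean_rate = sum(1.0 / (1.0 + math.exp(-mu)) for mu, _ in draws) / len(draws)
print(len(draws), round(mean_rate, 3))
```

In a real analysis the same \((\mu,\tau)\) draws would come from established software, along with diagnostics such as trace plots and \(\hat{R}\).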

Obtain the MAP prior (posterior predictive for the new study): Extract the predictive distribution for \(\theta_{\text{new}}\). With MCMC, for each saved draw \((\mu^{(s)},\tau^{(s)})\), draw \(\theta_{\text{new}}^{(s)} \sim p(\theta_{\text{new}} \mid \mu^{(s)},\tau^{(s)})\). This gives a large sample of \(\theta_{\text{new}}\) values from the MAP prior. Then summarize this distribution:

- Compute its mean (this is the MAP prior’s expected value for the event rate, a kind of weighted average of historical rates).

- Compute credible intervals (e.g., 95% interval from the 2.5th to 97.5th percentile of the draws). This interval is essentially the Bayesian prediction interval for a new study’s true rate, incorporating uncertainty in both \(\mu\) and \(\tau\).

- Plot the density or histogram to see its shape. It may be roughly bell-shaped on the probability scale, skewed, or even multimodal if the data fall into clusters. If it is multimodal (say, two groups of studies had different rates), the MAP prior may show two bumps. That is acceptable: it reflects genuine ambiguity (perhaps two subpopulations). One can either embrace it (explicitly use a mixture prior) or refine the model (for example, by adding covariates or splitting the meta-analysis by known differences).
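The three summaries can be sketched in a few lines, given a sample of \(\theta_{\text{new}}\) draws. The example below computes the mean, a central 95% interval from order statistics, and a crude text histogram in place of a plot. The draws are a hypothetical logit-normal stand-in, not output from a fitted model, and the helper names are illustrative.

```python
import math
import random


def summarize(draws, level=0.95):
    """Mean and central credible interval (simple order-statistic quantiles)."""
    s = sorted(draws)
    last = len(s) - 1
    lo = s[math.floor((1.0 - level) / 2.0 * last)]
    hi = s[math.ceil((1.0 + level) / 2.0 * last)]
    return sum(s) / len(s), lo, hi


def text_histogram(draws, bins=10, width=40):
    """Crude text histogram on [0, 1]; prints bars and returns the bin counts."""
    counts = [0] * bins
    for d in draws:
        counts[min(int(d * bins), bins - 1)] += 1
    top = max(counts)
    for b, c in enumerate(counts):
        bar = "#" * round(width * c / top)
        print(f"{b / bins:.1f}-{(b + 1) / bins:.1f} {bar}")
    return counts


rng = random.Random(3)
# Stand-in MAP prior draws (hypothetical logit-normal, not from a fitted model).
theta_new = [1.0 / (1.0 + math.exp(-rng.gauss(-1.4, 0.5))) for _ in range(10000)]
mean, lo, hi = summarize(theta_new)
print(f"mean {mean:.3f}, 95% interval ({lo:.3f}, {hi:.3f})")
counts = text_histogram(theta_new)
```

With real MCMC output, the same functions apply unchanged; a skewed or two-humped histogram is the cue to consider a mixture approximation.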

Approximate with a convenient distribution (optional but recommended): For practical use in trial design or analysis, it is handy to express \(\pi_{\text{MAP}}(\theta_{\text{new}})\) in closed form. For binary endpoints a mixture of Beta distributions is common, for example a 2- or 3-component Beta mixture fitted to the MCMC sample with an EM algorithm. The result could be something like \(\pi_{\text{MAP}}(\theta) \approx 0.6\,\mathrm{Beta}(a_1,b_1) + 0.4\,\mathrm{Beta}(a_2,b_2)\). Such a mixture can then be used in standard Bayesian calculations without rerunning MCMC each time. If the MAP prior is roughly unimodal, a single Beta may suffice (obtained by matching the mean and variance of the draws). The approximation does not change the inference; it is a technical convenience. When reporting the prior, you might say, for example, “Based on the MAP analysis of 5 historical studies, the prior for the event rate in the new trial is well approximated by a Beta(18, 42) distribution.”
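The single-Beta option can be sketched with the method of moments: for \(\text{Beta}(a,b)\), the variance is \(m(1-m)/(a+b+1)\), so \(a+b = m(1-m)/\text{var} - 1\). In the sketch below, the draws are simulated from Beta(18, 42) as a stand-in for MCMC output, so the fit should recover roughly those parameters; the helper name is illustrative.

```python
import random
import statistics


def beta_from_moments(mean, var):
    """Single Beta(a, b) with the given mean and variance (method of moments).

    For Beta(a, b): var = m(1 - m) / (a + b + 1), hence a + b = m(1 - m) / var - 1.
    """
    if not (0.0 < mean < 1.0 and 0.0 < var < mean * (1.0 - mean)):
        raise ValueError("need 0 < mean < 1 and 0 < var < mean * (1 - mean)")
    s = mean * (1.0 - mean) / var - 1.0
    return mean * s, (1.0 - mean) * s


rng = random.Random(11)
# Stand-in MAP prior sample: draws from Beta(18, 42), as if they came from MCMC.
draws = [rng.betavariate(18, 42) for _ in range(20000)]
a, b = beta_from_moments(statistics.fmean(draws), statistics.variance(draws))
print(f"approximately Beta({a:.1f}, {b:.1f})")
```

Moment matching ignores shape beyond the first two moments, so it is only appropriate when the draws look unimodal; otherwise a mixture fit is the safer choice.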

Incorporate a robustness component (if needed): A practical extension is to make the prior robust to potential prior-data conflict by mixing it with a vague component: \[\pi_{\text{robust}}(\theta) = (1-w)\,\pi_{\text{MAP}}(\theta) + w\, \pi_{\text{vague}}(\theta), \qquad 0 < w < 1.\] Here \(\pi_{\text{vague}}(\theta)\) could be a flat prior such as Beta(1,1), or a weakly informative one such as Beta(2,2). A typical choice is \(w = 0.1\) or \(0.2\), i.e., 10–20% weight on the non-informative component and 80–90% on the MAP prior. If the new trial's data strongly conflict with the MAP prior, the vague component gives the prior heavier tails, so it will not pull the posterior too strongly toward the historical data. This safeguard sacrifices a little information in exchange for robustness. Whether to use it depends on how confident you are that the historical data apply. Many practitioners include a small robust component by default, since the cost (a modest loss of prior information, and hence a slightly larger required sample size) is usually worth the protection against prior-data conflict.
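The safeguard works through the posterior mixture weights. With a Beta-mixture prior and binomial data, each component updates conjugately, and its weight is rescaled by how well that component predicted the data. The sketch below uses a hypothetical robust prior \(0.8\,\text{Beta}(18,42) + 0.2\,\text{Beta}(1,1)\) and two hypothetical outcomes out of 50 patients: one in line with the prior mean of 30% and one conflicting with it.

```python
import math


def log_beta(a, b):
    """log of the Beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def update_mixture(components, y, n):
    """Posterior of a Beta-mixture prior after observing y events in n patients.

    Each Beta(a, b) component updates to Beta(a + y, b + n - y); its weight is multiplied
    by the component's marginal likelihood B(a + y, b + n - y) / B(a, b) (the binomial
    coefficient is common to all components and cancels on normalizing).
    """
    logs = [math.log(w) + log_beta(a + y, b + n - y) - log_beta(a, b)
            for w, a, b in components]
    top = max(logs)
    raw = [math.exp(v - top) for v in logs]
    total = sum(raw)
    return [(r / total, a + y, b + n - y) for r, (_, a, b) in zip(raw, components)]


# Hypothetical robust prior: 0.8 * Beta(18, 42) (MAP part) + 0.2 * Beta(1, 1) (vague part).
robust = [(0.8, 18.0, 42.0), (0.2, 1.0, 1.0)]
for y in (15, 3):  # 15/50 agrees with the ~30% prior mean; 3/50 conflicts with it
    post = update_mixture(robust, y, 50)
    print(f"y = {y}/50: posterior weight on vague component = {post[1][0]:.2f}")
```

When the data agree, the vague weight shrinks below 0.2 and the historical information is used; under conflict, it grows well above 0.2, so the posterior leans on the new data.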

Use the MAP prior in trial planning: With \(\pi_{\text{MAP}}(\theta)\) determined, you can simulate the operating characteristics of the planned trial (e.g., the probability of success under various true event rates, given the informative prior) or calculate the effective sample size (ESS) of the prior. ESS translates the information content of the prior into an equivalent number of patients. For a \(\text{Beta}(\alpha,\beta)\) prior, ESS \(= \alpha + \beta\); for mixtures it is more involved, but several methods exist to compute it. Knowing the ESS helps communicate how much historical information is being used. If the ESS is very large relative to the new trial's sample size N, regulators may be wary; a modest ESS is easier to justify. You can adjust the prior (e.g., by adding a robust component or broadening it) to reach an ESS you consider appropriate; some teams target an ESS equal to a fraction of the new trial's N so that the prior does not dominate.
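A minimal ESS sketch, assuming one simple definition for mixtures: match the mixture's mean and variance with a single Beta and report its \(a+b\). This moment-based ESS is only one of several definitions, and it can differ considerably from the others for heavy-tailed robust mixtures. The priors below are hypothetical.

```python
def beta_ess(a, b):
    """ESS of a single Beta(a, b) prior: a + b."""
    return a + b


def moment_ess(components):
    """ESS of a Beta mixture via moment matching to one Beta (one simple option).

    components: list of (weight, a, b). The mixture mean and variance are matched by a
    single Beta, whose a + b = m(1 - m) / var - 1 is returned.
    """
    mean = sum(w * a / (a + b) for w, a, b in components)
    second = sum(w * a * (a + 1) / ((a + b) * (a + b + 1)) for w, a, b in components)
    var = second - mean ** 2
    return mean * (1.0 - mean) / var - 1.0


print(beta_ess(18, 42))                          # informative MAP-style prior
print(moment_ess([(1.0, 18, 42)]))               # same prior via moments (about 60)
print(moment_ess([(0.8, 18, 42), (0.2, 1, 1)]))  # with a 20% vague component
```

Adding a 20% Beta(1,1) component drops the moment-based ESS from 60 to under 10 here, because the vague component inflates the variance; this illustrates how strongly a robust component can reduce the nominal information borrowed.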

By following these steps, the MAP approach yields a fully Bayesian prior for the new study’s event rate, rooted in a rigorous meta-analytic model of the historical data.

Strengths: