Patient Reported Outcome Visualization

1 DLQI

In this month’s dataset, DLQI has been administered in a phase 3 clinical trial to patients with psoriasis. There are two imbalanced treatment groups, with 150 patients randomised to Placebo (Treatment A) and 450 to the active treatment (Treatment B). DLQI responses are recorded at Baseline and Week 16 (although some DLQI assessment is missing at Week 16), allowing the treatment effect in terms of Quality of Life to be assessed. The Psoriasis Area and Severity Index (PASI) is also recorded at baseline.

1.1 Change in mean scores

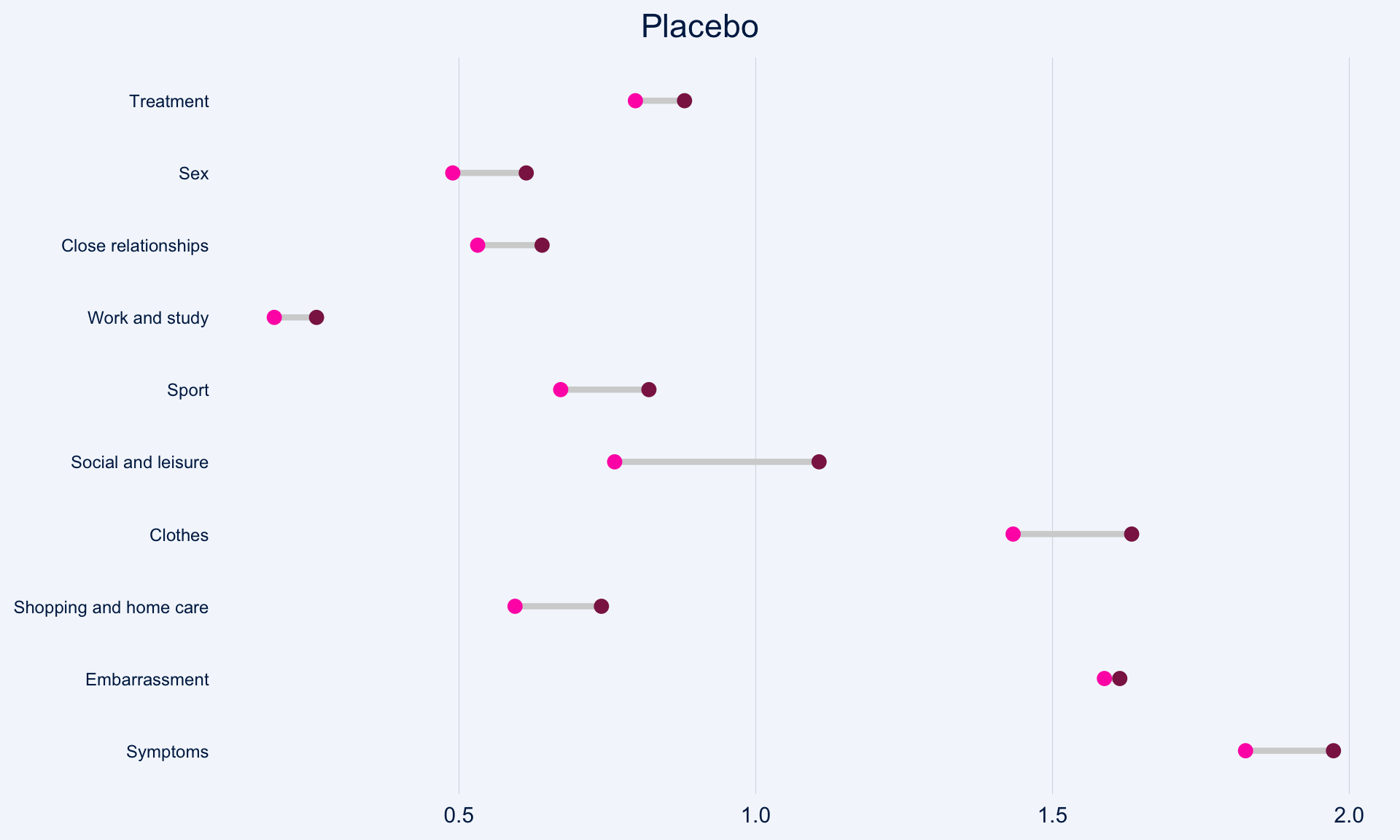

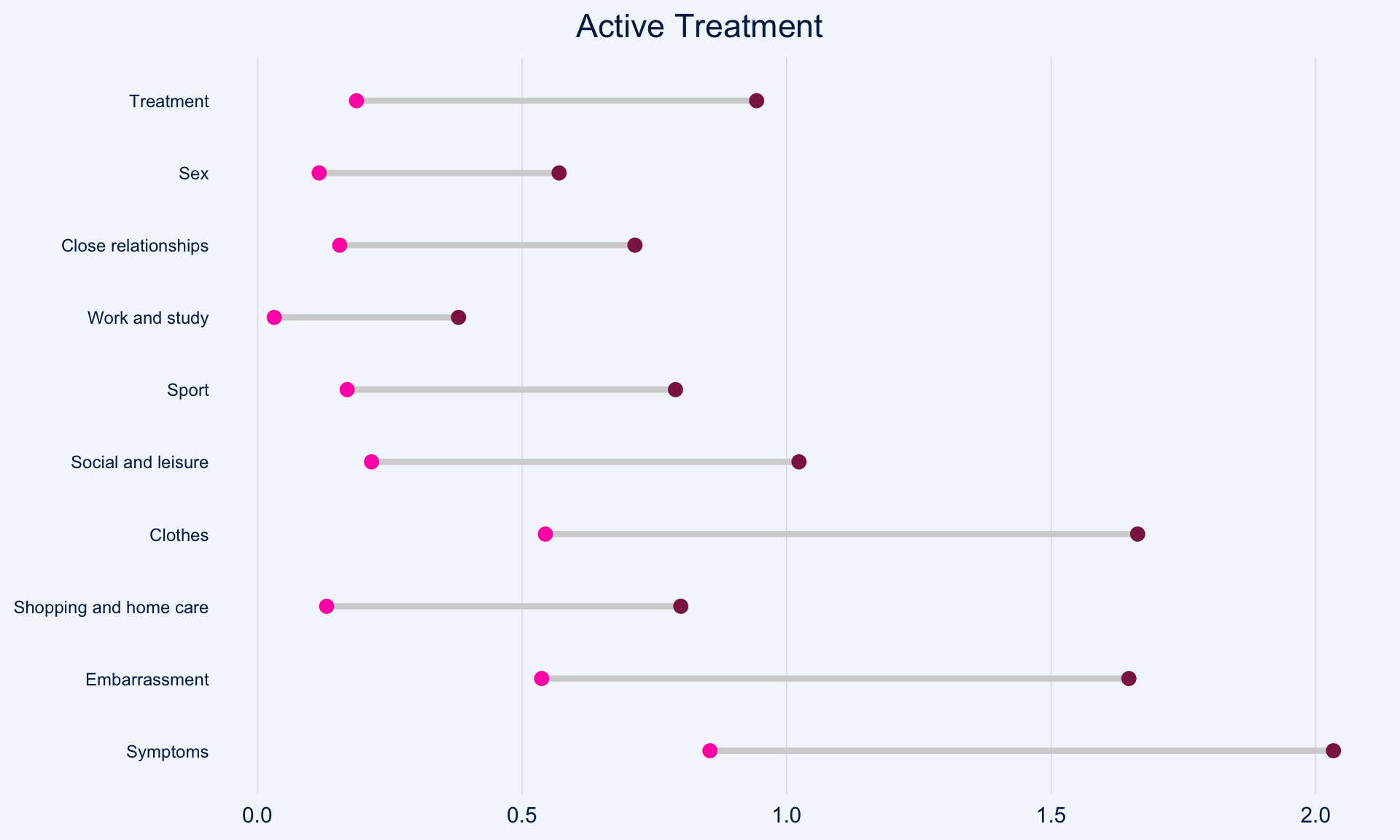

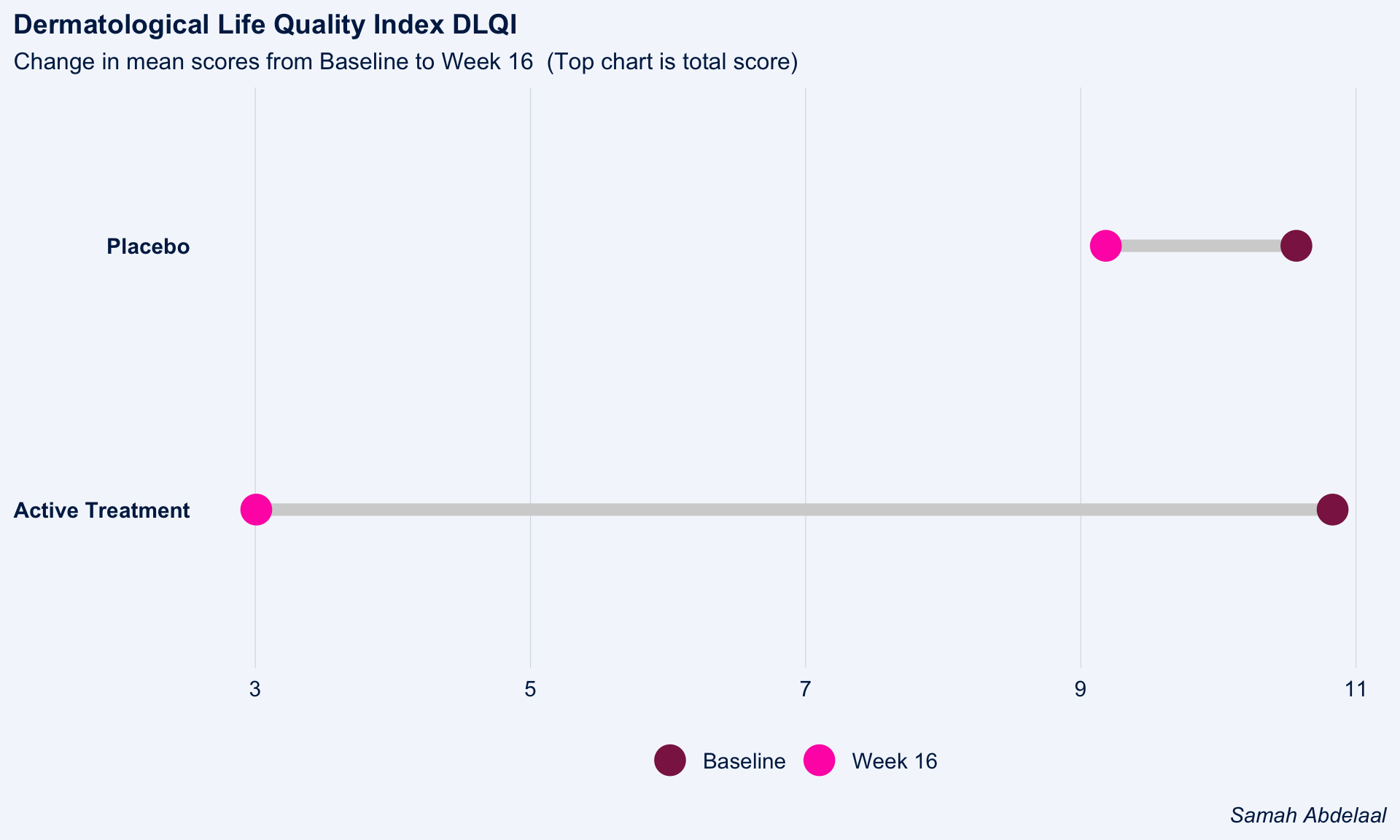

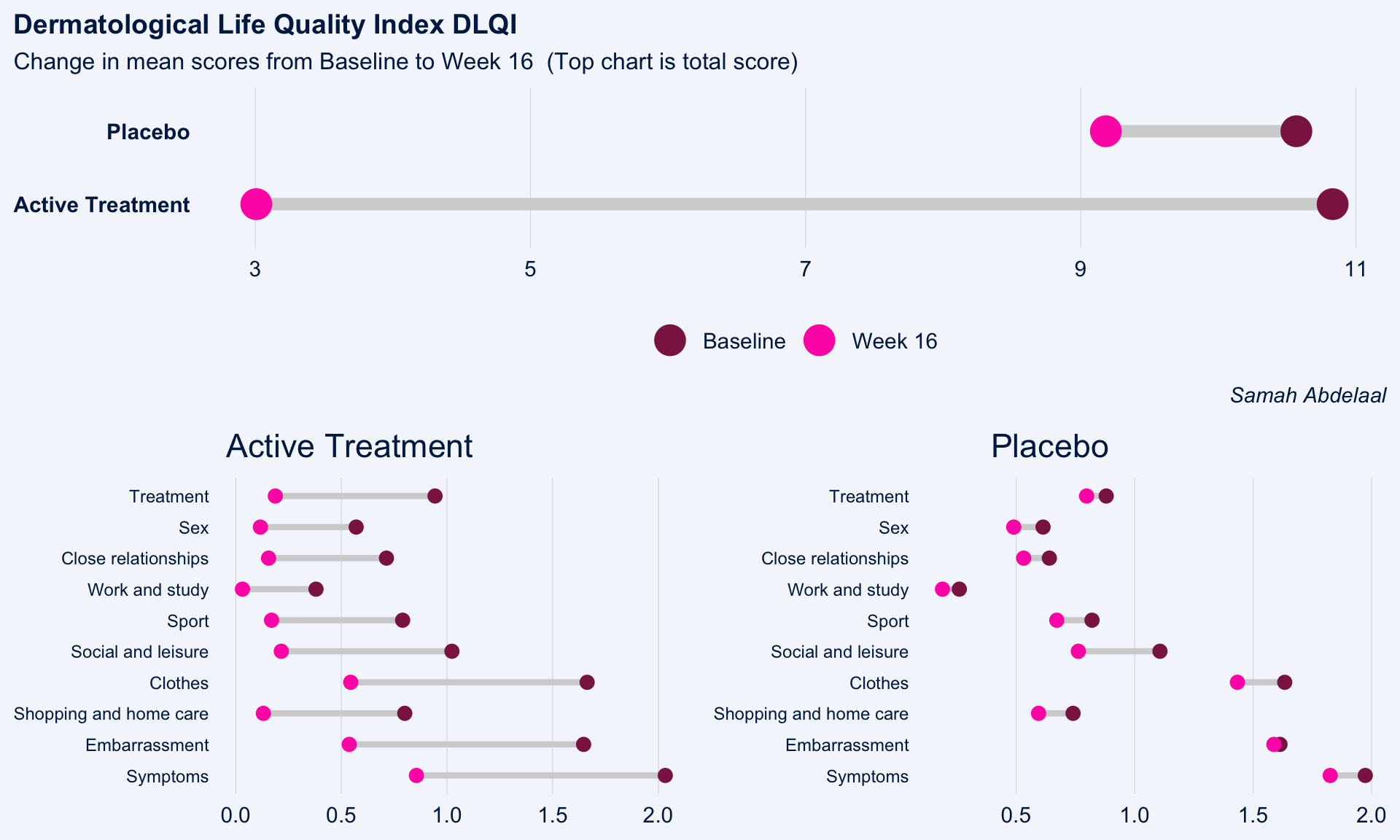

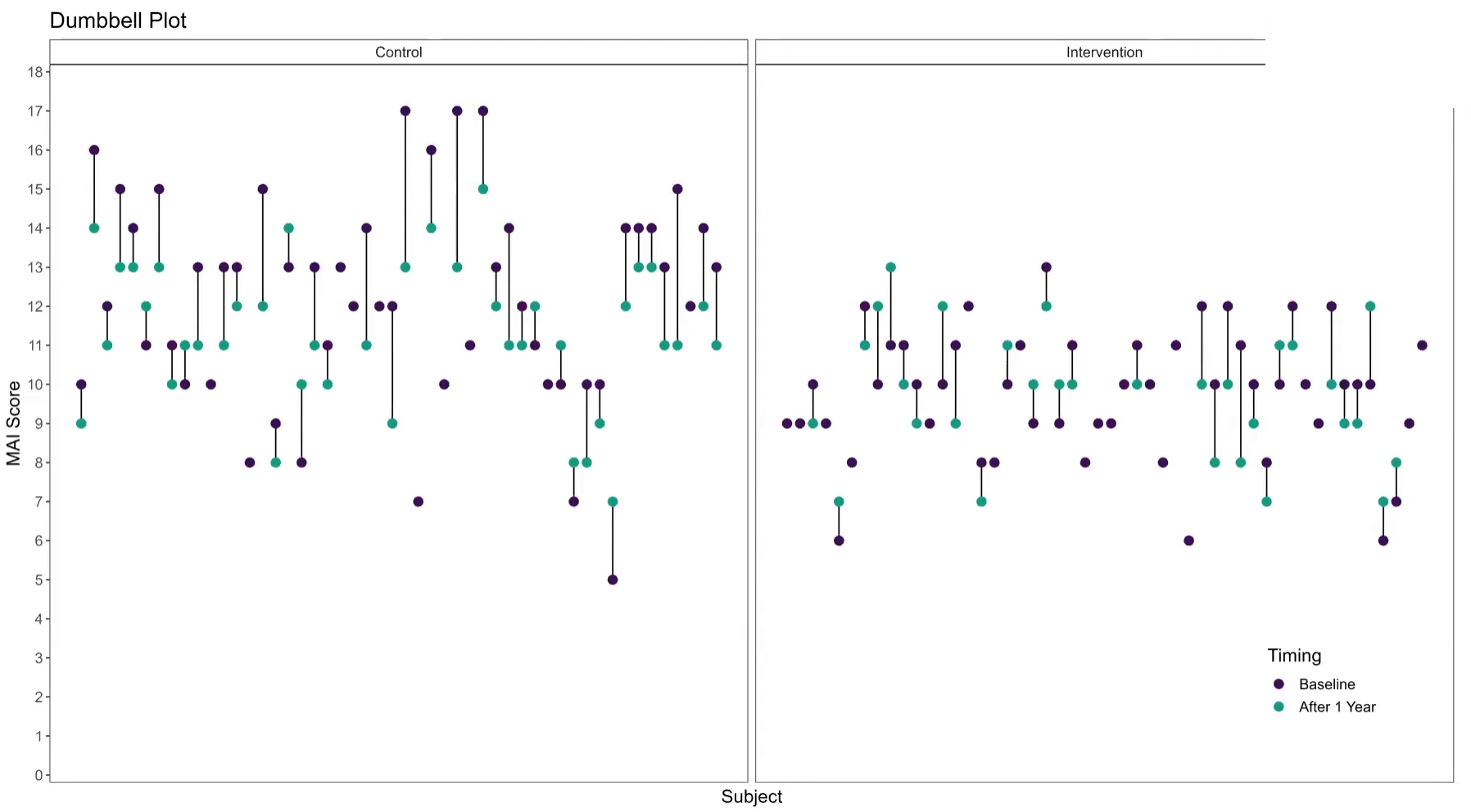

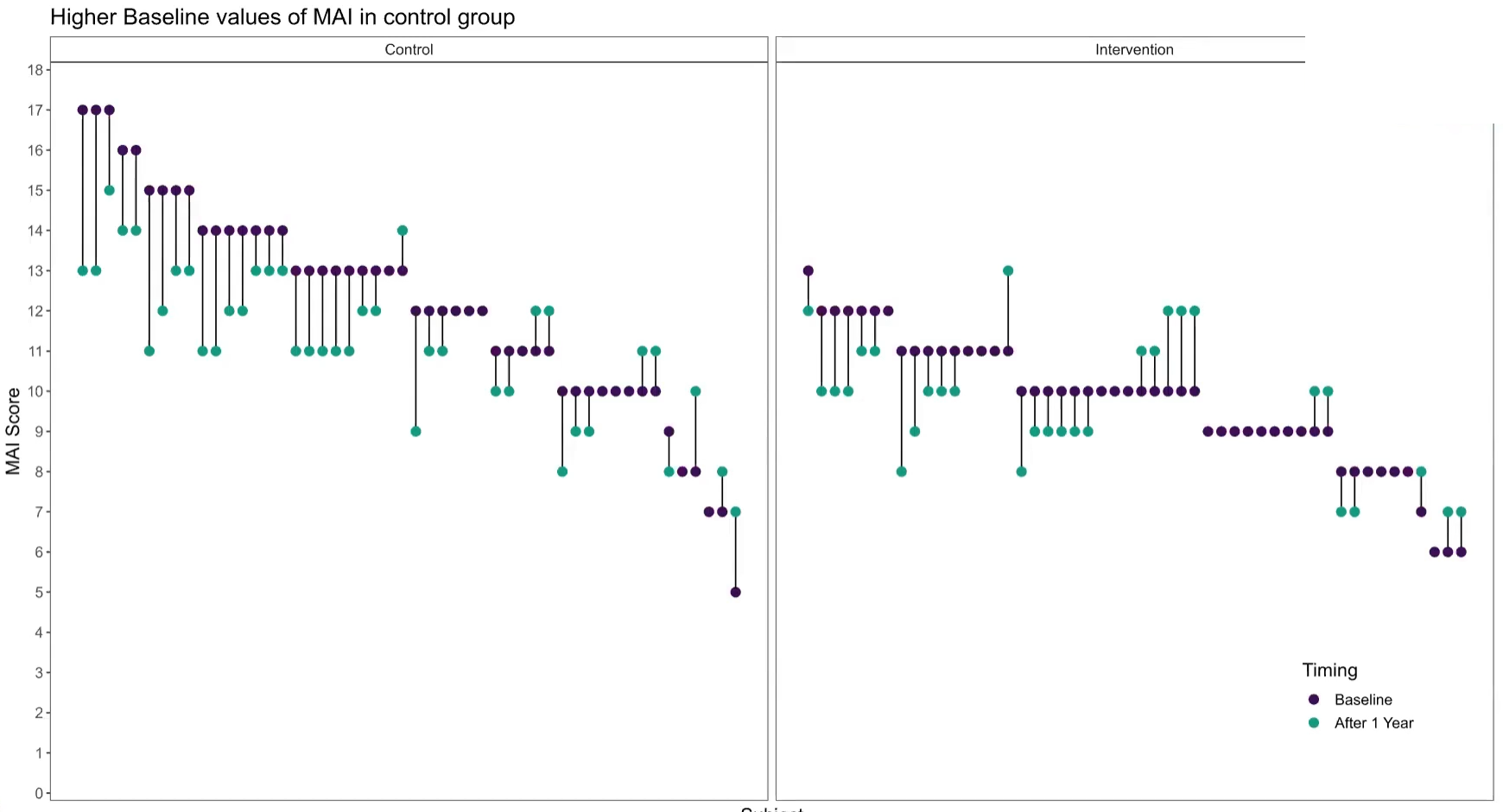

The dot plot displayed here is a great way to quickly see the effect of treatment on the different DLQI items. For each item and each group, we see mean scores for both Baseline and Week 16, which are coloured differently and consistently to allow patterns to be quickly and easily identified. Whilst it is not something commonly seen, the position of the legend in the middle of the page works really nicely and can be quickly referenced for each plot.

Overall, this is a very clean design and provides a clear message. However, there were some ways in which the panel felt that the layout of this example could be improved. Whilst it is easy to compare within treatment groups, it is not so easy to make comparisons between treatment groups here. The group proposed combining the two treatment groups in a vertical layout, possibly using different colours to differentiate between treatment groups and arrows to indicate the direction of the effect. This would also mean that labels of the different items would not need to be repeated as they currently are. This vertical structure could be accommodated by using less space for the first figure showing effect sizes on overall means. Having this so large is not necessarily a bad thing if it shows key information which needs to be emphasised, but we should be intentional about it when doing things such as this.

Unlike in the previous example, here the items are sorted in the order they appear on the questionnaire. Alternative ways to sort the items could be considered to highlight those which are most interesting, such as sorting by baseline means or by effect size. There are also a few tweaks to the horizontal axes which the group felt should be made. Firstly, it would be better to start from zero on the first dot plot, otherwise the effect size appears to be exaggerated. Further, the horizontal axes and spacing between labels should be made consistent for the two treatment groups, allowing for direct comparison between the two figures.

# Load data

dql <- read_csv("./01_Datasets/ww2020_dlqi.csv")

# Load library

library(tidyverse)

library(ggplot2)

library(ggthemes)

library(ggcharts)

library(ggalt)

# Seperate treatment arms

new_A <-

dql %>%

filter(TRT=="A")

new_B <-

dql %>%

filter(TRT=="B")

## Treatment A : Placebo

dtA <- new_A %>%

filter(if_all(everything(), ~ !is.na(.))) %>%

# Select relevant variables

select(

DLQI101, DLQI102, DLQI103, DLQI104, DLQI105,

DLQI106, DLQI107, DLQI108, DLQI109, DLQI110,

VISIT

) %>%

# Summarize mean score for each question grouped by visit

# while also renaming variables to indicate the meaning of each score

group_by(VISIT) %>%

dplyr::summarize(

Symptoms = mean(DLQI101, na.rm = T),

Embarrassment = mean(DLQI102, na.rm = T),

`Shopping and home care` = mean(DLQI103, na.rm = T),

Clothes = mean(DLQI104, na.rm = T),

`Social and leisure` = mean(DLQI105, na.rm = T),

Sport = mean(DLQI106, na.rm = T),

`Work and study` = mean(DLQI107, na.rm = T),

`Close relationships` = mean(DLQI108, na.rm = T),

Sex = mean(DLQI109, na.rm = T),

Treatment = mean(DLQI110, na.rm = T)

)

# Tidying data

dtA <-

dtA %>%

pivot_longer(

!VISIT,

names_to = "Domain",

values_to = "Mean_Score"

)

# Seperating the visit variable into baseline and week 16

dtA <-

dtA %>%

pivot_wider(

names_from = VISIT,

values_from = Mean_Score

)

# Ensuring the domain levels are ordered the same

dtA <-

dtA %>%

mutate(

Domain = factor(Domain,

levels = c("Symptoms", "Embarrassment",

"Shopping and home care",

"Clothes", "Social and leisure",

"Sport", "Work and study",

"Close relationships",

"Sex", "Treatment"))

)

# Constructing a dumbbell plot using ggalt package with a ggchart theme

(a <-

ggplot()+

geom_dumbbell(

data = dtA,

aes(

y = Domain,

x = Baseline,

xend = `Week 16`

),

size = 1.5,

color = "lightgray",

size_x = 3,

colour_x = "violetred4",

size_xend = 3,

colour_xend = "maroon1"

)

+ theme_ggcharts(grid = "Y")

+ labs(

title = "Placebo"

)

+ theme(

plot.title = element_text(hjust = 0.5),

axis.title.y = element_blank(),

axis.title.x = element_blank(),

axis.text.y = element_text(size = 9)

))

## Treatment B : Active Treatment

dtB <- new_B %>%

# Remove missing data

filter(if_all(everything(), ~ !is.na(.))) %>%

# Select relevant variables

select(

DLQI101, DLQI102, DLQI103, DLQI104, DLQI105,

DLQI106, DLQI107, DLQI108, DLQI109, DLQI110,

VISIT

) %>%

# Summarize mean score for each question grouped by visit

# while also renaming variables to indicate the meaning of each score

group_by(VISIT) %>%

summarise(

Symptoms = mean(DLQI101, na.rm = T),

Embarrassment = mean(DLQI102, na.rm = T),

`Shopping and home care` = mean(DLQI103, na.rm = T),

Clothes = mean(DLQI104, na.rm = T),

`Social and leisure` = mean(DLQI105, na.rm = T),

Sport = mean(DLQI106, na.rm = T),

`Work and study` = mean(DLQI107, na.rm = T),

`Close relationships` = mean(DLQI108, na.rm = T),

Sex = mean(DLQI109, na.rm = T),

Treatment = mean(DLQI110, na.rm = T)

)

# Tidying data

dtB <-

dtB %>%

pivot_longer(

!VISIT,

names_to = "Domain",

values_to = "Mean_Score"

)

# Seperating the visit variable into baseline and week 16

dtB <-

dtB %>%

pivot_wider(

names_from = VISIT,

values_from = Mean_Score

)

# Ensuring the domain levels are ordered the same

dtB <-

dtB %>%

mutate(

Domain = factor(Domain,

levels = c("Symptoms", "Embarrassment",

"Shopping and home care",

"Clothes", "Social and leisure",

"Sport", "Work and study",

"Close relationships",

"Sex", "Treatment"))

)

# Constructing a dumbbell plot using ggalt package with a ggchart theme

(b <-

ggplot()+

geom_dumbbell(

data = dtB,

aes(

y = Domain,

x = Baseline,

xend = `Week 16`

),

size = 1.5,

color = "lightgray",

size_x = 3,

colour_x = "violetred4",

size_xend = 3,

colour_xend = "maroon1")

+ theme_ggcharts(grid = "Y")

+ labs(

title = "Active Treatment"

) + theme(

plot.title = element_text(hjust = 0.5),

axis.title.y = element_blank(),

axis.title.x = element_blank(),

axis.text.y = element_text(size = 9)

))

## Mean total score for both treatment arms

totaldt <-

dql %>%

# Remove missing data

filter(if_all(everything(), ~ !is.na(.))) %>%

# select and summarizing relevant variables

select(DLQI_SCORE, TRT, VISIT) %>%

group_by(TRT, VISIT) %>%

summarise(

qtotal = mean(DLQI_SCORE, na.rm = T)

)

# Tidying data

totaldt <-

totaldt %>%

pivot_wider(

names_from = VISIT,

values_from = qtotal

)

totaldt$TRT[totaldt$TRT=="A"] <- "Placebo"

totaldt$TRT[totaldt$TRT=="B"] <- "Active Treatment"

# Constructing a dumbbell plot using ggcharts

(c <-

dumbbell_chart(

data = totaldt,

x = TRT,

y1 = Baseline,

y2 = `Week 16`,

line_color = "lightgray",

line_size = 3,

point_color = c("violetred4", "maroon1"),

point_size = 7

) + labs(

x = NULL,

y = NULL,

title = "Dermatological Life Quality Index DLQI",

subtitle = "Change in mean scores from Baseline to Week 16 (Top chart is total score)",

caption = "Samah Abdelaal"

) + theme(

axis.text.y = element_text(face = "bold"),

plot.title = element_text(size = 14,

face = "bold"),

plot.subtitle = element_text(size = 12),

plot.caption = element_text(size = 11,

face = "italic"),

legend.position = "bottom"

))

# Compine all three plots

library(gridExtra)

grid.arrange(c, arrangeGrob(b, a, ncol = 2), nrow = 2)

1.2 Lineplots

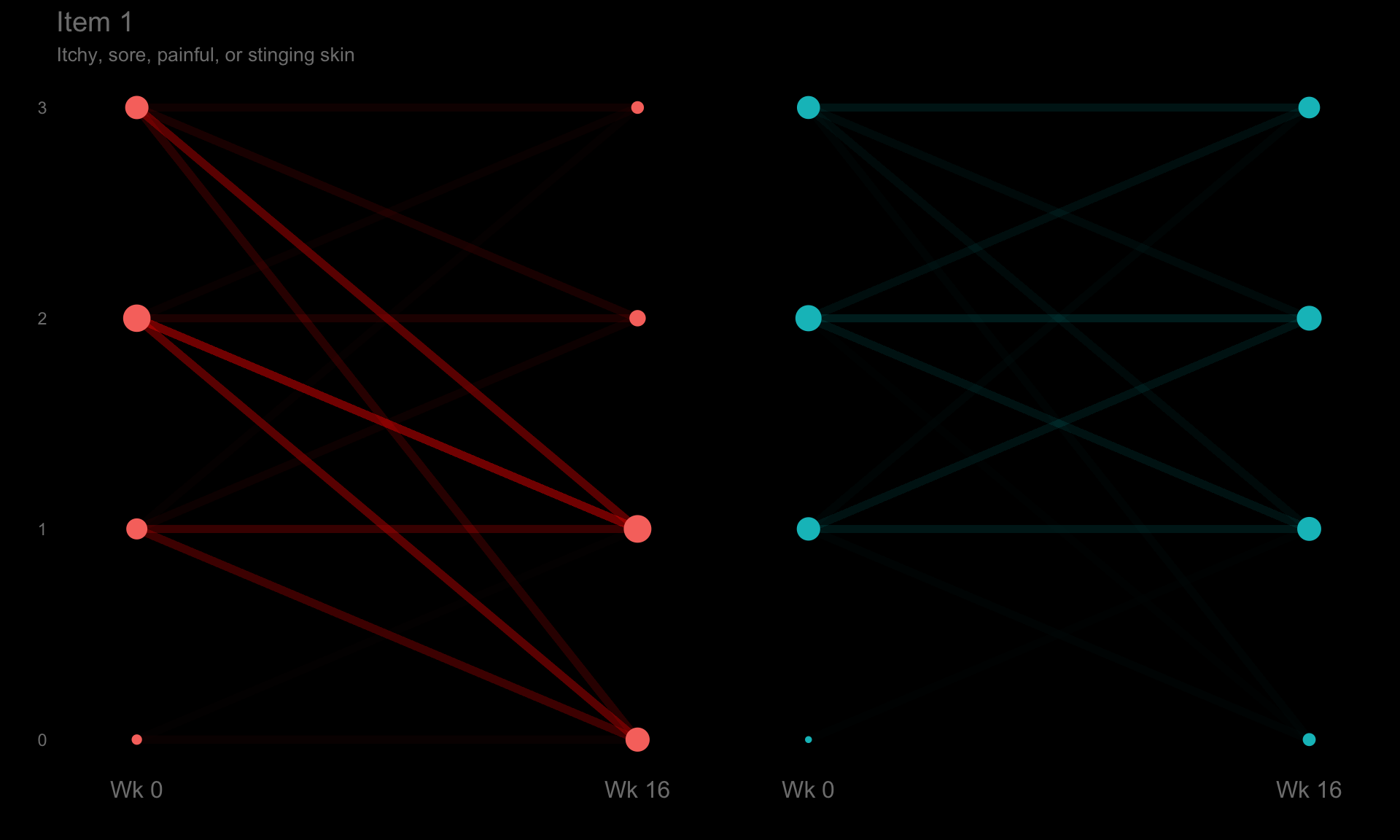

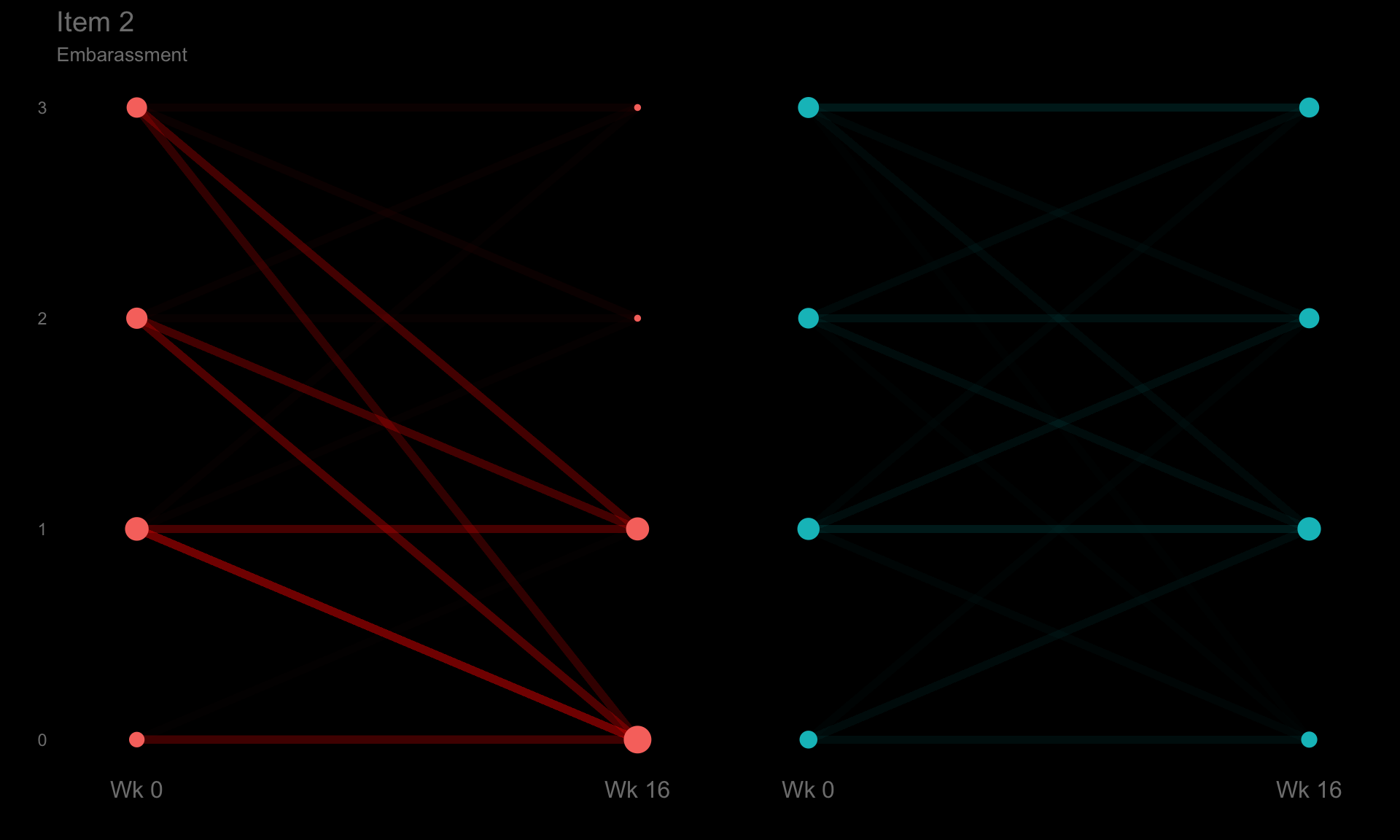

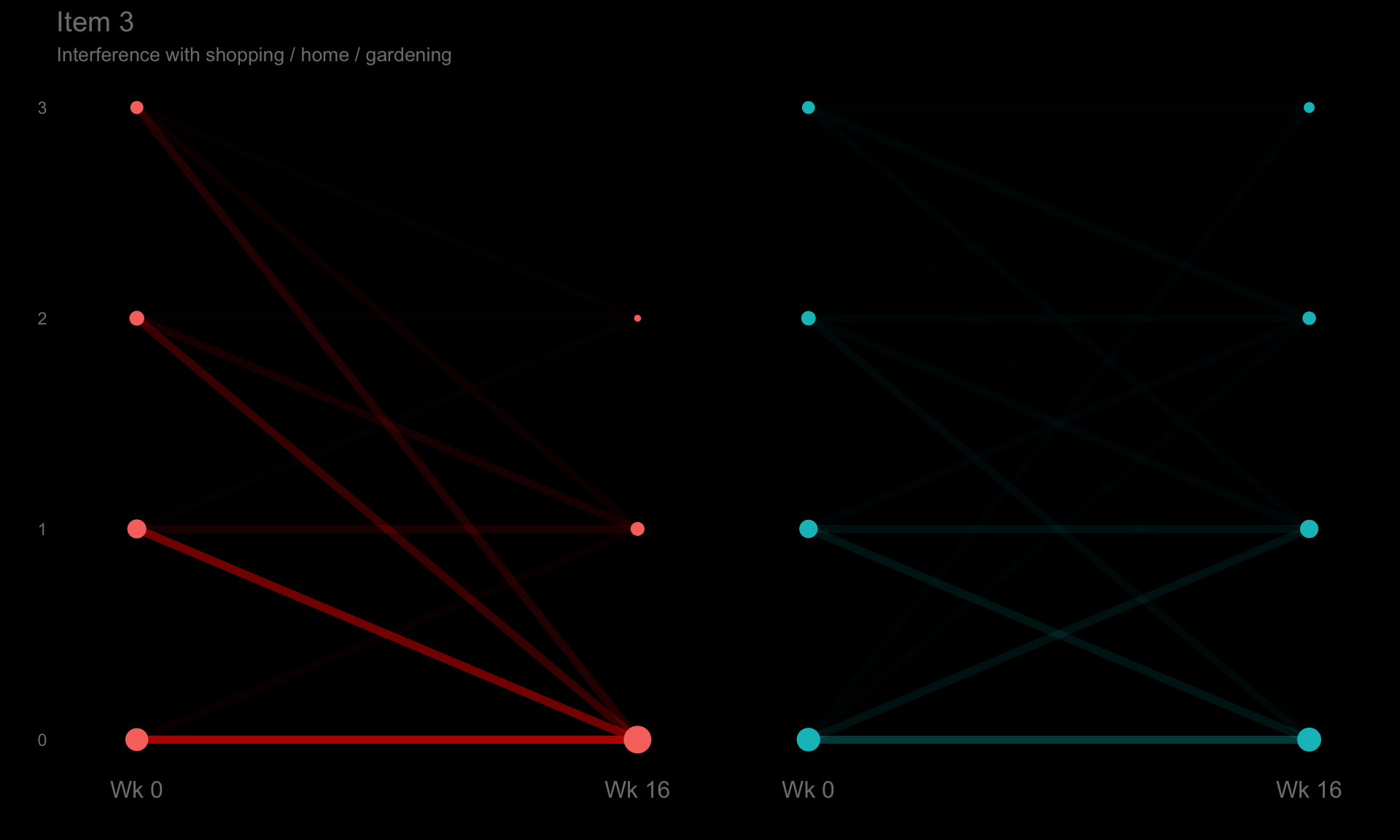

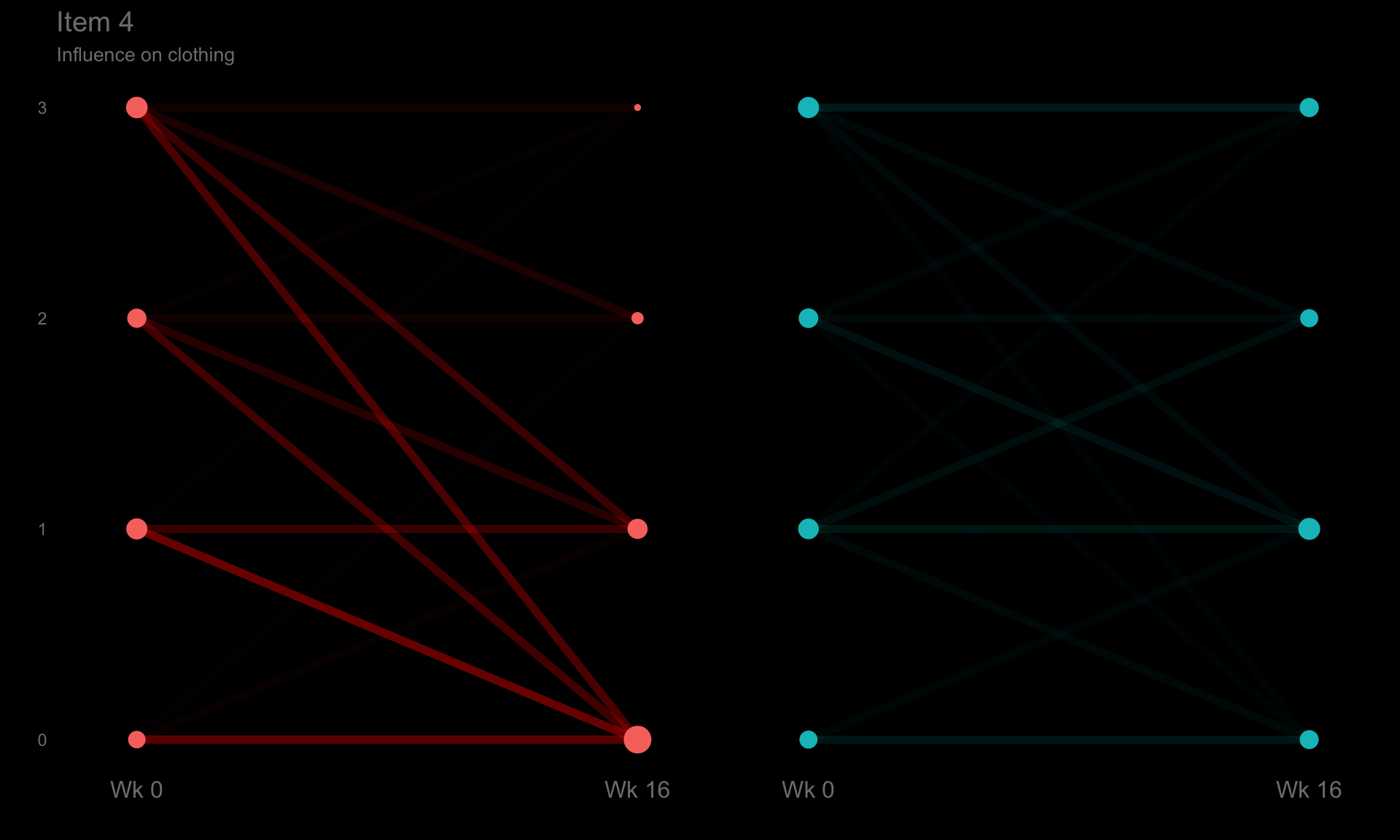

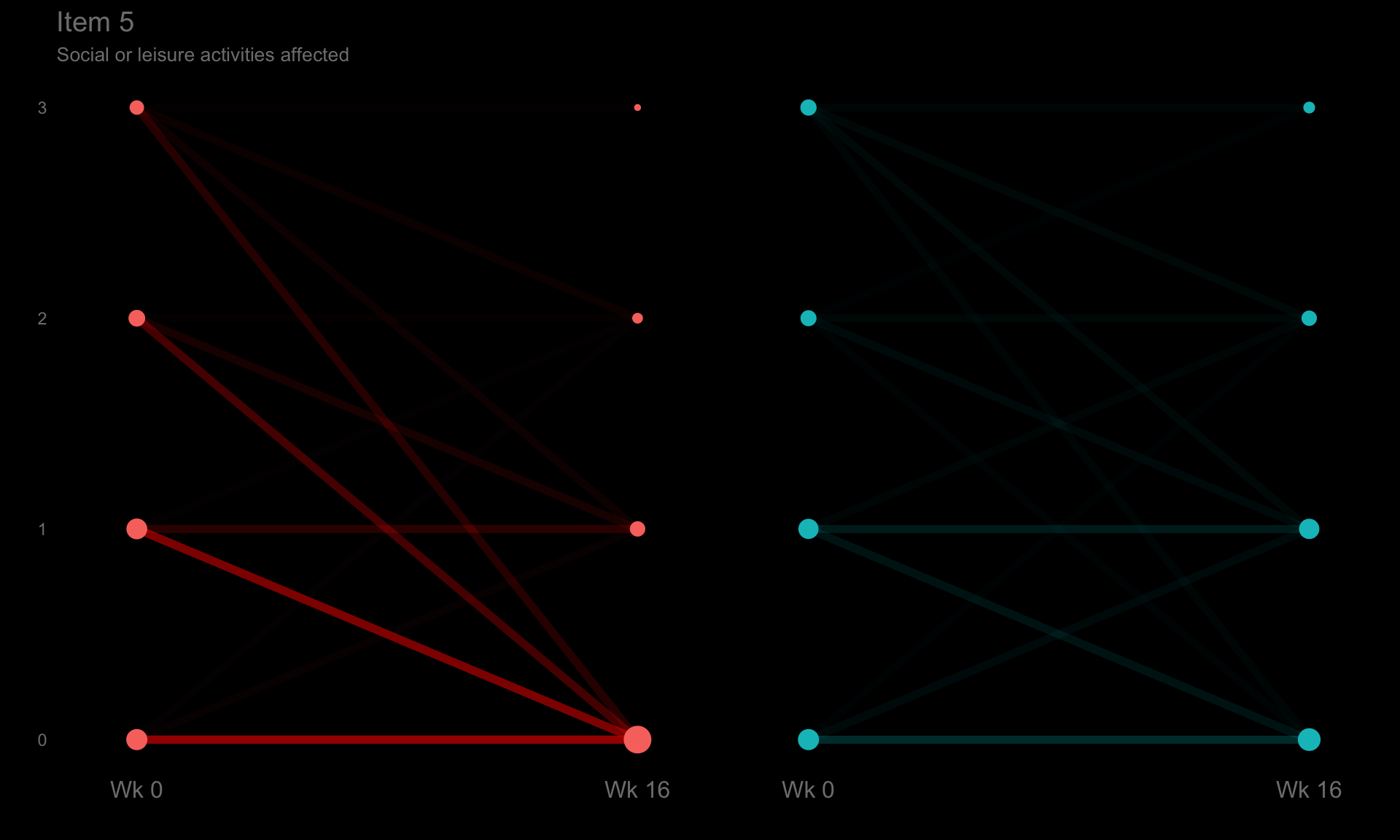

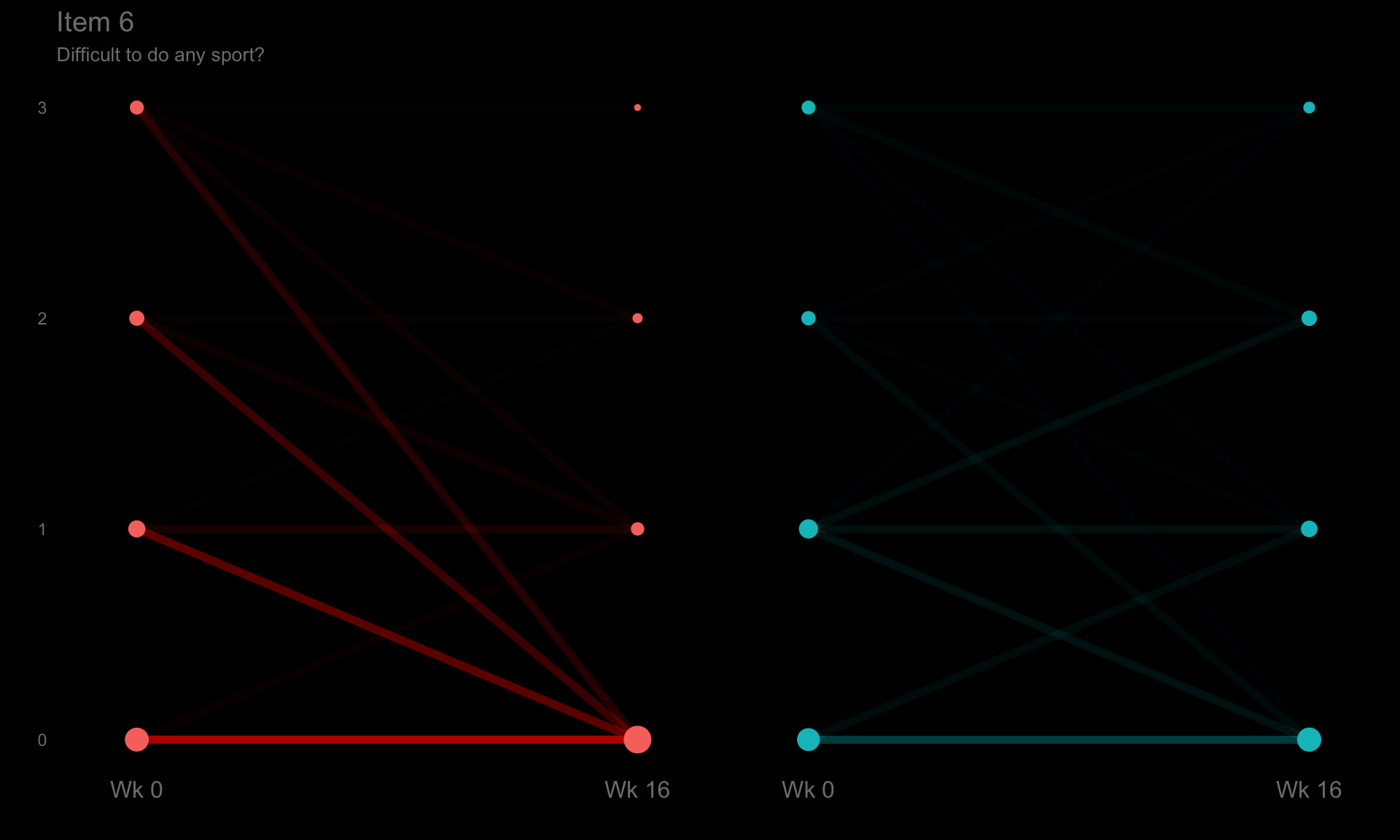

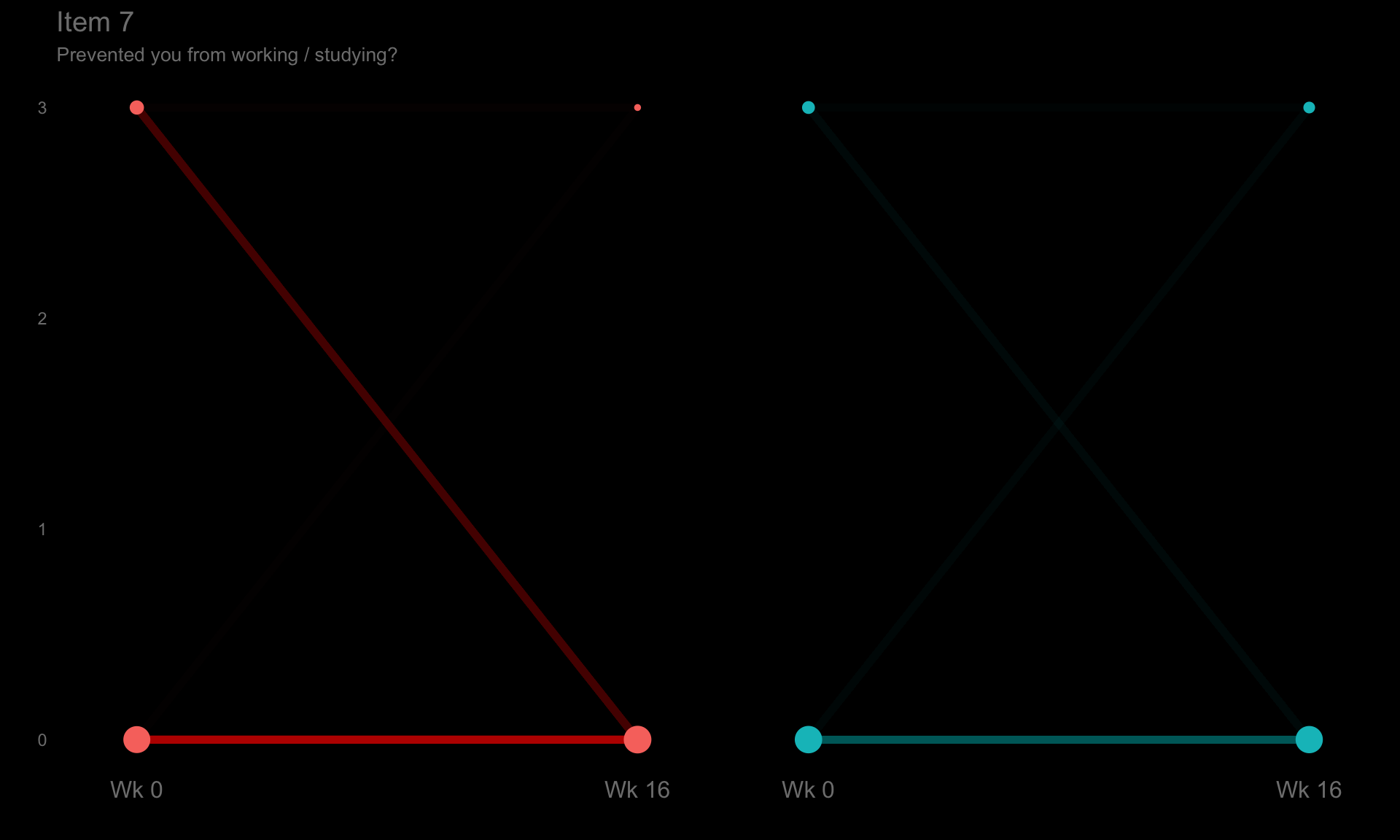

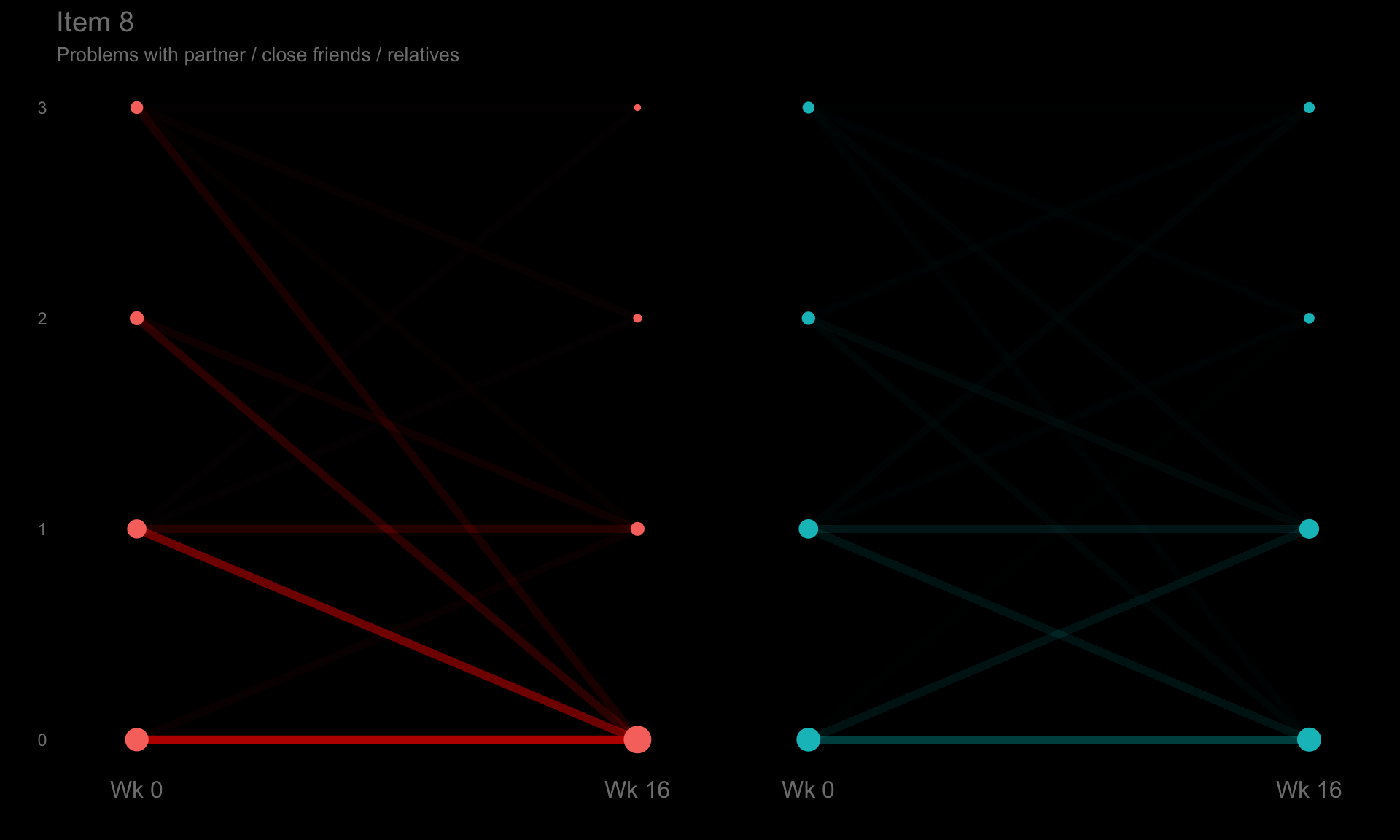

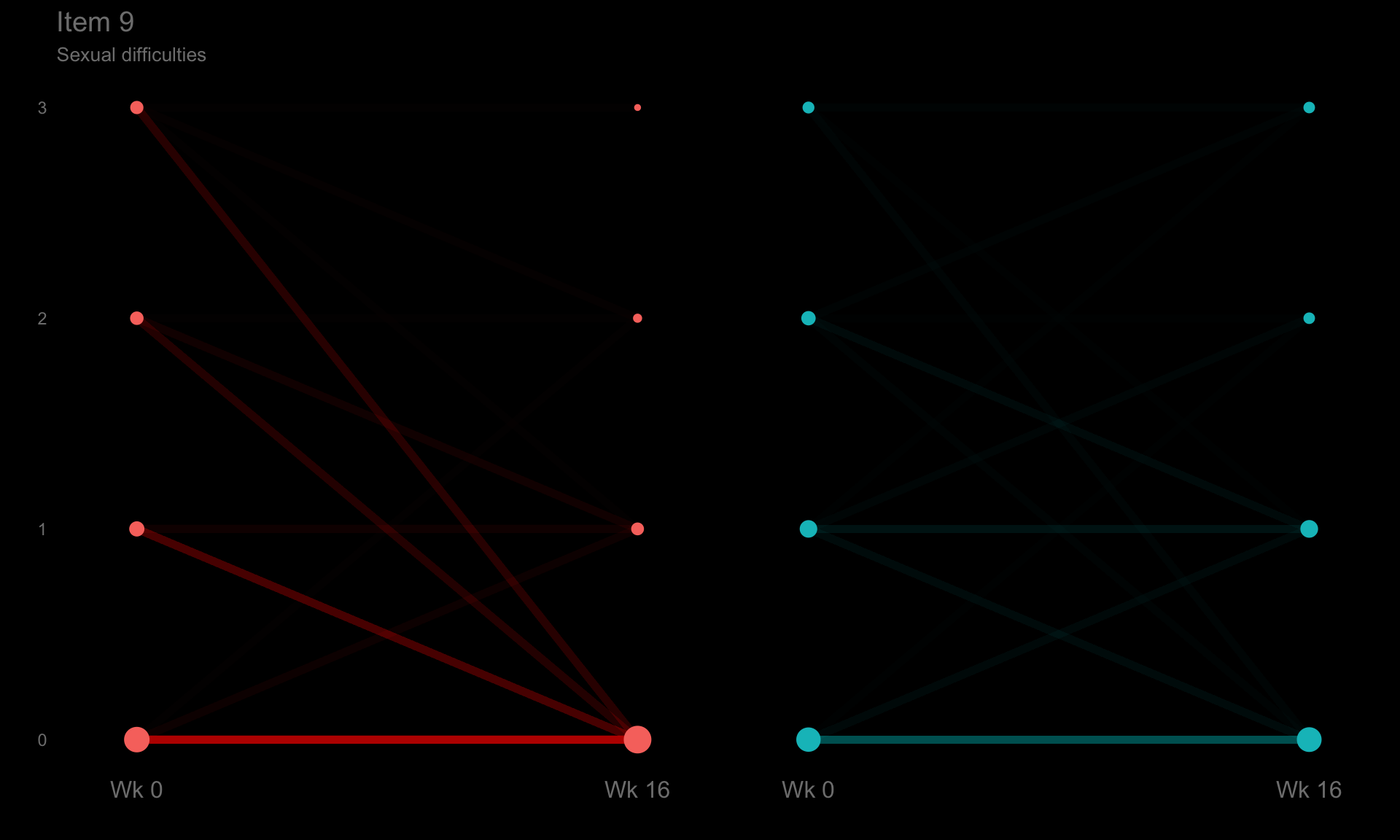

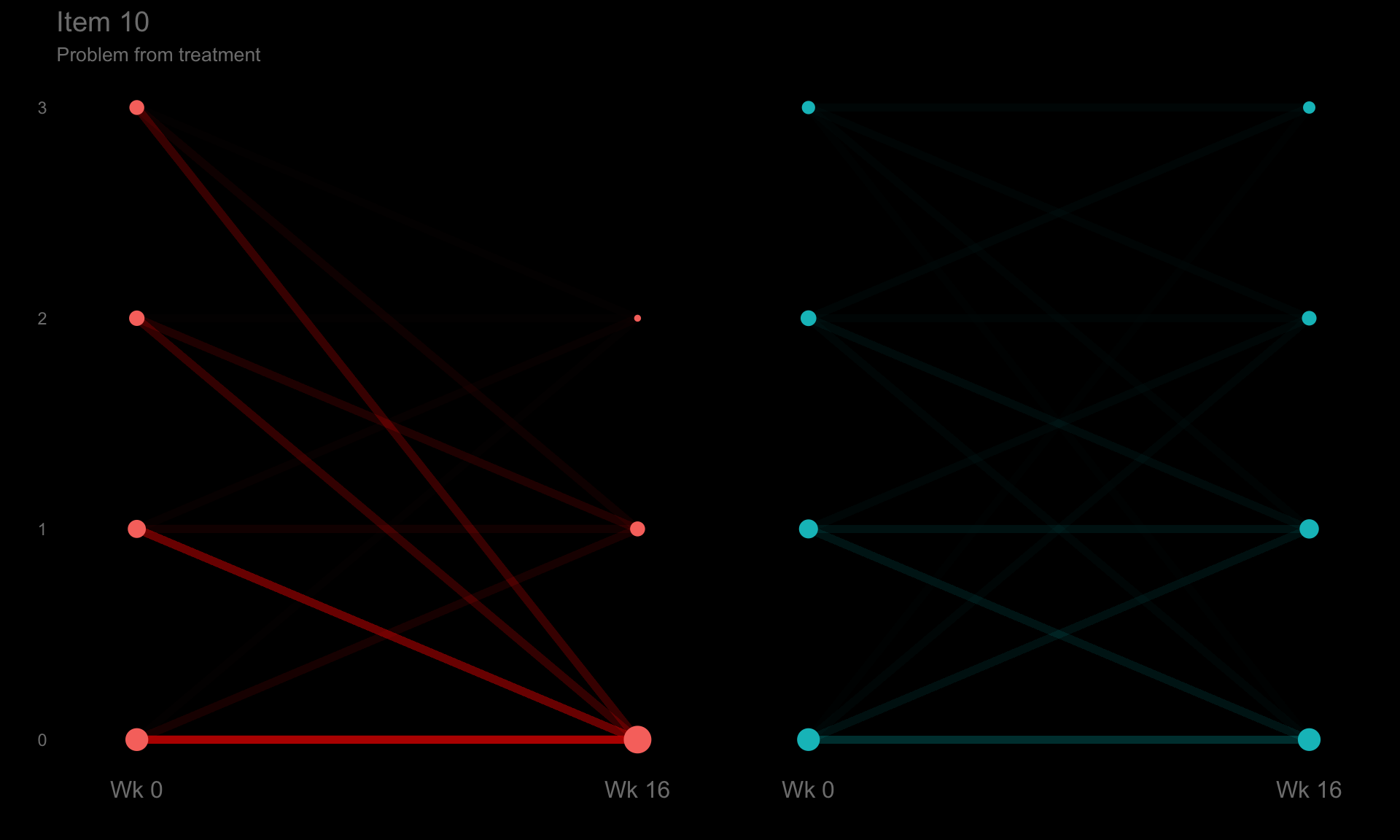

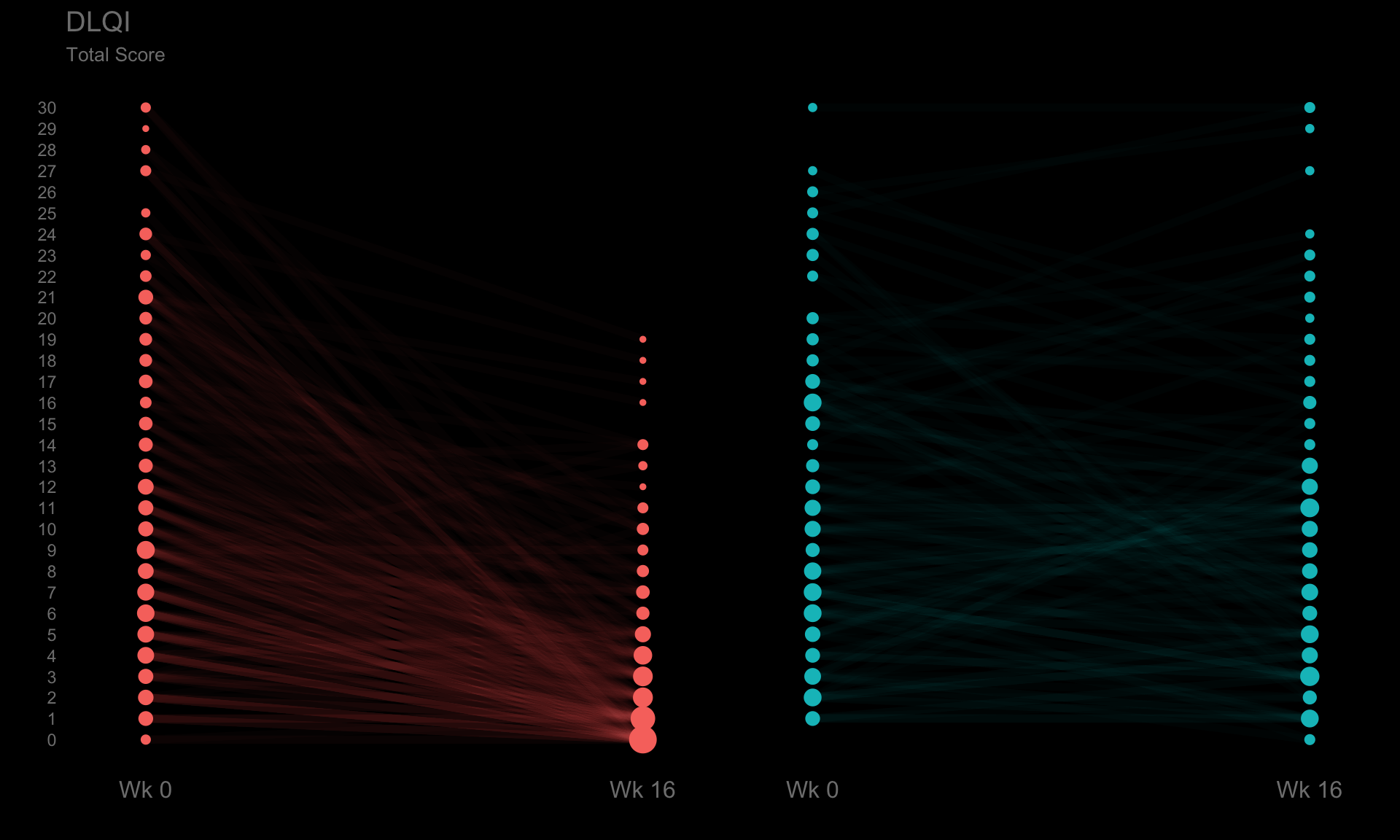

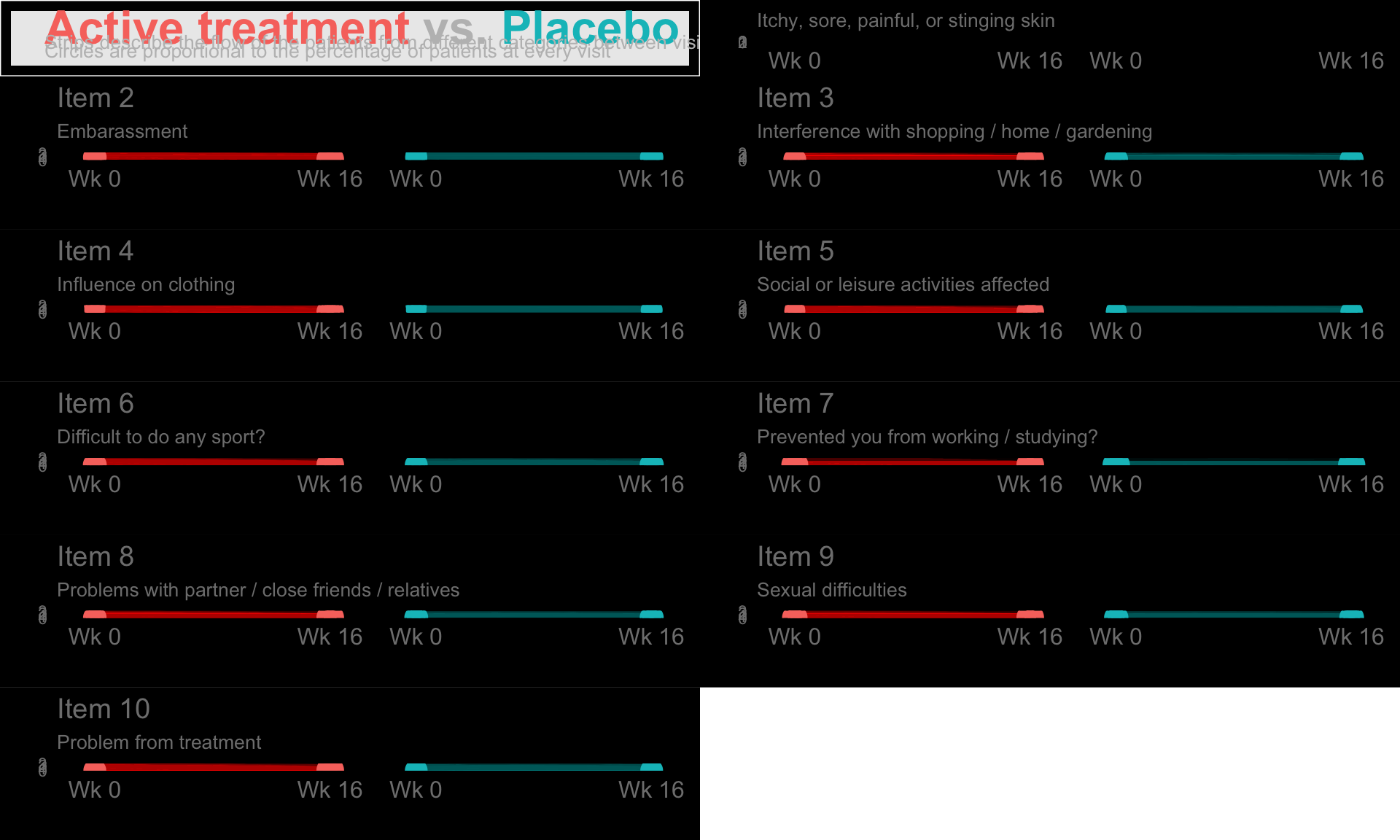

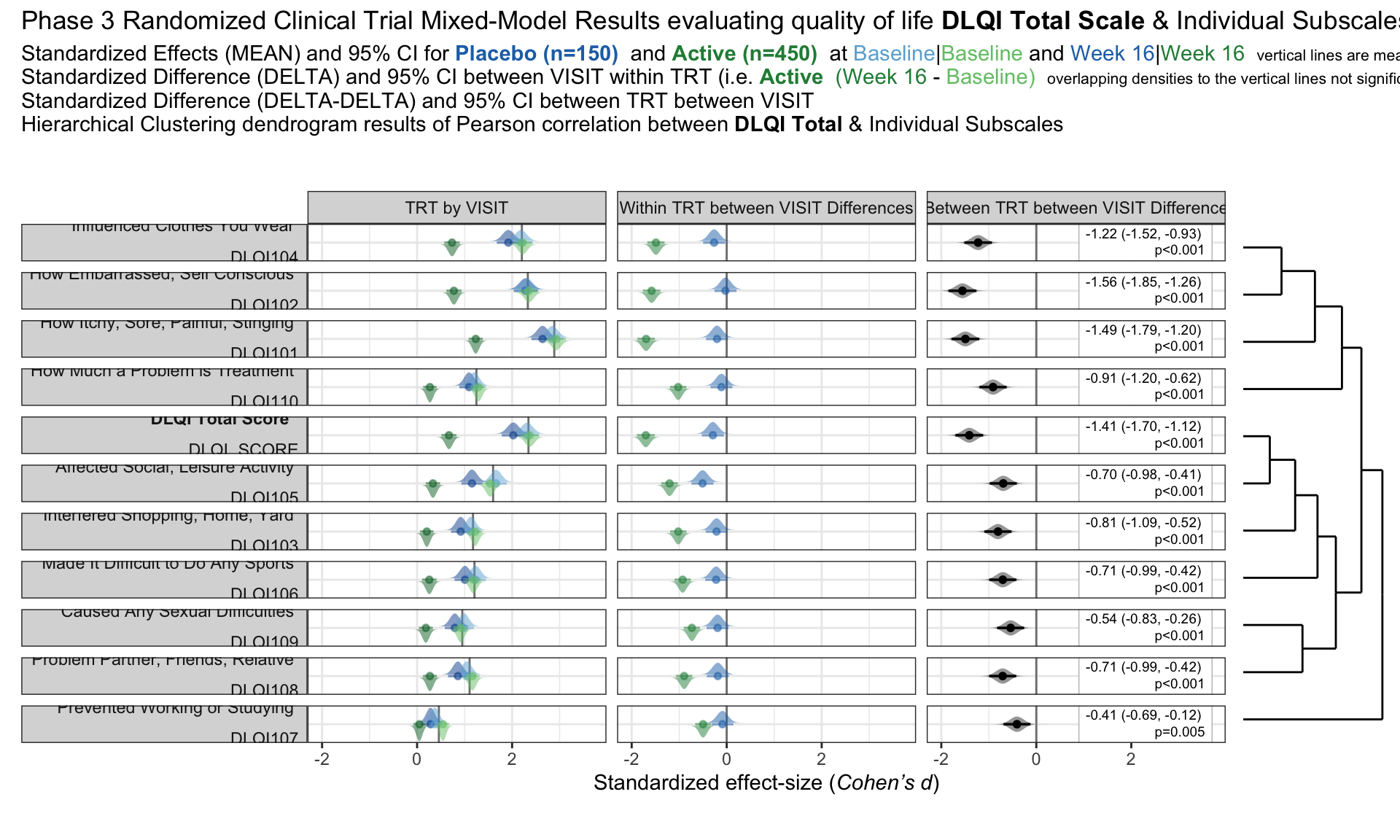

These slope graphs provide a clear and meaningful picture of patient level changes in DLQI scores for the different items and treatment groups. Here, the circles are proportional to the percentage of patients with a given response to that item at that visit, and the strips represents the ‘flow’ of patients across response levels between the visits. The decision to make the circles proportional to percentages rather than counts is the correct one here and allows for meaningful comparison between the imbalanced treatment groups.

This is a really nice example of a plot type which we don’t often see and tells a good story. The consistent improvement for the active treatment is clear to see and we see that there is not too much happening in terms of a response for placebo. Whilst we do not get the same level of understanding of marginal distributions that we might with a histrogram, we do still get some notion and this is balanced by the additional level of patient understanding which we have.

The group liked how the flow of patients could easily be seen by the intensity of lines, but felt that either a greater level of ‘minimum’ intensity or a different colour to blue could be used to still allow even a single patient’s movement to be seen, which is currently slightly difficult. However, it was acknowledged that not being able to see a lot within certain items for placebo is in itself meaningful.

The consistent use of colour between plots and titles allows us to easily distinguish between treatment groups, although it was felt that a more telling title may have been used. It is great that item descriptions rather than just numbers are provided, and the panel really liked that we see the effects when certain items are combined together into different domains. Grouping of the ten DLQI items into these six domains is something which is commonly done in clinical practice but is not something which was considered by many of the other examples. It was acknowledged that the inclusion of these additional plots for the six domains justifies keeping the items ordered as they appear on the questionnaire, so we can clearly see which items correspond to which domains. However, there was still some discussion as to whether an alternative ordering of both individual items and domains could be considered.

This example has a long, vertical layout. This would be great for something like a poster, but is maybe less convenient for viewing on screen. There was a feeling that when being presented as a poster, a lighter background may be more suitable.

library(tidyverse)

library(data.table)

library(grid)

library(cowplot)

library(RCurl)

d <- read_csv("./01_Datasets/ww2020_dlqi.csv")

d1a <- d %>%

gather(key = PARAMCD,

value = AVAL, DLQI101:DLQI_SCORE, factor_key=TRUE) %>%

filter(!PARAMCD %in% c("DLQIMCID", "DLQIRESP")) %>%

mutate(VISIT = ifelse(VISIT=="Baseline", "Wk 0", "Wk 16"),

VISITN = if_else(VISIT=="Wk 0", 0, 1))

d1ad1b <- d1a %>%

group_by(TRT, PARAMCD, VISITN, VISIT, AVAL) %>%

summarise(n = n())%>%

mutate(freq = n / sum(n))

d1bd1a$TRT <- relevel(as.factor(d1a$TRT), "B")

d1b$TRT <- relevel(as.factor(d1b$TRT), "B")

tit_col = "grey50"

cap_col = "grey50"

ggplotib <- function(paramcd = NULL,

title = NULL,

caption = NULL,

breaks = 0:3,

transparency = 0.01){

d1a_2 <- d1a %>%

filter(PARAMCD %in% paramcd) %>%

group_by(TRT, USUBJID, VISITN, VISIT) %>%

summarise(AVAL_SUB = sum(AVAL))

d1b_2 <- d1a_2 %>%

group_by(TRT, VISITN, VISIT, AVAL_SUB) %>%

summarise(n = n())%>%

mutate(freq = n / sum(n))

p1 <- ggplot() +

geom_line(data = d1a_2, aes(x = VISITN, y = AVAL_SUB, group = USUBJID, col=TRT),

alpha = transparency, size = 2) +

geom_point(data = d1b_2, aes(x = VISITN, y = AVAL_SUB, size = freq, col=TRT)) +

facet_grid(cols = vars(TRT)) +

scale_x_continuous(breaks = c(0, 1), labels = c("Wk 0", "Wk 16"), limits = c(-0.1,1.1)) +

scale_y_continuous(breaks = breaks) +

theme_minimal() +

labs(x = "", y = "", title = title, subtitle = caption) +

theme(panel.grid = element_blank(),

title = element_text(size = 12, colour = tit_col),

plot.subtitle = element_text(size = 10, colour = cap_col, hjust = 0),

axis.text.x = element_text(size = 12, colour = "grey50"),

plot.background = element_rect(fill="black"),

strip.text = element_blank()) +

guides(color = F, size = F)

p1

}

# Unidimensional ----------------------------------------------------------

p1 <- ggplotib(paramcd = "DLQI101",

title="Item 1",

caption="Itchy, sore, painful, or stinging skin") +

theme(axis.text.y = element_text(colour = "grey50"))

p1

p2 <- ggplotib(paramcd = "DLQI102",

title="Item 2",

caption="Embarassment") +

theme(axis.text.y = element_text(colour = "grey50"))

p2

p3 <- ggplotib(paramcd = "DLQI103",

title="Item 3",

caption="Interference with shopping / home / gardening") +

theme(axis.text.y = element_text(colour = "grey50"))

p3

p4 <- ggplotib(paramcd = "DLQI104",

title = "Item 4",

caption = "Influence on clothing") +

theme(axis.text.y = element_text(colour = "grey50"))

p4

p5 <- ggplotib(paramcd = "DLQI105",

title = "Item 5",

caption = "Social or leisure activities affected") +

theme(axis.text.y = element_text(colour = "grey50"))

p5

p6 <- ggplotib(paramcd = "DLQI106",

title = "Item 6",

caption = "Difficult to do any sport?") +

theme(axis.text.y = element_text(colour = "grey50"))

p6

p7 <- ggplotib(paramcd = "DLQI107",

title = "Item 7",

caption = "Prevented you from working / studying?") +

theme(axis.text.y = element_text(colour = "grey50"))

p7

p8 <- ggplotib(paramcd = "DLQI108",

title = "Item 8",

caption = "Problems with partner / close friends / relatives") +

theme(axis.text.y = element_text(colour = "grey50"))

p8

p9 <- ggplotib(paramcd = "DLQI109",

title = "Item 9",

caption = "Sexual difficulties") +

theme(axis.text.y = element_text(colour = "grey50"))

p9

p10 <- ggplotib(paramcd = "DLQI110",

title = "Item 10",

caption = "Problem from treatment") +

theme(axis.text.y = element_text(colour = "grey50"))

p10

pt <- ggplotib(paramcd = "DLQI_SCORE",

breaks = 0:30,

transparency = 0.02,

title = "DLQI",

caption = "Total Score") +

theme(axis.text.y = element_text(colour = "grey50"))

pt

# t_b

tfs <- 24

x <- 0.05

t1 <- textGrob(expression(bold("Active treatment") * phantom(bold(" vs. Placebo"))),

x = x, y = 0.7, gp = gpar(col = "#F8766D", fontsize = tfs), just = "left")

t2 <- textGrob(expression(phantom(bold("Active treatment vs.")) * bold(" Placebo")),

x = x, y = 0.7, gp = gpar(col = "#00BFC4", fontsize = tfs), just = "left")

t3 <- textGrob(expression(phantom(bold("Active treatment ")) * bold("vs.") * phantom(bold(" Placebo"))),

x = x, y = 0.7, gp = gpar(col = "grey", fontsize = tfs), just = "left")

t4 <- textGrob(expression("Strips describe the flow of the patients from different categories between visits"),

x = x, y = 0.4, gp = gpar(col = "grey", fontsize = 10), just = "left")

t5 <- textGrob(expression("Circles are proportional to the percentage of patients at every visit"),

x = x, y = 0.25, gp = gpar(col = "grey", fontsize = 10), just = "left")

tb <- ggplot(data = d) +

theme(panel.grid = element_blank(),

plot.background = element_rect(fill="black")) +

coord_cartesian(clip = "off") +

annotation_custom(grobTree(t1, t2, t3, t4, t5)) +

theme(legend.position = 'none')

tb

b <- plot_grid(tb, p1, p2, p3, p4, p5, p6, p7, p8, p9, p10,

nrow = 6, rel_heights = c(0.5, rep(1, 10)))

b

# ggsave(plot=b, filename="b.png", path=("~") , width = 6, height = 32, device = "png")

# Multidimensional --------------------------------------------------------

p12 <- ggplotib(paramcd = c("DLQI101", "DLQI102"),

breaks = 0:6,

transparency = 0.015,

title="Item 1 + Item 2",

caption="Symptoms and feelings") +

theme(axis.text.y = element_text(colour = "grey50"))

p12

# p34 <- ggplotib(paramcd = c("DLQI103", "DLQI104"),

# breaks = 0:6,

# transparency = 0.015,

# title="Item 3 + Item 4",

# caption="Daily activities") +

# theme(axis.text.y = element_text(colour = "grey50"))

# p34

#

# p56 <- ggplotib(paramcd = c("DLQI105", "DLQI106"),

# breaks = 0:6,

# transparency = 0.015,

# title="Item 5 + Item 6",

# caption="Leisures") +

# theme(axis.text.y = element_text(colour = "grey50"))

# p56

#

# p89 <- ggplotib(paramcd = c("DLQI108", "DLQI109"),

# breaks = 0:6,

# transparency = 0.015,

# title="Item 8 + Item 9",

# caption="Interpersonal relationships") +

# theme(axis.text.y = element_text(colour = "grey50"))

# p89

#

# tfs <- 42

# x <- 0.0175

# t1 <- textGrob(expression(bold("Active treatment") * phantom(bold(" vs. Placebo"))),

# x = x, y = 0.7, gp = gpar(col = "#F8766D", fontsize = tfs), just = "left")

# t2 <- textGrob(expression(phantom(bold("Active treatment vs.")) * bold(" Placebo")),

# x = x, y = 0.7, gp = gpar(col = "#00BFC4", fontsize = tfs), just = "left")

# t3 <- textGrob(expression(phantom(bold("Active treatment ")) * bold("vs.") * phantom(bold(" Placebo"))),

# x = x, y = 0.7, gp = gpar(col = "grey", fontsize = tfs), just = "left")

# t4 <- textGrob(expression("Strips describe the flow of the patients from different categories between visits"),

# x = x, y = 0.4, gp = gpar(col = "grey", fontsize = 10), just = "left")

# t5 <- textGrob(expression("Circles are proportional to the percentage of patients at every visit"),

# x = x, y = 0.25, gp = gpar(col = "grey", fontsize = 10), just = "left")

#

# t <- ggplot(data = d) +

# theme(panel.grid = element_blank(),

# plot.background = element_rect(fill="black")) +

# coord_cartesian(clip = "off") +

# annotation_custom(grobTree(t1, t2, t3, t4, t5)) +

# theme(legend.position = 'none')

# t

#

# t2 <- ggplot(data = d) +

# theme(panel.grid = element_blank(),

# plot.background = element_rect(fill="black")) +

# theme(legend.position = 'none')

# t2

# col1 <- plot_grid(p1, p2, p3, p4, p5, p6, p7, p8, p9, p10,

# nrow = 10, rel_heights = c(rep(1, 10)))

# col2 <- plot_grid(p12, p34, p56, p7, p89, p10,

# nrow = 6, rel_heights = c(2, 2, 2, 1, 2, 1))

# col3 <- plot_grid(pt, t2,

# nrow = 2, rel_heights = c(4, 6))

# cols <- plot_grid(col1, col2, col3, ncol = 3, rel_widths = c(3, 3, 3))

# o <- plot_grid(t, cols, nrow = 2, rel_heights = c(0.5, 10))

# o1.3 Mixed models

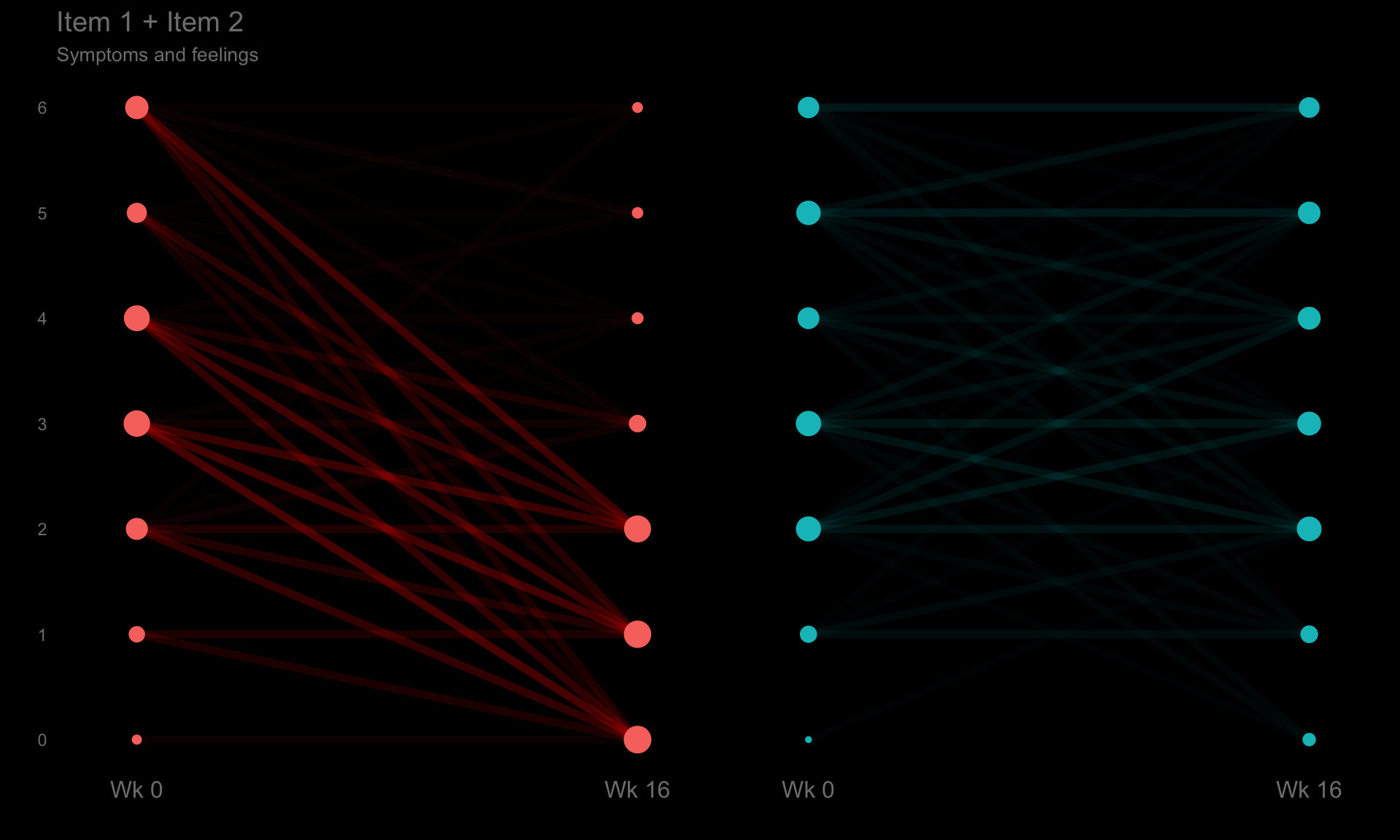

we have the results of mixed models visualised in an interactive html document. The figure is split into three columns, allowing us to see all of the effects we may be interested in: treatment by visit; within treatment, between visit differences; between treatment, between treatment differences.

One of the features that is really nice about this example is that, unlike many other examples, it considers an alternative way to sort the different items using a dendrogram. This allows us to quickly see two clear clusters in the items, although it was felt that total score should not have been included in this clustering. It is also great that the distributions are shown around the estimated effects, allowing us to quickly see the uncertainty in these estimates.

There were mixed feelings around the large amount of white space included in some of the columns. In some ways, this could be seen as unnecessary, but it was pointed out that the white space provides a nice level of consistency, allowing us to get an immediate impression of effect sizes on the upper plots which are quite a way from the labels on the horizontal axis. The vertical reference lines included are also really nice for getting a quick impression of effect sizes and how meaningful these are.

Similarly, there were mixed feelings around the level of complexity of this example, with the conclusion being that its appropriateness would largely depend on how technical the audience was. Whilst it may be slightly difficult for a non-technical audience to understand, it was felt that this example would be great for providing a large amount of information to technical audiences, particularly given that tables of values were provided in additional tabs alongside the figure. The group envisaged many applications where similar figures would be great to have, such as meta-analyses and subgroup analyses.

The final thing discussed at length for this example was the representation of the effect sizes. The group really liked that the colours used correspond to those provided in the above text, allowing us to determine treatment groups without the need for a legend. Similarly, it was great to see the different effect sizes consistently pointing either upwards or downwards for the different treatment groups, although it would be beneficial to have this also described in the text. This would allow the figure to be interpreted without having to be able to distinguish between the colours.

The panel highlighted a really nice tool which allows us to see how easily certain colours are distinguished by individuals with different kinds of colour-blindness. Whilst it was shown that the blue and green used here are not too difficult to distinguish for most individuals, the tool was used to identify colours which could be even easier to differentiate between.

pacman::p_load(rio, tidyverse)

pacman::p_load(lme4, emmeans)

pacman::p_load(ggdist, distributional)

pacman::p_load(gtsummary)

#devtools::install_github("teunbrand/ggh4x")

library(ggh4x)

pacman::p_load(ggdendro)

pacman::p_load(ggtext)

pacman::p_load(patchwork)

pacman::p_load(gt)

df1 <- read_csv("./01_Datasets/ww2020_dlqi.csv") %>%

drop_na(DLQI_SCORE) %>%

mutate(VISIT_i = as.integer(as.factor(VISIT)))

# fun code to derive change

df1_chg <- read_csv("./01_Datasets/ww2020_dlqi.csv") %>%

select(USUBJID, VISIT, TRT, starts_with("DLQI")) %>%

arrange(USUBJID, VISIT) %>%

group_by(USUBJID, TRT) %>%

# filter(n() > 1) %>% # keep participants with 2 visits

transmute(across(starts_with("DLQI"), ~.x - lag(.x), .names = "{.col}_CHG")) %>%

rowwise() %>%

filter( sum(is.na(c_across(starts_with("DLQI")))) < 11 )

dl <- c(DLQI101 = 'How Itchy, Sore, Painful, Stinging <br><br>DLQI101',

DLQI102 = 'How Embarrassed, Self Conscious <br><br>DLQI102',

DLQI103 = 'Interfered Shopping, Home, Yard <br><br>DLQI103',

DLQI104 = 'Influenced Clothes You Wear <br><br>DLQI104',

DLQI105 = 'Affected Social, Leisure Activity <br><br>DLQI105',

DLQI106 = 'Made It Difficult to Do Any Sports <br><br>DLQI106',

DLQI107 = 'Prevented Working or Studying <br><br>DLQI107',

DLQI108 = 'Problem Partner, Friends, Relative <br><br>DLQI108',

DLQI109 = 'Caused Any Sexual Difficulties <br><br>DLQI109',

DLQI110 = 'How Much a Problem is Treatment <br><br>DLQI110',

DLQI_SCORE = '**DLQI Total Score** <br><br>DLQI_SCORE')

cl <- c(MEAN = "TRT by VISIT",

DIFF = "Within TRT between VISIT Differences",

DELTA = "Between TRT between VISIT Difference")

# Mini Mixed-Model example

m <- lmer(DLQI_SCORE ~ VISIT*TRT + (1|USUBJID), data = df1)

e <- emmeans(m, ~ VISIT*TRT)

s <- eff_size(e, sigma = sigma(m), edf = 700, method = 'identity')

c <- contrast(e, method = list(diff_a = c(-1, 1, 0, 0),

diff_b = c( 0, 0, -1, 1),

diff_d = c(1, -1, -1, 1)))

f <- eff_size(c, sigma = sigma(m), edf = 700, method = 'identity')

summary(e, infer = TRUE)summary(c, infer = TRUE)# Mini Repeated Mixed-Model example

pacman::p_load(nlme)

m <- gls(DLQI_SCORE ~ VISIT*TRT,

data = df1,

method = "REML",

correlation = nlme::corSymm( form = ~VISIT_i|USUBJID),

weights = nlme::varIdent(form = ~1|VISIT))

e <- emmeans(m, ~ VISIT*TRT, mode = "df.error")

s <- eff_size(e, sigma = sigma(m), edf = 700, method = 'identity')

c <- contrast(e, method = list(diff_a = c(-1, 1, 0, 0),

diff_b = c( 0, 0, -1, 1),

diff_d = c(1, -1, -1, 1)))

f <- eff_size(c, sigma = sigma(m), edf = 700, method = 'identity')

summary(e, infer = TRUE)summary(c, infer = TRUE)# Mini bayesian example

pacman::p_load(brms)

m <- brm(DLQI_SCORE ~ VISIT*TRT + (1 | USUBJID), data = df1, family = gaussian())##

## SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 1).

## Chain 1:

## Chain 1: Gradient evaluation took 0.000174 seconds

## Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 1.74 seconds.

## Chain 1: Adjust your expectations accordingly!

## Chain 1:

## Chain 1:

## Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

## Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

## Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

## Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

## Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

## Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

## Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

## Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

## Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

## Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

## Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

## Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

## Chain 1:

## Chain 1: Elapsed Time: 1.494 seconds (Warm-up)

## Chain 1: 0.836 seconds (Sampling)

## Chain 1: 2.33 seconds (Total)

## Chain 1:

##

## SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 2).

## Chain 2:

## Chain 2: Gradient evaluation took 5.7e-05 seconds

## Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 0.57 seconds.

## Chain 2: Adjust your expectations accordingly!

## Chain 2:

## Chain 2:

## Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

## Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

## Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

## Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

## Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

## Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

## Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

## Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

## Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

## Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

## Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

## Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

## Chain 2:

## Chain 2: Elapsed Time: 1.631 seconds (Warm-up)

## Chain 2: 0.886 seconds (Sampling)

## Chain 2: 2.517 seconds (Total)

## Chain 2:

##

## SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 3).

## Chain 3:

## Chain 3: Gradient evaluation took 6.5e-05 seconds

## Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.65 seconds.

## Chain 3: Adjust your expectations accordingly!

## Chain 3:

## Chain 3:

## Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

## Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

## Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

## Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

## Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

## Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

## Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

## Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

## Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

## Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

## Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

## Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

## Chain 3:

## Chain 3: Elapsed Time: 1.532 seconds (Warm-up)

## Chain 3: 0.825 seconds (Sampling)

## Chain 3: 2.357 seconds (Total)

## Chain 3:

##

## SAMPLING FOR MODEL 'anon_model' NOW (CHAIN 4).

## Chain 4:

## Chain 4: Gradient evaluation took 9.2e-05 seconds

## Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.92 seconds.

## Chain 4: Adjust your expectations accordingly!

## Chain 4:

## Chain 4:

## Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

## Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

## Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

## Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

## Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

## Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

## Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

## Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

## Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

## Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

## Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

## Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

## Chain 4:

## Chain 4: Elapsed Time: 1.45 seconds (Warm-up)

## Chain 4: 0.806 seconds (Sampling)

## Chain 4: 2.256 seconds (Total)

## Chain 4:e <- emmeans(m, ~ VISIT*TRT, point.est = mean)

s <- contrast(e, method = list(diff_a = c(-1, 1, 0, 0),

diff_b = c( 0, 0, -1, 1),

diff_d = c(1, -1, -1, 1)))

summary(e, point.est = mean, infer = TRUE)summary(s, point.est = mean, infer = TRUE)df2 <- df1 %>%

pivot_longer(cols = contains("DLQI"),

names_to = "VAR",

values_to = "VAL") %>%

nest_by(VAR) %>%

mutate(m = list( lmer(VAL ~ VISIT*TRT + (1|USUBJID), data = data) ),

e = list( emmeans(m, ~ VISIT*TRT) ),

s = list( eff_size(e, sigma = sigma(m), edf = 700, method = 'identity') ),

c = list( contrast(e, method = list(diff_a = c(-1, 1, 0, 0),

diff_b = c( 0, 0, -1, 1),

diff_d = c(1, -1, -1, 1))) ),

f = list( eff_size(c, sigma = sigma(m), edf = 700, method = 'identity') ),

r = list(

bind_rows(

summary(e, infer = TRUE) %>% as.data.frame(),

summary(c, infer = TRUE) %>% as.data.frame() %>%

rename(emmean = estimate, TRT = contrast) ) %>%

mutate(contrast = paste(VISIT, TRT))

),

g = list(

bind_rows(

summary(s, infer = TRUE) %>% as.data.frame(),

summary(f, infer = TRUE) %>% as.data.frame() )

),

)

df3 <- df2 %>%

select(VAR, r) %>%

unnest(r) %>%

mutate(contrast = str_remove(contrast, "NA ")) %>%

mutate(GRP = case_when(

contrast %in% c("Baseline A", "Week 16 A",

"Baseline B", "Week 16 B") ~ "MEAN",

contrast %in% c("diff_a", "diff_b") ~ "DIFF",

contrast %in% c("diff_d") ~ "DELTA")

) %>%

mutate(TRT = case_when(

contrast %in% c("Baseline A", "Week 16 A", "diff_a") ~ "A",

contrast %in% c("Baseline B", "Week 16 B", "diff_b") ~ "B")

) %>%

mutate(VISIT = case_when(

contrast %in% c("Baseline A", "Baseline B") ~ "Baseline",

contrast %in% c("Week 16 A", "Week 16 B") ~ "Week 16")

)

df3s <- df2 %>%

select(VAR, g) %>%

unnest(g) %>%

mutate(GRP = case_when(

contrast %in% c("Baseline A", "Week 16 A",

"Baseline B", "Week 16 B") ~ "MEAN",

contrast %in% c("diff_a", "diff_b") ~ "DIFF",

contrast %in% c("diff_d") ~ "DELTA")

) %>%

mutate(TRT = case_when(

contrast %in% c("Baseline A", "Week 16 A", "diff_a") ~ "A",

contrast %in% c("Baseline B", "Week 16 B", "diff_b") ~ "B")

) %>%

mutate(VISIT = case_when(

contrast %in% c("Baseline A", "Baseline B") ~ "Baseline",

contrast %in% c("Week 16 A", "Week 16 B") ~ "Week 16")

)

y1 <- df1 %>%

#filter(VISIT == "Baseline") %>%

select(starts_with("DLQI"))

c1 <- cor(y1, method="pearson")

d1 <- as.dist(1-c1)

h1 <- hclust(d1, method = "complete")

gg_d1 <- ggdendrogram(h1, rotate = TRUE) +

labs(title = " ") +

theme_dendro() +

theme(plot.margin = margin(0, 0, 0, 0, "pt"))

df3s <- df3s %>%

mutate(VAR = factor(VAR, levels = rev(h1$labels[h1$order]) ),

GRP = factor(GRP, levels = c("MEAN","DIFF","DELTA")) )

df3 <- df3 %>%

mutate(VAR = factor(VAR, levels = rev(h1$labels[h1$order]) ),

GRP = factor(GRP, levels = c("MEAN","DIFF","DELTA")) )

# Figure F1

gg_f1 <- ggplot(data = df3s,

aes(x = effect.size,

y = VAR)) +

geom_vline(data = tribble(~GRP, ~VAL,

"MEAN", NA,

"DIFF", 0,

"DELTA",0) %>%

mutate(GRP = factor(GRP) %>% fct_relevel("MEAN","DIFF","DELTA")),

aes(xintercept = VAL), color = 'gray50' ) +

geom_vline(data = df3s %>%

filter(GRP == "MEAN", VISIT == "Baseline") %>%

select(GRP, VAR, effect.size) %>%

group_by(GRP, VAR) %>%

dplyr::summarize(xint = mean(effect.size)),

aes(xintercept = xint), color = 'gray50') +

stat_dist_halfeye(ggsubset(TRT == "A"),

mapping = aes(fill1 = contrast,

color1 = contrast,

dist = dist_student_t(df = df, mu = effect.size, sigma = SE)),

.width = c(0.95),

alpha = 0.5,

justification = -0.10,

size = 1,

side = 'top') +

stat_dist_halfeye(ggsubset(TRT == "B"),

mapping = aes(fill2 = contrast,

color2 = contrast,

dist = dist_student_t(df = df, mu = effect.size, sigma = SE)),

.width = c(0.95),

alpha = 0.5,

justification = 1.10,

size = 1,

side = 'bottom') +

stat_dist_halfeye(ggsubset(GRP == "DELTA"),

mapping = aes(dist = dist_student_t(df = df, mu = effect.size, sigma = SE)),

.width = c(0.95),

size = 1,

side = 'both') +

geom_richtext(ggsubset(GRP == "DELTA"),

mapping = aes(y = 1,

x = 3.7,

label = str_glue("{style_ratio(effect.size)}

({style_ratio(lower.CL)}, {style_ratio(upper.CL)}) <br>

{style_pvalue(p.value, 2, prepend_p = TRUE)}")),

size = 2.5,

hjust = "inward",

color = 'gray75',

text.color = 'black',

fill = 'white') +

scale_listed(scalelist = list(

scale_fill_manual(values = RColorBrewer::brewer.pal(9,"Blues")[c(5,7,8)], aesthetics = "fill1"),

scale_fill_manual(values = RColorBrewer::brewer.pal(9,"Greens")[c(5,7,8)], aesthetics = "fill2"),

scale_color_manual(values = RColorBrewer::brewer.pal(9,"Blues")[c(5,7,8)], aesthetics = "color1"),

scale_color_manual(values = RColorBrewer::brewer.pal(9,"Greens")[c(5,7,8)], aesthetics = "color2")),

replaces = c("fill", "fill", "color", "color")) +

facet_grid(VAR ~ GRP,

scales = 'free_y',

switch = 'y',

labeller = labeller(VAR = dl, GRP = cl)) +

#force_panelsizes(cols = c(3,2,2)) +

scale_y_discrete(position = "right") +

labs(x = "Standardized effect-size (_Cohen's d_)",

y = NULL) +

theme_bw() +

theme(plot.margin = margin(5.5, 0, 5.5, 5.5, "pt"),

legend.position = "none",

strip.text.y.left = element_markdown(angle = 0, hjust = 1),

strip.placement = "outside",

axis.ticks.y.right = element_blank(),

axis.text.y.right = element_blank(),

axis.title.x = element_markdown(hjust = 0.5)

)

gg_f1 + gg_d1 +

plot_layout(widths = c(6,1)) +

plot_annotation(

title = "Phase 3 Randomized Clinical Trial Mixed-Model Results evaluating quality of life **DLQI Total Scale** & Individual Subscales",

subtitle = "Standardized Effects (MEAN) and 95% CI for

<span style='color:#2171B5;'><b>Placebo (n=150) </b></span> and

<span style='color:#238B45;'><b>Active (n=450) </b></span> at

<span style='color:#6BAED6;'>Baseline</span>|<span style='color:#74C476;'>Baseline</span> and

<span style='color:#2171B5;'>Week 16</span>|<span style='color:#238B45;'>Week 16</span>

<span style='font-size:8pt; color:black'>vertical lines are mean results at Baseline</span> <br>

Standardized Difference (DELTA) and 95% CI between VISIT within TRT (i.e.

<span style='color:#238B45;'><b>Active</b></span>

<span style='color:#238B45;'>(Week 16</span> -

<span style='color:#74C476;'>Baseline)</span>

<span style='font-size:8pt; color:black'>overlapping densities to the vertical lines not significant different from 0</span> <br>

Standardized Difference (DELTA-DELTA) and 95% CI between TRT between VISIT <br>

Hierarchical Clustering dendrogram results of Pearson correlation between **DLQI Total** & Individual Subscales",

theme = theme(plot.title.position = "plot",

plot.title = element_markdown(lineheight = 1.1),

plot.subtitle = element_markdown(lineheight = 1.1))

)

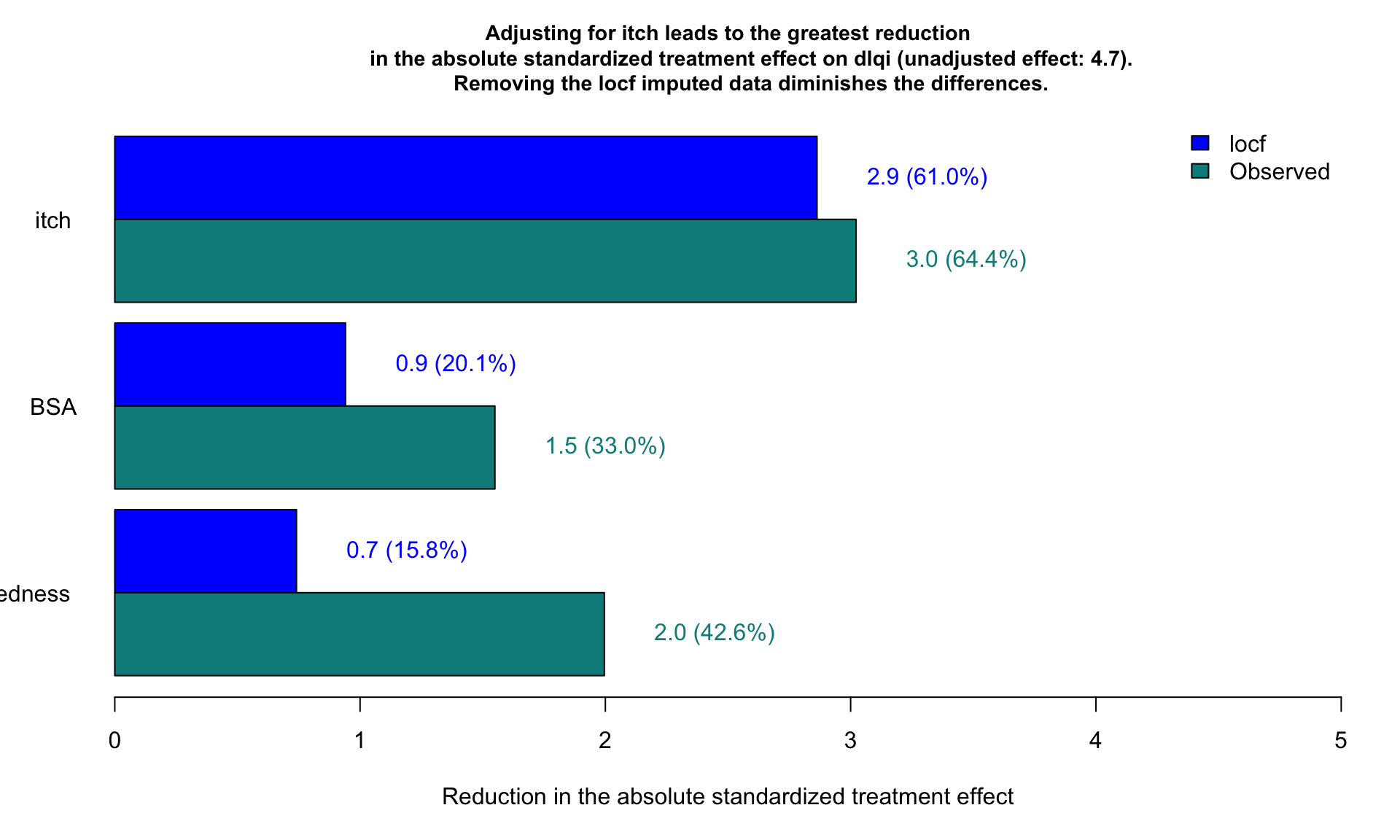

1.4 Barplot to show the reduction in treatment effect on DLQI

This graphic is similar to the first one except it uses a barchart instead of a lollipop chart and it includes a completers analysis. The title explains the results and the reference line for the marginal treatment effect provides a good reference for the reader.

# Read in the data set:

dat <- read.csv("./01_Datasets/mediation_data.csv")

fit <- lm(dlqi ~ trt, data = dat)

t.val.pure <- coef(summary(fit))[2, 3]

t.val.vec <- numeric(3)

j <- 1

for (i in c(2, 4, 6)) {

fit <- lm(dat$dlqi ~ dat[, i] + dat$trt)

t.val.vec[j] <- coef(summary(fit))[3, 3]

j <- j + 1

}

# Redo without imputed data:

t.val.vec.re <- numeric(3)

j <- 1

for (i in c(2, 4, 6)) {

dat.re <- dat[which(dat[, i+1] == F), ]

fit <- lm(dat.re$dlqi ~ dat.re[, i] + dat.re$trt)

t.val.vec.re[j] <- coef(summary(fit))[3, 3]

j <- j + 1

}

# Combine both vectors:

t.vals <- c(t.val.vec.re[3], t.val.vec[3],

t.val.vec.re[2], t.val.vec[2],

t.val.vec.re[1], t.val.vec[1])

# Calculate difference:

t.vals - t.val.pure## [1] 1.9958097 0.7407368 1.5496847 0.9407258 3.0221284 2.8629130# Calculate the difference:

t.val.diff <- t.vals - t.val.pure

t.val.mat <- matrix(t.val.diff, ncol = 3)

fit <- lm(dlqi ~ trt, dat)

t.val.mat.pr <- t.val.mat/abs(coef(summary(fit))[2, 3]) * 100

# png("barplot.png", width = 7, height = 5, res = 300, units = "in")

par(xpd = T, cex.main = 0.9)

barplot(t.val.diff, horiz = T, col = c("darkcyan", "blue"), xlim = c(0, 5),

space = rep(c(0.25, 0), 3),

main = "Adjusting for itch leads to the greatest reduction

in the absolute standardized treatment effect on dlqi (unadjusted effect: 4.7).

Removing the locf imputed data diminishes the differences.",

xlab = "Reduction in the absolute standardized treatment effect")

y.coord <- c(1.25, 3.5, 5.75)

text(-0.25, y.coord[3], "itch")

text(-0.25, y.coord[2], "BSA")

text(-0.35, y.coord[1], "redness")

for (i in 1:nrow(t.val.mat)) {

for (j in 1:ncol(t.val.mat)) {

t.val <- paste0(format(round(t.val.mat[i, j], 1), nsmall = 1), " (",

format(round(t.val.mat.pr[i, j], 1), nsmall = 1), "%)")

text(t.val.mat[i, j] + .45, y.coord[j] + i - 1.5, t.val,

col = c("darkcyan", "blue")[i])

}

}

legend("topright", legend = c("locf", "Observed"), fill = c("blue", "darkcyan"), bty = "n")

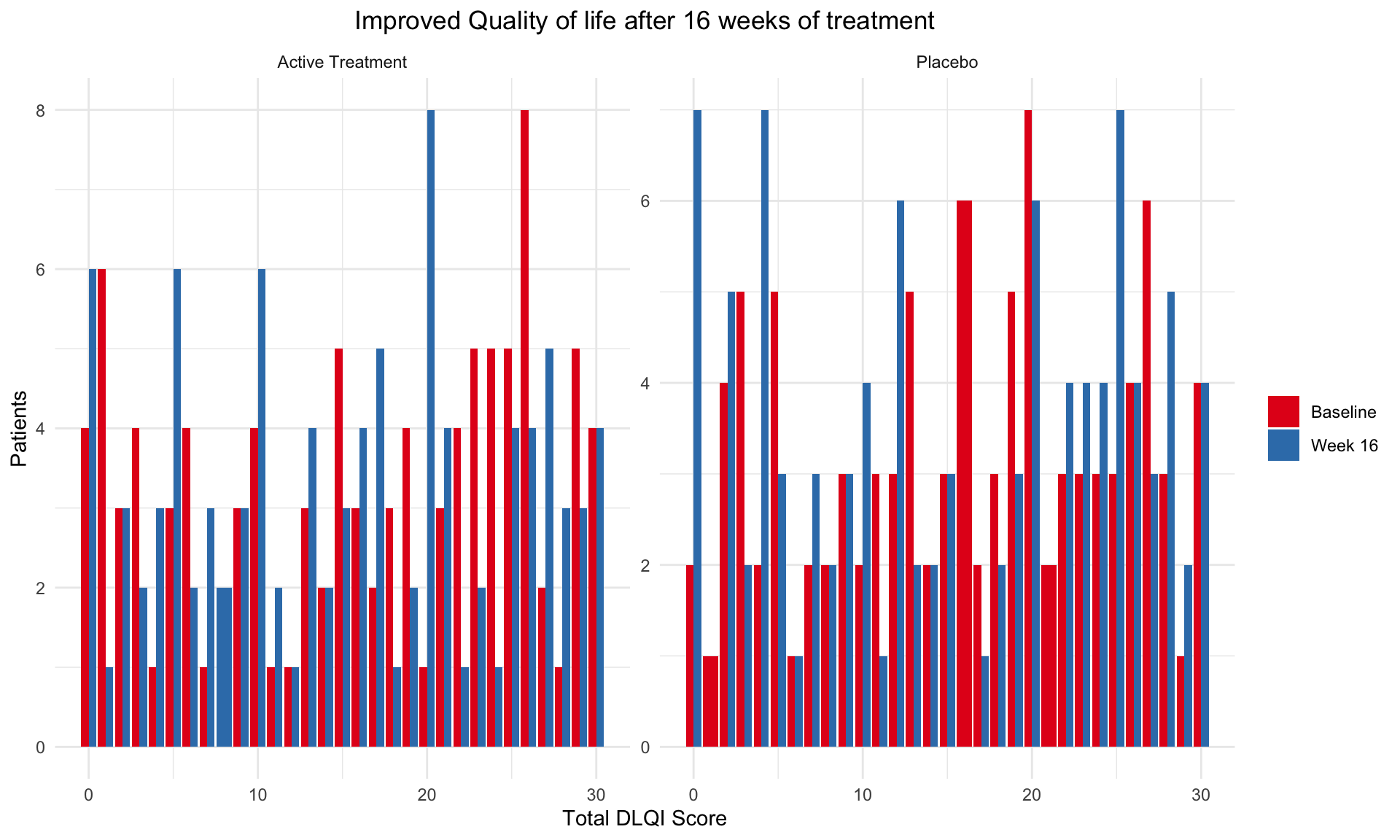

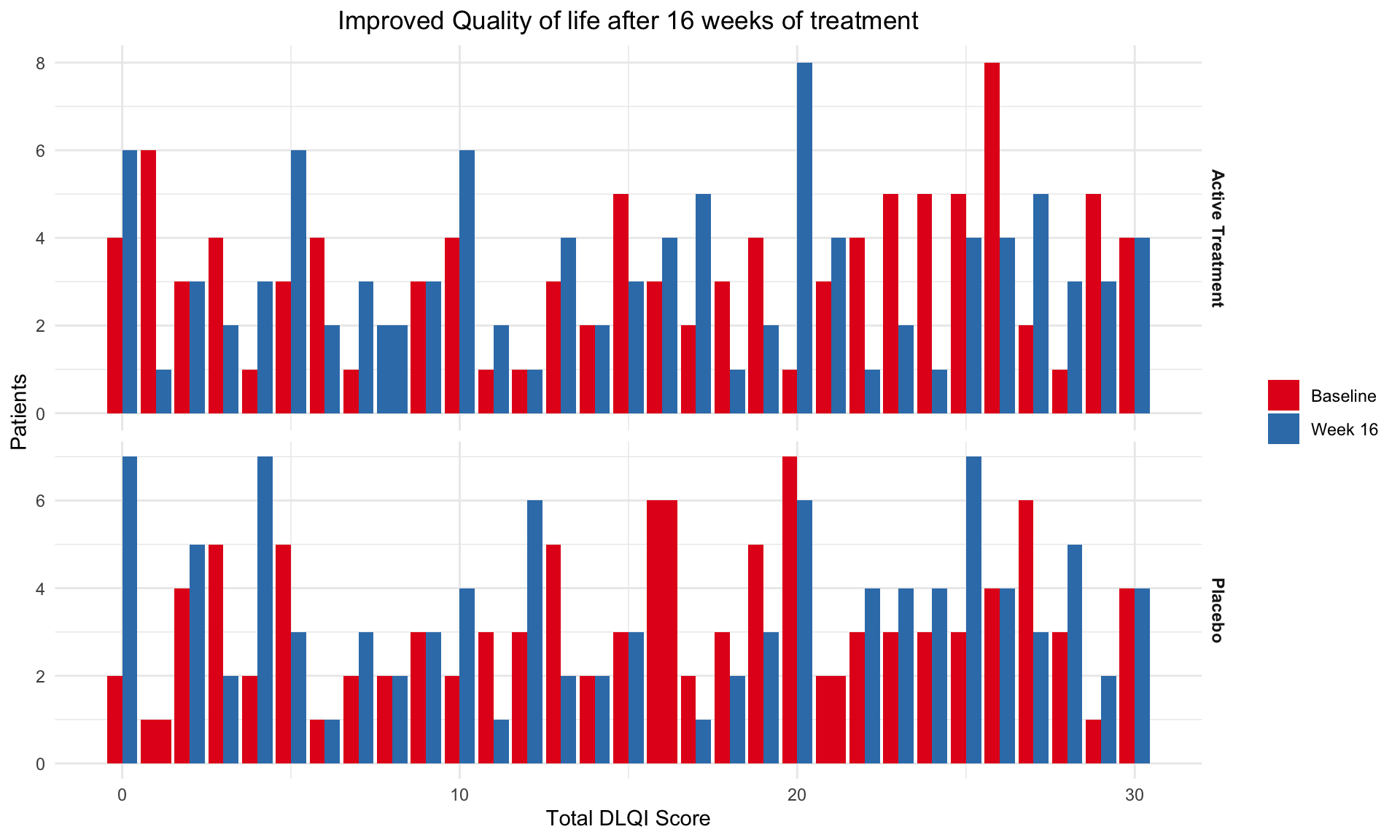

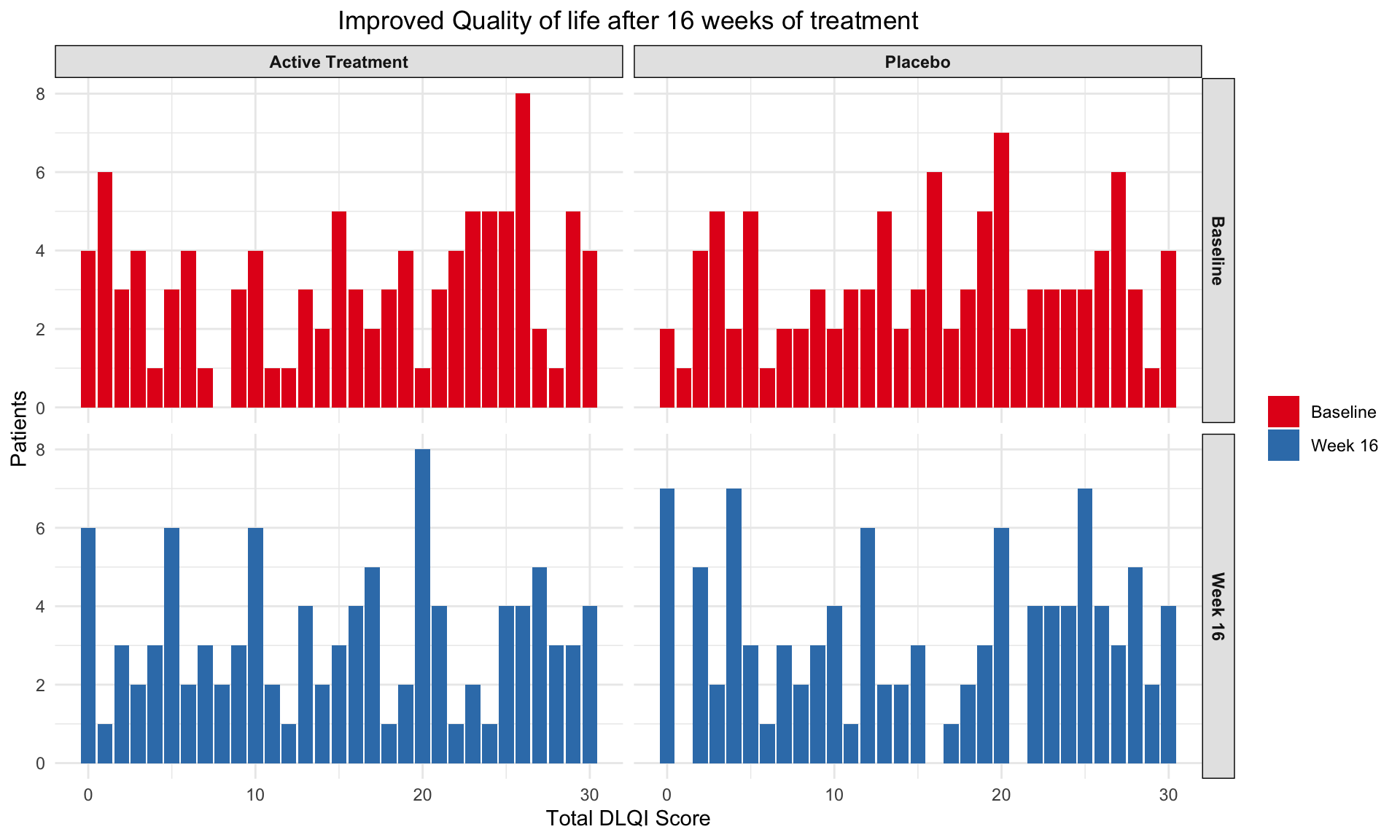

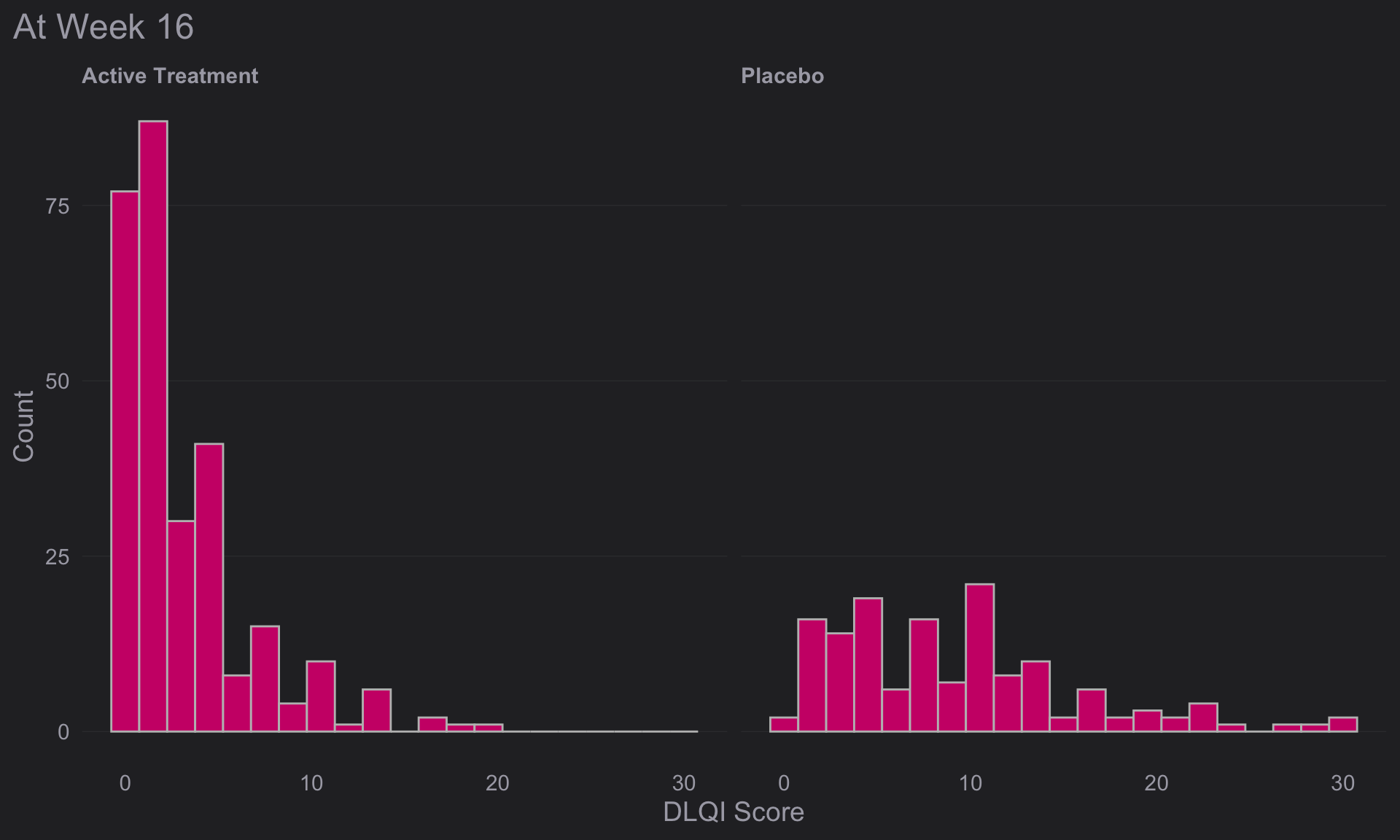

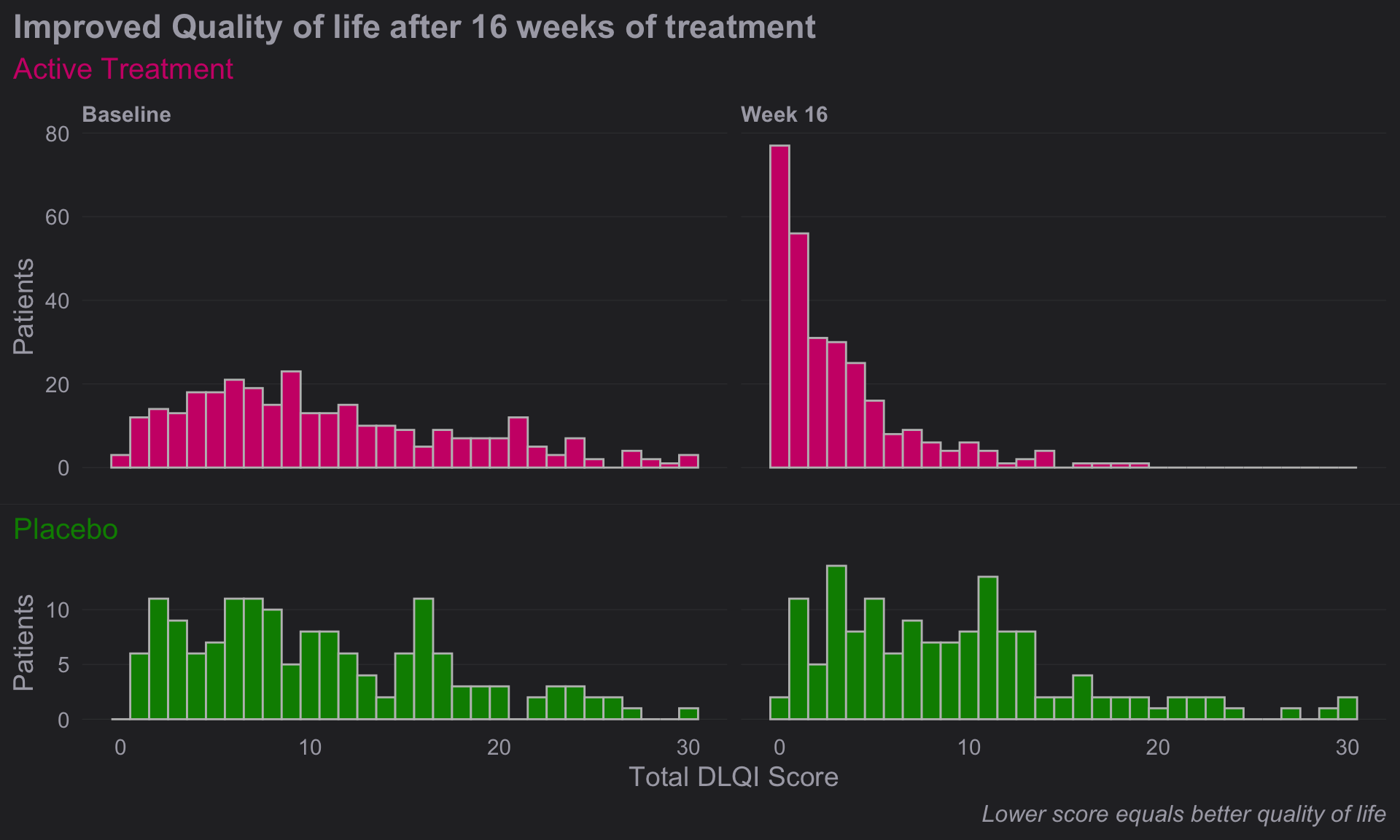

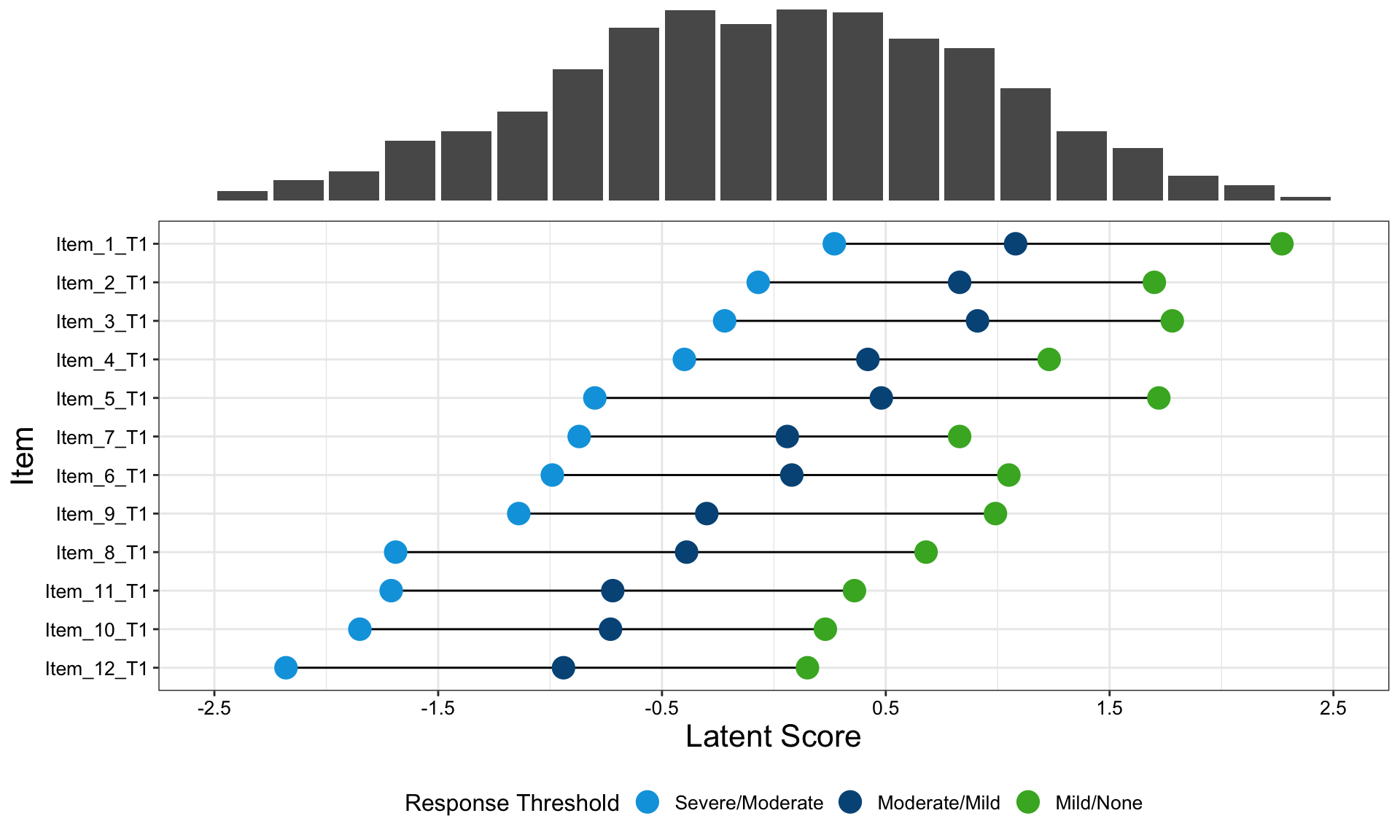

# dev.off()1.5 Histogram

In data analysis, especially when dealing with questionnaire data,

geom_histogram() in ggplot2 can be an

essential tool for visualizing the distribution of responses. Histograms

are particularly effective for showing the frequency of score

occurrences across a range of values, making them ideal for summarizing

the results from questionnaires where responses are often scaled (e.g.,

Likert scales from 1 to 5).

Data Structure Preparation: Your dataset should contain numeric or ordinal scale responses to questionnaire items. Each response is treated as an individual data point.

Plotting with

geom_histogram():- Bin Settings: By default,

geom_histogram()will attempt to create 30 bins of equal width, but for questionnaire data, you might set thebinwidthto 1 if your data are integers representing something like a Likert scale. This will create a bin for each possible score, aligning perfectly with the questionnaire’s scoring system. - Aesthetic Mapping: Map the x-axis to your questionnaire score variable. Optionally, you can fill the bars based on another variable, such as different groups or demographics within your survey population, to compare distributions across categories.

- Position Adjustment: If you are filling based on a

factor (like age group or gender), using

position = "dodge"will place the groups side by side for easier comparison, rather than the default stacking.

- Bin Settings: By default,

Customizations and Improvements:

- Labels and Titles: Adding clear labels for the x-axis and y-axis, as well as a descriptive title, can help in immediately understanding the plot’s purpose. For instance, x could be “Questionnaire Score” and y “Frequency of Responses”.

- Theme Adjustments: Customize the plot appearance

using

theme()to improve readability and presentation quality. For example, adjusting text size, changing the legend position, or modifying background colors.

Statistical Overlays:

- Adding Mean/Mode Lines: You can overlay additional

information such as a vertical line showing the mean or mode of the

distribution using

geom_vline(), which can provide insights into the central tendency of the responses. - Annotations: Annotate specific features of the histogram, like notable peaks or unusual gaps, to draw attention to important aspects of the data.

- Adding Mean/Mode Lines: You can overlay additional

information such as a vertical line showing the mean or mode of the

distribution using

Analysis Interpretation:

- Histograms allow you to quickly grasp the distribution of responses, identify common and outlier responses, and assess the skewness or symmetry of the data.

- By comparing histograms from different demographic groups, you can explore how opinions or behaviors differ across these groups, potentially guiding more detailed statistical tests or reporting insights.

1.5.1 General

data <- data.frame(

Group = c(rep("Active Treatment", 200), rep("Placebo", 200)),

Time = c(rep("Baseline", 100), rep("Week 16", 100), rep("Baseline", 100), rep("Week 16", 100)),

DLQI_Score = c(sample(0:30, 100, replace = TRUE), sample(0:30, 100, replace = TRUE),

sample(0:30, 100, replace = TRUE), sample(0:30, 100, replace = TRUE))

)

# Create the plot

plot1 <- ggplot(data, aes(x = DLQI_Score, fill = Time)) +

geom_histogram(stat = "count", binwidth = 1, position = position_dodge(width = 0.9)) +

facet_wrap(~ Group, scales = "free_y") +

labs(

title = "Improved Quality of life after 16 weeks of treatment",

x = "Total DLQI Score",

y = "Patients"

) +

scale_fill_brewer(palette = "Set1", labels = c("Baseline", "Week 16")) +

theme_minimal() +

theme(

legend.title = element_blank(),

plot.title = element_text(hjust = 0.5)

)

# Print the plot

print(plot1)

plot2 <- ggplot(data, aes(x = DLQI_Score, fill = Time)) +

geom_histogram(stat = "count", binwidth = 1, position = position_dodge(width = 0.9)) +

facet_grid(Group ~ ., scales = "free_y", space = "free_y") +

labs(

title = "Improved Quality of life after 16 weeks of treatment",

x = "Total DLQI Score",

y = "Patients"

) +

scale_fill_brewer(palette = "Set1", labels = c("Baseline", "Week 16")) +

theme_minimal() +

theme(

legend.title = element_blank(),

plot.title = element_text(hjust = 0.5),

strip.background = element_blank(),

strip.text = element_text(face = "bold")

)

# Print the plot

print(plot2)

plot3 <- ggplot(data, aes(x = DLQI_Score, fill = Time)) +

geom_histogram(stat = "count", binwidth = 1, position = position_dodge(width = 0.9)) +

facet_grid(Time ~ Group, scales = "free_y", space = "free") + # Adjust faceting

labs(

title = "Improved Quality of life after 16 weeks of treatment",

x = "Total DLQI Score",

y = "Patients"

) +

scale_fill_brewer(palette = "Set1", labels = c("Baseline", "Week 16")) +

theme_minimal() +

theme(

legend.title = element_blank(),

plot.title = element_text(hjust = 0.5),

strip.background = element_rect(fill = "gray90"),

strip.text = element_text(face = "bold")

)

# Print the plot

print(plot3)

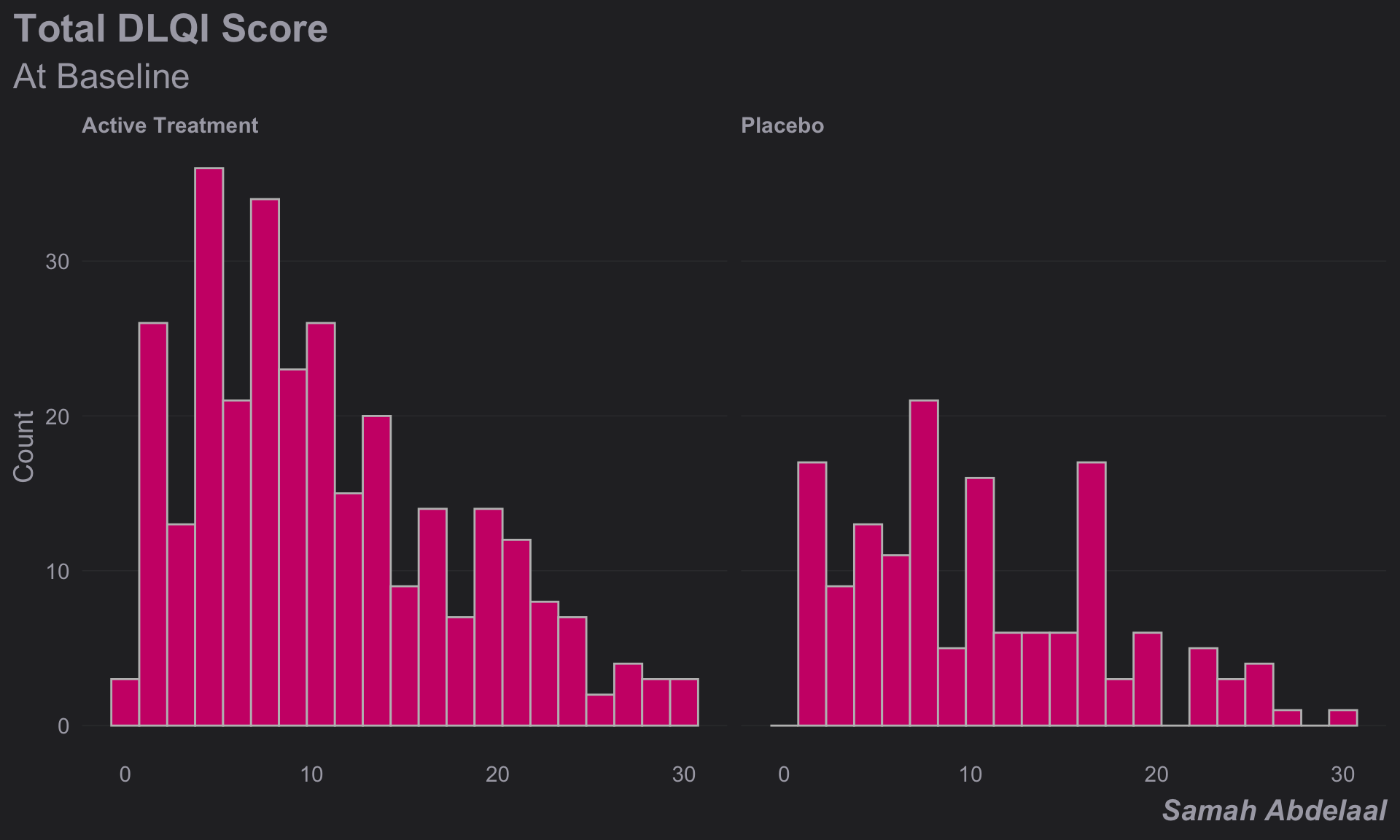

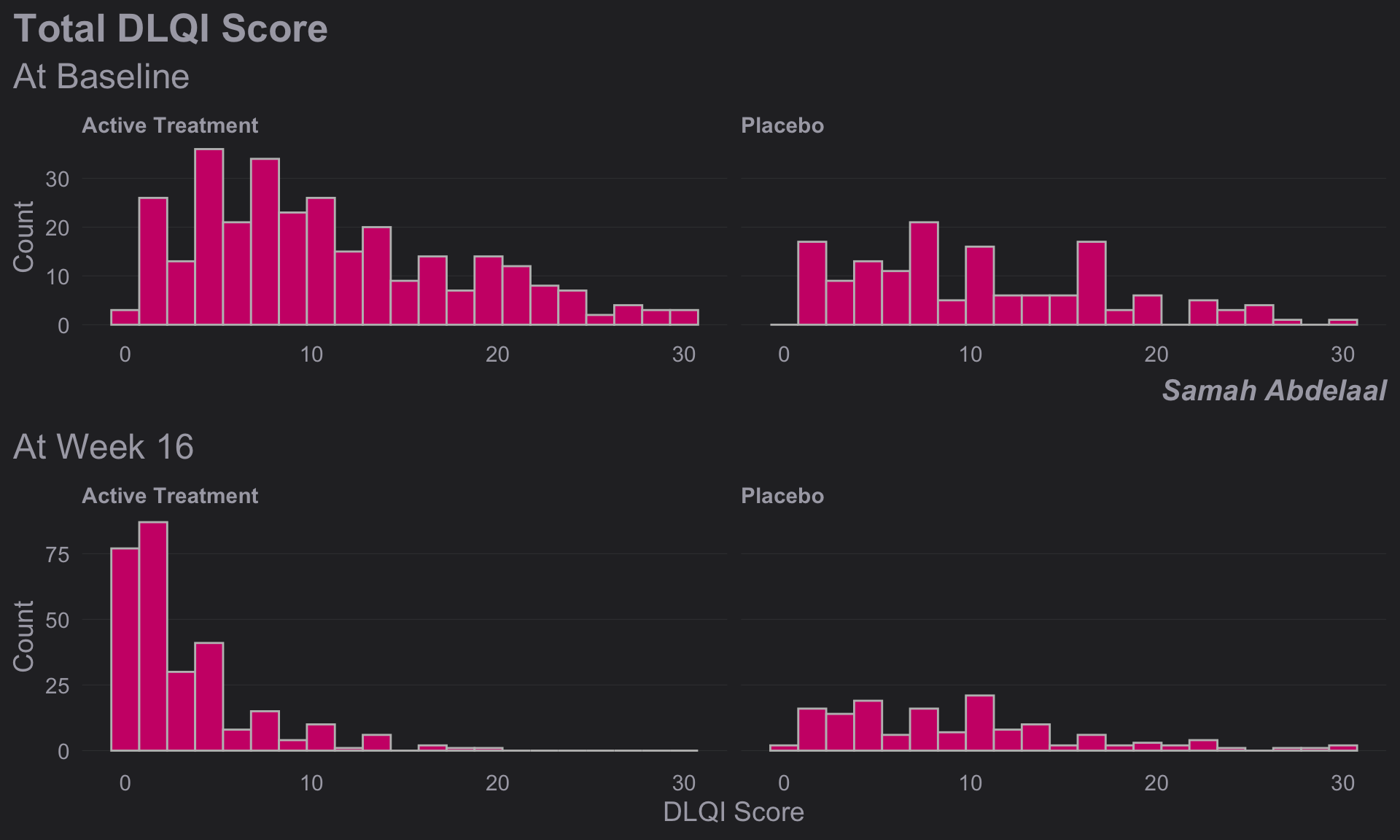

1.5.2 Clean Design 1

dql <- read.csv("./01_Datasets/ww2020_dlqi.csv")

attach(dql)

# View(dql)

# summary(dql)

# Load Library

# library(ggthemes)

# library(ggcharts)

# Select relevant variables

dql_renamed <-

dql %>%

select(

TRT, VISIT, DLQI_SCORE

)

# Rename treatment levels

dql_renamed$TRT[dql_renamed$TRT=="A"] <- "Placebo"

dql_renamed$TRT[dql_renamed$TRT=="B"] <- "Active Treatment"

# Seperate visits

# Baseline visit

totalbaseline <-

dql_renamed %>%

filter(VISIT=="Baseline")

# Construct a histogram for each treatment arm at baseline visit

(d <-

ggplot(

data = totalbaseline,

aes(

x = DLQI_SCORE

))

+ geom_histogram(

binwidth = 1.5,

color = "grey",

fill = "deeppink3"

) +

facet_grid(~ TRT)

+ theme_ng(grid = "X")

+ labs(

x = "DLQI Score",

y = "Count",

title = "Total DLQI Score",

subtitle = "At Baseline",

caption = "Samah Abdelaal")

+ theme(

axis.title.x = element_blank(),

plot.title = element_text(size = 20,

face = "bold"),

plot.subtitle = element_text(size = 18),

plot.caption = element_text(size = 15,

face = "bold.italic")

))

# Week 16 visit

totalweek16 <-

dql_renamed %>%

filter(VISIT=="Week 16")

(e <-

ggplot(

data = totalweek16,

aes(

x = DLQI_SCORE

)

)

+ geom_histogram(

binwidth = 1.5,

color = "grey",

fill = "deeppink3"

) +

facet_grid(~ TRT)

+ theme_ng(grid = "X")

+ labs(

x = "DLQI Score",

y = "Count",

subtitle = "At Week 16"

) +

theme(

plot.subtitle = element_text(size = 18)

))

# Compine plots

library(gridExtra)

gridExtra::grid.arrange(d, e, nrow = 2)

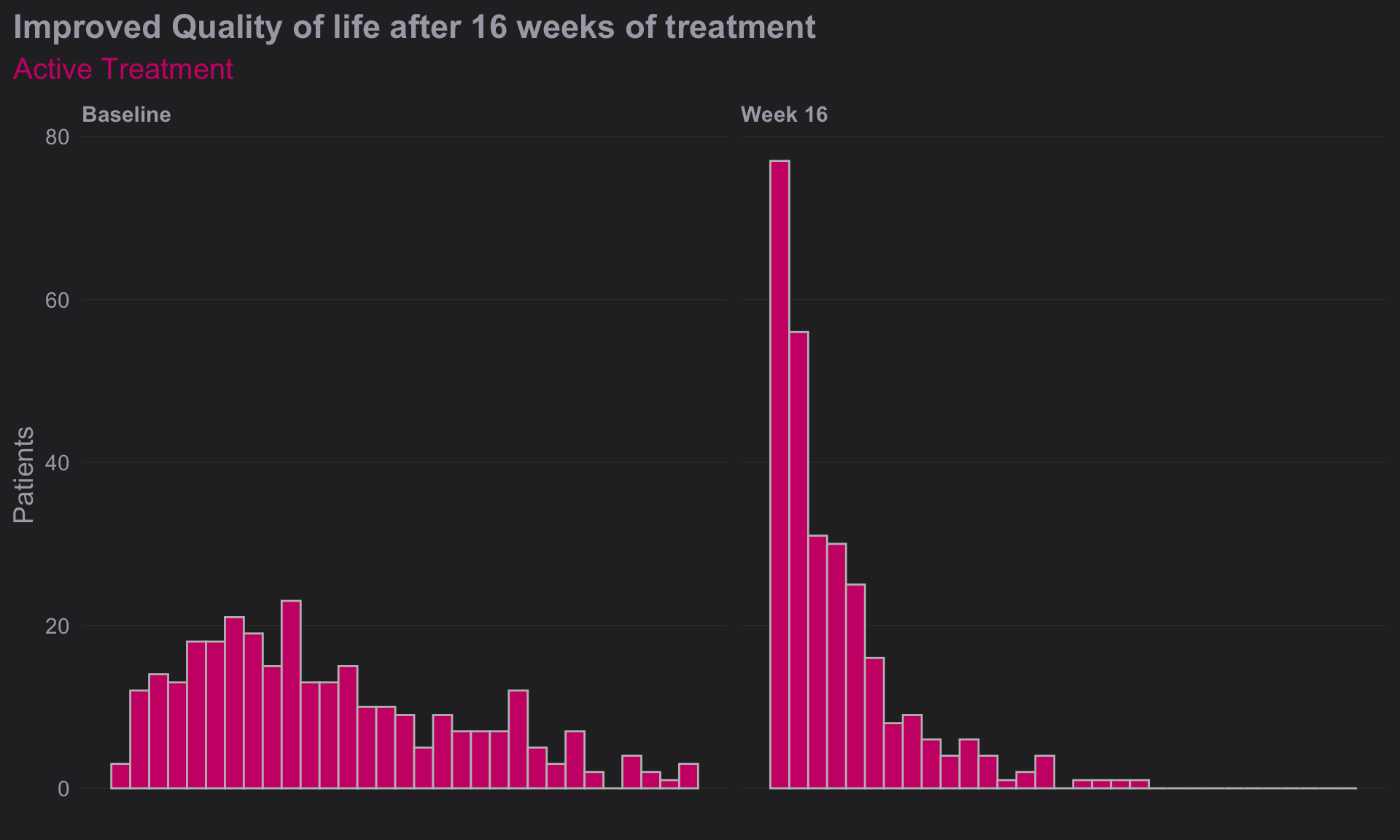

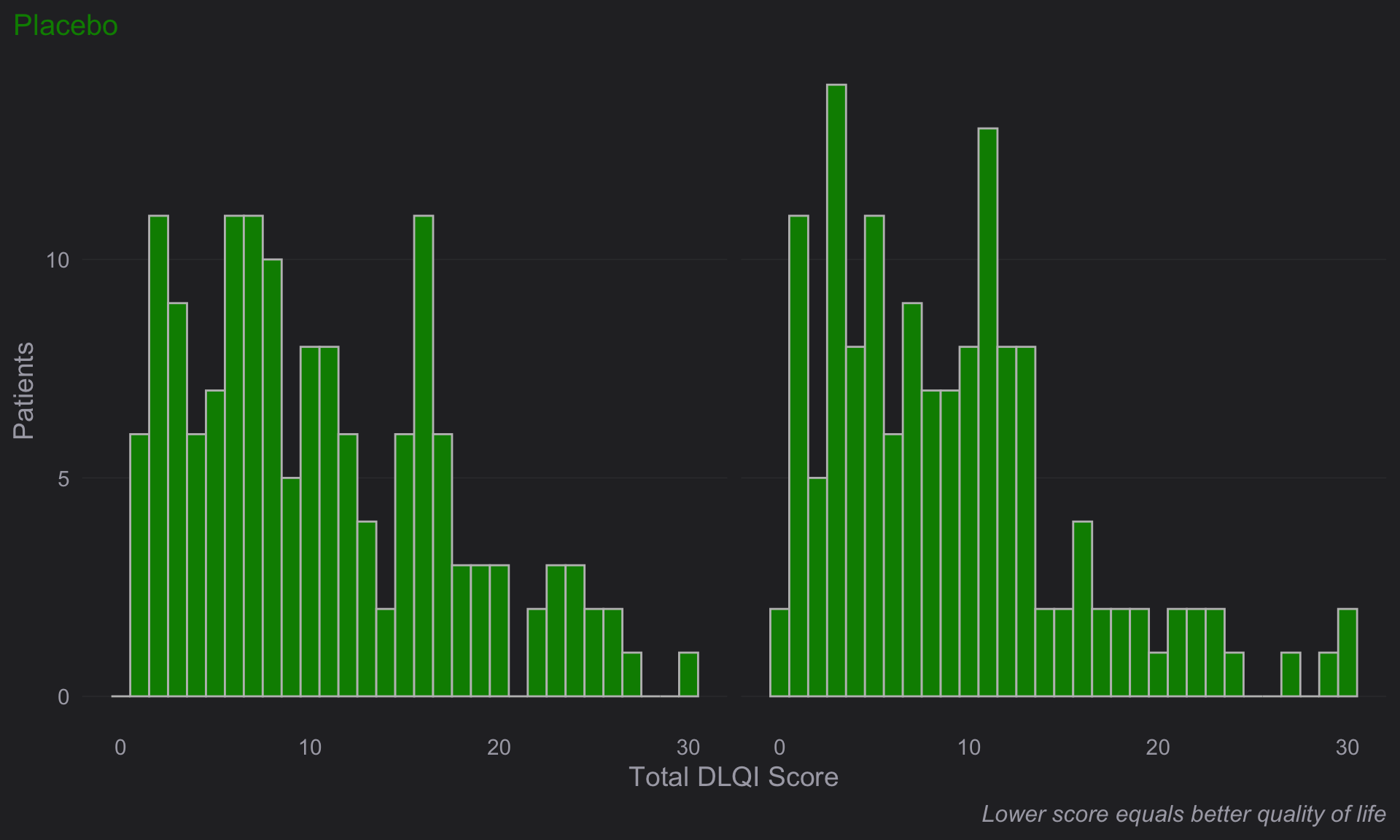

1.5.3 Clean Design 2

dql <- read.csv("./01_Datasets/ww2020_dlqi.csv")

attach(dql)

# View(dql)

# summary(dql)

# Load Library

# library(tidyverse)

# library(ggplot2)

# library(ggthemes)

# library(ggcharts)

# Select relevant variables

dql_renamed <-

dql %>%

select(

TRT, VISIT, DLQI_SCORE

)

# Rename treatment levels

dql_renamed$TRT[dql_renamed$TRT=="A"] <- "Placebo"

dql_renamed$TRT[dql_renamed$TRT=="B"] <- "Active Treatment"

# Seperate treatments

# Active

totalB <-

dql_renamed %>%

filter(TRT=="Active Treatment")

# Construct a histogram for each treatment arm at baseline visit

(d <-

ggplot(

data = totalB,

aes(

x = DLQI_SCORE

))

+ geom_histogram(

binwidth = 1,

color = "grey",

fill = "deeppink3"

) +

facet_grid(~ VISIT)

+ theme_ng(grid = "X")

+ labs(

x = "Total DLQI Score",

y = "Patients",

title = "Improved Quality of life after 16 weeks of treatment",

subtitle = "Active Treatment")

+ theme(

axis.title.x = element_blank(),

axis.text.x = element_blank(),

plot.title = element_text(size = 17,

face = "bold"),

plot.subtitle = element_text(size = 15, color = "deeppink3")

))

# Week 16 visit

totalA <-

dql_renamed %>%

filter(TRT=="Placebo")

(e <-

ggplot(

data = totalA,

aes(

x = DLQI_SCORE

)

)

+ geom_histogram(

binwidth = 1,

color = "grey",

fill = "green4"

) +

facet_grid(~ VISIT)

+ theme_ng(grid = "X")

+ labs(

x = "Total DLQI Score",

y = "Patients",

subtitle = "Placebo",

caption = "Lower score equals better quality of life"

) +

theme(

strip.text.x = element_blank(),

plot.subtitle = element_text(size = 15, color = "green4"),

plot.caption = element_text(size = 12,

face = "italic")

))

# Compine plots

# library(gridExtra)

gridExtra::grid.arrange(d, e, nrow = 2, heights = c(1.5,1))

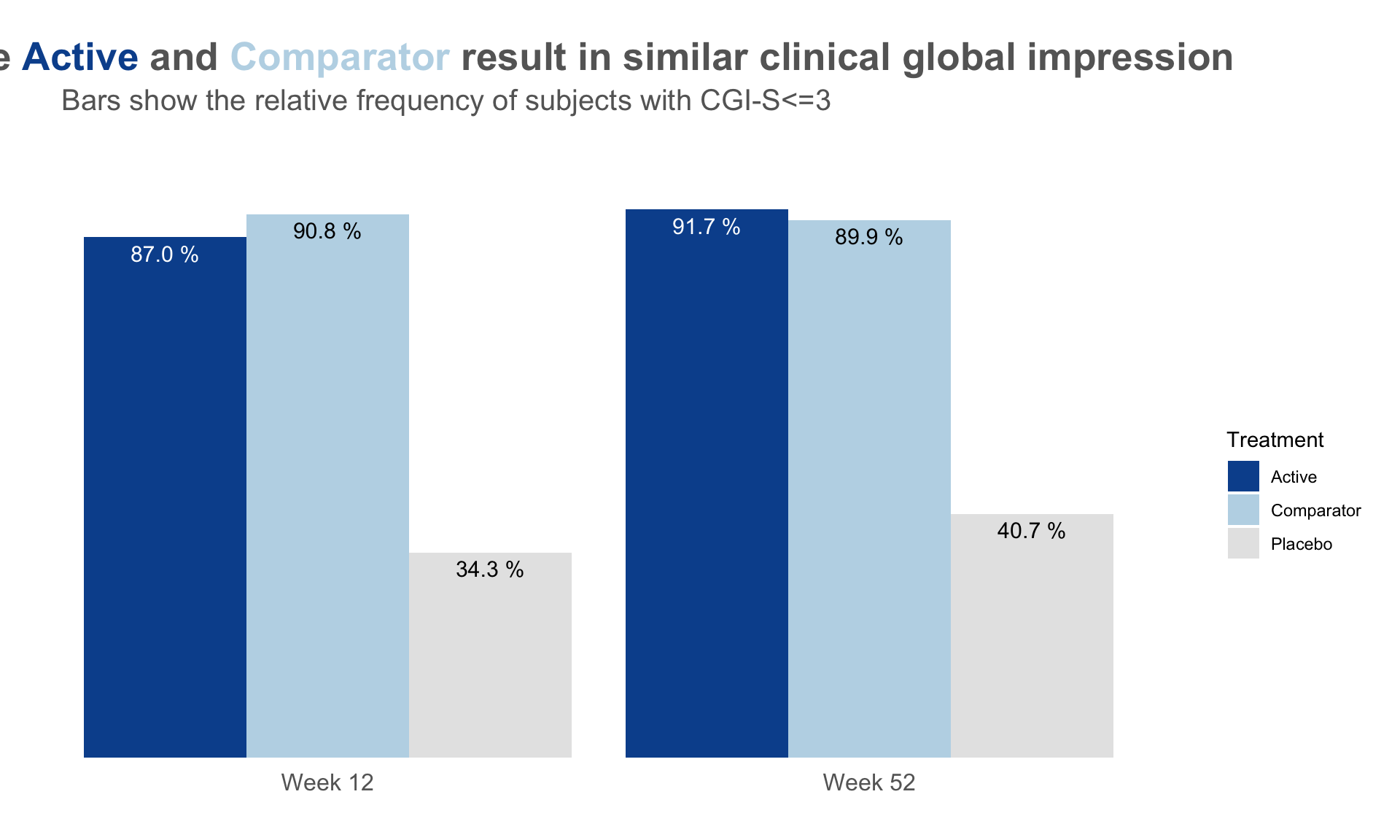

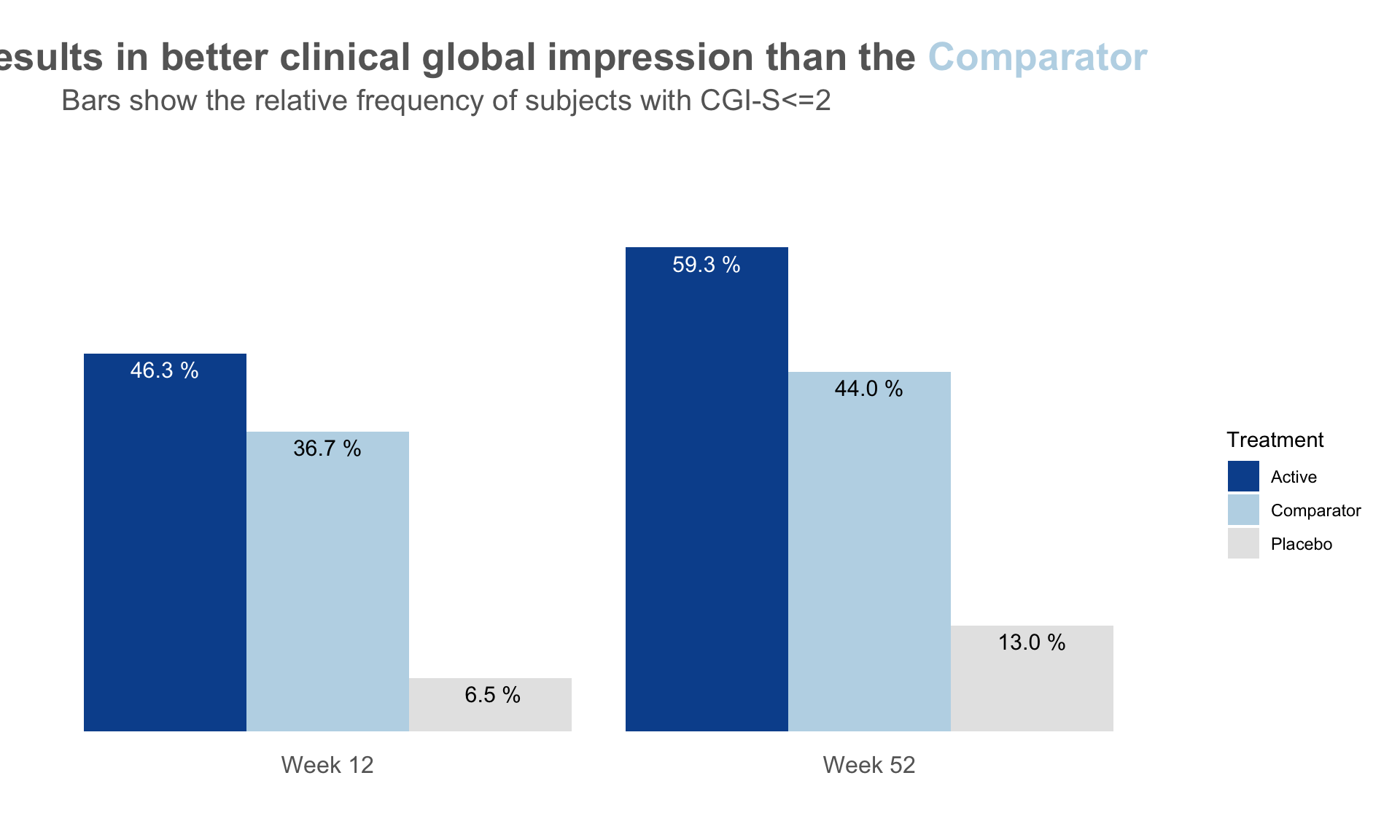

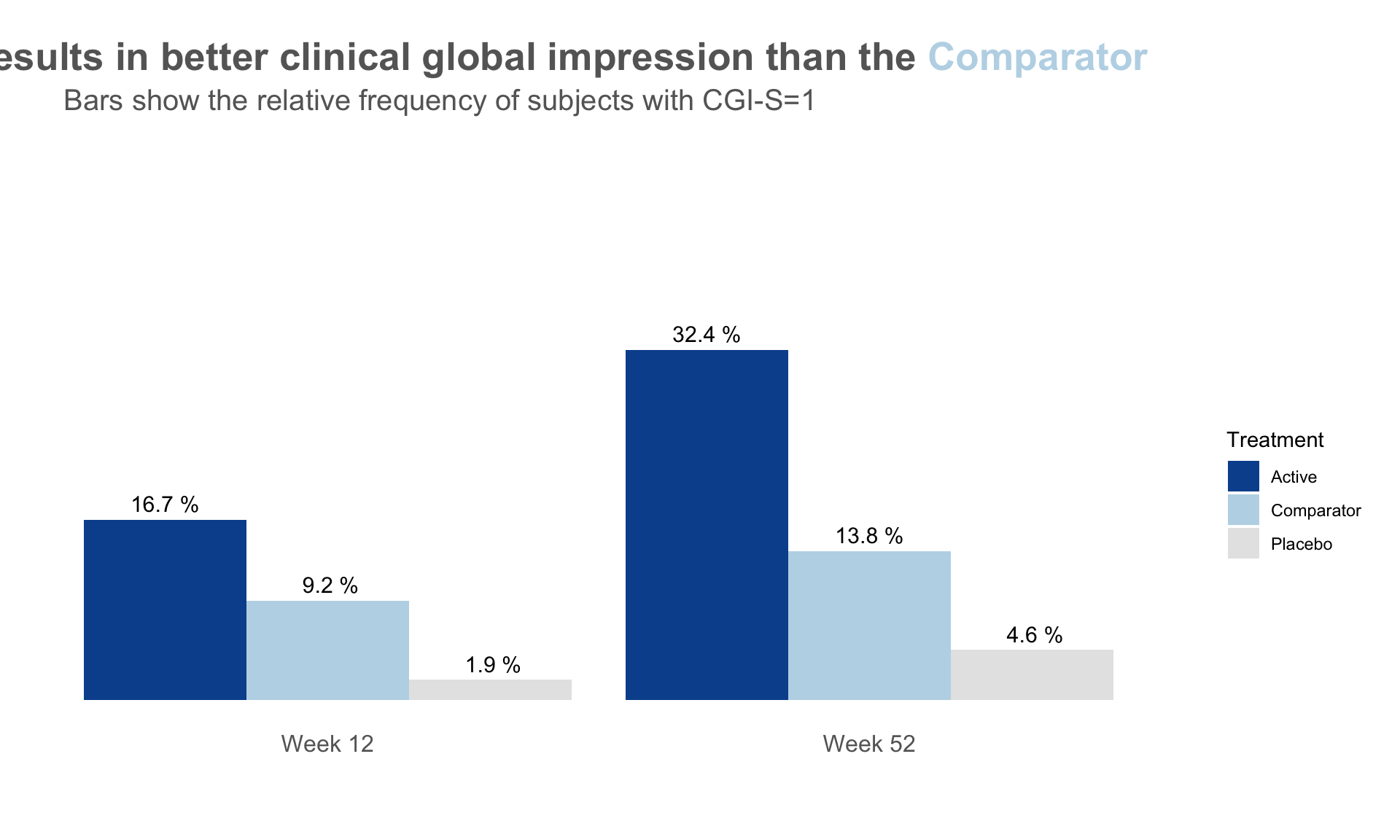

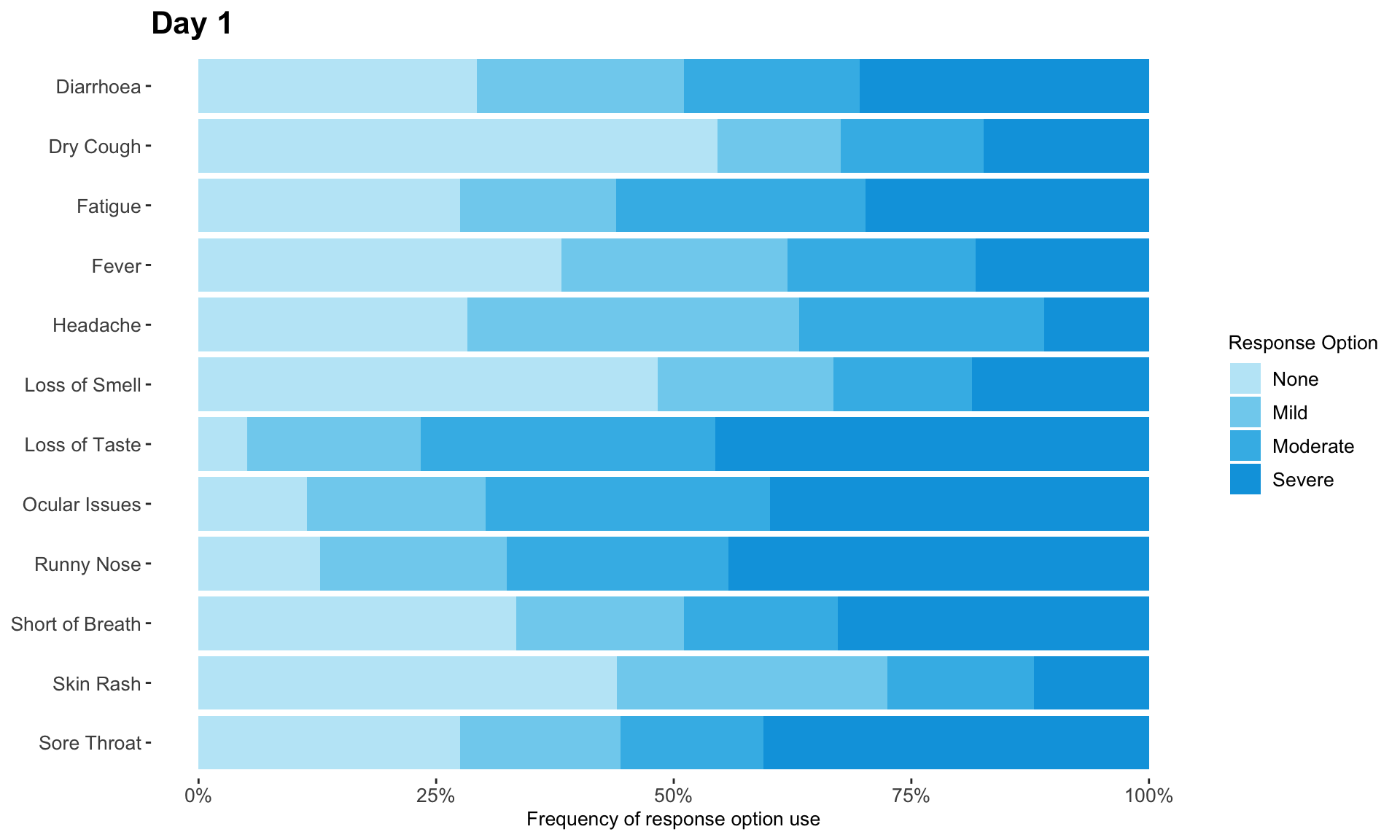

2 CGI-S

2.1 Barplot for

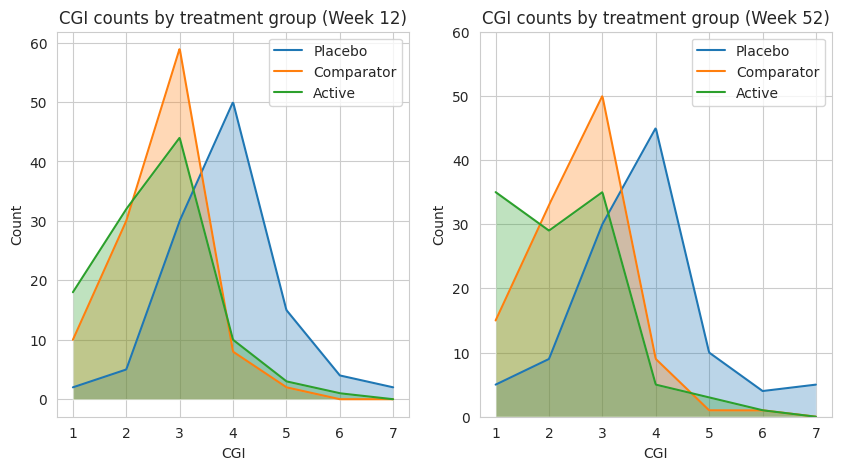

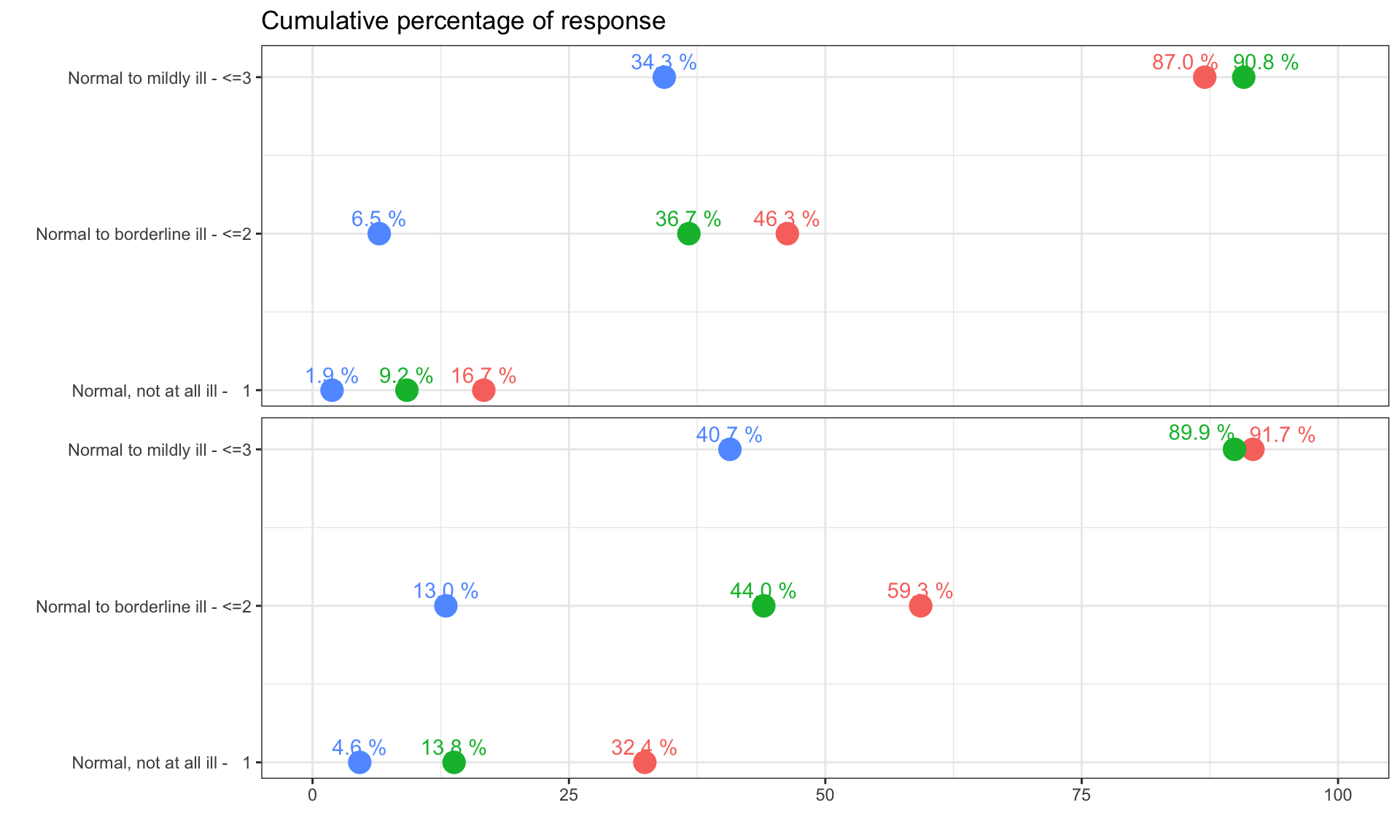

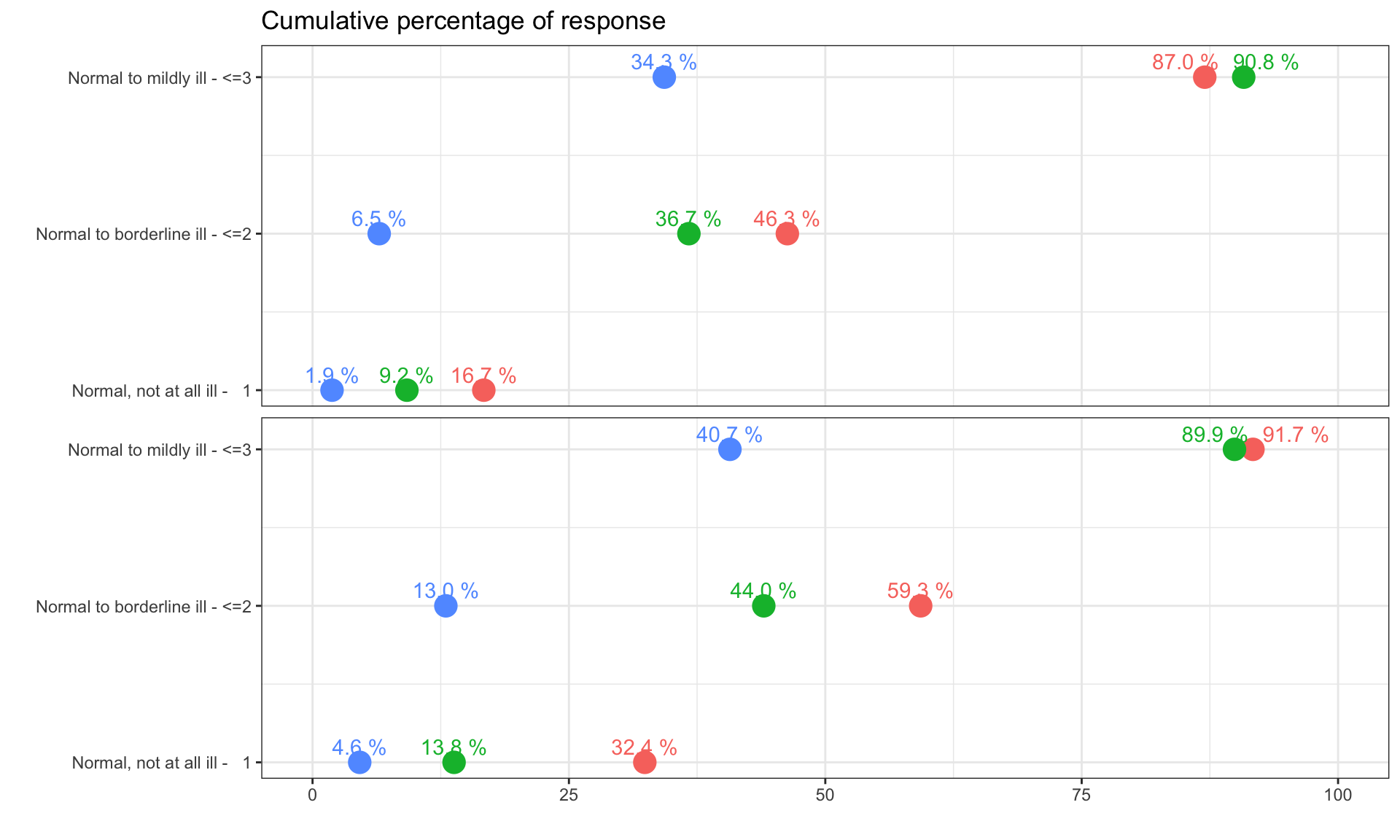

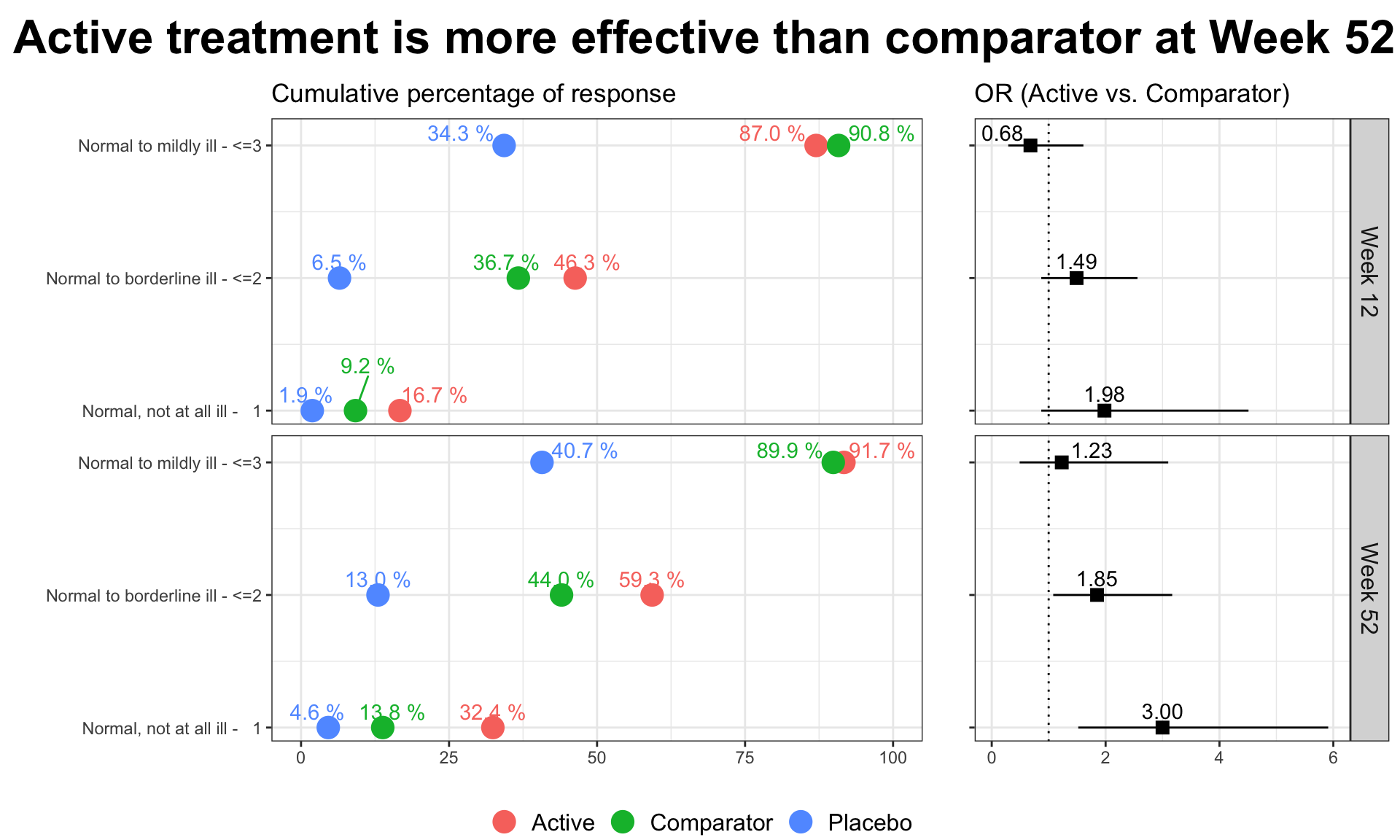

The clinical global impression – severity scale (CGI-S) is a 7-point scale that requires the clinician to rate the severity of the patient’s illness at the time of assessment, relative to the clinician’s past experience with patients who have the same diagnosis. The challenge was to provide data visualisations to show this data and also to provide comparisons between the different groups (e.g. based on response differences or odds ratios for the different response categories) using Clinical Global Impression Data.

plot.fun <- function(dat, name, v.just = 1.5, gci.s = "<=3", y.max = 100,

title.text = "The <span style = 'color: #08519C'>Active</span> and

<span style = 'color: #BDD7E7'>Comparator</span> result in similar clinical global impression",

col.ann = c(rep(c("black", "black", "white"), 2)),

title.h.just = 0.6) {

require(ggplot2)

require(ggtext)

require(RColorBrewer)

ggplot(dat, aes(y=Value, x=VISITNUM, fill=Treatment)) +

geom_bar(position="dodge", stat="identity") +

ylab("") + xlab ("") +

ylim(-12, y.max) +

theme(panel.grid.major = element_blank(), panel.grid.minor = element_blank(),

panel.background = element_blank(), axis.line = element_blank(),

axis.ticks = element_blank(),# axis.text = element_text(size = 12),

axis.text.x = element_blank(),

axis.text.y = element_blank(),

plot.subtitle = element_text(size = 15, color = "grey40", hjust = 0.14),

plot.caption = element_text(color = "grey60", size = 12, hjust = 0.85),

plot.title = element_markdown(color = "grey40", size = 20,

face = "bold", hjust = title.h.just),

plot.margin = margin(0.3, 0.2, -0.38, -0.2, "in")) +

annotate("text", x=1, y=-4, label= "Week 12", size = 4.25, color = "grey40") +

annotate("text", x=2, y=-4, label= "Week 52", size = 4.25, color = "grey40") +

# annotate("text", x=3, y=-4, label= "Week 24", size = 4.25, color = "grey40") +

# annotate("text", x=2.27, y=-12,

# label= "Good glycemic control is defined as Glucose values within a range of 72 and 140 mg/dL.",

# size = 3.5, color = "grey60") +

scale_fill_manual(breaks = c("Active", "Comparator", "Placebo"),

values = c(brewer.pal(n = 5, name = "Blues")[c(5, 2)], "grey90")) +

geom_text(aes(label=val.t), vjust = v.just, size = 4, position = position_dodge(.9),

col = col.ann) +

labs(title = title.text,

subtitle = paste0("Bars show the relative frequency of subjects with CGI-S", gci.s))#,

# caption = "Good glycemic control is defined as Glucose values within a range of 72 and 140 mg/dL.")

# ggsave(name, width = 12, height = 6, units = "in", dpi = 150)

}

dat <- read.csv("./01_Datasets/CGI_S_3_groups_csv.csv")

dat$X1 <- (dat$X1 + dat$X2 + dat$X3) / dat$Total.sample.size * 100

# dat$X1 <- (dat$X1) / dat$Total.sample.size * 100

dat <- dat[, 1:3]

names(dat) <- c("VISITNUM", "Treatment", "Value")

dat$val.t <- paste(format(round(dat$Value, 1), nsmall = 1), "%")

dat$VISITNUM <- as.factor(dat$VISITNUM)

plot.fun(dat, "barplot_3.png")

dat <- read.csv("./01_Datasets/CGI_S_3_groups_csv.csv")

dat$X1 <- (dat$X1 + dat$X2) / dat$Total.sample.size * 100

# dat$X1 <- (dat$X1) / dat$Total.sample.size * 100

dat <- dat[, 1:3]

names(dat) <- c("VISITNUM", "Treatment", "Value")

dat$val.t <- paste(format(round(dat$Value, 1), nsmall = 1), "%")

dat$VISITNUM <- as.factor(dat$VISITNUM)

plot.fun(dat, "barplot_2.png", gci.s = "<=2", y.max = 70,

title.text = "The <span style = 'color: #08519C'>Active</span> results

in better clinical global impression than the

<span style = 'color: #BDD7E7'>Comparator</span>",

title.h.just = 1.25)

dat <- read.csv("./01_Datasets/CGI_S_3_groups_csv.csv")

dat$X1 <- (dat$X1) / dat$Total.sample.size * 100

# dat$X1 <- (dat$X1) / dat$Total.sample.size * 100

dat <- dat[, 1:3]

names(dat) <- c("VISITNUM", "Treatment", "Value")

dat$val.t <- paste(format(round(dat$Value, 1), nsmall = 1), "%")

dat$VISITNUM <- as.factor(dat$VISITNUM)

plot.fun(dat, "barplot_1.png", v.just = -0.5, gci.s = "=1", y.max = 50,

title.text = "The <span style = 'color: #08519C'>Active</span> results

in better clinical global impression than the

<span style = 'color: #BDD7E7'>Comparator</span>",

col.ann = c(rep(c("black", "black", "black"), 2)),

title.h.just = 1.25)

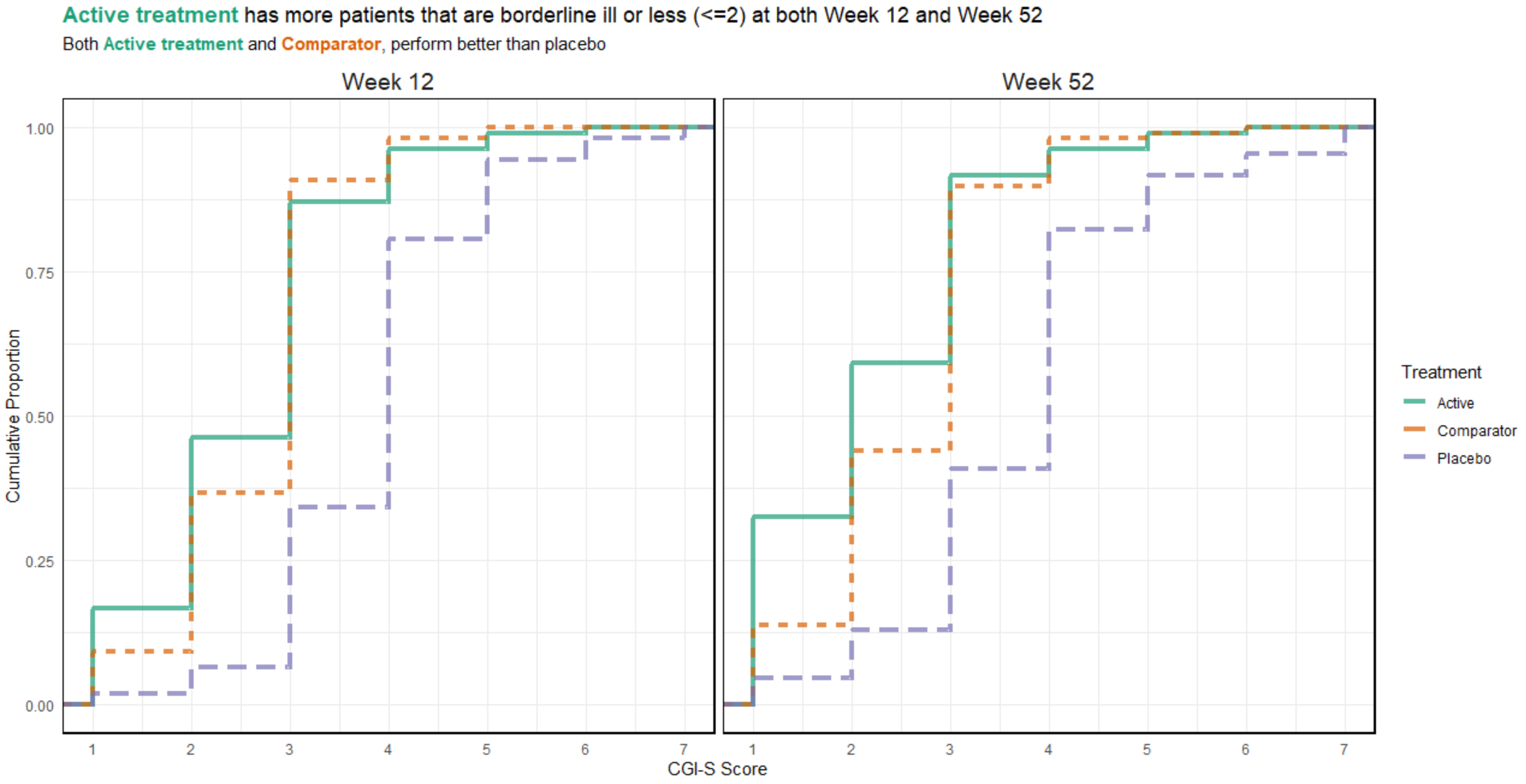

3 CGI-I

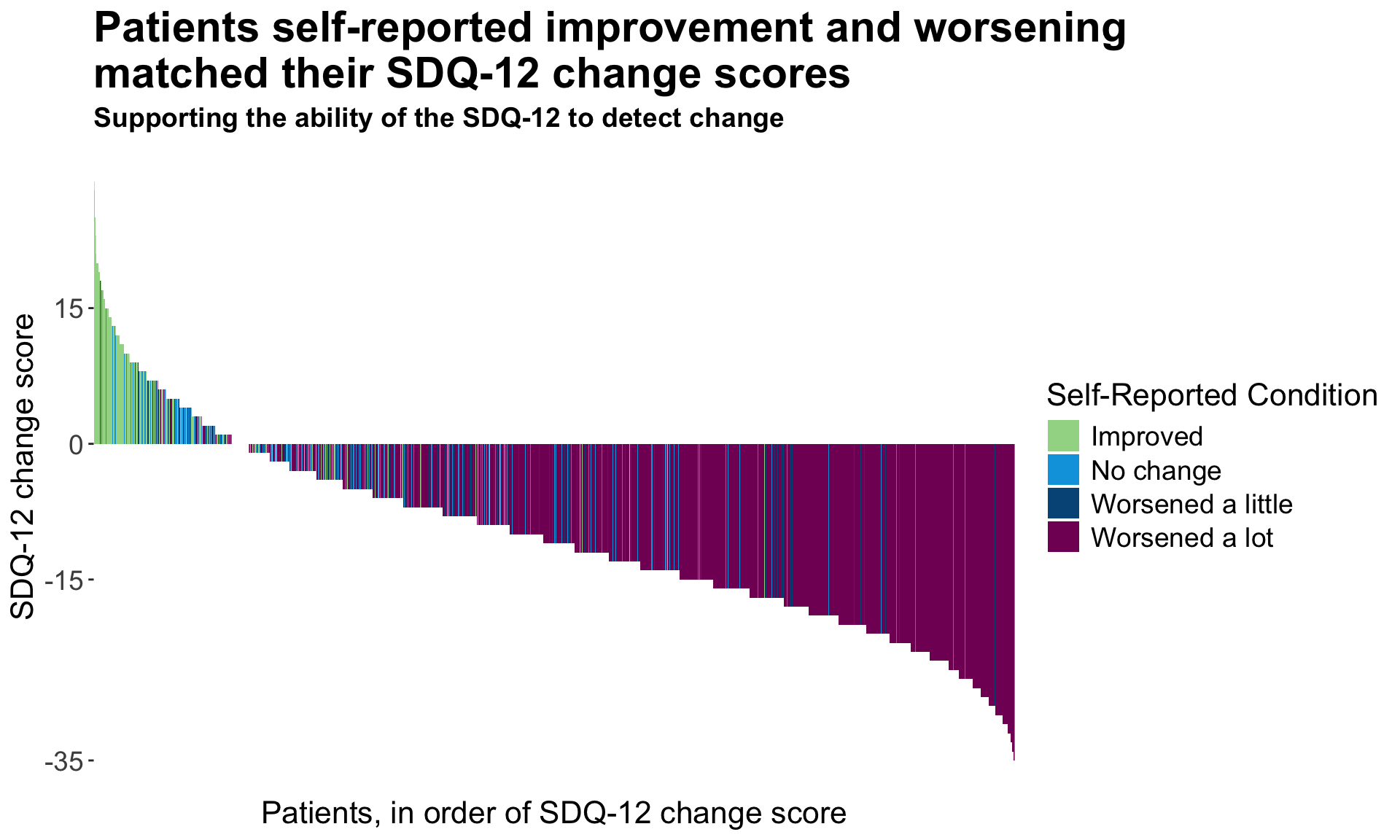

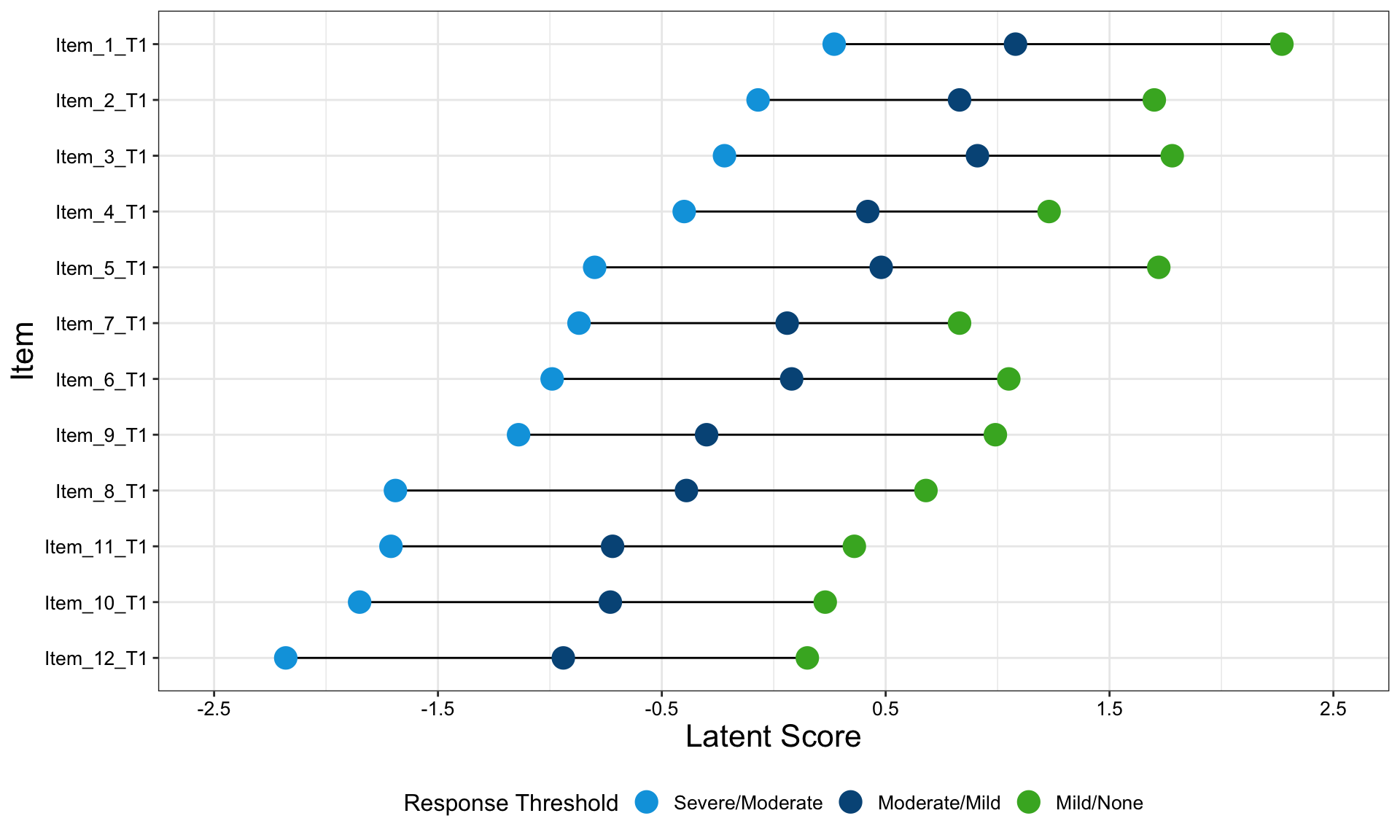

The Clinical Global Impressions-Improvement (CGI-I) scale is a widely used tool in clinical trials and practice to evaluate the overall change in a patient’s clinical condition over time, as perceived by the clinician. It is often used in conjunction with patient-reported outcomes (PROs) to assess the relevance and clinical significance of observed changes.

- Purpose:

- The CGI-I measures the degree of improvement (or worsening) in a patient’s condition compared to baseline, from the clinician’s perspective.

- It provides an overall, global impression, considering all available information, including clinical observations and patient-reported experiences.

- Scale:

- CGI-I is typically a 7-point scale, where:

- 1 = Very much improved

- 2 = Much improved

- 3 = Minimally improved

- 4 = No change

- 5 = Minimally worse

- 6 = Much worse

- 7 = Very much worse

- CGI-I is typically a 7-point scale, where:

- Relation to Clinically Meaningful Differences:

- Clinically meaningful differences help define thresholds where changes in outcomes are considered significant or relevant to patients and clinicians.

- By comparing changes in PROs (like pain scores or quality of life measures) to the CGI-I ratings, researchers can determine what magnitude of change on the PRO corresponds to meaningful improvement in the clinician’s eyes.

- For instance, if a 4-point decrease in a symptom severity score consistently aligns with a “Much improved” (rating of 2) on the CGI-I, this threshold can guide interpretation of treatment effects.

- Utility in Research:

- Anchor-Based Methodology: CGI-I often serves as an anchor in defining clinically meaningful changes in PROs because it reflects a holistic and intuitive judgment from the clinician.

- Validation: PRO instruments may be validated by examining how well their scores correlate with CGI-I ratings.

3.1 Line graphs

3.2 Stacked Bar Chart

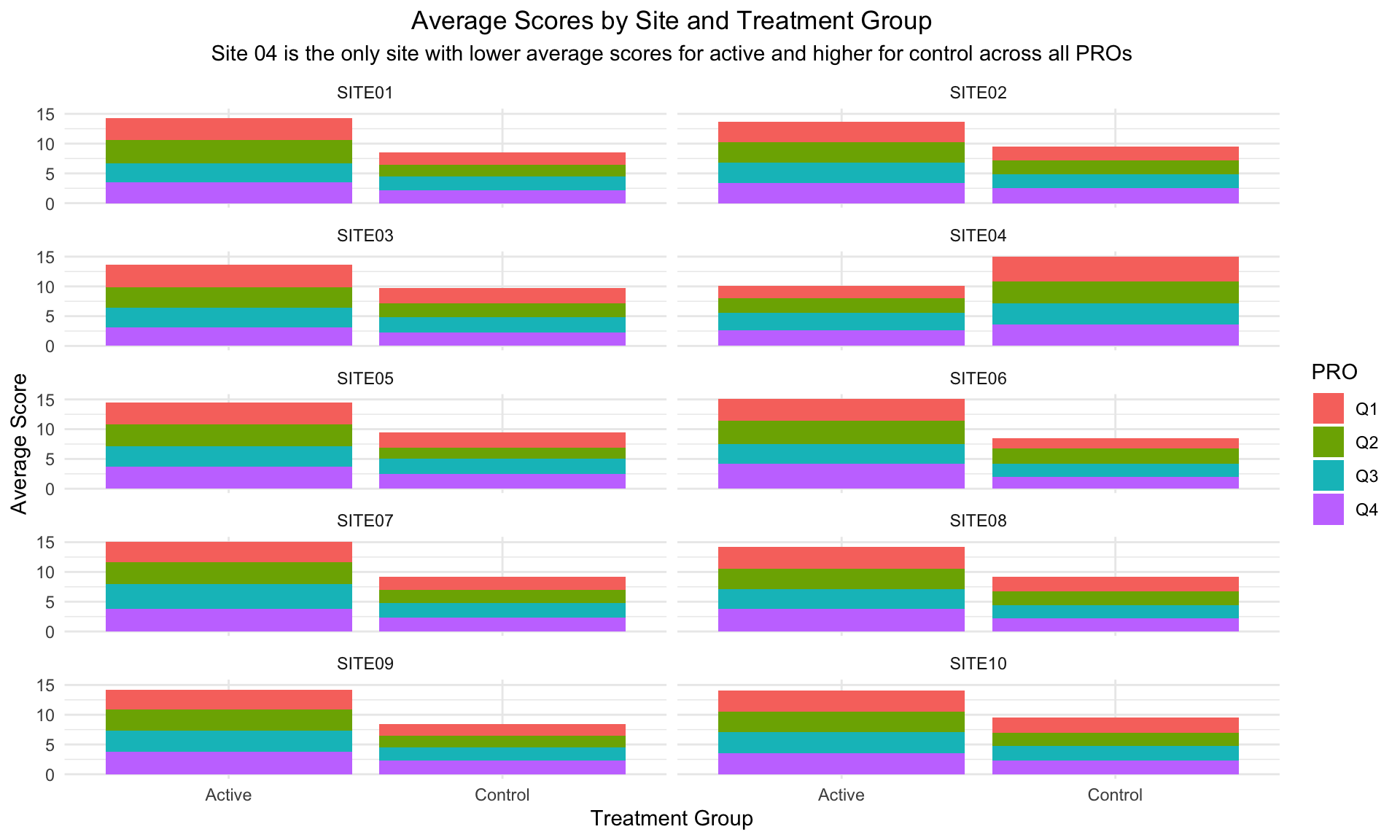

Annotation (circling Site 4 in red) and sorting (Site 4 in the upper left hand corner) to make clear that Site 4 is a visual outlier. The visual idiom is a stacked bar chart, one color for each questionnaire. Stacked barcharts are limited when comparing any of the components except the one on the bottom. For the purpose of this data viz challenge, it was only necessary to compare the totals, ie the heights of the stacked bars. This is easy to do for the stacked bars from a visual perception point of view. But the colors for the different components (Q1, Q2, Q3 and Q4) can be distracting.

data <- read.csv("./01_Datasets/PROdata.csv")

# Convert data to long format for easier plotting with ggplot2

data_long <- data %>%

pivot_longer(cols = starts_with("Q"), names_to = "Question", values_to = "Score")

# Calculate average scores by SITE, TRT, and Question

avg_scores <- data_long %>%

group_by(SITE, TRT, Question) %>%

summarise(Average_Score = mean(Score, na.rm = TRUE)) %>%

ungroup()

# Plot

ggplot(avg_scores, aes(x = TRT, y = Average_Score, fill = Question)) +

geom_bar(stat = "identity", position = "stack") +

facet_wrap(~ SITE, ncol = 2) +

labs(

title = "Average Scores by Site and Treatment Group",

subtitle = "Site 04 is the only site with lower average scores for active and higher for control across all PROs",

x = "Treatment Group",

y = "Average Score",

fill = "PRO"

) +

theme_minimal() +

theme(

plot.title = element_text(hjust = 0.5),

plot.subtitle = element_text(hjust = 0.5)

)

3.3 Slope Plots

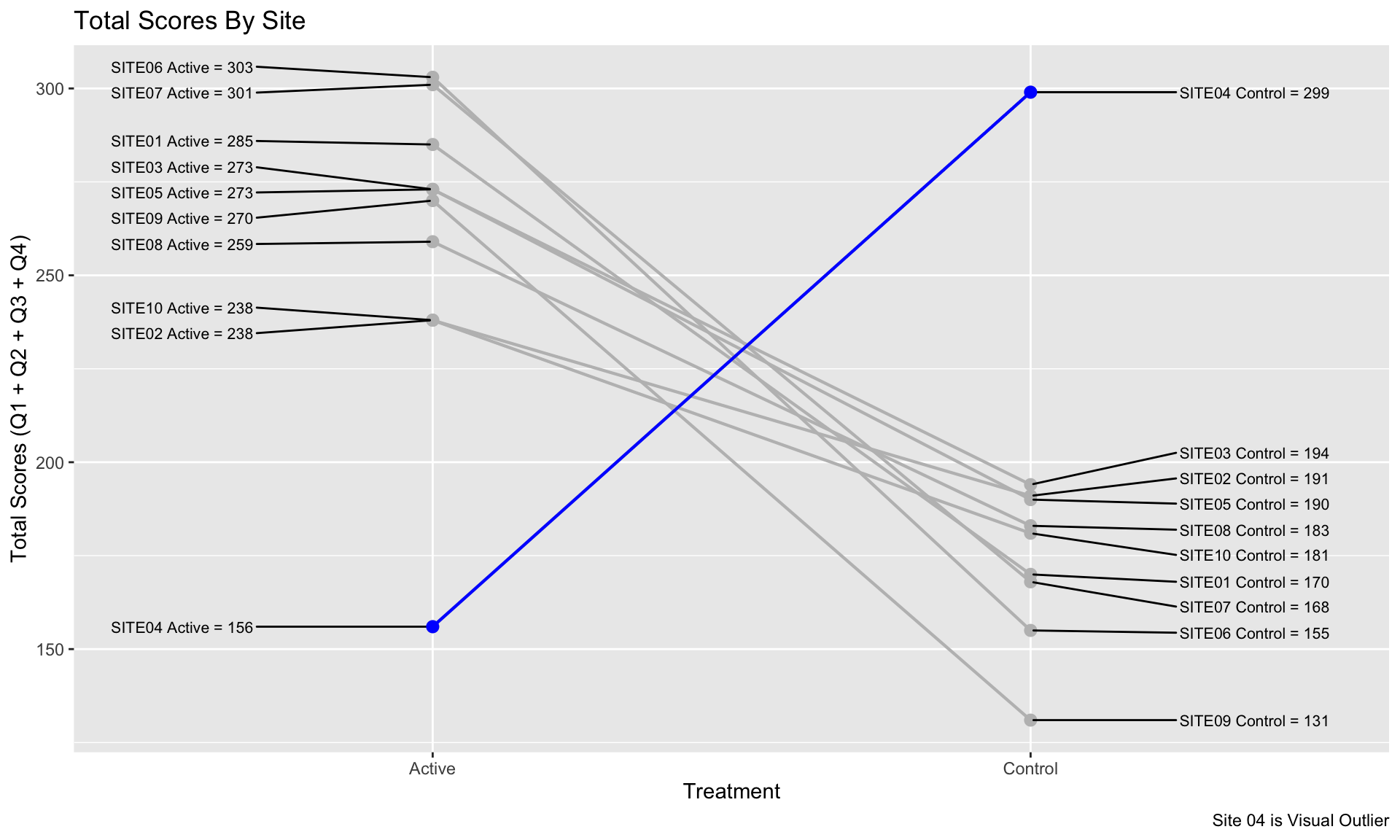

Leverage visual analytics to identify data issues. Consider 4 questions on a Likert scale. 5 rating levels (Strongly disagree, Disagree, Neutral, Agree, Strongly Agree) coded as 1, 2, 3, 4 and 5, respectively. The higher the number the better. 400 Subjects (200 active, 200 control).

We can clearly see that Site 4 is a visual outlier. The Active data for Site 4 looks like it belongs with the Control data from the other sites and likewise the Contorl data for Site 4 looks like it belo9ngs with the Active data from the other sites. Color and a useful title are used to highlight the difference.

PROdata.vert <- read.csv("./01_Datasets/PROdata.csv")

PROdata.slopes <- PROdata.vert %>%

mutate(total=Q1+Q2+Q3+Q4) %>%

group_by(SITE, TRT) %>%

summarise(total.score = sum(total, na.rm = TRUE)) %>%

ungroup()

# apply a plot to a data set where this works

# library(ggrepel)

p <- ggplot(PROdata.slopes, aes(

x = TRT,

y = total.score,

group = SITE

)) +

geom_line(

size = 0.75,

color = "grey"

) +

geom_point(

size = 2.5,

#color = unhcr_pal(n = 1, "pal_blue")

color = "grey"

) +

labs(

title = "Total Scores By Site",

caption = "Site 04 is Visual Outlier"

) +

geom_text_repel(

data = PROdata.slopes |> filter(TRT=="Active"),

aes(label = paste(SITE, TRT,"=", total.score)),

size = 8 / .pt,

hjust = 1,

direction = "y",

nudge_x = -0.3,

) +

geom_text_repel(

data = PROdata.slopes |> filter(TRT=="Control"),

aes(label = paste(SITE, TRT,"=", total.score)),

size = 8 / .pt,

hjust = 1,

direction = "y",

nudge_x = 0.5,

) +

# Make Site 04 appear in blue

geom_line(

data=PROdata.slopes |> filter(SITE=="SITE04"),

size = 0.75,

color = "blue"

) +

geom_point(

data=PROdata.slopes |> filter(SITE=="SITE04"),

size = 2.5,

#color = unhcr_pal(n = 1, "pal_blue")

color = "blue"

) +

xlab("Treatment") + ylab("Total Scores (Q1 + Q2 + Q3 + Q4)")

p

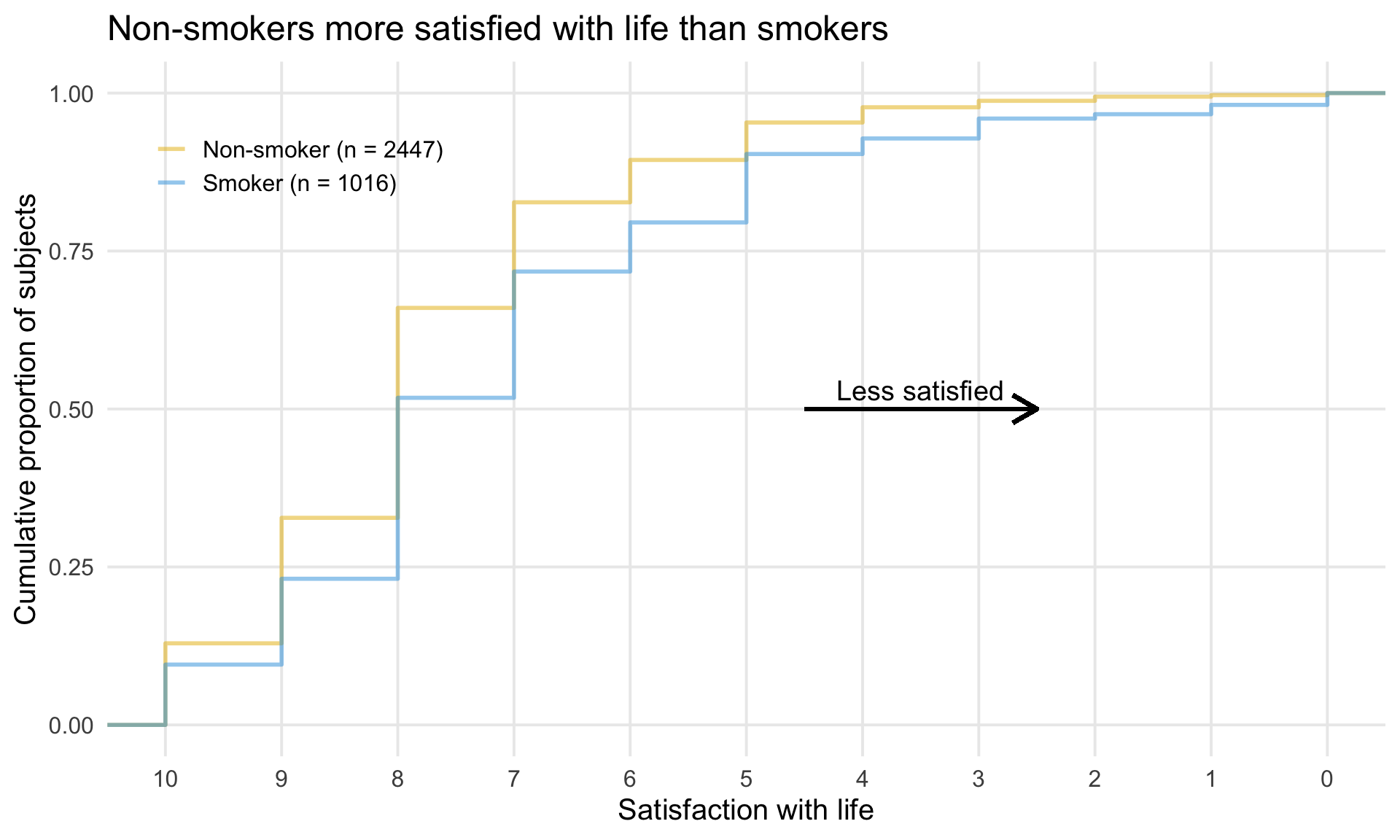

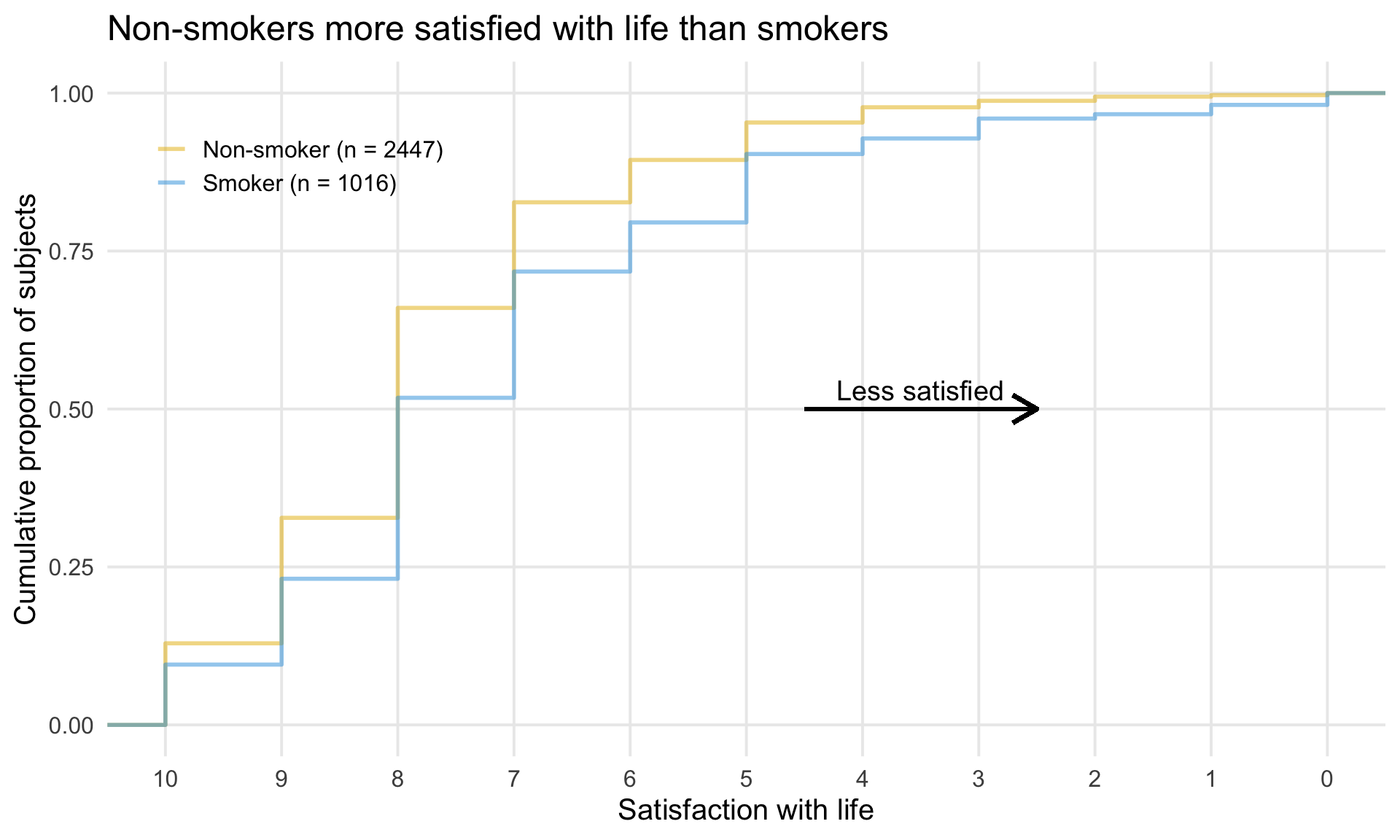

# ggsave(plot=p, ofile("slopes.png"))3.4 Cumulative distribution plot

3.5 Exploring Uncertainty

This graph is formed in two panels, with the left panel showing the Cumulative Distribution Function (CDF) plots of percentage change from baseline in score, grouped by the anchor measure. A measure of variability has been added to the plot, derived using bootstrapping methods. The right panel is showing 95% CIs and 95% Prediction Intervals of scores for each category.

CDF plots used for comparing distributions are sometimes superimposed, so this approach may have been useful. However the confidence envelopes couldn’t then be shown simultaneously, so there’d need to be a way of interactively selecting one category at a time. Use of percentage change was discussed – absolute changes are often preferable in a scenario where clinical meaningful changes are being assessed.

# {r,echo = T,message = FALSE, error = FALSE, warning = FALSE}

# Data and packages ----

# library(readr)

# library(ggplot2)

# library(dplyr)

# library(cowplot)

# library(ggpubr)

# urlfile <- "https://raw.githubusercontent.com/VIS-SIG/Wonderful-Wednesdays/master/data/2021/2021-10-13/WWW_example_minimal_clinical_improvement.csv"

df <- read_csv("./01_Datasets/WWW_example_minimal_clinical_improvement.csv") %>%

mutate(change=`total score follow up`-`total score baseline`,

change_pct=(`total score follow up`-`total score baseline`)*100/`total score baseline`) %>%

rename(CGI_I=`CGI-I`)

# Bootstrapping ECDFs ----

set.seed(1234)

nboot <- 100

boot <- NULL

for (i in 1:nboot){

d <- df[sample(seq_len(nrow(df)), nrow(df), replace=T), ]

d$sim <- i

boot <- rbind(boot, d)

}

res1 <- boot %>%

group_by(CGI_I, sim) %>%

summarise(change_pct_median=mean(change_pct)) %>%

ungroup() %>%

group_by(CGI_I) %>%

summarise(boot_median = median(change_pct_median),

boot_lo95ci = quantile(change_pct_median, 0.025),

boot_hi95ci = quantile(change_pct_median, 0.975))

res1

res2 <- boot %>%

group_by(CGI_I) %>%

summarise(boot_lo95pi = quantile(change_pct, 0.025),

boot_hi95pi = quantile(change_pct, 0.975))

res2

res3 <- df %>%

group_by(CGI_I) %>%

summarise(n = n(),

mean = mean(change_pct),

sd = sd(change_pct),

lo95pi = mean - 1.96*sd,

hi95pi = mean + 1.96*sd,

lo95ci = mean - 1.96*(sd/sqrt(n)),

hi95ci = mean + 1.96*sd/sqrt(n))

res3

chg <- res1 %>%

left_join(res2) %>%

left_join(res3)

chg

no_chg <- res1 %>%

left_join(res3) %>%

filter(CGI_I==4) %>%

select(lo95ci, hi95ci, lo95pi, hi95pi) %>%

right_join(tibble(id=1:7), by = character())

no_chg

# Final plot ----

## CDFs

p1 <- ggplot() +

stat_ecdf(aes(x=change_pct, group=as.factor(sim)), data = boot, colour = alpha("deepskyblue4", 0.2)) +

stat_ecdf(aes(x=change_pct), data = df, size = 1.25, color = "orange2") +

scale_x_continuous(limits = c(-80, 80)) +

facet_grid(rows = vars(CGI_I))+

labs(title = "Cumulative Distribution Functions of Score % Changes",

subtitle = "Empirical CDF (Orange) with 1000 Bootstrap Samples (Blue)",

y = "Cumulative Distribution Function",

x = "Change %") +

theme_minimal() +

theme(strip.text = element_blank(),

panel.grid.minor.y = element_blank(),

panel.grid.major.y = element_blank(),

plot.title = element_text(size = 18, face = "bold"),

plot.subtitle = element_text(size = 12))

p1

labels_cgii <- as_labeller(c(`1` = "1\nVery Much\nImproved",

`2` = "2\nMuch\nImproved",

`3` = "3\nMinimally\nImproved",

`4` = "4\nNo Change",

`5` = "5\nMinimally\nWorse",

`6` = "6\nMuch\nWorse",

`7` = "7\nVery Much\nWorse"))

## Forest plot

p2 <- ggplot(data=chg) +

geom_rect(data=no_chg, aes(ymin=0, ymax=1,

xmin=lo95ci, xmax=hi95ci),

fill="orange2", alpha=0.025) +

geom_point(aes(x = boot_median, y=0.6),

size = 4, color = "deepskyblue4") +

geom_errorbarh(aes(xmin = boot_lo95ci, xmax = boot_hi95ci, y = 0.6),

height = 0.1, size=1.25, color = "deepskyblue4") +

geom_errorbarh(aes(xmin = boot_lo95pi, xmax = boot_hi95pi, y = 0.6),

height = 0.0, size=0.75, color = "deepskyblue4", linetype="dotted") +

geom_point(aes(x = mean, y = 0.4),

size = 4, color = "orange2") +

geom_errorbarh(aes(xmin = lo95ci, xmax = hi95ci, y = 0.4),

height = 0.1, size=1.25, color = "orange2") +

geom_errorbarh(aes(xmin = lo95pi, xmax = hi95pi, y = 0.4),

height = 0.0, size=0.75, color = "orange2", linetype="dotted") +

facet_grid(rows = vars(CGI_I), labeller = labels_cgii)+

ylim(0, 1) +

theme_minimal() +

labs(title = "Estimates, 95% Confidence Intervals and 95% Prediction Intervals",

subtitle = "Parametric (Orange) and with 1000 Bootstrap Samples (Blue)",

x = "Change %",

y = "") +

theme(strip.text.y = element_text(size = 14, angle = 0),

axis.text.y = element_blank(),

panel.grid.major.y = element_blank(),

panel.grid.minor.y = element_blank(),

plot.title = element_text(size = 18, face = "bold"),

plot.subtitle = element_text(size = 12)) +

coord_cartesian(xlim = c(-50, 50), ylim=c(0,1), expand=F, clip = "on")

p2

## Legend

dummy1 <- chg[chg$CGI_I==4,]

dummy2 <- chg[chg$CGI_I==5,]

tl<-0.015 # tip.length

l1 <- ggplot(data=dummy2) +

labs(title = "Legend") +

geom_rect(data=dummy1, aes(ymin=0, ymax=1, xmin=lo95ci, xmax=hi95ci),

fill="orange2", alpha = 0.15) +

geom_bracket(xmin=dummy1$lo95ci, xmax=dummy1$hi95ci,

y.position = 0,

label = "95% CI - No Change", tip.length = c(-tl, -tl)) +

geom_point(aes(x = boot_median, y=0.75),

size = 4, color = "deepskyblue4") +

geom_errorbarh(aes(xmin = boot_lo95ci, xmax = boot_hi95ci, y = 0.75),

height = 0.1, size=1.25, color = "deepskyblue4") +

geom_errorbarh(aes(xmin = boot_lo95pi, xmax = boot_hi95pi, y = 0.75),

height = 0.0, size=0.75, color = "deepskyblue4", linetype="dotted") +

geom_bracket(xmin=dummy2$lo95ci, xmax=dummy2$hi95ci, y.position = 0.35,

label = "95% Confidence Interval", tip.length = c(tl, tl)) +

geom_bracket(xmin=dummy2$mean, xmax=dummy2$mean, y.position = 0.3,

label = "Estimate", tip.length = c(tl, tl)) +

geom_bracket(xmin=dummy2$lo95pi, xmax=dummy2$hi95pi, y.position = 0.15, vjust = 4,

label = "95% Prediction Interval", tip.length = c(-tl, -tl)) +

geom_point(aes(x = mean, y = 0.25),

size = 4, color = "orange2") +

geom_errorbarh(aes(xmin = lo95ci, xmax = hi95ci, y = 0.25),

height = 0.1, size=1.25, color = "orange2") +

geom_errorbarh(aes(xmin = lo95pi, xmax = hi95pi, y = 0.25),

height = 0.0, size=0.75, color = "orange2", linetype="dotted") +

geom_bracket(xmin=dummy2$boot_lo95ci, xmax=dummy2$boot_hi95ci,

y.position = 0.85,

label = "95% Confidence Interval (Bootstrap)", tip.length = c(tl, tl)) +

geom_bracket(xmin=dummy2$boot_median, xmax=dummy2$boot_median,

y.position = 0.8,

label = "Estimate (Bootstrap)", tip.length = c(tl, tl)) +

geom_bracket(xmin=dummy2$boot_lo95pi, xmax=dummy2$boot_hi95pi,

y.position = 0.65, vjust = 4,

label = "95% Prediction Interval (Bootstrap)", tip.length = c(-tl, -tl)) +

coord_cartesian(xlim = c(-30, 50), ylim=c(0,1)) +

theme_void() +

theme(plot.title = element_text(size = 18, face = "bold"),

plot.subtitle = element_text(size = 12))

l1

pnull <- ggplot() +

coord_cartesian(xlim = c(-30, 50), ylim=c(0,1)) +

theme_void()

legend <- plot_grid(l1, pnull, ncol=1,

rel_heights = c(0.5, 0.5))

## Title

title <- ggdraw() +

draw_label(

"Exploring uncertainty of minimally clinically relevant changes",

fontface = 'bold', size = 36,

x = 0,

hjust = 0

) +

theme(

plot.margin = margin(0, 0, 0, 7)

)

title

plot_row <- plot_grid(p1, p2, pnull , legend, nrow=1, rel_widths = c(3, 3, 0.25, 1.25))

g2 <- plot_grid(title, plot_row, ncol = 1, rel_heights = c(0.1, 1))

g2

f<-1.7

# ggsave(file="plot_par_nonpar.pdf", g2, width = 16*f, height = 9*f)3.6 Spaghetti and Distribution Plot

The upper part of the graph is showing the distribution of the score values within each CGI-I category, using density plots of scores on an absolute scale. Distributions are shown separately for baseline and follow-up visits within each category, rather than change from baseline. Individual patient and mean changes are shown using slope plots.

The lower part of the graph shows the distribution of change from baseline values, with a measure of variability being derived using bootstrapping methods. Colour has been used to show paired mean differences outside a +/- 10 unit range.

In general, the graph contains a lot of information and takes some time to understand. However the graph tells a clear story in terms of the distribution of score across different categories, and shows clearly that the Minimally Improved category does not seem to be well differentiated from No Change category.

3.7 Distribution Plot by Category I

This graph includes stacked density plots, sometimes known as a ridgeline plot. This graph type is useful where there are approximately 4-8 categories with a natural ordering, which is the case in this example. The graph is also showing patient level data as transparent dots on the X axis, and reference lines have been added. There is a lot of overplotting of the dots, so the opacity of dots is representing the data density at each value on the X-axis.

WW_data <- read.csv("./01_Datasets/WWW_example_minimal_clinical_improvement.csv")

# library(tidyverse)

# library(ggplot2)

# library(dplyr)

# library(ggridges)

# library(gt)

# library(psych)

#####

#1 - calculate SEM

#The Standard Error of Measurement (SEM) quantifies

#the precision of the individual measurements

#and gives an indication of the absolute reliability

#2 - calculate SDC

#The SEM can be used to calculate the Minimal Detectable Change (MDC)

#which is the minimal amount of change that a measurement

#must show to be greater than the within subject variability

#and measurement error, also referred to as the sensitivity to change

pre_post <- WW_data[,c(1:2)]

sd_baseline <- sd(WW_data$total.score.baseline, na.rm = T)

icc <- ICC(pre_post)#0.032 - reliability for SEM

sem_baseline <- psychometric::SE.Meas(sd_baseline, 0.032)

#Smallest detectable change(SDC)/Minimal detectable change (MDC)

#SEM*1.92*sqrt(2)

sdc <- sem_baseline*1.96*sqrt(2)

sdc_comp <- sdc*-1

WW_data <- rename(WW_data, baseline = total.score.baseline, followup = total.score.follow.up, CGI = CGI.I)

WW_data <- within(WW_data, CHG <- followup-baseline)

WW_data <- within(WW_data, {

CGI_cat <- NA

CGI_cat[CGI==1] <- "Very much improved"

CGI_cat[CGI==2] <- "Much improved"

CGI_cat[CGI==3] <- "Minimally improved"

CGI_cat[CGI==4] <- "No change"

CGI_cat[CGI==5] <- "Minimally worse"

CGI_cat[CGI==6] <- "Much worse"

CGI_cat[CGI==7] <- "Very much worse"

})

WW_data <- WW_data <- WW_data %>%

filter(!is.na(CGI_cat))

WW_data$CGI_cat <- factor(WW_data$CGI_cat, levels = c("Very much improved",

"Much improved",

"Minimally improved",

"No change",

"Minimally worse",

"Much worse",

"Very much worse"

))

gg <- ggplot(WW_data, aes(x = CHG,

y = CGI_cat)) +

stat_density_ridges(

geom = "density_ridges_gradient",

quantile_lines = TRUE,

quantiles = 2, scale = 1, rel_min_height = 0.01,

jittered_points = TRUE) +

scale_x_continuous(breaks=seq(-40,40,10),

limits = c(-40,40)) +

ylab("CGI-I Response") + xlab("Change in PRO Score") +

labs(title = "Minimally Improved & Minimally Worse CGI-I Categories\nAre Not Differentiated From No change",

subtitle = "Smoothed Distributions with Individual Patients (dots) and Means (|) \nReference Lines Display Smallest Detectable Change of PRO Score",

caption = "Smallest Detectable Change defined by Standard Error of Measurement of PRO Score at Baseline") +

theme(

plot.title = element_text(color = "black", size = 15),

plot.subtitle = element_text(color = "black", size = 10),

plot.caption = element_text(color = "black", size = 8)

)

#theme_ridges(font_size = 12)

#Build ggplot and extract data

d <- ggplot_build(gg)$data[[1]]

# Add geom_ribbon for shaded area

rcc <- gg +

geom_ribbon(

data = transform(subset(d, x >= sdc), CGI_cat = group),

aes(x, ymin = ymin, ymax = ymax, group = group),

fill = "red",

alpha = 0.2,

show.legend = TRUE) +

geom_ribbon(

data = transform(subset(d, x <= sdc_comp), CGI_cat = group),

aes(x, ymin = ymin, ymax = ymax, group = group),

fill = "green",

alpha = 0.2,

show.legend = TRUE) +

geom_vline(xintercept =sdc, linetype="dashed") +

geom_vline(xintercept =sdc_comp, linetype="dashed")+

annotate("segment", x = -15, xend = -35, y = 0.7, yend = 0.7, colour = "black", size=0.5, arrow=arrow(length = unit(0.03, "npc"))) +

annotate("segment", x = 15, xend = 35, y = 0.7, yend = 0.7, colour = "black", size=0.5, arrow=arrow(length = unit(0.03, "npc"))) +

geom_text(aes(x = -30, y = 0.45, label = "Improvement"),

hjust = 0,

vjust = 0,

colour = "black",

size = 2.5) +

geom_text(aes(x = 20, y = 0.45, label = "Deterioration"),

hjust = 0,

vjust = 0,

colour = "black",

size = 2.5) +

ylab("CGI-I Response") + xlab("Change in PRO Score")

rcc

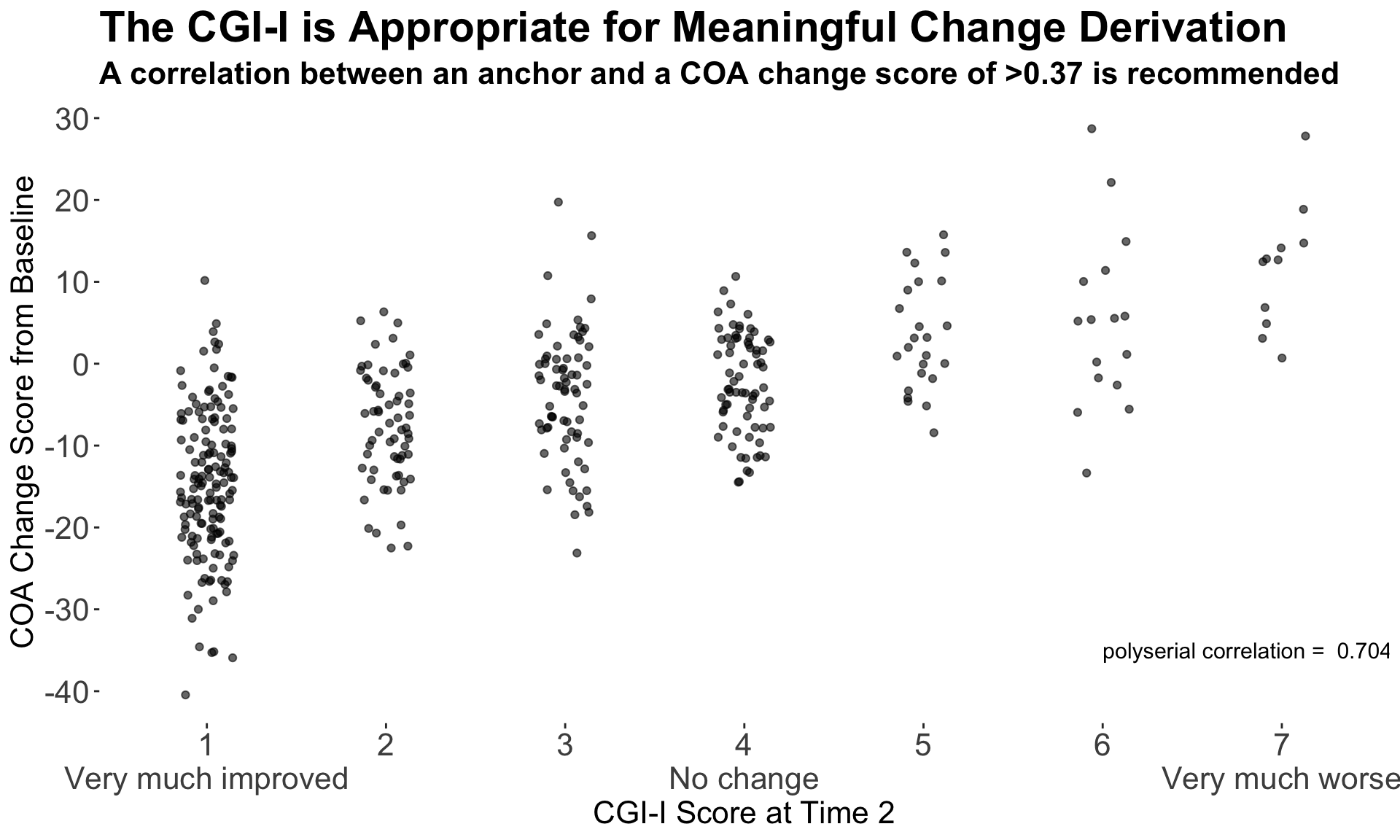

# ggsave("reliable_clinical_change_plot_red_green_v0_2.png", plot = rcc, device = png)3.8 Dot plot

This graph used a different approach, showing only the patient-level data within each category as a scatter plot, with added jittering. The trend between categories is clear, and the number of patients can be compared between groups visually. It was noted that some people find it hard to compare distributions from scatter plots, so a hybrid of scatter plot and a elements showing the distribution, e.g. boxplot or density plot, may have been useful. However the author of the graph mentioned that this graph was intended to be used at the first step of an assessment, to answer the question “is CGI a good enough measure to investigate the meaningful change threshold”

# library(tidyverse)

# library(ggridges)

# library(polycor)

# library(grid)

# Clear environment

# rm(list=ls())

# Colour scheme

Turquoise100 <- "#00a3e0"

Turquoise75 <- "#40bae8"

Turquoise50 <- "#7fd1ef"

Turquoise25 <- "#bfe8f7"

Blue100 <- "#005587"

Blue50 <- "#7FAAC3"

Green100 <- "#43b02a"

Green100a <- rgb(67, 176, 42, alpha = 0.5 * 255, maxColorValue = 255)

Green50 <- "#a1d794"

Purple100 <-"#830065"

Purple100a <- rgb(131, 0, 101, alpha = 0.5 * 255, maxColorValue = 255)

Purple50 <- "#c17fb2"

dat <- read.csv("./01_Datasets/WWW_example_minimal_clinical_improvement.csv")

# glimpse(dat)

dat$USUBJID <- c(1:nrow(dat)) #MAKE A NEW SUBJECT ID COLUMN

dat <- dat %>%

rename("Time1" = `total.score.baseline`, "Time2" = `total.score.follow.up`, "CGI" =`CGI.I`) %>% #RENAME COLUMNS

mutate(CHANGE = Time2 - Time1) # MAKE CHANGE SCORES. NEGATIVE SCORE IS IMPROVEMENT

dat$CGI <- as.factor(dat$CGI)

corr <- round(polyserial(dat$CHANGE, dat$CGI),3)

#CREATE A PLOT SHOWING TH CORRELATION BETWEEN THE ANCHOR AND PRO MEASURE

p <- ggplot(dat, aes(x=CGI, y=CHANGE)) +

geom_jitter(width = 0.15, height = 0.5,alpha = 0.6) +

ggtitle("The CGI-I is Appropriate for Meaningful Change Derivation", subtitle = "A correlation between an anchor and a COA change score of >0.37 is recommended")+

labs(x="CGI-I Score at Time 2", y = "COA Change Score from Baseline")+

scale_y_continuous(breaks=-40:+40*10) + # SET TICK EVERY 10

scale_x_discrete(labels=c("1\nVery much improved", "2", "3","4\nNo change", "5", "6","7\nVery much worse")) +

theme(panel.background = element_blank(), axis.line = element_blank(),

axis.text=element_text(size=16),

axis.title=element_text(size=16),

legend.text=element_text(size=16),

legend.title=element_text(size=16),

plot.title = element_text(size=22, face="bold"),

plot.subtitle = element_text(size=16, face="bold")) +

annotate("text", x=6, y=-35, label=paste("polyserial correlation = ", corr), size=4, hjust=0)

p

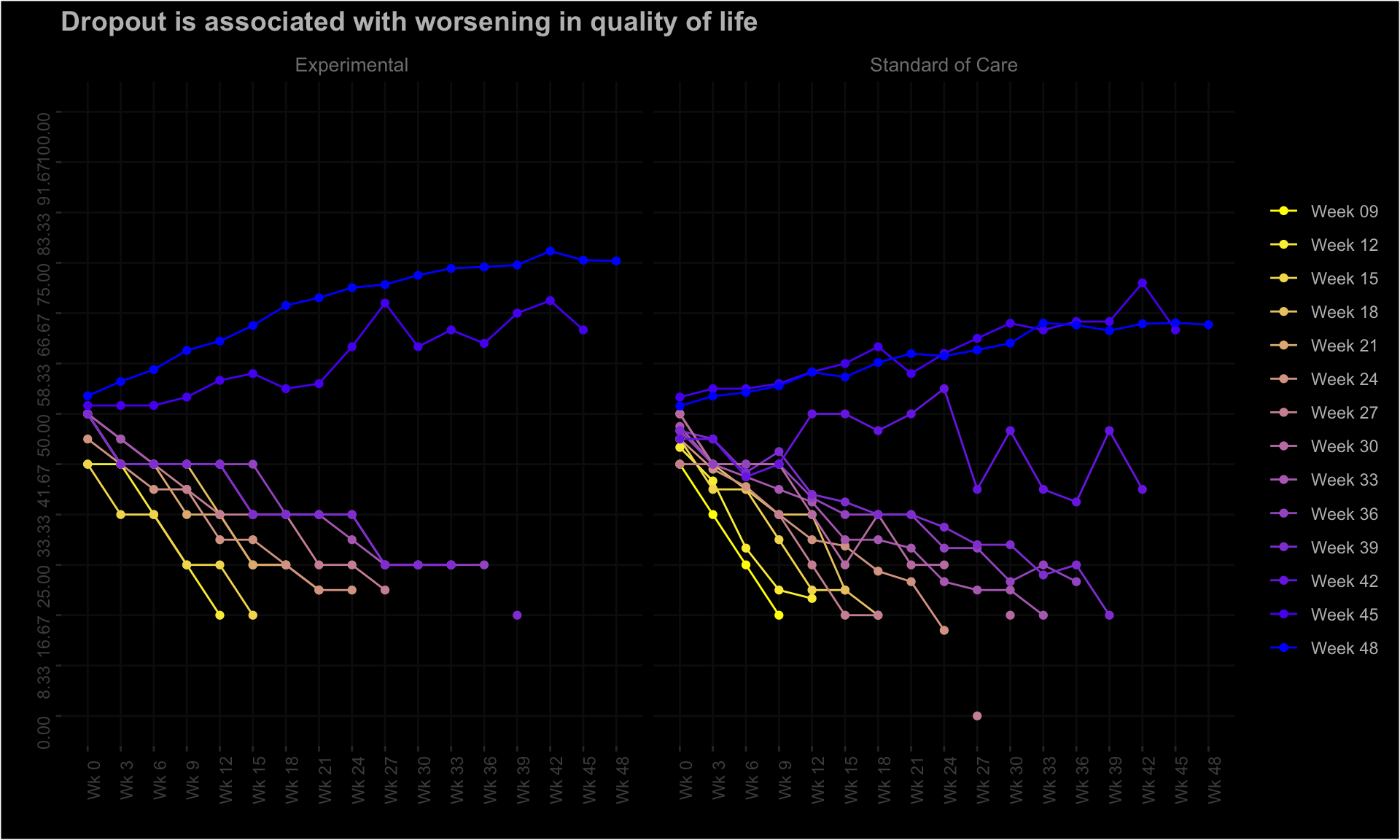

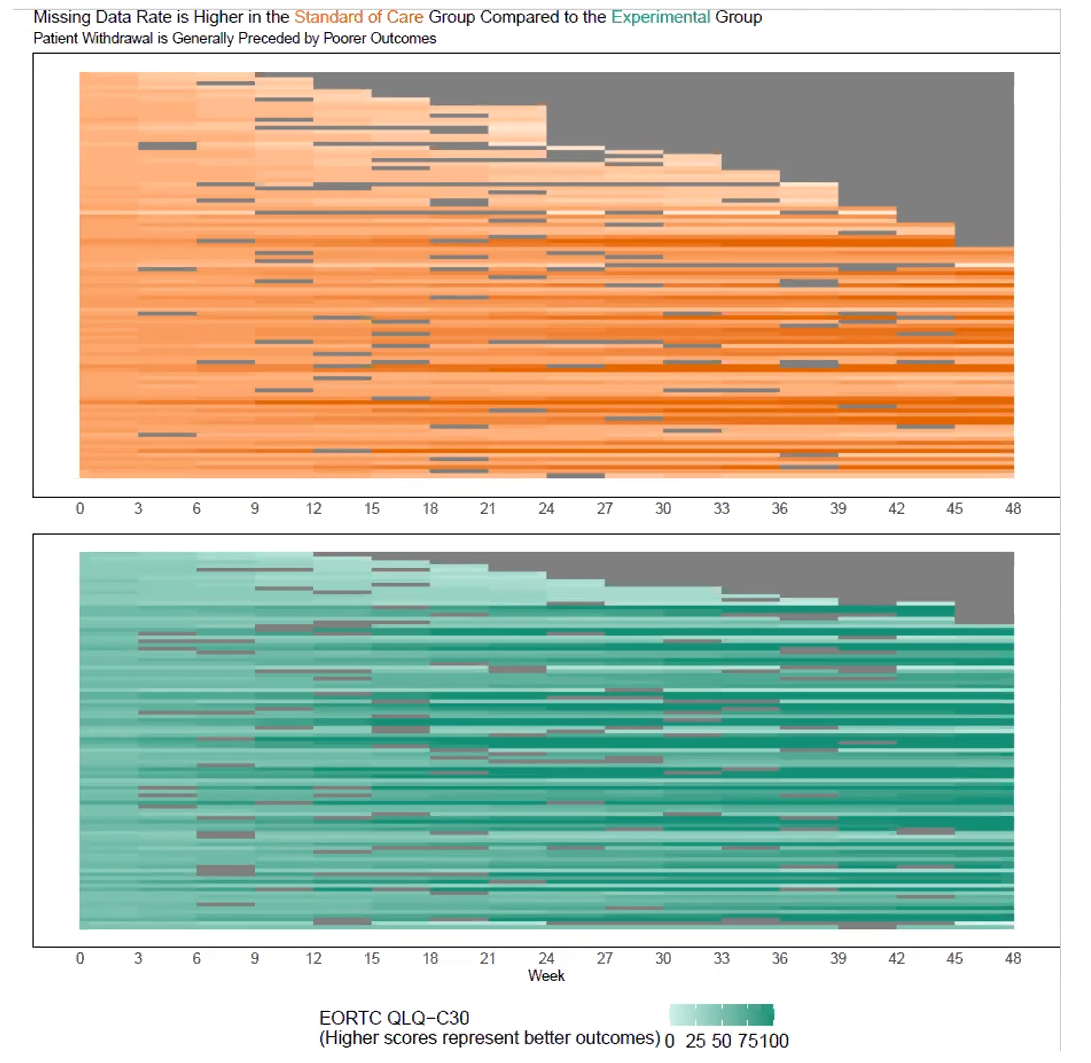

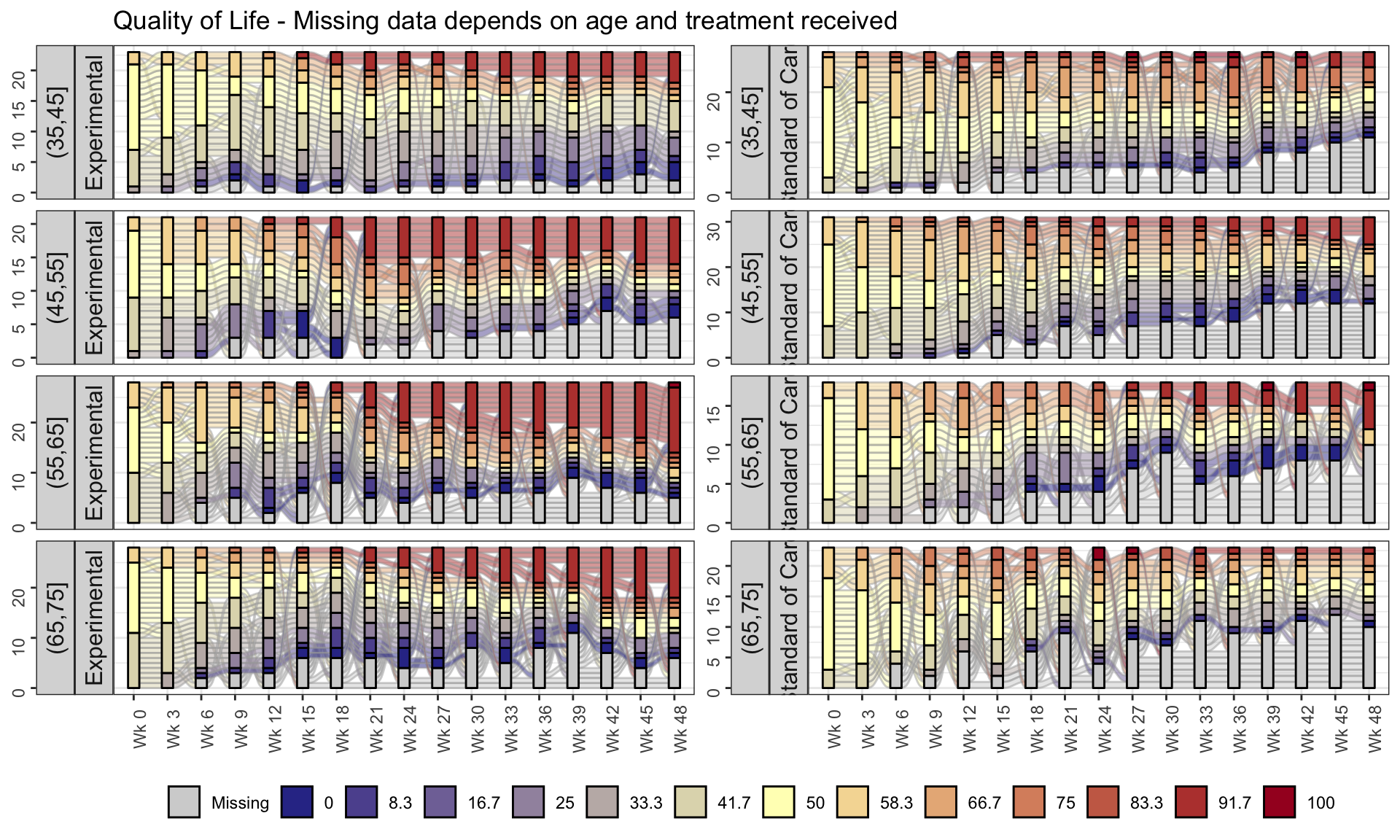

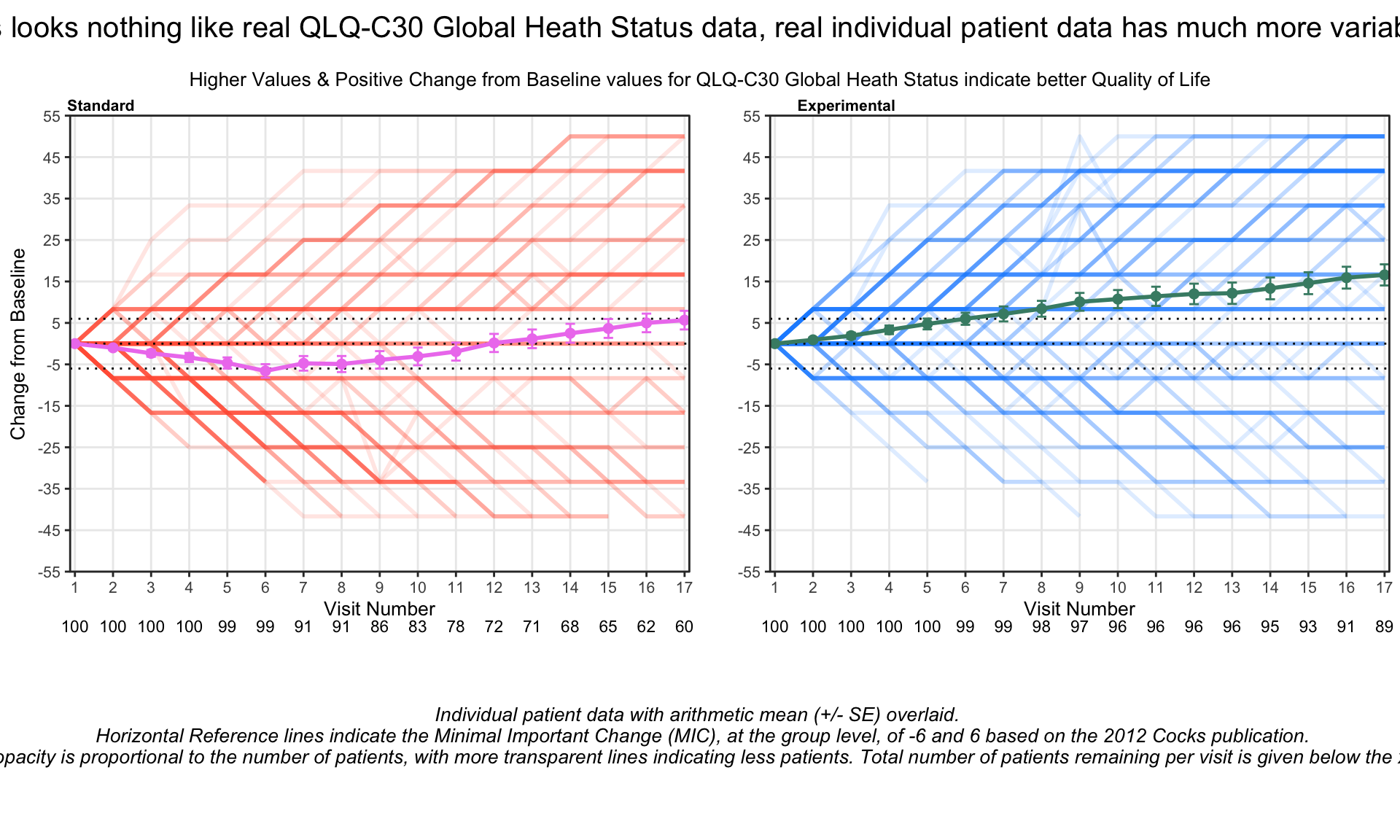

4 EORTC QLQ-C30

The EORTC QLQ-C30 is a 30-item questionnaire that has been designed for use in a wide range of cancer patient populations and is a reliable and valid measure of the quality of life in cancer patients. It includes a number of different scales, but this challenge is focussed on the global health and quality of life scale (QL).

4.1 Line graphs

# library(dplyr)

# library(tidyr)

# library(ggplot2)

# library(forcats)

# library(scales)

d0 <- read.csv2("./01_Datasets/ww eortc qlq-c30 missing.csv", sep=",") %>%

as_tibble()

# d0

d1 <- d0 %>%

pivot_longer(cols=starts_with("WEEK"), names_to = "AVISIT", values_to = "AVAL") %>%

mutate(AVAL=as.numeric(AVAL)) %>%

select(USUBJID, ARM, LASTVIS, AGE:AVAL)

# d1

d2 <- d1 %>%

group_by(ARM, LASTVIS, AVISIT) %>%

dplyr::summarize(AVAL = mean(AVAL, na.rm=TRUE)) %>%

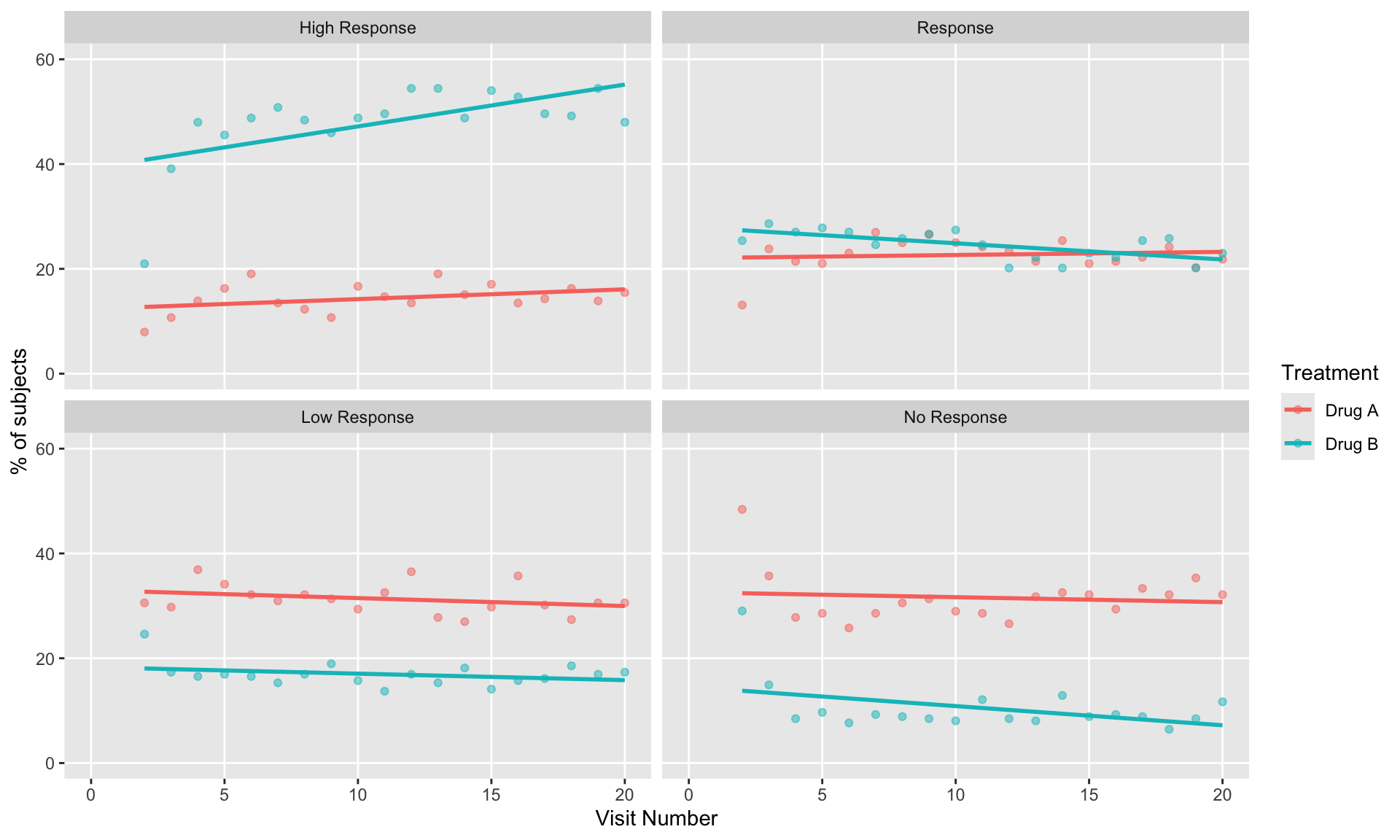

mutate(LASTVISC=as.factor(paste("Week", sprintf("%02.f", LASTVIS))),